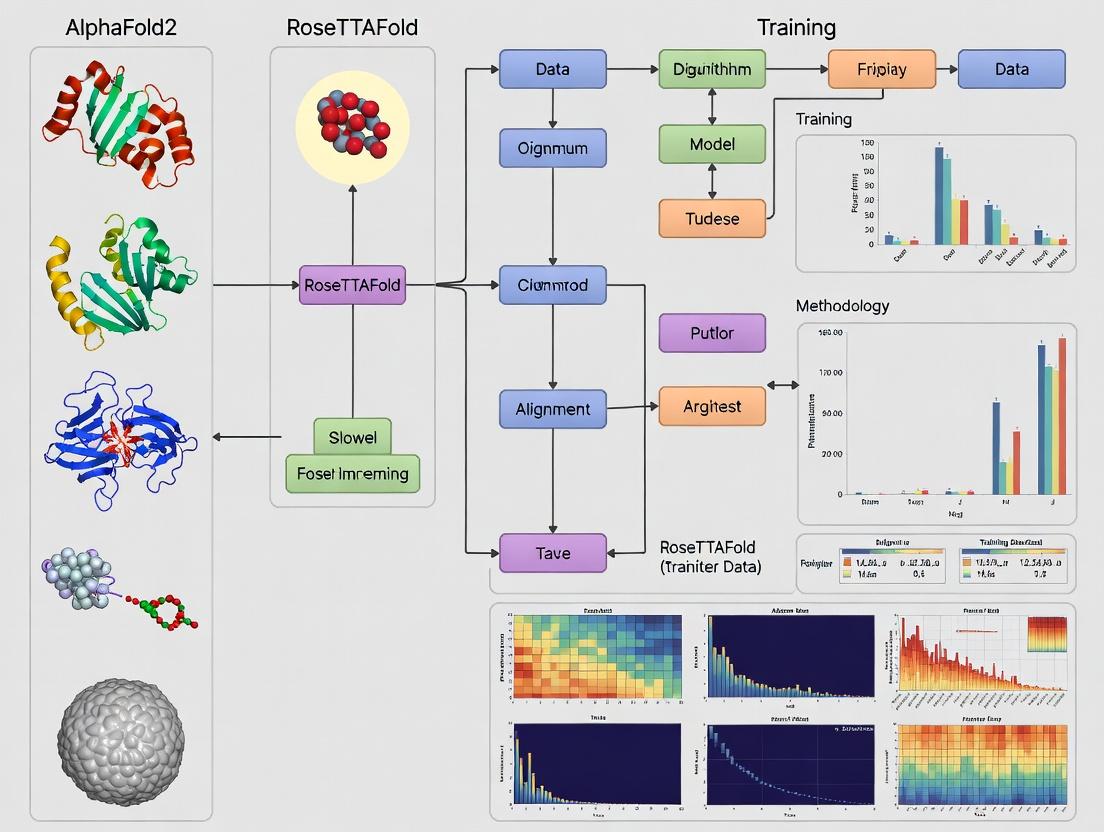

Decoding Protein Folding: A Deep Dive into AlphaFold2 and RoseTTAFold Training Data & AI Methodology

This article provides a comprehensive technical analysis for researchers and drug development professionals on the foundational data and advanced methodologies behind AlphaFold2 and RoseTTAFold.

Abstract

This article provides a comprehensive technical analysis for researchers and drug development professionals on the foundational data and advanced methodologies behind AlphaFold2 and RoseTTAFold. We explore the core architectural innovations, training datasets, and algorithmic principles that enable unprecedented accuracy in protein structure prediction. The content covers practical applications in drug discovery, common pitfalls in model interpretation, and a comparative validation against experimental techniques. Finally, we assess the current limitations and future trajectories of these transformative AI tools in structural biology and therapeutic design.

The Data Revolution: What Fuels AlphaFold2 and RoseTTAFold's Predictions?

Within the groundbreaking methodologies of AlphaFold2 and RoseTTAFold, core training datasets form the essential substrate. These systems do not learn protein folding ab initio; rather, they learn to predict the three-dimensional structure of a protein sequence by leveraging patterns distilled from massive, evolutionarily informed datasets. This whitepaper deconstructs the three pillars of these datasets: the Protein Data Bank (PDB) as the source of structural truths, Multiple Sequence Alignments (MSAs) as the carriers of evolutionary information, and the derived statistical potentials that link sequence to structure. The performance leap in CASP14 and beyond is directly attributable to the sophisticated integration of these components during training.

The Protein Data Bank (PDB): The Structural Ground Truth

The PDB is the canonical repository of experimentally determined 3D structures of proteins, nucleic acids, and complex assemblies. For deep learning models, it serves as the labeled training set, where the input is the amino acid sequence and the output is the atomic coordinates.

PDB Curation and Preprocessing for AlphaFold2/RoseTTAFold

Models employ stringent filtering to ensure data quality and prevent data leakage between training and evaluation sets (e.g., CASP targets). A critical step is the removal of sequences with high similarity to benchmark test sets.

Table 1: Representative PDB Dataset Filtering Statistics (Post-Processing)

| Filtering Criterion | AlphaFold2 (Approx.) | RoseTTAFold (Approx.) | Purpose |

|---|---|---|---|

| Initial PDB Entries | ~170,000 (as of 2018) | ~150,000 | Raw data pool. |

| Resolution Cutoff | ≤ 3.0 Å (most chains) | ≤ 3.2 Å | Ensure structural accuracy. |

| Sequence Identity Clustering | ~90% non-redundancy | 90-95% non-redundancy | Reduce redundancy, expand coverage. |

| Sequence Length Range | ~20 - 2,700 residues | ~20 - 1,500 residues | Manage computational constraints. |

| Final Curated Chains | ~29,000 (UniProt90 set) | ~36,000 | High-quality, non-redundant training set. |
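The filtering criteria in the table can be read as a simple predicate over chain records. The following is a minimal sketch, assuming hypothetical records with `resolution` and `length` fields and using the approximate AlphaFold2 column values as defaults; it is not the curation code of either project.

```python
# Hypothetical chain records; in practice these fields would be parsed from mmCIF files.
chains = [
    {"id": "1abc_A", "resolution": 1.8, "length": 250},
    {"id": "2xyz_B", "resolution": 3.5, "length": 400},  # fails the resolution cutoff
    {"id": "3pep_C", "resolution": 2.0, "length": 12},   # shorter than the minimum length
]

def passes_filters(chain, max_resolution=3.0, min_length=20, max_length=2700):
    """Return True if a chain meets the resolution and length criteria."""
    return (chain["resolution"] <= max_resolution
            and min_length <= chain["length"] <= max_length)

kept = [c["id"] for c in chains if passes_filters(c)]
print(kept)  # ['1abc_A']
```

Redundancy clustering and benchmark de-duplication would follow this step and require a sequence clustering tool, which is outside the scope of this sketch.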

Experimental Protocol: Structure Determination Methods

The PDB contains structures solved primarily via:

- X-ray Crystallography: The most prevalent method. Proteins are crystallized, and the diffraction pattern of X-rays is used to solve the electron density map.

- Protocol: Protein purification → crystallization → X-ray exposure → data collection → phase solving (e.g., molecular replacement) → model building and refinement.

- NMR Spectroscopy: For smaller, soluble proteins in solution.

- Protocol: Isotopic labeling (¹⁵N, ¹³C) → multi-dimensional NMR experiments (NOESY, TOCSY) → assignment of peaks → distance restraint calculation → structure calculation via simulated annealing.

- Cryo-Electron Microscopy (Cryo-EM): For large complexes and membrane proteins.

- Protocol: Sample vitrification → grid preparation → automated imaging → particle picking → 2D class averaging → 3D reconstruction → model building.

Diagram Title: PDB as Training Data Pipeline

Multiple Sequence Alignments (MSAs) and Evolutionary Coupling

MSAs are the primary mechanism for injecting evolutionary information. By aligning homologous sequences from diverse organisms, models infer evolutionary constraints, revealing which residue pairs co-vary across evolution, implying spatial proximity.

MSA Construction Protocol

- Sequence Search: The query sequence is searched against large sequence databases (UniRef90, UniRef30, BFD) using highly sensitive tools like HHblits or JackHMMER.

- Alignment Building: Hits are aligned to the query profile, forming a raw MSA.

- Filtering and Weighting: Sequences are clustered by high identity (e.g., 90%) to reduce bias from over-represented species. Sequences are weighted to correct for phylogenetic bias.

- Contextual Features: Additional profiles like Position-Specific Scoring Matrices (PSSMs) and deletion probabilities are computed.
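The weighting step can be illustrated with a common scheme in which each sequence is down-weighted by the number of sequences that are at least as similar to it as the clustering threshold. This is a sketch under that assumption; the function names and the toy alignment are hypothetical, and production pipelines use optimized implementations.

```python
def fractional_identity(a, b):
    """Fraction of aligned columns where two equal-length sequences agree."""
    return sum(1 for x, y in zip(a, b) if x == y) / len(a)

def sequence_weights(msa, threshold=0.9):
    """Weight each sequence by 1 / (number of sequences with identity >= threshold)."""
    weights = []
    for s in msa:
        n_similar = sum(1 for t in msa if fractional_identity(s, t) >= threshold)
        weights.append(1.0 / n_similar)  # n_similar >= 1 because s matches itself
    return weights

# Toy alignment: three near-identical sequences and one divergent homolog.
msa = ["ACDEFGHIKL", "ACDEFGHIKL", "ACDEFGHIKV", "MNPQRSTVWY"]
weights = sequence_weights(msa)
n_eff = sum(weights)  # effective number of sequences, here 2.0
print(weights, n_eff)
```

The three similar sequences share one unit of weight, so the alignment counts as two effective sequences rather than four.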

Table 2: Key MSA Database Sources and Parameters

| Database | Content & Size | Typical Use | Tool |

|---|---|---|---|

| UniRef90 | Clustered UniProt sequences at 90% identity. ~50 million clusters. | Primary search for close homologs. | JackHMMER |

| UniRef30 / BFD | UniRef30 clusters UniProt sequences at 30% identity; BFD is a clustered metagenomic collection built from roughly 2.2 billion sequences. | Deep homology search for evolutionary signals. | HHblits |

| MGnify | Metagenomic sequences from environmental samples. | Finds distant homologs absent in curated DBs. | Used in expanded searches |

Extracting Evolutionary Couplings

Co-evolution analysis, via methods like Direct Coupling Analysis (DCA), identifies residue pairs (i,j) whose mutations are correlated beyond the independent background. These couplings are strong predictors of contacts in the 3D structure.

Protocol for DCA from MSAs:

- Input: A large, diverse MSA of N sequences and L residues.

- Compute Single & Pair Frequencies: Calculate observed frequencies \( f_i(A) \) and \( f_{ij}(A,B) \) for amino acids A, B at positions i and j, with sequence weighting.

- Infer the Potts Model: Find parameters \( h_i(A) \) and \( e_{ij}(A,B) \) of a statistical model (Potts) that reproduces the observed frequencies.

- Calculate Coupling Scores: The strength of the direct interaction is often summarized by the Frobenius norm of the coupling matrix \( e_{ij}(A,B) \). Top-ranked pairs (i,j) are predicted contacts.
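For reference, the protocol above corresponds to the standard Potts formulation, written here with the same symbols. The partition function \( Z \) and the score symbol \( F_{ij} \) are notation introduced for this sketch.

```latex
% Potts model over an aligned sequence (a_1, ..., a_L)
P(a_1,\dots,a_L) = \frac{1}{Z}\exp\!\Big(\sum_{i=1}^{L} h_i(a_i) + \sum_{1 \le i < j \le L} e_{ij}(a_i,a_j)\Big)

% Fitting condition: model marginals reproduce the weighted MSA frequencies
P_i(A) = f_i(A), \qquad P_{ij}(A,B) = f_{ij}(A,B)

% Coupling score for a residue pair: Frobenius norm of the coupling matrix
F_{ij} = \sqrt{\sum_{A,B} e_{ij}(A,B)^2}
```

Exact inference of \( h_i \) and \( e_{ij} \) is intractable for realistic alignments, which is why practical methods rely on approximations such as mean-field inversion or pseudo-likelihood maximization.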

Diagram Title: MSA Processing for Model Input

Integration in Neural Network Architectures

AlphaFold2 and RoseTTAFold process PDB data and MSAs through specialized modules.

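- AlphaFold2's Evoformer: The core of AlphaFold2's trunk. It maintains an MSA representation and a pair representation. The MSA representation is updated with row-wise gated self-attention (biased by the pair representation) and column-wise gated self-attention; the pair representation is updated from the MSA through an outer product mean and then refined with triangle multiplicative updates and triangle self-attention. This allows information to flow between the MSA (evolutionary history) and the evolving pair-wise distance/geometry predictions, effectively "reasoning" about co-evolution.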

- RoseTTAFold's 3-Track Network: Operates on three tracks: 1D sequence, 2D distance graph, and 3D coordinates. Information is passed between tracks. The 2D track is initialized with MSA-derived features (PSSM, co-evolutionary couplings), linking evolutionary data directly to spatial reasoning.

Diagram Title: Evolutionary Data Integration in AlphaFold2

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools and Databases for Core Dataset Research

| Tool/Resource | Category | Function in Training Data Pipeline |

|---|---|---|

| HH-suite (HHblits) | Sequence Search | Rapid, sensitive homology detection against clustered databases (UniRef30, BFD). Critical for building deep MSAs. |

| HMMER (JackHMMER) | Sequence Search | Iterative profile HMM search for building MSAs from UniRef90. |

| PSIPRED | Secondary Structure Prediction | Provides predicted secondary structure features used as auxiliary inputs in some models. |

| FreeContact/CCMpred | Co-evolution Analysis | Implements DCA and related methods to extract residue-residue contact predictions from MSAs. |

| PDBx/mmCIF Tools | Structure Data Parsing | Libraries for reading and processing the standard PDB archive format (mmCIF). |

| DSSP | Structure Annotation | Calculates secondary structure and solvent accessibility from 3D coordinates for labeling training data. |

| AlphaFold DB & Model Zoo | Pre-trained Models & Data | Provides open-access predicted structures and, in some cases, associated MSAs for the proteome. |

| ColabFold | MSA Generation & Folding | Integrated pipeline combining fast MMseqs2-based MSA generation with AlphaFold2/RoseTTAFold inference. |

The revolution in protein structure prediction, exemplified by AlphaFold2 (AF2) and RoseTTAFold, is fundamentally rooted in their training on expansive protein sequence databases. This whitepaper elucidates the technical architecture of these databases, their role in teaching AI models the evolutionary, physical, and geometric constraints of proteins, and the experimental protocols for validating model predictions. Framed within ongoing thesis research on training data and methodology, we provide a detailed guide for researchers leveraging these tools.

The predictive prowess of deep learning models like AF2 and RoseTTAFold is not an inherent "intuition" but a learned understanding distilled from billions of amino acid relationships captured in multiple sequence alignments (MSAs). This section details the primary databases and their quantitative scale.

Core Sequence and Structure Databases

Table 1: Key Databases for AI Protein Model Training

| Database | Primary Content | Size (Approx.) | Role in Training |

|---|---|---|---|

| UniRef90 (UniProt) | Clustered protein sequences | ~150 million clusters (2023) | Source for generating MSAs, teaching evolutionary constraints. |

| BFD (Big Fantastic Database) | Clustered metagenomic sequences | ~2.2 billion sequences in ~66 million clusters | Expands MSA depth, especially for orphan proteins. |

| PDB (Protein Data Bank) | Experimentally solved structures | ~200,000 entries (2023) | Ground truth for supervised learning of structure. |

| MGnify | Metagenomic protein sequences | ~1.7 billion sequences (2023) | Enhances MSA coverage for diverse protein families. |

Methodological Deep Dive: From Database to Prediction

The training pipeline integrates heterogeneous data into a coherent learning signal. The core workflow involves MSA construction, template identification, and end-to-end model training.

Protocol: Generating a Multiple Sequence Alignment (MSA)

Objective: To extract evolutionary co-variance signals from a query protein sequence.

Reagents & Tools: HMMER, HH-suite, MMseqs2, computing cluster.

Procedure:

- Query Submission: Input target amino acid sequence (e.g., ">Target_A").

- Iterative Search: Use `jackhmmer` (HMMER) or `hhblits` (HH-suite) to search UniRef90 and BFD, respectively.

- Command (example): `jackhmmer -N 5 --incE 0.001 -A target.sto target.fasta uniref90.fasta`

- `-N` caps the number of search iterations (the search stops earlier if it converges), and `--incE` sets the E-value threshold for including a hit in the next iteration's profile.

- Alignment Processing: Filter sequences for minimum coverage (e.g., >50% query length) and maximum pairwise identity (e.g., 90%).

- Output: A filtered, aligned MSA file (Stockholm format, `.sto`) used as primary input to AF2/RoseTTAFold.
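The alignment processing step can be sketched as a greedy filter over already aligned sequences. This is an illustrative assumption about how coverage and identity are measured (coverage as the non-gap fraction of query columns, identity over columns where both sequences are non-gap); dedicated tools such as `hhfilter` should be preferred in practice.

```python
def coverage(aligned_seq):
    """Fraction of alignment columns that are not gaps."""
    return sum(1 for c in aligned_seq if c != "-") / len(aligned_seq)

def identity(a, b):
    """Identity over columns where both aligned sequences have a residue."""
    pairs = [(x, y) for x, y in zip(a, b) if x != "-" and y != "-"]
    if not pairs:
        return 0.0
    return sum(1 for x, y in pairs if x == y) / len(pairs)

def filter_msa(query, hits, min_coverage=0.5, max_identity=0.9):
    """Keep the query, then add hits that are long enough and not redundant."""
    kept = [query]
    for hit in hits:
        if coverage(hit) <= min_coverage:
            continue  # too short relative to the query
        if any(identity(hit, k) > max_identity for k in kept):
            continue  # redundant with a sequence already kept
        kept.append(hit)
    return kept

query = "ACDEFGHIKL"
hits = ["ACDEFGHIKL", "ACD-------", "ACDEYGHMKL", "-CDEYGHMKL"]
filtered = filter_msa(query, hits)
print(filtered)  # ['ACDEFGHIKL', 'ACDEYGHMKL']
```

The greedy order matters: a hit is compared only against sequences already kept, so sorting hits by search score beforehand is a reasonable design choice.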

Protocol: Neural Network Training (AlphaFold2 Architecture)

Objective: To train a model that maps an MSA and templates to accurate 3D coordinates.

Core Modules: Evoformer (MSA processing) and Structure Module (3D generation).

Procedure:

- Input Embedding: The MSA and (optional) PDB template features are encoded into initial representations.

- Evoformer Blocks (48 blocks): Applies axial attention mechanisms.

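- MSA Stack: Updates the MSA representation with row-wise attention (biased by the pair representation) and column-wise attention.

- Pair Stack: Updates the pair representation from the MSA via an outer product mean, then refines it with triangle multiplicative updates and triangle attention.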

- This iteratively extracts co-evolutionary and physical constraints.

- Structure Module: Iteratively refines a set of residue-specific "rigid bodies" (translations and rotations) to produce final 3D atomic coordinates, including side-chains.

- Loss Function: Minimizes a composite loss: Frame Aligned Point Error (FAPE) on backbone and side chains, distogram prediction error, and confidence metric (pLDDT) error.
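One way the Evoformer passes MSA information to the pair representation is an outer product mean over sequences. The sketch below shows only that averaging operation on nested Python lists; the learned linear projections that AlphaFold2 applies before and after it are deliberately omitted, and the tensor layout is an assumption made for readability.

```python
def outer_product_mean(msa_rep):
    """msa_rep[s][i] is a channel vector for sequence s at residue i.

    Returns out[i][j][a][b] = mean over s of msa_rep[s][i][a] * msa_rep[s][j][b].
    """
    n_seq = len(msa_rep)
    n_res = len(msa_rep[0])
    n_ch = len(msa_rep[0][0])
    out = [[[[0.0] * n_ch for _ in range(n_ch)] for _ in range(n_res)]
           for _ in range(n_res)]
    for i in range(n_res):
        for j in range(n_res):
            for a in range(n_ch):
                for b in range(n_ch):
                    total = sum(msa_rep[s][i][a] * msa_rep[s][j][b]
                                for s in range(n_seq))
                    out[i][j][a][b] = total / n_seq
    return out

# Two sequences, two residues, one channel.
msa_rep = [[[1.0], [2.0]],
           [[3.0], [4.0]]]
pair_update = outer_product_mean(msa_rep)
print(pair_update[0][1][0][0])  # (1*2 + 3*4) / 2 = 7.0
```

Because the average runs over sequences, columns that co-vary across the alignment produce a distinct pair signal, which is how co-evolution reaches the pair track.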

Diagram 1: AlphaFold2 Inference Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for Protein AI Research

| Item | Function | Example/Provider |

|---|---|---|

| ColabFold | Cloud-based AF2/RoseTTAFold suite with fast MMseqs2 search. | GitHub: "sokrypton/ColabFold" |

| HH-suite | Extremely fast protein homology detection & MSA generation. | Toolkit: hhblits, hhsearch |

| PyMOL / ChimeraX | Molecular visualization for analyzing predicted structures. | Schrödinger LLC / UCSF |

| AlphaFold Protein Structure Database | Pre-computed AF2 predictions for the human proteome & model organisms. | EBI / Google DeepMind |

| RoseTTAFold Server | Web server for running the RoseTTAFold model. | University of Washington |

| PDBx/mmCIF Format | Standard file format for representing atomic coordinates and metadata. | wwPDB |

| Biopython | Python library for biological computation, including PDB parsing. | Biopython Project |

Validation Protocols and Quantitative Assessment

Model predictions must be validated against experimental data.

Protocol: Validating a Predicted Structure with Cryo-EM

Objective: To assess the accuracy of an AI-predicted model against an experimentally derived cryo-EM map.

Reagents: Predicted model (PDB format), experimental cryo-EM map (.mrc file), validation software.

Procedure:

- Real-Space Refinement: Fit the predicted atomic model into the cryo-EM density map using software like `PHENIX` or `REFMAC`.

- Command (PHENIX): `phenix.real_space_refine predicted_model.pdb map_file.mrc`

- Quantitative Metrics Calculation:

- Cross-Correlation (CC): Measures global fit between model and map. Target: CC > 0.7.

- Q-Score: Per-residue fit assessment. Target: Q-score > 0.7.

- Ramachandran Outliers: Assess backbone torsion plausibility.

- Analysis: Identify regions of poor fit (low local CC/Q-score), which may indicate conformational flexibility or prediction error.
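The global cross-correlation metric is, at its core, a Pearson correlation between the experimental density and a density computed from the model on the same grid. The sketch below assumes both maps have already been sampled on an identical grid and flattened to lists; the voxel values are made up for illustration, and real validation tools add masking and resolution handling.

```python
import math

def cross_correlation(map_a, map_b):
    """Pearson correlation between two equally sized, flattened density maps."""
    n = len(map_a)
    mean_a = sum(map_a) / n
    mean_b = sum(map_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(map_a, map_b))
    var_a = sum((a - mean_a) ** 2 for a in map_a)
    var_b = sum((b - mean_b) ** 2 for b in map_b)
    return cov / math.sqrt(var_a * var_b)

# Hypothetical voxel values for an experimental map and a model-derived map.
experimental = [0.0, 0.2, 0.9, 1.0, 0.1]
model_density = [0.1, 0.3, 0.8, 0.9, 0.0]
cc = cross_correlation(experimental, model_density)
print(round(cc, 3), cc > 0.7)
```

Computing the same quantity within a window around each residue gives the local CC used to flag poorly fitting regions.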

Diagram 2: Cryo-EM Validation Workflow

Protein sequence databases are the foundational language corpus from which AI models learn the grammar of protein folding. The methodologies outlined here—from MSA generation to experimental validation—form the core of modern structural bioinformatics. Future research, including the author's thesis work, focuses on: 1) Leveraging even larger, more diverse sequence datasets; 2) Training entirely without explicit template information; and 3) Integrating orthogonal data (e.g., SAXS, NMR chemical shifts) directly into the training pipeline to guide predictions for novel protein folds and complexes.

This technical guide delineates the architectural blueprint underlying the transformative success of AlphaFold2 (AF2) and RoseTTAFold in protein structure prediction. The core thesis posits that the unprecedented accuracy of these models stems from a synergistic integration of three conceptual "tracks": a one-dimensional (1D) sequence track, a two-dimensional (2D) distance/geometry track, and a three-dimensional (3D) atomic coordinate track. Central to this integration is the strategic deployment of specialized attention mechanisms that facilitate communication between these tracks, followed by explicit 3D refinement modules that iteratively polish the final atomic model. This architecture represents a paradigm shift from purely physical simulations to learned, data-driven refinement within a physically plausible framework, directly informed by the vast evolutionary, structural, and physicochemical data encoded in their training sets.

Core Architectural Components: Attention and Refinement

The Tripletrack Framework and Inter-Track Communication

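The foundational innovation is a multi-track network that processes and exchanges information across several representations of a protein. The three-track (1D, 2D, 3D) terminology comes from RoseTTAFold; AlphaFold2 follows the same pattern with its MSA representation, pair representation, and structure module.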

Experimental Protocol for Tripletrack Training:

- Input Embedding: The target protein sequence (length N) is embedded using a multiple sequence alignment (MSA) and pairwise features (e.g., from HHblits, Jackhmmer).

- Track Initialization:

- 1D Track: Initialized with sequence and MSA embeddings.

- 2D Track: Initialized with predicted residue-pair distances (from preliminary networks) and orientations.

- 3D Track: Initialized with a cloud of candidate atomic coordinates, often starting from a backbone frame derived from predicted distances.

- Iterative Propagation (Evoformer & Structure Module): A series of stacked blocks (Evoformer in AF2, equivalent in RoseTTAFold) process the tracks. Key operations include:

- Intra-Track Attention: Within the 1D track, row-wise and column-wise gated self-attention on the MSA representation captures evolutionary couplings.

- Inter-Track Attention: Axial attention operations allow the 1D and 2D tracks to update each other. The 2D track informs the 1D track about geometric constraints, while the 1D track provides sequence context to resolve geometric ambiguities.

- 2D-to-3D Projection: Information from the updated 2D track (distances, angles) is used by the structure module to generate a preliminary 3D atomic model.

- 3D-to-2D Feedback: The generated 3D coordinates are "reprojected" to compute new pairwise distances and orientations, which are fed back to update the 2D track, closing the loop.
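The 3D-to-2D feedback step amounts to recomputing pairwise distances from coordinates and discretizing them into bins. The following sketch assumes Cα-only coordinates and an illustrative binning of 64 equal-width bins between 2 Å and 22 Å; the exact bin edges used by each model differ and are not reproduced here.

```python
import math

def pairwise_distances(coords):
    """Full symmetric matrix of Euclidean distances between 3D points."""
    return [[math.dist(p, q) for q in coords] for p in coords]

def distance_bin(d, min_d=2.0, max_d=22.0, n_bins=64):
    """Map a distance to a bin index; out-of-range values go to the end bins."""
    width = (max_d - min_d) / n_bins
    index = int((d - min_d) // width)
    return max(0, min(n_bins - 1, index))

# Hypothetical C-alpha trace of three residues.
coords = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (3.8, 3.8, 0.0)]
dist = pairwise_distances(coords)
binned = [[distance_bin(d) for d in row] for row in dist]
print(round(dist[0][2], 3), binned[0][1])
```

Because distances depend only on relative positions, this 2D feature is unchanged by any rotation or translation of the 3D model.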

3D Refinement Modules

The initial 3D output from the structure module undergoes further refinement.

Experimental Protocol for 3D Refinement:

- Initial Model Generation: The structure module outputs atom positions for all heavy atoms (N, Cα, C, O, Cβ, etc.).

- Iterative Refinement (as in AF2's recycling): The entire network (or a dedicated refinement sub-network) is run repeatedly. In each recycling step, outputs of the previous pass (in AF2, the first-row MSA representation, the pair representation, and the predicted coordinates encoded as binned inter-residue distances) are fed back as additional inputs, allowing the network to correct errors.

- Physical Relaxation: The final ranked model(s) are subjected to a short restrained energy minimization using a force field (e.g., Amber) to remove minor steric clashes and optimize bond geometries, while remaining close to the neural network prediction.

- Confidence Metrics: The model outputs a per-residue confidence score (pLDDT) and a predicted TM-score (pTM) for global accuracy assessment.
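A per-residue pLDDT value can be understood as the expected value of a predicted distribution over lDDT bins. The sketch below assumes 50 equal-width bins covering 0 to 100, which matches the published AlphaFold2 description as far as we are aware; the probabilities are invented for the example.

```python
def plddt_from_probs(bin_probs):
    """Expected lDDT given probabilities over equal-width bins on [0, 100]."""
    n_bins = len(bin_probs)
    width = 100.0 / n_bins
    centers = [(k + 0.5) * width for k in range(n_bins)]
    return sum(p * c for p, c in zip(bin_probs, centers))

# A confident residue: all probability mass in the top bin (center 99).
confident = [0.0] * 49 + [1.0]
# A maximally uncertain residue: uniform probability over all bins.
uncertain = [1.0 / 50] * 50

print(plddt_from_probs(confident))   # 99.0
print(round(plddt_from_probs(uncertain), 6))
```

A flat distribution yields a mid-range score, which is one reason low pLDDT is usually read as uncertainty or disorder rather than as a specific wrong prediction.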

Diagram 1: Tripletrack Architecture & Information Flow

Quantitative Data & Performance Comparison

Table 1: Core Architectural & Performance Comparison of AF2 and RoseTTAFold

| Feature | AlphaFold2 (DeepMind) | RoseTTAFold (Baker Lab) |

|---|---|---|

| Core Architecture | Evoformer trunk (MSA and pair representations) feeding a 3D structure module | Three-track network (1D, 2D, 3D) |

| Key Attention Mechanism | Evoformer (Row/Column Gated Self-Attention + Triangle Updates) | Tailored 3D Track Attention (integrates 1D,2D,3D info in each block) |

| 3D Initialization | All residue frames start at the origin and are refined by Invariant Point Attention (IPA) within the Structure Module | SE(3)-Transformer on the 3D track (end-to-end version) or pyRosetta folding from predicted 2D restraints |

| Refinement Strategy | End-to-end recycling (3-4 cycles) + Amber relaxation | Iterative refinement network (Rosetta-based) after generation |

| Training Data (PDB) | ~170k structures (PDB70) | ~35k structures (initially) |

| Typical CASP14 GDT_TS | ~92 (Dominant performance) | ~85 (Highly competitive) |

| Inference Speed | Minutes to hours (GPU cluster) | Faster, designed for accessibility (single GPU) |

| Key Output | 5 ranked models, per-residue pLDDT, predicted TM-score (pTM) | Ranked models, confidence scores, predicted contacts |

Table 2: Impact of 3D Refinement on Model Quality (Illustrative Metrics)

| Refinement Stage | Typical RMSD Reduction (Å)* | Typical clash score (MolProbity) Improvement | Computational Cost (% of total) |

|---|---|---|---|

| Initial Structure Module Output | Baseline (e.g., 5.0 Å) | High (>10) | ~70% |

| Iterative Network Recycling | 10-25% (e.g., 4.0 Å) | Moderate | ~25% |

| Final Physical Relaxation | Minor (<0.5 Å) | Significant (to <2) | ~5% |

*Reduction in backbone RMSD relative to the known experimental structure for a medium-sized protein.

Detailed Experimental Methodology

Protocol for Training an AlphaFold2/RoseTTAFold-Style Model:

Data Curation:

- Compile a non-redundant set of protein structures from the PDB (e.g., PDB70 cluster).

- Generate MSAs for each sequence using sequence databases (UniRef, BFD) with tools like Jackhmmer/HHblits.

- Compute auxiliary features: template structures (via HHSearch), secondary structure (DSSP), solvent accessibility, etc.

Model Architecture Implementation:

- Build the Evoformer block with efficient axial attention mechanisms to handle large MSAs (Nseq x Nres).

- Implement the structure module using IPA or equivalent to perform SE(3)-equivariant transformations.

- Integrate the recycling mechanism by feeding the previous pass's representations and predicted 3D coordinates back in as input features for subsequent passes.

Loss Functions & Training:

- Frame Aligned Point Error (FAPE): The primary 3D loss, measuring error in atom positions within local reference frames.

- Distogram Loss: Supervises the 2D track using binned distance probabilities.

- Auxiliary Losses: Include masked MSA loss, pLDDT regression loss, and predicted TM-score loss.

- Training is performed on distributed GPU clusters for several weeks, using Adam or similar optimizer with gradient checkpointing.
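The FAPE term can be sketched in plain Python to show why it is insensitive to the global pose of the prediction. The version below assumes one frame per residue given as a rotation matrix and a translation, uses a 10 Å clamp and 10 Å scale as in the published AlphaFold2 description, and ignores side-chain frames and per-batch details; all coordinates are hypothetical.

```python
import math

def matvec(M, v):
    return [sum(M[r][k] * v[k] for k in range(3)) for r in range(3)]

def to_local(frame, x):
    """Express point x in the local frame (R, t), i.e. R^T (x - t)."""
    R, t = frame
    d = [x[k] - t[k] for k in range(3)]
    return [sum(R[k][a] * d[k] for k in range(3)) for a in range(3)]

def fape(pred_frames, pred_atoms, true_frames, true_atoms,
         clamp=10.0, scale=10.0, eps=1e-4):
    """Clamped mean distance between atoms viewed from every local frame."""
    total = 0.0
    for f_pred, f_true in zip(pred_frames, true_frames):
        for x_pred, x_true in zip(pred_atoms, true_atoms):
            gap = math.dist(to_local(f_pred, x_pred), to_local(f_true, x_true))
            total += min(math.sqrt(gap ** 2 + eps), clamp)
    return total / (scale * len(pred_frames) * len(pred_atoms))

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
true_atoms = [[0.0, 0.0, 0.0], [3.8, 0.0, 0.0], [3.8, 3.8, 0.0]]
pred_atoms = [[0.0, 0.0, 0.0], [3.8, 0.0, 0.0], [3.8, 3.8, 1.0]]
true_frames = [(IDENTITY, a) for a in true_atoms]
pred_frames = [(IDENTITY, a) for a in pred_atoms]
loss = fape(pred_frames, pred_atoms, true_frames, true_atoms)

# Apply a global rotation (90 degrees about z) and shift to the prediction only.
Q = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
shift = [5.0, -2.0, 7.0]

def move(p):
    return [a + b for a, b in zip(matvec(Q, p), shift)]

moved_atoms = [move(a) for a in pred_atoms]
moved_frames = [([[sum(Q[r][k] * R[k][c] for k in range(3)) for c in range(3)]
                  for r in range(3)], move(t)) for R, t in pred_frames]
loss_moved = fape(moved_frames, moved_atoms, true_frames, true_atoms)
print(abs(loss - loss_moved) < 1e-9)  # True: the loss ignores the global pose
```

Since every error is measured inside a local frame, no separate superposition step is needed before computing the loss.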

Inference & Model Selection:

- For a target sequence, generate MSA and features.

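- Run the network with each of its trained parameter sets (AF2 ships five, giving 5 models), optionally repeating with different random seeds.

- Rank models by their predicted confidence score (pLDDT or pTM).

- Subject the top-ranked models to a brief restrained energy minimization (relaxation).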
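The ranking step can be as simple as sorting candidate models by their mean per-residue pLDDT. The model names and scores below are hypothetical, and pipelines may rank by pTM or a combined score instead.

```python
def rank_by_mean_plddt(models):
    """Return model names sorted from highest to lowest mean pLDDT."""
    def mean_plddt(name):
        scores = models[name]
        return sum(scores) / len(scores)
    return sorted(models, key=mean_plddt, reverse=True)

# Hypothetical per-residue pLDDT values for three candidate models.
models = {
    "model_1": [90.0, 85.0, 70.0],
    "model_2": [95.0, 92.0, 88.0],
    "model_3": [60.0, 55.0, 80.0],
}
ranking = rank_by_mean_plddt(models)
print(ranking)  # ['model_2', 'model_1', 'model_3']
```

Averaging hides local problems, so inspecting the per-residue profile of the top model remains worthwhile before relaxation.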

Diagram 2: End-to-End Training and Inference Workflow

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Research Reagent Solutions for Methodology Development

| Item / Solution | Function in AF2/RoseTTAFold Research | Example / Provider |

|---|---|---|

| Protein Data Bank (PDB) | Primary source of ground-truth 3D structures for training and benchmarking. | RCSB PDB (rcsb.org) |

| Sequence Databases (UniRef, BFD) | Provide evolutionary information via homologous sequences for MSA construction. | UniProt Consortium, DeepMind's BFD |

| MSA Generation Tools | Software to search sequence databases and build deep, diverse MSAs. | HH-suite (HHblits), Jackhmmer (HMMER) |

| Template Search Tools | Identify structural homologs for use as supplementary input features. | HHSearch, MMseqs2 |

| Deep Learning Framework | Platform for implementing and training large transformer-based models. | JAX (AF2), PyTorch (RoseTTAFold) |

| Molecular Dynamics Engine | For final physical relaxation of predicted models to minimize clashes. | OpenMM (Amber force field), Rosetta |

| Model Evaluation Suites | Software to quantitatively assess predicted model accuracy. | MolProbity (clashes), CASP assessment tools (GDT_TS, RMSD) |

| High-Performance Compute (HPC) | GPU clusters (NVIDIA A100/V100) essential for training (weeks) and rapid inference. | Cloud (Google Cloud, AWS) or institutional HPC centers |

This technical guide examines the evolutionary trajectory of protein structure prediction, culminating in AlphaFold2 and RoseTTAFold. By analyzing key methodologies from Critical Assessment of protein Structure Prediction (CASP) experiments and historical tools, we delineate the technical innovations in training data and architectural design that enabled the modern deep learning revolution. The focus is on extractable lessons for ongoing research in drug development.

The accurate computational prediction of protein tertiary structure from amino acid sequence has been a grand challenge in biology for over 50 years. The breakthroughs of AlphaFold2 (AF2) and RoseTTAFold did not occur in a vacuum but were built upon decades of incremental progress, community benchmarking (notably CASP), and iterative refinement of both physical and knowledge-based approaches. This whitepaper contextualizes their training data and methodology within this historical framework, providing researchers with a clear technical lineage.

The CASP Experiment: The Crucible of Progress

The Critical Assessment of protein Structure Prediction (CASP), established in 1994, is a biennial blind experiment that has served as the definitive benchmark for the field.

CASP Methodology & Metrics

CASP releases amino acid sequences of proteins whose structures are recently solved but not yet public. Predictors submit their models, which are compared to the experimental structures using a suite of metrics.

Key Quantitative Metrics Used in CASP:

Table 1: Core CASP Evaluation Metrics

| Metric | Full Name | What it Measures | Interpretation |

|---|---|---|---|

| GDT_TS | Global Distance Test Total Score | Average percentage of Cα atoms under defined distance cutoffs (1, 2, 4, 8 Å) from native position. | 0-100 scale; higher is better. Primary metric for overall fold accuracy. |

| GDT_HA | Global Distance Test High Accuracy | More stringent version of GDT_TS with tighter distance thresholds (0.5, 1, 2, 4 Å). | Measures high-accuracy modeling, crucial for drug design. |

| RMSD | Root Mean Square Deviation | Root-mean-square of the distances between equivalent Cα atoms after optimal superposition. | In Ångströms; lower is better. Dominated by the largest deviations, so a few badly placed regions inflate it. |

| TM-score | Template Modeling Score | Length-normalized measure of structural similarity, less sensitive to local errors than RMSD. | 0-1 scale; >0.5 suggests correct fold, >0.8 high accuracy. |

| lDDT | local Distance Difference Test | Local superposition-independent score evaluating per-residue distance fidelity. | 0-100 scale; AF2's pLDDT confidence head is trained to predict it. |
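The GDT and RMSD definitions in the table can be illustrated with a simplified calculation. This sketch assumes the per-residue Cα deviations come from a single, already computed superposition; the official GDT procedure searches over many superpositions to maximize the count at each cutoff, so real scores can be higher. The deviations are invented for the example.

```python
import math

def rmsd(deviations):
    """Root-mean-square of per-residue deviations (in Å)."""
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))

def gdt(deviations, cutoffs):
    """Mean percentage of residues within each distance cutoff."""
    n = len(deviations)
    fractions = [sum(1 for d in deviations if d <= c) / n for c in cutoffs]
    return 100.0 * sum(fractions) / len(cutoffs)

# Hypothetical C-alpha deviations for a five-residue model.
deviations = [0.4, 0.9, 1.5, 3.0, 9.0]
gdt_ts = gdt(deviations, cutoffs=(1.0, 2.0, 4.0, 8.0))   # about 65
gdt_ha = gdt(deviations, cutoffs=(0.5, 1.0, 2.0, 4.0))   # about 50
print(round(gdt_ts, 1), round(gdt_ha, 1), round(rmsd(deviations), 2))
```

The single 9 Å outlier pushes the RMSD above 4 Å while GDT_TS still credits the four well-placed residues, which is the contrast the table describes.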

Evolutionary Stages of CASP Performance

CASP results document clear phases of technological advancement.

Table 2: Performance Evolution Across CASP Editions

| CASP Era | Dominant Methodology | Typical GDT_TS Range (Hard Targets) | Key Innovation | Limitation |

|---|---|---|---|---|

| Early (CASP1-3) | Threading, Simple Physics | 20-40 | Use of fragment libraries, pairwise potentials. | Limited by template recognition and force field accuracy. |

| Template-Based (CASP4-7) | Comparative Modeling | 40-70 | Improved sequence alignment, model combination. | Failed on novel folds with no templates. |

| Hybrid/Co-evolution (CASP8-12) | Co-evolution Analysis (DCA), Rosetta | 50-80 | Direct coupling analysis (DCA) for contact prediction. | Contact prediction saturation; difficulty in full-chain folding. |

| Deep Learning I (CASP13) | Deep ResNets for Contacts (AlphaFold1) | 60-85 | Deep learning of full inter-residue distance distributions. | Separated geometry generation from prediction. |

| Deep Learning II (CASP14) | End-to-End 3D (AlphaFold2, RoseTTAFold) | 85-95 | SE(3)-equivariant networks, integrated structure module. | Computational cost, conformational dynamics. |

Predecessor Tools and Foundational Methodologies

Knowledge-Based and Homology Modeling

SWISS-MODEL & MODELLER: Automated pipelines for comparative (homology) modeling. They rely on identifying a related protein with a known structure (template) and transferring its fold.

- Protocol: 1) Template identification via BLAST/HHblits. 2) Target-Template alignment. 3) Model building by satisfaction of spatial restraints. 4) Loop modeling and side-chain placement. 5) Energy minimization.

- Lesson: Highlighted the critical importance of accurate multiple sequence alignments (MSAs) and the "template bottleneck."

De Novo and Fragment-Assembly Approaches

Rosetta: A physics-based methodology combining knowledge-based statistics and physical energy terms.

- Protocol: 1) Fragment library generation from PDB (3-9 residue fragments). 2) Monte Carlo fragment insertion to explore conformational space. 3) Full-atom refinement using a detailed energy function (van der Waals, solvation, hydrogen bonding). 4) Clustering of low-energy decoys.

- Lesson: Demonstrated the power of massive conformational sampling and hybrid energy functions. Its failure on large proteins underscored the need for stronger long-range restraints.
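The Monte Carlo step in the protocol above relies on the Metropolis acceptance rule. The sketch below applies that rule to a toy problem: the "conformation" is a short list of angles, the energy is a made-up quadratic function, and random perturbations stand in for fragment insertions. None of this reproduces Rosetta's actual move set or score function.

```python
import math
import random

def metropolis_accept(delta_e, kT, rng):
    """Always accept downhill moves; accept uphill moves with Boltzmann probability."""
    if delta_e <= 0:
        return True
    return rng.random() < math.exp(-delta_e / kT)

def toy_energy(angles):
    """Placeholder energy with a single minimum at all-zero angles."""
    return sum(a * a for a in angles)

def monte_carlo(angles, n_steps=2000, kT=0.1, seed=0):
    """Run a Metropolis search and return the lowest energy encountered."""
    rng = random.Random(seed)
    current = list(angles)
    energy = toy_energy(current)
    best = energy
    for _ in range(n_steps):
        trial = list(current)
        i = rng.randrange(len(trial))
        trial[i] += rng.uniform(-0.5, 0.5)  # stand-in for a fragment insertion
        trial_energy = toy_energy(trial)
        if metropolis_accept(trial_energy - energy, kT, rng):
            current, energy = trial, trial_energy
            best = min(best, energy)
    return best

start = [3.0, -2.0, 1.5]
best_energy = monte_carlo(start)
print(toy_energy(start), best_energy < toy_energy(start))
```

Occasional uphill acceptances let the search escape local minima, which is why many independent trajectories are run and their low-energy decoys clustered.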

Co-evolutionary Contact Prediction

PSICOV, plmDCA, CCMpred: Statistical methods to identify co-evolving residue pairs from MSAs, implying spatial proximity.

- Protocol: 1) Gather deep MSA (>1000 effective sequences). 2) Compute inverse covariance matrix or use pseudo-likelihood maximization to infer direct couplings. 3) Rank residue pair couplings; top-scoring pairs predicted as contacts.

- Experiment: The critical experiment was applying these predicted contacts as restraints in Rosetta or molecular dynamics simulations (e.g., in CASP12). This dramatically improved de novo modeling for proteins with rich MSAs.

- Lesson: Provided the key long-range information needed for folding. Deep learning (AlphaFold1) later subsumed this by predicting a more precise distance distribution.
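Before ranking, raw coupling scores are commonly adjusted with the average product correction (APC), which removes background signal shared by a whole row or column. APC is not described in the protocol above; it is included here as a widely used post-processing step, and the score matrix is hypothetical.

```python
def apc_correct(scores):
    """Subtract row_mean[i] * row_mean[j] / overall_mean from each off-diagonal score."""
    n = len(scores)
    row_mean = [sum(scores[i][j] for j in range(n) if j != i) / (n - 1)
                for i in range(n)]
    overall = sum(scores[i][j] for i in range(n) for j in range(n) if i != j)
    overall /= n * (n - 1)
    return [[0.0 if i == j else scores[i][j] - row_mean[i] * row_mean[j] / overall
             for j in range(n)] for i in range(n)]

def top_pairs(corrected, min_separation=1):
    """Return (i, j, score) tuples with j - i >= min_separation, best first."""
    n = len(corrected)
    pairs = [(i, j, corrected[i][j]) for i in range(n)
             for j in range(i + min_separation, n)]
    return sorted(pairs, key=lambda p: p[2], reverse=True)

# Hypothetical symmetric coupling scores for four positions.
raw = [
    [0.0, 0.2, 0.9, 0.2],
    [0.2, 0.0, 0.2, 0.3],
    [0.9, 0.2, 0.0, 0.2],
    [0.2, 0.3, 0.2, 0.0],
]
corrected = apc_correct(raw)
ranked = top_pairs(corrected)
print(ranked[0][:2])  # (0, 2)
```

In practice `min_separation` is set to several residues so that trivially close neighbors along the chain do not dominate the predicted contacts.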

The Direct Precursors: AlphaFold1 and Related DL Tools

AlphaFold (2018, CASP13)

This system separated the prediction of spatial information from 3D structure generation.

- Training Data & Methodology:

- Input: Deep MSAs and paired amino acid features.

- Architecture: A deep residual network (ResNet) processed the MSA and pairwise features.

- Output: A discretized distance distribution (distogram) for each residue pair, plus backbone torsion angle distributions for each residue.

- Structure Generation: The predicted distances and angles were used as restraints in a separate gradient-descent-based optimization to produce a 3D coordinates file (PDB). This was a significant bottleneck.

- Key Innovation: Learning full pairwise distance distributions directly from MSA-derived features with a deep network, moving beyond co-evolution statistics.

- Limitation: The disjointed two-stage process (predict distances, then fold) limited accuracy and was computationally inefficient.

(Diagram 1: AlphaFold1 (CASP13) Two-Stage Architecture)

trRosetta (2019)

A similar deep learning approach that predicted inter-residue distances and orientations (angles), followed by Rosetta-based folding with restraints.

- Protocol: 1) Network predicts distance and ω/θ/φ angle maps. 2) These are converted to smooth restraint potentials. 3) A fast Rosetta folding simulation under these potentials generates the model.

The Paradigm Shift: Integrated End-to-End Learning

The core lesson learned by the AF2 and RoseTTAFold teams was that the two-stage process was suboptimal. The breakthrough was to train a network to output 3D coordinates directly, using the physics of protein structure implicitly within the network architecture.

Core Technical Lesson: The Importance of SE(3)-Equivariance

Previous networks produced outputs (distograms) that were invariant to rotations/translations of the input. A 3D structure must be equivariant—if the input frame of reference rotates, the output coordinates should rotate identically. AF2's "Structure Module" and RoseTTAFold's "3D Network" are designed to be SE(3)-equivariant, ensuring geometrically consistent predictions.
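The distinction between invariance and equivariance can be checked numerically. In this sketch a rigid motion (rotation about the z-axis plus a translation) is applied to hypothetical coordinates: the distance matrix stays the same (invariant), while the centroid, used here as a stand-in for any coordinate-valued output, moves with the input (equivariant).

```python
import math

def rigid_transform(points, theta, shift):
    """Rotate points about the z-axis by theta radians, then translate by shift."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + shift[0], s * x + c * y + shift[1], z + shift[2])
            for x, y, z in points]

def distance_matrix(points):
    return [[math.dist(p, q) for q in points] for p in points]

def centroid(points):
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))

coords = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (3.8, 3.8, 0.0), (0.0, 3.8, 1.0)]
theta, shift = math.pi / 3, (5.0, -2.0, 1.0)
moved = rigid_transform(coords, theta, shift)

# Invariance: pairwise distances do not change under the rigid motion.
max_distance_change = max(
    abs(a - b)
    for row_a, row_b in zip(distance_matrix(coords), distance_matrix(moved))
    for a, b in zip(row_a, row_b)
)

# Equivariance: transforming then averaging equals averaging then transforming.
centroid_gap = math.dist(
    centroid(moved),
    rigid_transform([centroid(coords)], theta, shift)[0],
)
print(max_distance_change < 1e-9, centroid_gap < 1e-9)
```

A network that outputs coordinates should behave like the centroid in this example: rotating the input frame of reference rotates the output, with no change to the internal geometry.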

Training Data Curation: The Unspoken Hero

Both systems leveraged vast, curated datasets:

- Protein Data Bank (PDB): Source of high-resolution experimental structures.

- Sequence Databases (UniRef, BFD, MGnify): Used to build massive MSAs.

- Known Structures for Templates: PDB was used to provide homologous templates (AF2).

- Critical Preprocessing: Rigorous filtering for quality, removal of sequence redundancy, and generation of paired MSAs across genomic databases were essential for learning evolutionary constraints.

(Diagram 2: Integrated End-to-End Training Pipeline)

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Protein Structure Prediction Research

| Item / Reagent | Category | Function / Purpose | Example/Provider |

|---|---|---|---|

| PDB (Protein Data Bank) | Primary Data | Repository of experimentally determined 3D structures for training, validation, and template sourcing. | rcsb.org |

| UniProt/UniRef | Sequence Database | Curated protein sequence clusters for MSA construction and feature extraction. | uniprot.org |

| MGnify | Metagenomic DB | Expands MSAs with evolutionary diverse sequences from metagenomic samples, crucial for orphan proteins. | ebi.ac.uk/metagenomics |

| HH-suite | Search Software | Sensitive homology detection tools (HHblits, HHsearch) for MSA generation and template identification. | github.com/soedinglab/hh-suite |

| ColabFold | Prediction Server | Integrated AF2/RF system with streamlined MSA generation, enabling fast, accessible predictions. | colabfold.com |

| Rosetta | Modeling Suite | For comparative modeling, de novo design, and refinement of deep learning models. | rosettacommons.org |

| PyMOL / ChimeraX | Visualization | Critical for analyzing, comparing, and presenting predicted vs. experimental 3D models. | Schrödinger LLC / UCSF |

| AlphaFold Protein Structure Database | Prediction DB | Pre-computed AF2 models for the proteomes of key organisms, enabling immediate lookup. | alphafold.ebi.ac.uk |

From Code to Structure: A Step-by-Step Guide to AI-Driven Protein Modeling

This technical guide details the core architectural components of AlphaFold2, a revolutionary deep learning system for protein structure prediction. Developed by DeepMind, this model achieved unprecedented accuracy in the 14th Critical Assessment of protein Structure Prediction (CASP14). The system's success is built upon three tightly integrated components: the Evoformer (a novel attention-based neural network), the Structure Module (a geometry-aware module), and a Recycling mechanism for iterative refinement. This analysis is framed within a broader research thesis investigating the comparative training data strategies and methodologies of AlphaFold2 and RoseTTAFold.

The Evoformer: Integrating Evolutionary and Physical Constraints

The Evoformer is the heart of AlphaFold2's reasoning engine. It operates on two core representations: a multiple sequence alignment (MSA) representation and a pair representation. Its design enables communication between these two streams, allowing evolutionary information from the MSA to inform spatial relationships in the pair representation, and vice versa.

Core Architectural Components

The Evoformer stack consists of 48 blocks. Each block contains two primary types of attention mechanisms applied to the two representations.

Key Operations in an Evoformer Block:

- MSA Row-wise Gated Self-Attention with Pair Bias: Updates each row (sequence) in the MSA representation. Crucially, the attention weights are biased by data from the pair representation, injecting inferred spatial constraints.

- MSA Column-wise Gated Self-Attention: Updates each column (residue position) in the MSA representation, integrating evolutionary information across homologous sequences.

- Transition Layers: Simple two-layer feed-forward networks applied after attention modules.

- Outer Product Mean: A critical operation that projects information from the MSA representation back to the pair representation. It computes an outer product for each pair of positions across MSA features and averages over the sequence dimension.

- Triangular Self-Attention around Starting and Ending Nodes: Operates on the pair representation. Two separate modules update each pair (i, j) using information from a third residue k, which encourages geometric consistency such as the triangle inequality on distances: one module attends over the pairs that share the starting residue i, and the other attends over the pairs that share the ending residue j.

- Triangular Multiplicative Update: A more efficient operation that performs a similar function to triangular attention, updating pair features using information from a third residue.
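As an illustration of the Outer Product Mean described above, the sketch below computes, for every pair of positions, the outer product of their per-sequence feature vectors and averages it over the sequence dimension. It is a simplified version under stated assumptions: the learned linear projections applied before and after the operation are omitted, and the toy MSA features are hypothetical.

```python
def outer_product_mean(msa):
    """msa[s][i] is a list of c features for sequence s at position i.

    Returns pair[i][j], a flattened c*c vector: the outer product of the
    features at positions i and j, averaged over all sequences s.
    """
    n_seq = len(msa)
    length = len(msa[0])
    c = len(msa[0][0])
    pair = [[[0.0] * (c * c) for _ in range(length)] for _ in range(length)]
    for row in msa:
        for i in range(length):
            for j in range(length):
                for a in range(c):
                    for b in range(c):
                        pair[i][j][a * c + b] += row[i][a] * row[j][b] / n_seq
    return pair

# Toy MSA representation: S=2 sequences, L=3 positions, c=2 channels.
msa = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[1.0, 0.0], [1.0, 0.0], [0.0, 2.0]],
]
pair = outer_product_mean(msa)
```

The result has shape L x L x (c*c), which is how per-sequence information in the MSA stream is converted into an update for the pair stream.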

Diagram 1: Data flow within a single Evoformer block (L=seq length, S=seq depth, c=channels).

Input Features and Embeddings

The Evoformer processes a rich set of input features derived from the target sequence and its homologs.

Table 1: Primary Input Features to AlphaFold2 Evoformer

| Feature Category | Specific Features | Dimensionality (per residue/pair) | Source |

|---|---|---|---|

| MSA Features | One-hot encoded MSA | L x S x (22 amino acids) | HHblits/JackHMMER against UniRef90 & BFD/MGnify |

| | Deletion probability | L x S x 1 | From MSA profile |

| | Position-Specific Scoring Matrix (PSSM) | L x 1 x 44 | Derived from MSA |

| Pair Features | Residue Index (Relative & Absolute) | L x L x 64 | Sequence position encoding |

| | Predicted Distogram (from pair logits) | L x L x 64 | Initial network pass |

| | Same Chain & Relative Chain One-hot | L x L x 4+ | For multimeric predictions |

The Structure Module: From Patterns to 3D Coordinates

The Structure Module translates the refined pair representation from the Evoformer into atomic coordinates. It is explicitly geometry-aware, using a variant of a spatial transformer that respects the principles of protein backbone geometry.

Architecture and Invariant Point Attention

The module represents each residue as a local frame, defined by a rotation and translation in 3D space. The key innovation is Invariant Point Attention (IPA). Unlike standard attention, IPA operates on points in 3D space and is invariant to global rotations and translations, ensuring the predicted structure is independent of the coordinate frame.

- Inputs: For each residue, a learned embedding from the Evoformer's MSA representation and the current frame (rotation, translation).

- IPA Mechanism: Computes attention weights based on both pairwise feature similarity (from the Evoformer's pair representation) and the relative positions of the residue frames in 3D space.

- Backbone Update: The output of the IPA layer is used to update the rotation and translation of each residue's local frame.

- Side-chain Prediction: After the backbone is predicted, a final network predicts side-chain rotamers (chi angles) using the same pair and backbone information.

Experimental Protocol: Structure Module Training

A critical component of the broader methodology is the training protocol for the Structure Module.

Protocol: End-to-end Training with Frame-Aligned Point Error (FAPE) Loss

- Objective: Minimize the Frame Aligned Point Error (FAPE) between predicted and ground truth atomic coordinates.

- Procedure: a. The Evoformer processes input features, outputting refined MSA and pair representations. b. The Structure Module, initialized with all frames at the origin, iteratively refines the frames over 8 layers (these layers are distinct from the recycling iterations described in the Recycling section). c. The FAPE loss is computed on the module's output. The loss is invariant to global rotations and translations: it expresses predicted and ground truth atom positions in each residue's local frame before measuring the distance between corresponding atoms (backbone N, Cα, C). d. The total loss is a weighted sum of the FAPE terms and auxiliary losses (e.g., distogram loss, masked MSA loss, van der Waals violation loss).

- Key Parameters: Clamping distance for FAPE: 10 Å. Weight on backbone torsion loss: 0.5. Weight on side-chain torsion loss: 0.5.
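The invariance property of FAPE can be demonstrated with a small, self-contained sketch. It assumes each frame is a (rotation matrix, translation) pair, uses the 10 Å clamp quoted above, and divides by an assumed 10 Å length scale; the small stabilizing constants used in practice are omitted, and all coordinates are hypothetical.

```python
import math

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[r][k] * v[k] for k in range(3)) for r in range(3))

def mat_mul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)] for r in range(3)]

def transpose(m):
    return [[m[c][r] for c in range(3)] for r in range(3)]

def rot_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def to_local(frame, point):
    """Express a global point in the local coordinates of frame (R, t): R^T (x - t)."""
    rot, trans = frame
    shifted = tuple(p - t for p, t in zip(point, trans))
    return mat_vec(transpose(rot), shifted)

def fape(pred_frames, pred_points, true_frames, true_points, clamp=10.0, scale=10.0):
    """Mean clamped distance between points viewed from corresponding local frames."""
    total, count = 0.0, 0
    for pf, tf in zip(pred_frames, true_frames):
        for pp, tp in zip(pred_points, true_points):
            err = math.dist(to_local(pf, pp), to_local(tf, tp))
            total += min(err, clamp)
            count += 1
    return total / (scale * count)

def rigid_move(frames, points, rot, shift):
    """Apply one global rotation and translation to all frames and points."""
    def move_point(p):
        return tuple(x + s for x, s in zip(mat_vec(rot, p), shift))
    new_frames = [(mat_mul(rot, r), move_point(t)) for r, t in frames]
    return new_frames, [move_point(p) for p in points]

true_points = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (5.0, 3.0, 1.0)]
true_frames = [(rot_z(0.0), true_points[0]), (rot_z(0.4), true_points[1])]

# A prediction that differs from the truth only by a global rigid motion.
moved_frames, moved_points = rigid_move(true_frames, true_points, rot_z(1.1), (4.0, -2.0, 7.0))
loss_rigid = fape(moved_frames, moved_points, true_frames, true_points)

# A prediction with one atom displaced by 1 Angstrom.
shifted_points = [(1.0, 0.0, 0.0)] + true_points[1:]
loss_shifted = fape(true_frames, shifted_points, true_frames, true_points)
```

Because every error is measured inside a local frame, a globally rotated or translated prediction scores zero, while a genuinely misplaced atom is penalized from the viewpoint of every frame.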

Diagram 2: Structure Module workflow with iterative refinement and loss calculation.

Recycling: Iterative Refinement

Recycling is the mechanism that allows AlphaFold2 to refine its predictions iteratively, closing the gap between initial estimates and final high-accuracy structures.

Mechanism

The output from one pass through the entire network (Evoformer + Structure Module) is fed back as an additional input to the next pass.

- Recycled Features: The primary recycled feature is the "pseudo-beta" distance map (distances between Cβ atoms, with Cα used for glycine) derived from the Structure Module's predicted coordinates. This 2D map is embedded and added to the initial pair representation input, alongside the previous cycle's pair representation and first-row MSA representation.

- Number of Recycles: The model typically performs 3 recycling iterations during training and inference. The final prediction is taken from the last iteration.

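- Gradient Flow: In the published AlphaFold2 training procedure, gradients are stopped between recycling iterations, so only the final iteration is backpropagated. The number of iterations is sampled randomly for each training batch, which teaches the model to refine a prediction from any intermediate state.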
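The control flow of recycling can be sketched with a toy stand-in for the network. Everything here is hypothetical: `toy_model` simply moves the previous estimate halfway toward a target derived from the features, and the convention that `num_recycles=3` means one initial pass plus three recycled passes is an assumption of this sketch.

```python
def toy_model(features, prev_estimate):
    """Hypothetical stand-in for one full network pass.

    Moves the previous estimate halfway toward a target derived from the features.
    """
    target = sum(features) / len(features)
    return prev_estimate + 0.5 * (target - prev_estimate)

def predict_with_recycling(features, num_recycles=3):
    """Run the model repeatedly, feeding each output back in as an input."""
    estimate = 0.0  # the recycled input is empty (zero) on the first pass
    history = []
    for _ in range(num_recycles + 1):  # one initial pass plus the recycled passes
        estimate = toy_model(features, estimate)
        history.append(estimate)
    return estimate, history

final, history = predict_with_recycling([2.0, 4.0, 6.0], num_recycles=3)
```

Each pass sees the same input features plus the previous output, so later passes start from a better estimate than earlier ones.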

Table 2: Impact of Recycling on Prediction Accuracy (CASP14 Metrics)

| Metric | No Recycling (1 cycle) | With Recycling (3 cycles) | Improvement |

|---|---|---|---|

| Global Distance Test (GDT_TS) | ~85 (Estimated) | 92.4 (on CASP14 targets) | Significant |

| Local Distance Difference Test (lDDT) | ~80 (Estimated) | ~90+ (on CASP14 targets) | Significant |

| Predicted Aligned Error (PAE) Coherence | Lower | Higher | More self-consistent |

Comparative Methodology: AlphaFold2 vs. RoseTTAFold Recycling

Within the thesis context, a key comparison point is the recycling/refinement strategy.

RoseTTAFold's Approach: Uses a three-track network (1D seq, 2D distance, 3D coord) with continuous information exchange within a single forward pass. It employs a simpler "refinement" step rather than explicit multi-cycle recycling.

AlphaFold2's Approach: Employs explicit, discrete recycling cycles where the entire network is run multiple times with updated inputs. This is a form of iterative refinement inspired by traditional molecular dynamics relaxation.

Diagram 3: AlphaFold2's three-cycle recycling pipeline with gradient flow.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Materials for AlphaFold2 Methodology Research

| Item / Reagent | Function / Purpose | Example/Notes |

|---|---|---|

| MSA Databases | Provide evolutionary information for the Evoformer. | UniRef90 (clustered sequences), BFD (Big Fantastic Database), MGnify (metagenomic). RoseTTAFold often uses UniRef30. |

| Template Databases | Provide structural homologs for initial guidance (optional in AF2). | PDB (Protein Data Bank) archive. Requires mmCIF formatting and filtering. |

| HH-suite Tools | Generate deep, sensitive MSAs from sequence databases. | HHblits, HHsearch. Critical for building the initial MSA representation. |

| JackHMMER | Alternative tool for iterative MSA construction. | Used in AlphaFold2's original pipeline against UniRef90. |

| GPU Hardware | Accelerates training and inference of large transformer models. | NVIDIA A100/V100 for full training. Inference possible on high-end consumer GPUs (e.g., RTX 3090). |

| Deep Learning Framework | Implementation and training platform. | AlphaFold2: JAX/Haiku. RoseTTAFold: PyTorch. |

| Open-Source Implementations | Enable methodology study and adaptation. | AlphaFold2 (Open Source) by DeepMind, ColabFold (streamlined), RoseTTAFold by Baker Lab. |

| Structure Evaluation Metrics | Quantify prediction accuracy against ground truth. | pLDDT (predicted confidence), PAE (inter-residue error), TM-score, GDT_TS, DockQ (for complexes). |

Within the transformative landscape of protein structure prediction, the release of AlphaFold2 by DeepMind marked a paradigm shift. The subsequent, rapid publication of RoseTTAFold by the Baker laboratory presented a complementary, open-source framework that validated and extended key architectural concepts. A core thesis unifying these systems posits that the revolutionary accuracy stems not merely from increased data or compute, but from the explicit, synergistic integration of heterogeneous data types throughout the network architecture. This whitepaper provides an in-depth technical guide to RoseTTAFold's foundational innovation: its three-track network that concurrently processes one-dimensional (1D) sequence, two-dimensional (2D) distance, and three-dimensional (3D) coordinate information. This design directly addresses the fundamental biomolecular principle that sequence dictates pairwise interactions, which in turn define the three-dimensional folded structure.

Architectural Deep Dive: The Three-Track Network

RoseTTAFold's neural network is engineered as three parallel "tracks" that exchange information iteratively through a transformer-like attention mechanism. Each track specializes in a distinct representation of the protein.

Track 1: 1D Sequence Profile Track. This track processes evolutionary information derived from multiple sequence alignments (MSAs). Inputs include position-specific scoring matrices (PSSMs) and residue pair features. It models patterns of co-evolution and conservation along the protein chain.

Track 2: 2D Distance Geometry Track. This track operates on a 2D representation of pairwise relationships between residues. It processes an initial, noisy distance map and refines it over many cycles, predicting probabilities for distances between Cβ atoms (Cα for glycine) and relative orientations.

Track 3: 3D Spatial Coordinate Track. This track explicitly models the protein backbone in three dimensions. It starts from a random or template-derived initial structure and iteratively refines the 3D coordinates (specifically the backbone frames) based on information flowing from the 1D and 2D tracks.

The critical innovation is the "trunk" module, where information between tracks is exchanged via triangular multiplicative updates and axial attention mechanisms. At each layer, each track receives updated information from the other two, allowing, for example, a detected 3D steric clash to influence the 2D distance predictions, which can then alter the inferred sequence constraints.

Diagram Title: RoseTTAFold Three-Track Architecture & Information Flow

Training Data & Methodology: A Comparative Analysis with AlphaFold2

Both AlphaFold2 and RoseTTAFold leverage the same fundamental data universe but differ in training emphasis and architectural implementation of data integration.

Primary Data Sources:

- Protein Data Bank (PDB): Source of high-resolution experimental structures for training.

- Sequence Databases (UniRef, BFD, MGnify): Used to generate multiple sequence alignments (MSAs) for input proteins.

- Structural Homology Databases (PDB70): Used for template-based modeling features.

A shared methodological feature lies in the generation of training examples. Both systems employ aggressive data augmentation, including:

- Crop-and-replace techniques for generating partial structures.

- Adding noise to MSAs and initial coordinate frames.

- Masking portions of input data to force robust learning.

Table 1: Comparative Training & Architectural Data Usage

| Feature | AlphaFold2 | RoseTTAFold |

|---|---|---|

| Core Network Architecture | Evoformer (2D-focused) + Structure Module (3D) | Integrated Three-Track Network (1D, 2D, 3D in parallel) |

| Primary MSA Processing | Heavy, within Evoformer stack | Lightweight initial processing, deeper integration in tracks |

| Explicit 3D Track | In separate Structure Module | Integrated from the first layer in Track 3 |

| Information Integration | Sequential: Evoformer → Structure Module | Continuous, iterative between all three tracks |

| Template Handling | Separate pair representation | Integrated into the 2D and 1D track inputs |

| Training Compute | ~128 TPUv3 cores for weeks | ~4 GPUs for 10 days (original model) |

| Key Loss Functions | FAPE (Frame Aligned Point Error), distogram, confidence | FAPE, distogram, masked residue recovery, confidence |

Table 2: Key Quantitative Performance Metrics (CAMEO & CASP14)

| Metric | AlphaFold2 (Median) | RoseTTAFold (Median) | Notes |

|---|---|---|---|

| Global Distance Test (GDT_TS) | ~92.4 (CASP14) | ~85-88 (CASP14) | Higher is better (0-100 scale) |

| Local Distance Difference Test (lDDT) | ~90+ (CASP14) | ~85+ (CASP14) | Higher is better (reported here on a 0-100 scale) |

| TM-score | ~0.95 (CASP14) | ~0.88 (CASP14) | >0.5 indicates correct fold |

| Inference Time (per target) | Minutes to hours* | Minutes to hours* | Highly dependent on MSA depth & length |

| Model Parameters | ~93 million | ~48 million | RoseTTAFold is a more compact network |

*RoseTTAFold is generally faster due to its smaller size and efficient MSA generation pipeline.
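For readers less familiar with the TM-score row in Table 2, the sketch below evaluates the standard TM-score expression for a fixed superposition, using the length-dependent scale d0 = 1.24 (L - 15)^(1/3) - 1.8. Two simplifications are assumptions of this sketch: the full metric maximizes the score over superpositions, which is not done here, and d0 is floored at 0.5 Å for short chains.

```python
def tm_d0(length):
    """Length-dependent distance scale used to normalize TM-score."""
    if length <= 15:
        return 0.5  # assumed floor for very short chains
    return max(0.5, 1.24 * (length - 15) ** (1.0 / 3.0) - 1.8)

def tm_score(distances, target_length):
    """TM-score for one fixed superposition.

    distances: per-residue deviations (in Angstroms) between aligned
    predicted and experimental positions.
    """
    d0 = tm_d0(target_length)
    return sum(1.0 / (1.0 + (d / d0) ** 2) for d in distances) / target_length

perfect = tm_score([0.0] * 100, 100)
at_scale = tm_score([tm_d0(100)] * 100, 100)
```

A residue that deviates by exactly d0 contributes one half, which is why the score degrades smoothly instead of being dominated by a few badly placed residues.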

Experimental Protocol: Key Validation Experiments

Protocol 1: Ablation Study on Track Communication

- Objective: To quantify the contribution of inter-track information exchange to prediction accuracy.

- Methodology:

- Train multiple RoseTTAFold variants with specific communication pathways disabled (e.g., 1D→3D only, 2D→3D only, no communication).

- Evaluate each variant on a standardized test set (e.g., CASP14 targets).

- Measure key metrics: GDT_TS, lDDT, and the accuracy of predicted distograms.

- Result: Full three-track communication consistently outperforms all ablated variants, with the removal of the 3D→2D feedback loop causing the most significant drop in accuracy, underscoring the importance of 3D geometric constraints.

Protocol 2: De Novo vs. Template-Based Modeling Assessment

- Objective: To determine the network's ability to fold proteins without evolutionary or template information.

- Methodology:

- Prepare two input sets for the same target proteins: (a) full MSA + templates, (b) single sequence only (no MSA, no templates).

- Run RoseTTAFold inference on both sets.

- Compare predicted structures to the ground truth.

- Result: While accuracy degrades significantly without MSA data, RoseTTAFold can still produce plausible, low-energy folds for some small proteins, demonstrating that the three-track network learns fundamental physicochemical principles of folding, not just evolutionary statistics.

Diagram Title: RoseTTAFold End-to-End Workflow

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Research Reagent Solutions for RoseTTAFold Methodology

| Item | Function in Research Context |

|---|---|

| RoseTTAFold Software Suite | The core open-source software containing the three-track network model weights and inference scripts. Essential for running predictions. |

| HH-suite3 (HHblits/HHsearch) | Software for generating deep multiple sequence alignments from sequence databases and detecting structural homologs. Provides critical 1D and template input features. |

| PyRosetta or Rosetta | Macromolecular modeling suite. Used for final energy minimization and relaxation of RoseTTAFold's raw outputs to improve stereochemical quality and reduce clashes. |

| AlphaFold2 (Open Source) | Comparative benchmark tool. Running AlphaFold2 on the same targets allows for direct performance comparison and validation of RoseTTAFold predictions. |

| ColabFold (RoseTTAFold & AlphaFold2) | Cloud-based Jupyter notebook integrating MMseqs2 for fast MSA generation with both RoseTTAFold and AlphaFold2 models. Lowers entry barrier for predictions. |

| PDBx/mmCIF File Format | The standard format for representing final predicted 3D atomic coordinates, B-factors (confidence metrics), and associated metadata. |

| MolProbity or PHENIX | Structure validation software. Used to assess the geometric quality, rotamer normality, and clash score of predicted models post-refinement. |

| Custom Python Scripts (BioPython, NumPy, PyTorch) | For parsing inputs, manipulating outputs, automating pipelines, and analyzing confidence metrics (pLDDT, PAE) from predictions. |

This guide details practical workflows for protein structure prediction using ColabFold, a streamlined integration of AlphaFold2 and RoseTTAFold, within a local server environment. This work is framed within a broader thesis investigating the comparative training data and methodologies of AlphaFold2 (Jumper et al., 2021) and RoseTTAFold (Baek et al., 2021). The thesis posits that the predictive accuracy and efficiency of these models are directly correlated with the breadth of their multiple sequence alignment (MSA) generation strategies and the architectural nuances of their neural networks. Implementing local prediction pipelines allows for scalable, reproducible analysis critical for deconstructing model performance on specialized proteomes.

Core System Architecture & Quantitative Comparison

ColabFold combines the best-performing neural network architectures from AlphaFold2 (with MMseqs2 for MSA) and RoseTTAFold into a single, accessible package. The table below summarizes the key methodological components derived from each parent system.

Table 1: Core Algorithmic Components of AlphaFold2, RoseTTAFold, and ColabFold

| Component | AlphaFold2 | RoseTTAFold | ColabFold Implementation |

|---|---|---|---|

| MSA Generation | JackHMMER (UniRef90, MGnify) | HHblits (UniClust30) | MMseqs2 (UniRef30, Environmental) for speed. |

| Template Search | HMMsearch (PDB70) | HMMsearch (PDB70) | MMseqs2-based (PDB70) or disabled. |

| Core Network | Evoformer (Attention) + Structure Module | 3-track network (Seq, Dist, Coord) | AlphaFold2 (default) or RoseTTAFold selectable. |

| Training Data | ~170k PDB structures, MSAs | ~38k PDB structures, MSAs | No training; leverages pre-trained models. |

| Typical Runtime | 10-30 min (GPU, full DB) | 5-15 min (GPU, full DB) | 3-10 min (GPU, fast MMseqs2 MSA). |

Detailed Experimental Protocol for Local Deployment

Local Server Setup and Installation

Objective: Establish a reproducible, high-throughput prediction environment on a local Linux server with GPU support.

Materials & Protocol:

- Hardware: GPU with ≥16GB VRAM (e.g., NVIDIA A100, V100, RTX 3090), 64GB+ RAM, multi-core CPU, and 2TB+ SSD storage for databases.

- Software Prerequisites: Install Conda, Docker, NVIDIA Container Toolkit, and CUDA drivers.

- Database Installation:

- Verification: Run the test command to verify installation:

Batch Prediction Workflow

Objective: Execute structure predictions for a batch of protein sequences with controlled parameters.

Protocol:

- Input Preparation: Create a FASTA file (input.fasta) with unique headers.

- Command Execution:

- Output Analysis: The output directory contains predicted structures (.pdb), confidence scores (.json), alignment files, and visualizations. The rank column in *_scores_rank*.json indicates the top model by pLDDT or pTM score.
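The output analysis step can be sketched as a small ranking script. It assumes that each score file parses to a JSON object with a per-residue "plddt" list and a "ptm" value; the model names and numbers below are hypothetical, and real file names and keys should be checked against the actual output directory.

```python
import json

def mean_plddt(score_record):
    """Average the per-residue pLDDT values of one model."""
    values = score_record["plddt"]
    return sum(values) / len(values)

def rank_models(score_records):
    """Return model names sorted from highest to lowest mean pLDDT."""
    return sorted(
        score_records,
        key=lambda name: mean_plddt(score_records[name]),
        reverse=True,
    )

# Hypothetical score file contents, inlined as strings so the sketch is self-contained.
raw = {
    "model_1": '{"plddt": [90.0, 80.0, 70.0], "ptm": 0.81}',
    "model_2": '{"plddt": [95.0, 92.0, 89.0], "ptm": 0.88}',
    "model_3": '{"plddt": [60.0, 55.0, 50.0], "ptm": 0.42}',
}
records = {name: json.loads(text) for name, text in raw.items()}
ranking = rank_models(records)
```

In a real run, the strings would be replaced by the contents of the score files read from disk.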

Table 2: Key Command-Line Parameters for Experimental Design

| Parameter | Options | Function | Impact on Thesis Research |

|---|---|---|---|

| --model-type | alphafold2_ptm, alphafold2_multimer_v[1-3], roseTTAFold | Selects the underlying neural network. | Allows direct comparison of AF2 vs RoseTTAFold architecture performance on the same input. |

| --msa-mode | mmseqs2_uniref_env, mmseqs2_uniref, single_sequence | Controls MSA depth and use of environmental sequences. | Tests the thesis hypothesis on MSA diversity impact by isolating its contribution to accuracy. |

| --num-recycle | Integer (e.g., 3, 6, 12) | Number of recycling iterations (repeated passes through the network). | Investigates the relationship between iterative refinement and model convergence. |

| --num-models | 1, 3, or 5 | Number of models to predict per sequence. | Assesses predictive variance and ensemble reliability. |

| --rank | plddt, ptm, multimer | Metric for selecting the top model. | Evaluates which confidence metric best correlates with experimental accuracy for different protein classes. |

Visualization of the Integrated Workflow

Title: ColabFold Local Prediction Pipeline

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Software for Local Structure Prediction

| Item | Function / Purpose | Notes for Research |

|---|---|---|

| NVIDIA GPU (A100/V100/RTX 3090+) | Accelerates deep learning inference. | VRAM ≥16GB is critical for large multimers. |

| High-Speed SSD Array | Stores and provides fast read-access to multi-TB sequence databases. | Prevents I/O bottlenecks during MSA generation. |

| Conda / Python Environment | Isolates ColabFold dependencies and ensures reproducibility. | Use exact versions from conda.yaml. |

| Docker / Singularity | Alternative containerized deployment for cluster environments. | Enhances portability and reproducibility. |

| MMseqs2 (Local Server) | Ultra-fast, sensitive sequence searching for MSA generation. | Core to ColabFold's speed advantage; configurable sensitivity. |

| AlphaFold2 & RoseTTAFold Weights | Pre-trained neural network parameters. | Downloaded automatically; represents the core trained models under thesis investigation. |

| PDBx/mmCIF Tools | Utilities for handling and analyzing output structural models. | Used for model validation and comparison to experimental structures. |

| PyMOL / ChimeraX | Molecular visualization software. | Essential for qualitative assessment of predicted folds and domains. |

This article is framed within a thesis on AlphaFold2 (AF2) and RoseTTAFold (RF) methodology. The advent of these high-accuracy structure prediction tools has shifted the drug discovery paradigm, enabling the systematic targeting of novel protein folds and protein-protein interfaces (PPIs) previously inaccessible to structural characterization. This technical guide explores contemporary case studies and methodologies leveraging these breakthroughs.

The training data and neural network architectures of AF2 and RF, which integrate evolutionary sequence covariation with physical and geometric constraints, have produced proteome-scale structural libraries. For drug discovery, this means:

- Deorphanization of novel protein families.

- High-confidence modeling of PPIs and allosteric sites.

- Rapid generation of competitor protein structures for selectivity analysis.

Case Study Analysis: Quantitative Outcomes

The following table summarizes key quantitative results from recent drug discovery campaigns targeting novel structures informed by AF2/RF predictions.

Table 1: Quantitative Outcomes of Selected Drug Discovery Case Studies

| Target Class / Name | Predicted Structure Source | Experimental Validation Method | Key Metric (e.g., IC50, Ki) | Achieved Outcome |

|---|---|---|---|---|

| KRAS G12C (Allosteric) | AF2-guided cryptic pocket identification | X-ray Crystallography | IC50: 0.002 µM (Sotorasib) | FDA-approved drug (2021) |

| SARS-CoV-2 Main Protease (Mpro) | RF & AF2 models for ligand docking | Cryo-EM, Enzymatic Assay | Ki: 0.0031 µM (Nirmatrelvir) | FDA-approved drug (Paxlovid, 2021) |

| LARP1 (mTORC1 pathway) | AF2 prediction of PPI interface | SPR, Cell-based Assay | KD: 1.5 µM (Lead compound) | Disrupted PPI, reduced cancer cell proliferation |

| Novel Bacterial Kinase | AF2 models of entire protein family | FRET-based Activity Assay | IC50: 0.12 µM | Identified first-in-class inhibitor scaffold |

Experimental Protocols: From Prediction to Validation

Protocol: Integrating AF2/RF Predictions with Virtual Screening (VS)

Objective: To identify small-molecule binders for a novel, experimentally unresolved protein target.

- Target Selection & Modeling: Input target sequence into ColabFold (integrates AF2/RF). Generate multiple models (e.g., 5). Rank by predicted confidence score (pLDDT/pTM).

- Binding Site Prediction: Use algorithms (e.g., FPocket, SiteMap) on the highest-ranking model to identify potential ligandable pockets.

- Virtual Screening Library Preparation: Prepare a library of 1-10 million commercially available compounds (formats: SDF, SMILES). Generate 3D conformers and assign partial charges.

- Molecular Docking: Dock library compounds into the predicted binding site using software (e.g., Glide, AutoDock Vina). Use standard-precision then extra-precision modes.

- Post-Docking Analysis: Cluster top 1000 poses by chemical similarity. Apply MM-GBSA rescoring to refine affinity predictions.

- Hit Selection: Visually inspect top 50-100 compounds for sensible interactions. Select 20-50 for experimental purchase and testing.

Protocol: Validating a Predicted PPI Interface with Mutagenesis

Objective: To biochemically validate a protein-protein interface predicted by AF2 Multimer or RoseTTAFold.

- Complex Prediction: Input sequences of both interacting partners into AF2 Multimer. Analyze interface residue contacts and confidence (ipTM score).

- Mutant Design: Design alanine-scanning mutations for residues with high predicted interface probability (≥0.7). Design control mutations on a non-interacting surface.

- Protein Expression & Purification: Clone wild-type and mutant genes into expression vectors (e.g., pET series). Express in E. coli or HEK293 cells. Purify via His-tag affinity chromatography.

- Binding Affinity Measurement: Use Surface Plasmon Resonance (SPR) or Isothermal Titration Calorimetry (ITC).

- For SPR: Immobilize one partner on a CM5 chip. Flow purified wild-type and mutant partners over the surface at varying concentrations (e.g., 0-100 µM).

- For ITC: Titrate one protein (in syringe) into the other (in cell). Measure heat changes.

- Data Analysis: Fit binding isotherms to a 1:1 binding model. Compare dissociation constant (KD) of mutants to wild-type. A ≥10-fold increase in KD confirms the residue's critical role in the interface.
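The decision rule in the data analysis step reduces to a fold-change comparison. The sketch below applies the ≥10-fold criterion stated above; the mutant names and KD values are hypothetical placeholders, not measured data.

```python
def classify_mutants(kd_wild_type, kd_mutants, threshold=10.0):
    """Return {mutant: (fold_change, is_critical)} relative to the wild-type KD.

    All KD values must share the same unit (e.g., micromolar).
    """
    result = {}
    for name, kd in kd_mutants.items():
        fold = kd / kd_wild_type
        result[name] = (fold, fold >= threshold)
    return result

# Hypothetical KD values in micromolar, for illustration only.
calls = classify_mutants(
    kd_wild_type=1.5,
    kd_mutants={"Y45A": 30.0, "K102A": 2.1, "control_S200A": 1.6},
)
```

Including a control mutation on a non-interacting surface, as the protocol recommends, gives a baseline for how much KD varies when the interface is left intact.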

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Reagents and Materials for Target Validation

| Item | Function / Application | Example Vendor/Product |

|---|---|---|

| ColabFold Notebook | Cloud-based pipeline for running AF2/RF, no local GPU required. | GitHub / Colab Research |

| Homology Modeling Software | For comparative modeling if AF2 confidence is low in specific loops/regions. | Schrödinger Prime, MODELLER |

| Cryo-EM Grids (Quantifoil R1.2/1.3) | High-quality grids for structure validation of novel protein-ligand complexes. | Quantifoil, Thermo Fisher |

| SPR Sensor Chips (CM5) | Gold-standard for label-free, real-time kinetic analysis of protein-ligand and PPI interactions. | Cytiva |

| TR-FRET Assay Kits | High-throughput screening format for enzymatic activity or PPI inhibition. | Cisbio, Invitrogen |

| Mammalian Expression Vectors (pcDNA3.4) | High-yield transient expression of challenging human proteins for functional assays. | Thermo Fisher |

| Fragment Library | A collection of 500-2000 low molecular weight compounds for initial screening against novel pockets. | Enamine, Charles River |

Navigating Pitfalls: Accuracy Limits, Error Analysis, and Model Refinement

Within the ongoing research into AlphaFold2 and RoseTTAFold training data and methodology, interpreting the confidence metrics of predicted structures is paramount. This guide provides a technical deep dive into the two primary metrics: pLDDT (predicted Local Distance Difference Test) and PAE (Predicted Aligned Error), their calculation, interpretation, and critical limitations.

Core Confidence Metrics: Definitions and Calculations

pLDDT: Per-Residue Confidence

pLDDT is a per-residue estimate of the model's confidence on a scale from 0 to 100. It is produced by an auxiliary output head trained to predict the per-residue lDDT-Cα of the prediction against the experimental structure, and so reflects the expected local accuracy of the predicted backbone around a specific residue.

Experimental Protocol for pLDDT Benchmarking (as cited):

- Training: AlphaFold2 is trained on known structures from the PDB, using the true LDDT score (a measure of local distance differences) as a target for an auxiliary output head.

- Prediction: For a novel sequence, the model outputs a pLDDT value for each residue, representing its confidence in the local atomic placement.

- Validation: Benchmarking involves comparing predicted structures to experimentally solved ones (e.g., in CASP). The per-residue pLDDT is correlated with the observed Local Distance Difference Test (LDDT) score for that residue in the experimental structure.
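The observed score that pLDDT is benchmarked against can be sketched as follows. This is a simplified, Cα-only lDDT in pure Python, written under stated assumptions: a 15 Å inclusion radius and tolerance thresholds of 0.5, 1, 2 and 4 Å, with no stereochemistry checks. Production implementations differ in detail, and the function name `lddt_ca` is hypothetical.

```python
import math


def lddt_ca(pred, ref, cutoff=15.0, thresholds=(0.5, 1.0, 2.0, 4.0)):
    """Per-residue CA-only lDDT (0-100) of `pred` against `ref`.

    `pred` and `ref` are equal-length lists of (x, y, z) CA coordinates.
    No superposition is needed: only inter-residue distances are compared.
    """
    n = len(ref)
    scores = []
    for i in range(n):
        preserved, pairs = 0.0, 0
        for j in range(n):
            if i == j:
                continue
            d_ref = math.dist(ref[i], ref[j])
            if d_ref >= cutoff:
                continue  # only pairs that are close in the reference count
            diff = abs(math.dist(pred[i], pred[j]) - d_ref)
            # Fraction of tolerance thresholds within which the distance is kept
            preserved += sum(diff < t for t in thresholds) / len(thresholds)
            pairs += 1
        scores.append(100.0 * preserved / pairs if pairs else 0.0)
    return scores
```

Because the score depends only on internal distances, a prediction that is rigidly shifted or rotated relative to the reference still scores 100, which is why lDDT suits per-residue comparison without a global alignment.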

PAE: Pairwise Confidence

PAE is a 2D matrix that estimates the expected position error, in Ångströms, of residue j when the predicted and true structures are aligned on the local frame of residue i. Low error indicates high confidence in the relative placement of the two residues. Because the alignment is anchored on one residue of the pair, the matrix is not necessarily symmetric.

Experimental Protocol for PAE Utilization:

- Generation: The PAE matrix is produced by a dedicated "confidence head" in the AlphaFold2 network, trained to predict the expected distance error between aligned residues.

- Analysis: The matrix is used to assess domain packing, identify likely rigid domains (blocks of low intra-domain error), and diagnose inter-domain flexibility (high error between domains).

- Application: Researchers threshold the PAE matrix (e.g., at 10 Å) to define confident rigid groups and propose possible alternative arrangements for low-confidence regions.
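The thresholding step can be sketched as a graph problem: link two residues when their PAE is below the cutoff in both directions, then read off connected components as candidate rigid groups. This is a deliberately simple stand-in (single-linkage grouping), not the method used by any particular tool; the function name `rigid_groups` is hypothetical and the 10 Å default is the example value from the text.

```python
def rigid_groups(pae, threshold=10.0):
    """Group residue indices whose mutual PAE is below `threshold`.

    `pae` is a square list of lists in Angstroms. The larger of pae[i][j]
    and pae[j][i] is used, since the matrix need not be symmetric.
    """
    unassigned = set(range(len(pae)))
    groups = []
    while unassigned:
        seed = min(unassigned)
        unassigned.remove(seed)
        group, frontier = [seed], [seed]
        while frontier:
            i = frontier.pop()
            linked = [j for j in unassigned
                      if max(pae[i][j], pae[j][i]) < threshold]
            for j in linked:
                unassigned.remove(j)
            group.extend(linked)
            frontier.extend(linked)
        groups.append(sorted(group))
    return groups


# Toy 4-residue matrix: two confident pairs with high error between them.
toy_pae = [
    [0.5, 2.0, 20.0, 22.0],
    [2.0, 0.5, 21.0, 20.0],
    [20.0, 21.0, 0.5, 3.0],
    [22.0, 20.0, 3.0, 0.5],
]
print(rigid_groups(toy_pae))
```

Single-linkage grouping can chain loosely connected regions together, so on real matrices it is worth inspecting the heatmap alongside the groups.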

Data Presentation: Quantitative Interpretation Guidelines

Table 1: pLDDT Score Interpretation and Correlations

| pLDDT Range | Confidence Band | Interpretation | Expected Cα RMSD (approx.) |

|---|---|---|---|

| 90 - 100 | Very high | Backbone accuracy ~ atomic-level. Side-chains generally reliable. | < 1.0 Å |

| 70 - 90 | Confident | Backbone placement generally correct. Loops may deviate. | ~ 1.0 - 1.5 Å |

| 50 - 70 | Low | Potentially incorrect topology. Caution advised. Use for hypothesis generation. | ~ 2.5 - 4.0 Å |

| 0 - 50 | Very low | Unreliable prediction. Often corresponds to disordered regions. | > 4.0 Å |
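The bands in Table 1 are easy to apply programmatically. The sketch below uses only the standard library and assumes the common convention that AlphaFold2 PDB outputs store pLDDT in the B-factor column; it also assumes a single-chain file in fixed-column PDB format. Both function names are hypothetical, and boundary values (e.g., exactly 90) are assigned to the higher band by choice.

```python
def plddt_band(score):
    """Map a pLDDT value (0-100) to the confidence bands of Table 1."""
    if not 0.0 <= score <= 100.0:
        raise ValueError(f"pLDDT must lie in [0, 100], got {score}")
    if score >= 90.0:
        return "Very high"
    if score >= 70.0:
        return "Confident"
    if score >= 50.0:
        return "Low"
    return "Very low"


def ca_plddt_from_pdb(pdb_text):
    """Read per-residue pLDDT from the B-factor column of CA atoms.

    Fixed PDB columns: atom name 13-16, residue number 23-26,
    B-factor 61-66. Residue numbers are used as keys, so this
    sketch is only safe for single-chain files.
    """
    scores = {}
    for line in pdb_text.splitlines():
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            scores[int(line[22:26])] = float(line[60:66])
    return scores


def band_summary(scores):
    """Count residues per confidence band."""
    counts = {}
    for value in scores.values():
        band = plddt_band(value)
        counts[band] = counts.get(band, 0) + 1
    return counts
```

Calling `band_summary(ca_plddt_from_pdb(open(path).read()))` on a model file gives a quick per-band residue count before any visual inspection.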

Table 2: PAE Matrix Interpretation Guide

| PAE Value Range | Structural Interpretation | Implication for Modeling |

|---|---|---|

| < 5 Å | Very high relative confidence | Rigid, well-defined spatial relationship. |

| 5 - 10 Å | Moderate confidence | Flexible but likely correct relative orientation. |

| 10 - 15 Å | Low confidence | Highly flexible or uncertain orientation. Consider alternative arrangements. |

| > 15 Å | Very low confidence | Essentially no informative spatial constraint between residues. |

Visualization of Metric Logic and Workflow

Title: Confidence Metric Generation in AF2/RoseTTAFold

Title: Integrated pLDDT and PAE Analysis Workflow

Table 3: Key Tools for Confidence Metric Analysis

| Tool/Resource | Function & Purpose | Relevance to pLDDT/PAE |

|---|---|---|

| AlphaFold2 ColabFold (https://colab.research.google.com/github/sokrypton/ColabFold) | Accessible pipeline for running AlphaFold2/RoseTTAFold. | Directly outputs pLDDT and PAE data alongside structures. |

| PDBsum (https://www.ebi.ac.uk/pdbsum/) or Mol* Viewer (https://molstar.org/) | 3D structure visualization. | Essential for coloring structures by pLDDT to visually assess confidence. |

| Matplotlib (Python) / ggplot2 (R) | Scientific plotting libraries. | Required for generating customized PAE matrix heatmaps and pLDDT plots. |

| Biopython | Python library for computational biology. | Used to parse pLDDT and PAE data from AlphaFold2 output files (.pdb B-factor column, .json files). |

| Phenix or REFMAC (CCP4) | Structure refinement and validation software. | Used in experimental validation pipelines to refine the reference structure against which the real lDDT is calculated for comparison with pLDDT. |

| PyMOL or ChimeraX (Scripting) | Advanced molecular graphics with scripting. | Allows creation of custom scripts to visualize regions filtered by pLDDT thresholds or to animate alternative conformations suggested by PAE. |

Critical Caveats and Limitations

- pLDDT is Not a Measure of "Correctness": A high pLDDT indicates the model is self-consistent and similar to training examples, not that it is biologically correct. It can be high for confidently wrong predictions if the target is dissimilar to the training set.

- Training Data Bias: Both metrics are predictions from models trained on the PDB. They reflect confidence relative to that distribution. Regions unseen in training (novel folds, disordered regions) will have low scores, but this does not always mean they are incorrectly modeled, only that they are uncertain.

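- PAE Is an Estimate, Not a Measurement: The PAE head is trained against experimental structures, so each value is the model's estimate of the error relative to the true structure. For a new target, that estimate cannot be verified without experimental data, and it can be miscalibrated for targets unlike the training set.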

- Dynamics and Ensembles: A single PAE matrix cannot represent conformational heterogeneity. A high inter-domain PAE might indicate flexibility, not a single incorrect orientation. Complementary methods like molecular dynamics are needed.

- Ligands and Post-Translational Modifications (PTMs): These metrics are primarily for protein backbone and do not reliably assess the confidence of ligand, ion, or PTM placement, which are often modeled with much lower fidelity.

In conclusion, pLDDT and PAE are indispensable tools for interpreting AI-predicted structures within modern structural biology research. However, their effective use requires a nuanced understanding of their derivation from specific training methodologies and their inherent limitations as predictors, not arbiters, of ground-truth biological structure.

The advent of deep learning-based protein structure prediction tools, notably AlphaFold2 and RoseTTAFold, represents a paradigm shift in structural biology. Their accuracy in predicting single-chain, globular protein domains from the Protein Data Bank (PDB) has been groundbreaking. However, the performance of these models is intrinsically linked to the composition and biases of their training data. This technical guide analyzes three persistent failure modes—intrinsically disordered regions (IDRs), multimers, and novel folds—through the lens of training data and methodological constraints. Understanding these limitations is critical for researchers and drug developers who rely on these tools for target identification and mechanistic studies.

Disordered Regions: The Challenge of Dynamic Unstructure

Intrinsically Disordered Regions (IDRs) lack a stable three-dimensional structure under physiological conditions, yet are functionally crucial in signaling, regulation, and phase separation. AlphaFold2 and RoseTTAFold are trained predominantly on static, ordered structures from the PDB, leading to systematic over-prediction of order.

Quantitative Analysis of IDR Prediction

AlphaFold2 outputs a per-residue confidence metric, the predicted Local Distance Difference Test (pLDDT). Low pLDDT scores (typically <70) are correlated with disorder. However, benchmark studies reveal limitations.

Table 1: Performance Metrics on Disordered Regions

| Benchmark Dataset | # of Proteins/Regions | AlphaFold2 Average pLDDT (Disordered Region) | AlphaFold2 Average pLDDT (Ordered Region) | False Order Prediction Rate* |

|---|---|---|---|---|

| DisProt (Curated IDRs) | 1,532 | 58.3 | 84.7 | 22% |

| Missing Electron Density (PXD) | 4,210 | 51.1 | 86.2 | 31% |

| MoRF (Molecular Recognition Features) | 875 | 65.4 | 82.9 | 38% |

*False Order Prediction Rate: Percentage of residues experimentally defined as disordered but predicted with pLDDT > 70. (Data synthesized from recent literature, 2023-2024).
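The False Order Prediction Rate defined in the footnote reduces to a simple count. The sketch below follows that definition directly; the function name and the toy inputs are illustrative, and the per-residue disorder labels are assumed to come from an experimental annotation such as those in the benchmark datasets listed in the table.

```python
def false_order_rate(plddt, disordered, threshold=70.0):
    """Percentage of experimentally disordered residues with pLDDT > threshold.

    `plddt` is a list of per-residue scores; `disordered` is a parallel
    list of booleans (True = experimentally disordered).
    """
    if len(plddt) != len(disordered):
        raise ValueError("plddt and disordered must have the same length")
    scores = [p for p, is_dis in zip(plddt, disordered) if is_dis]
    if not scores:
        raise ValueError("no disordered residues to evaluate")
    return 100.0 * sum(p > threshold for p in scores) / len(scores)


# Toy example: three disordered residues, one of them predicted above 70.
rate = false_order_rate(
    [95.0, 45.0, 72.0, 60.0, 88.0],
    [False, True, True, True, False],
)
print(f"{rate:.1f}%")
```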

Experimental Protocol for Validating IDR Predictions

- Objective: To experimentally validate the predicted disorder/order of a protein region.

- Method 1: Nuclear Magnetic Resonance (NMR) Spectroscopy

- Procedure: Express and purify ¹⁵N-labeled protein. Acquire ¹H-¹⁵N Heteronuclear Single Quantum Coherence (HSQC) spectra. Disordered regions exhibit low chemical shift dispersion (peak clustering between 7.8-8.3 ppm in the ¹H dimension) and narrow signal linewidths.

- Method 2: Small-Angle X-ray Scattering (SAXS)

- Procedure: Collect scattering data of the protein in solution. Compute the Kratky plot. A curve that plateaus or keeps rising at high q indicates disorder, while a bell-shaped curve with a well-defined peak suggests a globular, ordered structure. Fit the data to ensemble models to assess conformational heterogeneity.

- Method 3: Protease Sensitivity Assay

- Procedure: Incubate the purified protein with a non-specific protease (e.g., proteinase K). Sample aliquots at time intervals and analyze by SDS-PAGE. Disordered regions are digested rapidly compared to structured domains.

Multimers: Beyond Single-Chain Logic

While AlphaFold-Multimer and subsequent updates address complexes, performance is non-uniform. Accuracy degrades for heteromeric vs. homomeric complexes, transient interactions, and complexes with significant conformational change upon binding.

Quantitative Analysis of Multimer Prediction

Table 2: Multimer Prediction Benchmark (Recent Assessments)

| Complex Type | Test Set Size (Pairs/Complexes) | DockQ Score (Average)* | Success Rate (DockQ ≥ 0.23) | Notable Challenge |

|---|---|---|---|---|

| Homodimers (PDB) | 1,204 | 0.65 | 78% | Interface symmetry enforcement |

| Heterodimers (Transient) | 337 | 0.41 | 45% | Weak, allosteric interfaces |

| Large Complexes (>4 chains) | 89 | 0.38 | 31% | Symmetry & long-range effects |

| Antibody-Antigen | 253 | 0.52 | 62% | CDR loop flexibility |

*DockQ is a composite score measuring interface accuracy (0-1 scale). (Compiled from CASP15, recent preprints, and AlphaFold-Multimer v2.3 documentation).
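To make the composite score concrete, the sketch below combines the three interface measures into DockQ and applies the 0.23 success cutoff used in Table 2. The formula and the class boundaries (0.23, 0.49, 0.80) are not given in this article; they are stated here as the commonly published DockQ definition and should be checked against the DockQ documentation before reuse. Function names are hypothetical.

```python
def dockq(fnat, irmsd, lrmsd):
    """Composite interface score on a 0-1 scale.

    fnat  : fraction of native contacts recovered (0-1)
    irmsd : interface RMSD in Angstroms
    lrmsd : ligand RMSD in Angstroms
    Scaling constants 1.5 and 8.5 follow the published DockQ definition
    (assumed here, not taken from this article).
    """
    if not 0.0 <= fnat <= 1.0:
        raise ValueError("fnat must lie in [0, 1]")
    scaled_irmsd = 1.0 / (1.0 + (irmsd / 1.5) ** 2)
    scaled_lrmsd = 1.0 / (1.0 + (lrmsd / 8.5) ** 2)
    return (fnat + scaled_irmsd + scaled_lrmsd) / 3.0


def dockq_class(score):
    """Quality class using the conventional DockQ boundaries."""
    if score >= 0.80:
        return "High"
    if score >= 0.49:
        return "Medium"
    if score >= 0.23:
        return "Acceptable"
    return "Incorrect"


def success_rate(scores, threshold=0.23):
    """Percentage of models at or above the success cutoff."""
    return 100.0 * sum(s >= threshold for s in scores) / len(scores)


# Hypothetical model: half the native contacts, 1.5 A iRMSD, 8.5 A LRMSD.
example = dockq(0.5, 1.5, 8.5)
print(round(example, 2), dockq_class(example))
```

Note that a benchmark's average DockQ and its success rate answer different questions: the average is pulled down by a tail of failed models, while the success rate only counts how many cleared the cutoff.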

Experimental Protocol for Validating Predicted Complexes

- Objective: Validate the structure and stoichiometry of a predicted protein-protein complex.

- Method 1: Size-Exclusion Chromatography with Multi-Angle Light Scattering (SEC-MALS)

- Procedure: Purify individual components and the co-purified complex. Inject onto an SEC column coupled to UV, refractive index (RI), and MALS detectors. MALS analysis directly calculates the absolute molecular weight of the eluting species in solution, confirming complex stoichiometry independent of shape.

- Method 2: Cross-Linking Mass Spectrometry (XL-MS)

- Procedure: Incubate the complex with a lysine-reactive cross-linker (e.g., BS3). Digest with trypsin, analyze by LC-MS/MS. Identify cross-linked peptides to derive distance restraints (Cα-Cα upper bound of ~24-30 Å). Use these restraints to validate or filter computational models.

- Method 3: Surface Plasmon Resonance (SPR) or Bio-Layer Interferometry (BLI)

- Procedure: Immobilize one partner on a sensor chip/dipstick. Measure binding kinetics (ka, kd) and affinity (KD) of the flowing analyte partner. This confirms interaction and provides biophysical parameters consistent (or not) with the predicted interface.
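Of the three methods, the XL-MS restraints lend themselves most directly to automated model filtering. The sketch below checks which cross-links a predicted complex satisfies, using the 30 Å Cα-Cα upper bound quoted above as the default. The data layout (a dictionary keyed by chain and residue number) and the function name are assumptions made for illustration.

```python
import math


def crosslink_satisfaction(ca_coords, crosslinks, max_distance=30.0):
    """Score a model against cross-link distance restraints.

    ca_coords  : dict mapping (chain, residue_number) -> (x, y, z) of the CA atom
    crosslinks : list of ((chain, res), (chain, res)) pairs from XL-MS
    Returns the fraction of satisfied restraints and the violated pairs.
    """
    if not crosslinks:
        raise ValueError("no cross-links supplied")
    violated = []
    for site_a, site_b in crosslinks:
        distance = math.dist(ca_coords[site_a], ca_coords[site_b])
        if distance > max_distance:
            violated.append((site_a, site_b, round(distance, 1)))
    satisfied = len(crosslinks) - len(violated)
    return satisfied / len(crosslinks), violated


# Toy model with two inter-chain cross-links, one of which is too long.
coords = {("A", 10): (0.0, 0.0, 0.0),
          ("B", 25): (20.0, 0.0, 0.0),
          ("B", 40): (50.0, 0.0, 0.0)}
links = [(("A", 10), ("B", 25)), (("A", 10), ("B", 40))]
fraction, violations = crosslink_satisfaction(coords, links)
print(fraction, violations)
```

When several candidate models are available, ranking them by the satisfied fraction is a simple first filter; violated links between well-predicted domains may also point to conformational flexibility rather than a wrong model.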

Novel Folds: The Abyss of Sequence Divergence

"Novel folds" are structures not represented in the training set. AlphaFold2's Evoformer architecture relies heavily on co-evolutionary signals from multiple sequence alignments (MSAs). For orphan sequences or those with few homologs, performance collapses.

Quantitative Analysis of Novel Fold Prediction

Table 3: Performance on Sequences with Low MSA Depth

| MSA Depth (Effective Sequences) | Average TM-score* (vs. Experimental) | pLDDT (Global) | Comment |

|---|---|---|---|

| Neff > 100 (Rich) | 0.89 | 88.5 | Standard high-accuracy regime |