Deconstructing AlphaFold2: A Technical Deep Dive into the AI That Solved Protein Folding



Abstract

This article provides a comprehensive technical analysis of DeepMind's AlphaFold2 deep learning architecture, tailored for researchers, scientists, and drug development professionals. We first explore the foundational problem of protein folding and the core concepts behind the model's success. We then dissect the innovative Evoformer and structure module, explaining the methodological workflow from sequence to 3D coordinates. The guide addresses common challenges in interpretation, result refinement, and integrating predictions into experimental pipelines. Finally, we validate the model by comparing it to traditional methods, analyzing its performance on CASP benchmarks, and evaluating its limitations and real-world impact on structural biology and drug discovery.

The Protein Folding Problem & AlphaFold2's Core Paradigm Shift

Why Protein Structure Prediction Was a 'Grand Challenge' of Biology

Protein structure prediction—determining the three-dimensional (3D) atomic coordinates of a protein from its amino acid sequence—was a grand challenge in biology for over 50 years. Its difficulty stemmed from the astronomically vast conformational space a polypeptide chain could explore, as articulated by Cyrus Levinthal's paradox. The impact of solving this problem is foundational: protein structure dictates function, influencing nearly every biological process and therapeutic intervention. This whitepaper frames the resolution of this grand challenge within the context of the AlphaFold2 deep learning architecture, which marked a paradigm shift in computational biology.

The Core of the Challenge: Complexity and Conformational Space

The central obstacle was the protein folding problem. A protein's native state is a delicate balance of forces, including hydrophobic interactions, hydrogen bonding, van der Waals forces, and electrostatic interactions. The search space is intractable for brute-force computation.

Quantitative Scale of the Problem

Table 1: The Combinatorial Explosion of Protein Conformation

| Parameter | Value/Range | Implication for Prediction |

|---|---|---|

| Degrees of Freedom (per residue) | ~2-10 torsion angles | Exponential growth of possible conformations |

| Conformations for a 100-aa protein | ~10^100 (estimated) | Vastly exceeds number of atoms in universe |

| Typical folding time (in vivo) | Milliseconds to seconds | Levinthal's paradox: search is not random |

| Experimentally solved structures (PDB) | ~200,000 | Limited template coverage for ~200 million known sequences |
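The scale in Table 1 can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the choice of 10 discrete states per residue (which yields the table's ~10^100 figure for 100 residues) and the sampling rate of 10^13 conformations per second are assumptions made for this example, not measured values.

```python
def levinthal_search_time_years(n_residues, states_per_residue, samples_per_second):
    """Count conformations and the years needed to visit each one once."""
    # Python integers are arbitrary precision, so the count is exact.
    conformations = states_per_residue ** n_residues
    seconds_per_year = 365.25 * 24 * 3600
    years = conformations / samples_per_second / seconds_per_year
    return conformations, years


# Assumed values: 100 residues, 10 states each, 1e13 samples per second.
count, years = levinthal_search_time_years(100, 10, 1e13)
print(f"{count:.1e} conformations, about {years:.1e} years to enumerate")
```

Even with a far more generous sampling rate, the exhaustive search time remains many orders of magnitude longer than the millisecond-to-second folding times observed in vivo, which is the core of Levinthal's argument.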

Historical Approaches and Their Limitations

Early methodologies fell into three categories, each with significant constraints.

3.1 Comparative (Homology) Modeling

- Protocol: Use a known structure of a homologous protein as a template. Align target sequence to template. Model conserved regions, then loops and side chains. Energy minimization.

- Limitation: Completely fails without a homologous template (>30% sequence identity typically required).

3.2 Ab Initio (Physics-Based) Folding

- Protocol: Simulate folding dynamics using molecular force fields (e.g., CHARMM, AMBER) via molecular dynamics (MD). Sample conformational space using supercomputers or specialized hardware (e.g., Anton).

- Limitation: Computationally prohibitive for all but smallest proteins; force field inaccuracies limit predictive accuracy.

3.3 Fragment Assembly

- Protocol (as in Rosetta): Break query sequence into short fragments (3-9 aa). Retrieve frequently occurring structures for these fragments from PDB. Assemble fragments via Monte Carlo simulation, scoring with a knowledge-based potential.

- Limitation: Heavily reliant on fragment library quality; struggles with novel folds.

AlphaFold2 (AF2), developed by DeepMind, transformed the field by treating structure prediction as an end-to-end deep learning problem, integrating physical and geometric constraints directly into the network.

4.1 Core Methodology & Workflow

Table 2: AlphaFold2 Experimental Pipeline Summary

| Stage | Input | Process | Output |

|---|---|---|---|

| 1. Data Preprocessing | Target Amino Acid Sequence | MSAs generated via HHblits/Jackhmmer. Pairwise features from MSA. | Multiple Sequence Alignments (MSAs), Template structures (if available). |

| 2. Evoformer (Core Module) | MSAs, Pairwise Features | 48 blocks of attention-based neural networks. Performs information exchange between MSA and pairwise representation. | Refined MSA and pairwise representation containing evolutionary & geometric constraints. |

| 3. Structure Module | Processed Pairwise Representations | Iteratively generates 3D atomic coordinates (backbone + sidechains). Uses invariant point attention and rigid-body geometry. | Predicted 3D coordinates for all heavy atoms. |

| 4. Output & Scoring | 3D Coordinates | Loss functions: Frame Aligned Point Error (FAPE), Distogram loss. Confidence metric: pLDDT per residue. | Final atomic model, per-residue and per-model confidence scores. |

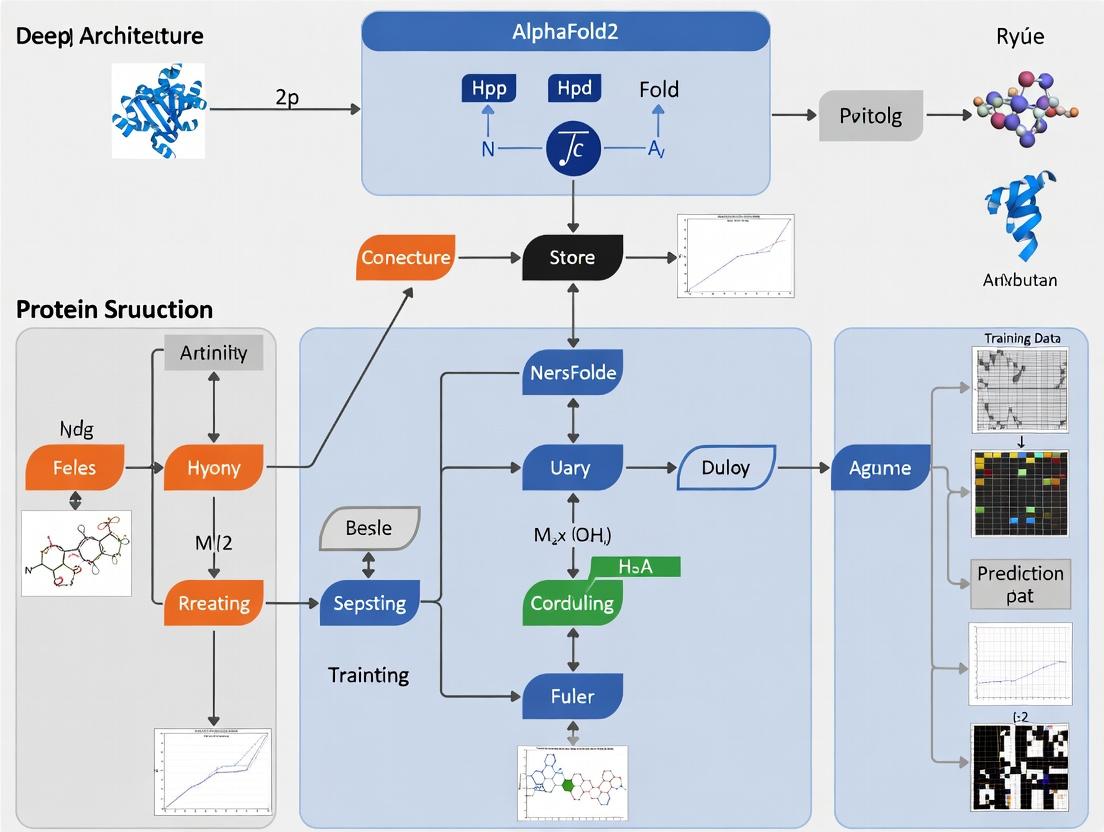

AlphaFold2 End-to-End Prediction Workflow

4.2 Key Architectural Innovations

- Evoformer: A novel transformer architecture that reasons over spatial and evolutionary relationships simultaneously, allowing distant homologs to inform geometric constraints.

- Invariant Point Attention (IPA): Operates on rigid bodies (protein residues) in 3D space, ensuring predictions are rotationally and translationally invariant—a critical property for structural biology.

- End-to-End Differentiable Learning: The entire system, from input sequence to 3D coordinates, is trained jointly, allowing gradients to flow through the structural geometry.

AlphaFold2 Core Neural Network Architecture

Table 3: Essential Research Reagents & Solutions for Protein Structure Prediction & Validation

| Item / Resource | Provider / Example | Function in Research |

|---|---|---|

| Cloning & Expression | | |

| cDNA Libraries & Vectors | Addgene, Thermo Fisher | Source of gene sequence; protein overexpression. |

| Expression Systems (E.coli, insect, mammalian cells) | Common lab protocols | Produce mg quantities of pure, folded protein. |

| Purification & Characterization | | |

| Affinity Chromatography Resins (Ni-NTA, GST) | Cytiva, Thermo Fisher | Purify recombinant fusion-tagged proteins. |

| Size Exclusion Chromatography (SEC) Systems | Agilent, Wyatt Technology | Polish purification; assess oligomeric state. |

| Circular Dichroism (CD) Spectrometer | JASCO | Assess secondary structure content and folding. |

| Surface Plasmon Resonance (SPR) | Cytiva Biacore | Measure binding kinetics/affinity for validation. |

| Experimental Structure Determination (Gold Standard) | | |

| X-ray Crystallography Kits (Crystallization screens) | Hampton Research, Molecular Dimensions | Grow protein crystals for diffraction. |

| Cryo-Electron Microscopy (Cryo-EM) Grids & Vitrobot | Thermo Fisher (FEI) | Flash-freeze samples for high-resolution EM. |

| NMR Isotope-Labeled Media | Cambridge Isotope Labs | Produce ^15N/^13C-labeled proteins for NMR. |

| Computational & Validation | | |

| AlphaFold2 Colab Notebook / Local Installation | DeepMind, Colab | Run AF2 predictions on custom sequences. |

| Rosetta Software Suite | University of Washington | Comparative modeling, ab initio, design. |

| Molecular Dynamics Software (GROMACS, AMBER) | Open Source, D. A. Case Lab | Simulate dynamics and refine models. |

| Validation Servers (MolProbity, PDB Validation) | Duke University, wwPDB | Check stereochemical quality of predicted models. |

Experimental Validation Protocol: Benchmarking AF2

The Critical Assessment of protein Structure Prediction (CASP) experiments serve as the gold-standard blind test.

CASP14 Experimental Protocol:

- Target Selection: Organizers release amino acid sequences of proteins with recently solved but unpublished structures.

- Prediction Phase: Teams (including DeepMind's AF2) submit 3D coordinate models for each target within a strict deadline.

- Assessment Phase: Independent assessors compare predictions to experimental structures using metrics:

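- GDT_TS (Global Distance Test, Total Score): The average, over distance cutoffs of 1 Å, 2 Å, 4 Å, and 8 Å, of the percentage of Cα atoms that lie within the cutoff of their experimental positions after superposition. Primary metric for overall accuracy.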

- lDDT (local Distance Difference Test): Local superposition-free measure of per-residue accuracy.

- Analysis: Predictions are ranked. AF2's median GDT_TS of ~92 for easy targets and high scores across all difficulty levels demonstrated solution of the grand challenge.
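To make the GDT_TS metric concrete, here is a minimal sketch. It assumes the predicted and experimental Cα coordinates are already superposed and matched residue by residue; the official assessment additionally searches over many superpositions to maximize each cutoff's coverage, which this example does not attempt. The function name `gdt_ts` is chosen for illustration.

```python
import math


def gdt_ts(predicted_ca, experimental_ca, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Average percentage of C-alpha atoms within each cutoff (angstroms).

    Simplification: both coordinate lists are assumed to be superposed
    already and to list the same residues in the same order.
    """
    if not predicted_ca or len(predicted_ca) != len(experimental_ca):
        raise ValueError("coordinate lists must be non-empty and equal length")
    distances = [math.dist(p, q) for p, q in zip(predicted_ca, experimental_ca)]
    fractions = [
        sum(d <= cutoff for d in distances) / len(distances) for cutoff in cutoffs
    ]
    return 100.0 * sum(fractions) / len(cutoffs)


# Two residues: one placed exactly, one displaced by 3 angstroms.
experimental = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0)]
predicted = [(0.0, 0.0, 0.0), (3.8, 3.0, 0.0)]
print(gdt_ts(predicted, experimental))
```

In this toy case the displaced residue passes only the 4 Å and 8 Å cutoffs, so the score falls between a perfect 100 and the 50 that would result if it missed every cutoff.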

Table 4: CASP14 AlphaFold2 Performance Data (Representative)

| Target Difficulty | Median GDT_TS (AF2) | Median GDT_TS (Next Best) | Key Implication |

|---|---|---|---|

| Free Modeling (Hard) | ~87 | ~75 | Unprecedented accuracy on novel folds. |

| Template-Based (Medium) | ~90 | ~85 | Superior to best homology models. |

| Easy | ~92 | ~90 | High accuracy, often rivaling experiment. |

| Overall | ~92.4 GDT_TS | Variable | Problem effectively solved for single chains. |

AlphaFold2's success in solving the protein structure prediction grand challenge is a testament to the power of integrated deep learning architectures that combine evolutionary, physical, and geometric reasoning. It has shifted the research landscape from prediction per se to applications: rapidly modeling proteomes, elucidating the function of uncharacterized proteins, predicting mutational effects, and accelerating structure-based drug discovery for novel targets. The remaining frontiers—including accurate prediction of conformational dynamics, protein-protein complexes with multimeric specificity, and the effects of post-translational modifications—constitute the next generation of challenges now being actively pursued.

The revolutionary success of AlphaFold2 in predicting protein three-dimensional structures from amino acid sequences marks the convergence of two historically distinct fields: empirical molecular biology and abstract computational learning. This whitepaper frames this breakthrough within the continuous thread from Anfinsen's thermodynamic principle to modern deep learning architectures.

Historical Foundation: Anfinsen's Dogma

In 1972, Christian Anfinsen was awarded the Nobel Prize in Chemistry for his work on ribonuclease, which led to the postulate now known as Anfinsen's Dogma. It states that a protein's native, functional structure is the one in which its Gibbs free energy is globally minimized, and that this structure is determined solely by its amino acid sequence.

Core Experiment: Ribonuclease A Denaturation-Renaturation

- Objective: To demonstrate that sequence alone encodes the information necessary for folding.

- Protocol:

- Denaturation: Native bovine pancreatic ribonuclease A (RNase A) was treated with 8M urea and β-mercaptoethanol to reduce disulfide bonds.

- Controlled Renaturation: Denaturants and reductants were slowly removed via dialysis, allowing the polypeptide chain to refold and its disulfide bonds to reform.

- Functional Assay: The recovered structure regained nearly 100% of its enzymatic activity, confirming correct folding.

- Control (Non-Native Scrambling): Re-oxidation of the reduced protein in 8M urea, without renaturation guidance, yielded a scrambled, inactive protein with incorrect disulfide pairings.

Quantitative Data Summary:

Table 1: Key Results from Anfinsen's RNase A Experiment

| Experimental Condition | Catalytic Activity Recovery | Structural State | Key Conclusion |

|---|---|---|---|

| Native RNase A (Control) | 100% | Correctly folded, native disulfide bonds | Baseline for native function. |

| After Reduction & Denaturation | ~0% | Unfolded, reduced chain | Loss of structure abolishes function. |

| Controlled Renaturation | 95-100% | Correctly folded, native disulfide bonds | Sequence dictates the recovery of native state. |

| Scrambled Re-oxidation | <1% | Misfolded, incorrect disulfide bonds | Kinetic trapping occurs without folding pathway. |
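The roughly 1% activity of the scrambled control has a simple combinatorial reading. RNase A contains 8 cysteines that form 4 disulfide bonds, so if pairing were random, only one of all possible complete pairings would be native. The sketch below counts those pairings; treating every pairing as equally likely is a simplifying assumption for illustration.

```python
def disulfide_pairings(n_cysteines):
    """Number of ways to pair all cysteines into disulfides: (n - 1)!!."""
    if n_cysteines < 0 or n_cysteines % 2:
        raise ValueError("a complete pairing needs an even, non-negative count")
    count = 1
    # The first cysteine has n-1 partners, the next unpaired one n-3, and so on.
    for partners in range(n_cysteines - 1, 0, -2):
        count *= partners
    return count


pairings = disulfide_pairings(8)  # RNase A: 8 cysteines, 4 disulfide bonds
print(pairings, f"pairings; random chance of native pairing: {100 / pairings:.2f}%")
```

With 8 cysteines there are 7 × 5 × 3 × 1 = 105 pairings, so a randomly re-oxidized population is expected to contain just under 1% correctly paired molecules, consistent with the scrambled row of the table.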

The Inferential Bridge: From Principle to Prediction

Anfinsen's Dogma provided the theoretical basis for computational protein structure prediction: find the sequence's global free energy minimum. This framed the problem as a search and optimization task over conformational space.

Core Computational Challenge: The Levinthal paradox highlighted that a brute-force search of all possible conformations is astronomically slow. The "protein folding problem" required efficiently approximating the energy landscape.

Table 2: Evolution of Computational Protein Structure Prediction Approaches

| Era | Dominant Approach | Core Methodology | Key Limitation |

|---|---|---|---|

| 1970s-1990s | Homology Modeling | Use of evolutionary related templates. | Fails for novel folds without templates. |

| 1990s-2010s | Ab Initio & Physical Modeling | Molecular dynamics, Monte Carlo sampling on physics-based force fields. | Computationally intractable; inaccurate energy functions. |

| 2000s-2010s | Fragment Assembly & Co-evolution | Rosetta; coupling analysis from multiple sequence alignments (MSAs). | Relies on depth/quality of MSAs; limited accuracy for hard targets. |

| 2018-Present | End-to-End Deep Learning | AlphaFold2: Direct geometric inference via attention-based networks. | Training data dependency; conformational dynamics less accessible. |

AlphaFold2: The Deep Learning Realization

AlphaFold2 (AF2) represents a paradigm shift. Instead of simulating physical folding, it learns the implicit mapping from sequence to structure directly from the Protein Data Bank (PDB), effectively internalizing the consequences of Anfinsen's Dogma.

Architecture as an Experimental Protocol

AF2's "inference" can be viewed as an in silico experimental protocol:

Input Preparation (Sequence Embedding):

- MSA Construction: Search sequence databases (e.g., UniRef, BFD) to build a deep Multiple Sequence Alignment, yielding evolutionary constraints.

- Template Search: Query PDB for potential structural homologs.

- Reagent: Evoformer (the core AF2 module processing MSA and pair representations).

Information Processing (The Folding Cycle):

- Iterative Refinement: The Evoformer and Structure Module engage in multiple rounds of information exchange, refining a predicted 3D structure.

- Mechanism: Self-attention and cross-attention mechanisms propagate constraints between residues, analogous to simulating long-range interactions crucial for folding.

Output & Validation (Structure Determination):

- Prediction: 3D coordinates for all heavy atoms, with per-residue confidence metrics (pLDDT).

- Validation: Self-consistency checks via predicted aligned error (PAE) for inter-residue distance confidence.
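As a practical illustration of the confidence output, the sketch below reads per-residue pLDDT from a predicted model. It relies on the fact that AlphaFold writes pLDDT into the B-factor column of its PDB files, and it uses the confidence bands shown in the AlphaFold Protein Structure Database. The function names and the default threshold of 70 are choices made for this example.

```python
def ca_plddt_from_pdb(pdb_text):
    """Return [(residue_number, pLDDT)] from the B-factor field of CA atoms."""
    scores = []
    for line in pdb_text.splitlines():
        # Fixed-width PDB columns: atom name 13-16, resSeq 23-26, B-factor 61-66.
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            scores.append((int(line[22:26]), float(line[60:66])))
    return scores


def confidence_band(plddt):
    """Map a pLDDT value to the AlphaFold Database confidence bands."""
    if plddt > 90:
        return "very high"
    if plddt > 70:
        return "confident"
    if plddt > 50:
        return "low"
    return "very low"


def low_confidence_residues(pdb_text, threshold=70.0):
    """Residue numbers whose pLDDT falls below the (assumed) threshold."""
    return [res for res, score in ca_plddt_from_pdb(pdb_text) if score < threshold]
```

A typical use is to call `low_confidence_residues` on a model file's contents before interpreting loops or termini, since stretches of low pLDDT often correspond to flexible or disordered regions.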

AlphaFold2 Inference Pipeline

The Scientist's Toolkit: Essential Reagents for AF2 Research

Table 3: Key Research Reagent Solutions for AlphaFold2-Based Research

| Item/Component | Function/Description | Relevance to Experiment |

|---|---|---|

| Multiple Sequence Alignment (MSA) | Evolutionary profile of the target sequence, generated from databases (UniRef90, BFD, MGnify). | Primary source of evolutionary constraints for the Evoformer. |

| Structural Templates | Potential homologous structures from the PDB. | Provides initial geometric priors, though AF2 functions without them. |

| Evoformer Module | Neural network block with self/cross-attention. | Processes MSA and residue-pair representations to infer geometric relationships. |

| Structure Module | Neural network that generates 3D atomic coordinates (torsion angles). | Translates abstract representations into explicit 3D structures via rigid-body frames. |

| pLDDT (Predicted LDDT) | Per-residue confidence score (0-100). | Indicates local model confidence; lower scores often correlate with disorder. |

| Predicted Aligned Error (PAE) | 2D matrix estimating positional error between residue pairs. | Assesses global fold confidence and domain packing reliability. |


AlphaFold2 does not violate Anfinsen's Dogma but provides a data-driven, statistical approximation of its outcome. It bypasses explicit simulation of the folding pathway by learning the direct relationship between sequence (the cause) and the energetically favorable native state (the effect) from thousands of solved examples. This represents a monumental shift from simulating physics to learning from patterns, ultimately delivering a practical tool that operationalizes Anfinsen's fundamental insight for modern biological discovery and therapeutic design.

Within the broader thesis of deconstructing the AlphaFold2 (AF2) deep learning architecture, understanding its three core, co-designed pillars is paramount. This in-depth technical guide details the Evoformer block, the Structure Module, and the implications of their end-to-end training paradigm, which together enabled atomic-level protein structure prediction.

The Evoformer: A Novel Evolutionary Representation Transformer

The Evoformer is the heart of AF2's reasoning engine. It is a specialized transformer architecture that jointly processes multiple sequence alignments (MSAs) and pair representations, enabling co-evolutionary analysis at scale.

Core Mechanism & Data Flow

The Evoformer operates on two primary representations:

- MSA Representation (`m × s × c_m`): A 2D array for `m` sequences (rows) of length `s` (columns), with `c_m` channels.

- Pair Representation (`s × s × c_z`): A 2D array for all pairs of residues (`s × s`), with `c_z` channels encoding pairwise relationships.

These representations are updated iteratively through 48 stacked Evoformer blocks via two primary communication pathways:

- MSA → Pair Update: Extracts pairwise information from the MSA.

- Pair → MSA Update: Informs the MSA with contextual pairwise constraints.
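The MSA → Pair pathway can be illustrated with an outer product mean, the operation AlphaFold2 uses for this update. The sketch below is a simplified stand-in: it works on plain nested lists and omits the learned linear projections and layer normalization that surround the operation in the real network.

```python
def outer_product_mean(msa):
    """Average, over sequences, the outer product of two residue feature vectors.

    msa[s][i] is the feature vector of residue i in sequence s. The result
    pair[i][j] is the flattened mean outer product (length c * c).
    """
    n_seq, n_res = len(msa), len(msa[0])
    pair = []
    for i in range(n_res):
        row = []
        for j in range(n_res):
            total = None
            for s in range(n_seq):
                outer = [a * b for a in msa[s][i] for b in msa[s][j]]
                total = outer if total is None else [x + y for x, y in zip(total, outer)]
            row.append([x / n_seq for x in total])
        pair.append(row)
    return pair


# Two sequences, two residues, one channel per residue.
toy_msa = [[[1.0], [2.0]], [[3.0], [4.0]]]
print(outer_product_mean(toy_msa))
```

Because the average runs over aligned sequences, columns whose features vary together across the alignment produce a distinctive pair signal, which is how co-variation in the MSA reaches the pair representation.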

Key Innovations

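- Triangular Updates: Novel operations on the pair representation that treat residue pairs as edges of a graph and update each edge (i, j) using the two other edges of every triangle (i, j, k), which encourages geometrically consistent pairwise relationships. They comprise two triangular multiplicative updates ("outgoing" and "incoming" edges) and two triangular self-attention layers (around the starting and the ending node).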

- Row- and Column-wise Gated Self-Attention: Applied to the MSA representation, allowing information flow across sequences (rows) and along the protein sequence (columns).
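The core contraction of the "outgoing" and "incoming" multiplicative updates can be sketched as follows. This is a single-channel simplification: the real layers apply gating, layer normalization, and learned projections around the sum, all of which are omitted here, and the function names are illustrative.

```python
def triangle_multiply_outgoing(a, b):
    """update[i][j] = sum over k of a[i][k] * b[j][k] (edges leaving i and j)."""
    n = len(a)
    return [
        [sum(a[i][k] * b[j][k] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def triangle_multiply_incoming(a, b):
    """update[i][j] = sum over k of a[k][i] * b[k][j] (edges arriving at i and j)."""
    n = len(a)
    return [
        [sum(a[k][i] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(triangle_multiply_outgoing(a, b))
print(triangle_multiply_incoming(a, b))
```

In both variants the edge (i, j) is updated from the two other edges of every triangle that includes a third residue k, which is what lets the pair representation enforce consistency among residue triplets.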

Diagram: Information Flow in a Single Evoformer Block

The Structure Module: From Representations to 3D Coordinates

The Structure Module is a geometry-aware, iterative module that translates the refined pair and MSA representations from the Evoformer into accurate 3D atomic coordinates, specifically backbone and side-chain atoms.

Invariant Point Attention (IPA)

The central innovation is Invariant Point Attention, an attention mechanism whose output is invariant to global rotations and translations of the structure (SE(3) transformations).

- Input: A backbone frame (rotation & translation) for each residue and associated single representations.

- Process: It attends over points in 3D space derived from these frames, while simultaneously performing attention on the associated sequence features. This ensures the final structure is informed by both geometric relationships and evolutionary evidence.

- Output: Updated rotations, translations, and single representations.
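The invariance property can be checked numerically. The sketch below covers only the geometric ingredient of IPA: points defined in each residue's local frame are mapped to global coordinates, and the squared distance between them enters the attention logits. Applying the same rigid motion to every frame leaves that distance unchanged. Frames are represented as (rotation matrix, translation) tuples, and all helper names are assumptions of this example.

```python
import math


def rot_z(angle):
    """Rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matvec(m, v):
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


def to_global(frame, local_point):
    """Map a point from a residue's local frame to global coordinates."""
    rotation, translation = frame
    rotated = matvec(rotation, local_point)
    return [rotated[k] + translation[k] for k in range(3)]


def point_distance_sq(frame_i, query_point, frame_j, key_point):
    """Squared distance between residue i's query point and residue j's key point."""
    return math.dist(to_global(frame_i, query_point), to_global(frame_j, key_point)) ** 2


def compose(global_frame, frame):
    """Apply a global rigid motion to a residue frame."""
    g_rot, g_trans = global_frame
    rotation, translation = frame
    moved = matvec(g_rot, translation)
    return matmul(g_rot, rotation), [moved[k] + g_trans[k] for k in range(3)]


frame_i = (rot_z(0.3), [1.0, 2.0, 3.0])
frame_j = (rot_z(-1.1), [4.0, 0.0, -2.0])
query, key = [0.5, 0.0, 1.0], [0.0, 1.5, -0.5]
motion = (rot_z(2.0), [10.0, -5.0, 7.0])

before = point_distance_sq(frame_i, query, frame_j, key)
after = point_distance_sq(compose(motion, frame_i), query, compose(motion, frame_j), key)
print(abs(before - after) < 1e-9)
```

For brevity the global motion here rotates only about the z axis, but the same argument holds for any rotation, because rigid motions preserve distances between points.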

Iterative Refinement & Recycling

The Structure Module refines the structure iteratively: in the published AlphaFold2 model it applies 8 weight-shared layers, each updating the backbone frames produced by the previous one. Separately, the entire AF2 network employs a "recycling" strategy, in which its own outputs are embedded and fed back as input (3 recycling iterations by default) to stabilize predictions.

Diagram: Structure Module with Invariant Point Attention

End-to-End Differentiable Design

The unification of the Evoformer and Structure Module into a single, end-to-end differentiable model is the third pillar. This design allows gradient signals from physically meaningful structural losses (e.g., bond length accuracy) to flow back and train the entire network, including the evolutionary analysis steps in the Evoformer.

Key Loss Functions & Training Protocol

The network is trained to minimize a composite loss function calculated on the output of each recycling iteration and each invocation of the Structure Module.

Table 1: AlphaFold2 Composite Loss Function Components

| Loss Component | Target | Weight (Approx.) | Purpose |

|---|---|---|---|

| FAPE | Backbone atoms | 0.5 | Frame Aligned Point Error. The primary structural loss, measures distance error in local frames. SE(3)-invariant. |

| Distogram | Residue pairs | 0.3 | Cross-entropy loss on binned predicted distances between Cβ atoms (from pair representation). |

| pLDDT | Per-residue | 0.01 | Loss for predicted per-residue confidence (pLDDT). |

| TM-Score | Global | 0.01 | Loss for predicted TM-score (global fold confidence). |

| Auxiliary Physics | Bonds, angles | 0.05 | Penalizes violations in bond lengths, angles, and clash volumes (via Van der Waals potential). |
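To show what "distance error in local frames" means for the FAPE row, here is a minimal sketch. It follows the published form of the loss, with a 10 Å clamp and a 10 Å scale, but omits the small numerical epsilon and the separate backbone and side-chain variants. Frames are (rotation matrix, translation) tuples, and the function names are illustrative.

```python
import math


def to_local(frame, point):
    """Express a global point in a frame's local coordinates: R^T (x - t)."""
    rotation, translation = frame
    shifted = [point[k] - translation[k] for k in range(3)]
    return [sum(rotation[k][r] * shifted[k] for k in range(3)) for r in range(3)]


def fape(pred_frames, pred_atoms, true_frames, true_atoms, clamp=10.0, scale=10.0):
    """Mean clamped distance between atoms viewed from every residue frame."""
    total, count = 0.0, 0
    for pred_frame, true_frame in zip(pred_frames, true_frames):
        for pred_atom, true_atom in zip(pred_atoms, true_atoms):
            error = math.dist(
                to_local(pred_frame, pred_atom), to_local(true_frame, true_atom)
            )
            total += min(error, clamp)
            count += 1
    return total / (count * scale)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
true_frames = [(identity, [0.0, 0.0, 0.0])]
true_atoms = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
pred_atoms = [[0.0, 0.0, 0.0], [4.0, 0.0, 0.0]]  # second atom off by 3 angstroms
print(fape(true_frames, pred_atoms, true_frames, true_atoms))
```

Because every atom is compared in the local coordinates of each frame, moving the whole prediction rigidly (frames and atoms together) leaves the loss unchanged, which is the invariance noted in the table.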

Experimental Training Protocol Summary:

- Data: ~170,000 unique protein structures from the PDB, clustered at high sequence identity thresholds. Input features include MSAs from BFD/MGnify and template structures from PDB70.

- Procedure: The model is trained to predict the true structure from sequence and alignment data.

- Optimization: Using Adam optimizer with gradient clipping over ~1 week on 128 TPUv3 cores.

- Evaluation: Accuracy is measured on CASP14 targets (held-out during training) via global distance test (GDT_TS) and lDDT.

Diagram: End-to-End Training Workflow

The Scientist's Toolkit: Key Research Reagents & Materials

Table 2: Essential Computational & Data Resources for AlphaFold2-style Research

| Item / Solution | Function / Description | Example / Source |

|---|---|---|

| Multiple Sequence Alignment (MSA) Database | Provides evolutionary context by finding homologs for the input sequence. Critical for Evoformer. | BFD, MGnify, UniClust30, UniRef90. |

| Protein Structure Database | Source of ground truth data for training and template information during inference. | RCSB Protein Data Bank (PDB). |

| Template Search Database | Database of known structures for homology-based hints (optional for AF2 but used in training). | PDB70 (HH-suite formatted). |

| Hardware Accelerators | Specialized processors necessary for training and efficient inference of large transformer models. | Google TPUs (v3/v4) or NVIDIA GPUs (A100/V100). |

| Deep Learning Framework | Software library for building, training, and executing differentiable neural networks. | JAX (primary for AF2), PyTorch, TensorFlow. |

| Structure Evaluation Metrics | Tools to assess the accuracy of predicted protein structures against experimental ground truth. | lDDT, GDT_TS, TM-score, MolProbity (for clashes). |

| Molecular Visualization Software | Essential for inspecting and analyzing predicted 3D atomic coordinates. | PyMOL, ChimeraX, UCSF Chimera. |


Within the broader thesis on the AlphaFold2 deep learning architecture, its unprecedented success in protein structure prediction is fundamentally rooted in its sophisticated input representation. The system does not operate on raw amino acid sequences alone. Instead, it leverages three critical, information-dense inputs: Multiple Sequence Alignments (MSAs), templates from the Protein Data Bank (PDB), and distilled evolutionary information. This whitepaper provides an in-depth technical guide to these core inputs, detailing their generation, role, and integration within the AlphaFold2 pipeline.

The Triad of Input Information

Multiple Sequence Alignments (MSAs)

MSAs are the primary source of evolutionary information. An MSA for a target sequence is constructed by gathering homologous sequences from large genomic databases.

Generation Protocol:

- Target Sequence Submission: The primary amino acid sequence is used as a query.

- Homology Search: Conducted against large sequence databases (UniRef90, UniClust30) using iterative search tools.

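- Tool: The original AlphaFold2 data pipeline runs JackHMMER and HHblits for this step. MMseqs2 (Many-against-Many sequence searching), a fast, sensitive protein sequence searching and clustering suite, is the faster alternative used by ColabFold and other pipeline variants.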

- Method: The search is performed iteratively. First, the query is searched against the target database. Significant hits are then used as new queries for a second round of searching, expanding the homology net.

- Alignment Construction: Retrieved sequences are aligned to the query using fast, accurate alignment algorithms (e.g., HMMER) to build the final MSA.

- Filtering: Sequences with excessive gaps or those that are fragments may be filtered to improve signal quality.

Key Information Encoded: Co-evolutionary signals derived from correlated mutations across residues provide strong evidence for spatial proximity and structural constraints. These are processed into a "pair representation" by the Evoformer, the core neural network module of AlphaFold2.
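A classical way to quantify correlated mutations is the mutual information between two alignment columns. The sketch below is offered only as an illustration of the signal: AlphaFold2 does not compute mutual information explicitly but learns comparable dependencies inside the Evoformer. Gaps are treated as an ordinary symbol, which is a simplification.

```python
import math
from collections import Counter


def column_mutual_information(msa, i, j):
    """Mutual information, in bits, between alignment columns i and j."""
    n = len(msa)
    pair_counts = Counter((seq[i], seq[j]) for seq in msa)
    i_counts = Counter(seq[i] for seq in msa)
    j_counts = Counter(seq[j] for seq in msa)
    mi = 0.0
    for (a, b), count in pair_counts.items():
        p_ab = count / n
        # p_ab / (p_a * p_b), written with raw counts to avoid extra divisions.
        ratio = count * n / (i_counts[a] * j_counts[b])
        mi += p_ab * math.log2(ratio)
    return mi


coupled = ["AK", "AK", "DE", "DE"]      # column 1 always follows column 0
independent = ["AK", "AE", "DK", "DE"]  # every combination appears once
print(column_mutual_information(coupled, 0, 1))
print(column_mutual_information(independent, 0, 1))
```

High mutual information between two positions suggests that substitutions at one site are compensated at the other, which is the kind of evidence for spatial proximity that the pair representation encodes.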

Templates

Templates are experimentally solved protein structures (from the PDB) that share significant fold similarity with the target sequence.

Generation Protocol:

- Search Database: The target sequence is searched against a database of known protein structures (e.g., PDB70) using sequence-based and profile-based homology detection methods.

- Tool: HHsearch is commonly used. It builds a profile hidden Markov model (HMM) from the MSA of the target and compares it to HMMs of proteins with known structures.

- Hit Selection: Templates are selected based on high probability scores and sequence identity above a threshold (often ~20-50%). Care is taken to avoid over-reliance on templates with low confidence or those that might bias the model.

- Feature Extraction: For each selected template, features such as backbone atom positions (as distance and angle restraints), per-residue and pairwise confidence scores (template-derived Distance Map Error or pLDDT), and sequence alignment information are extracted.

Role in AlphaFold2: The template features are injected into the initial pair representation, providing a strong geometric prior that guides the folding process, especially for targets with clear evolutionary relatives.

Evolutionary Information (Distillations)

Beyond raw MSAs, further distilled statistical information is computed to summarize evolutionary constraints.

Key Components:

- Position-Specific Scoring Matrix (PSSM): Summarizes the likelihood of each amino acid at each position in the alignment.

- Sequence Profile: The frequency of each amino acid at each position in the MSA.

- De Novo Statistical Potentials: Features like residue contact potentials or statistical coupling analysis outputs can be derived.

Integration: These features are often part of the initial "single representation" (per-residue features) fed into the Evoformer alongside the raw MSA data.
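The sequence profile described above is straightforward to compute from an aligned MSA. The following sketch assumes equal-length rows over a 21-symbol alphabet (20 amino acids plus the gap character); symbols outside that alphabet are ignored, so a column containing them will sum to less than 1. No sequence weighting or pseudocounts are applied, both of which production pipelines commonly add.

```python
from collections import Counter

ALPHABET = "ACDEFGHIKLMNPQRSTVWY-"  # 20 amino acids plus gap


def sequence_profile(msa):
    """Return one {symbol: frequency} dictionary per alignment column."""
    n_seq = len(msa)
    profile = []
    for column in zip(*msa):  # iterate over columns of the alignment
        counts = Counter(column)
        profile.append({symbol: counts.get(symbol, 0) / n_seq for symbol in ALPHABET})
    return profile


toy_msa = ["AC-", "AG-", "DC-", "AC-"]
profile = sequence_profile(toy_msa)
print(profile[0]["A"], profile[1]["C"], profile[2]["-"])
```

A PSSM can be derived from such a profile by taking log-odds against background amino acid frequencies, which requires choosing a background distribution and is therefore left out here.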

Table 1: Key Input Datasets and Search Parameters for AlphaFold2

| Input Type | Primary Databases | Search Tools | Typical Volume per Target | Key Metric |

|---|---|---|---|---|

| MSAs | UniRef90, MGnify, UniClust30 | MMseqs2, HMMER | 1,000 - 100,000 sequences | Diversity & Depth; Effective Sequence Count (Neff) |

| Templates | PDB70 (cluster of PDB at 70% seq ID) | HHsearch, HMMER | 0 - 20 templates | Sequence Identity (%); HHsearch Probability |

| Evolutionary Info | Derived from MSAs | In-house computation | 1 target sequence x (20 aa + gaps) | Profile Entropy, Conservation Score |

Table 2: Impact of Input Quality on AlphaFold2 Performance (CASP14)

| Input Condition | Average GDT_TS* (Global Distance Test) | Key Limitation |

|---|---|---|

| Full Inputs (MSAs+ Templates) | ~92.4 (on high-accuracy targets) | Represents peak performance |

| MSAs Only (No Templates) | Moderate decrease (~5-10 pts on difficult targets) | Struggles with novel folds lacking clear homology |

| Limited MSA Depth (<100 effective seqs) | Significant decrease (>15 pts) | Insufficient co-evolution signal for accurate pairing |

| Sequence Only | Drastic reduction; often fails to fold | No evolutionary constraints to guide structure |

*GDT_TS is a common metric for assessing topological similarity of predicted vs. experimental structure (0-100 scale).
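The "effective sequences" figure in Table 2 refers to MSA depth after down-weighting near-duplicates. One common definition is sketched below: each sequence contributes the reciprocal of the number of sequences (itself included) that are at least as similar as a chosen identity threshold. The 0.8 threshold is an assumption for this example; different studies use different values, and identity here is computed naively over all aligned columns, gaps included.

```python
def sequence_identity(a, b):
    """Fraction of aligned positions at which two equal-length rows agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def effective_sequence_count(msa, identity_threshold=0.8):
    """Neff as the sum of 1 / (size of each sequence's similarity neighborhood)."""
    total = 0.0
    for seq in msa:
        neighbors = sum(
            sequence_identity(seq, other) >= identity_threshold for other in msa
        )
        total += 1.0 / neighbors  # neighbors >= 1 because seq matches itself
    return total


toy_msa = ["AAAA", "AAAA", "CCCC"]
print(effective_sequence_count(toy_msa))
```

The quadratic loop is fine for small alignments but would need clustering or vectorization for the tens of thousands of rows typical of deep MSAs.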

Experimental & Computational Workflows

Protocol: Generating Input Features for a Novel Target

Objective: To generate the MSA, template, and evolutionary features required to run AlphaFold2 inference on a novel protein sequence.

Materials & Software:

- Target: FASTA file containing the amino acid sequence.

- Computational Resources: High-performance computing cluster or cloud instance (CPU-heavy for search, GPU for inference).

- Databases: Downloaded local copies of UniRef90, PDB70, etc., or access to cloud mirrors.

- Pipeline: AlphaFold2 data pipeline scripts (modified JackHMMER/HHblits/MMseqs2 workflows).

Methodology:

- MSA Construction:

a. Split the target sequence into smaller, overlapping chunks if very long.

b. Run MMseqs2 in iterative mode (`mmseqs easy-search` followed by `mmseqs expand-profile`) against UniRef90.

c. Cluster results at a high-identity threshold to reduce redundancy.

d. Perform a final alignment using a tool like Kalign to produce the final MSA in A3M or STOCKHOLM format.

- Template Search:

a. Build a profile HMM from the generated MSA using `hmmbuild` (HMMER suite).

b. Search the profile against the PDB70 database using `hhsearch`.

c. Parse results, select top hits based on probability and E-value, and extract their PDB codes and alignment details.

- Feature Extraction:

a. Use the AlphaFold2 `run_alphafold.py` pipeline's data module, which internally:

i. Computes the sequence profile and PSSM from the MSA.

ii. Extracts template features (atom positions, confidence scores) from the identified PDB files.

iii. Compiles all features into the final input arrays for the neural network.
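Once an MSA has been written in A3M format, it has to be turned into equal-length rows before features can be computed. In A3M, lowercase letters mark insertions relative to the query and are dropped to recover the aligned columns. The parser below is a minimal sketch of that step, not the AlphaFold2 pipeline's own parser, and the function name `parse_a3m` is chosen for illustration.

```python
def parse_a3m(text):
    """Return (names, aligned_rows) with lowercase insertion columns removed."""
    names, rows, current = [], [], []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if names:  # close the previous record before starting a new one
                rows.append("".join(current))
            names.append(line[1:])
            current = []
        else:
            # Lowercase residues are insertions relative to the query.
            current.append("".join(ch for ch in line if not ch.islower()))
    if names:
        rows.append("".join(current))
    return names, rows


a3m = ">query\nMKV-L\n>hit1\nMKaV-L\n>hit2\nM-VgggAL\n"
names, rows = parse_a3m(a3m)
print(names, rows)
```

After parsing, every row has the same length as the query, so the alignment can be handed directly to column-wise computations such as profiles or effective sequence counts.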

Protocol: Ablation Study on Input Importance

Objective: To quantitatively assess the contribution of each input type to prediction accuracy.

Methodology:

- Control: Run AlphaFold2 on a benchmark set (e.g., CASP14 targets) with all inputs enabled. Record predicted structures and confidence metrics (pLDDT, predicted TM-score).

- Experimental Conditions:

a. No Templates: Manually disable the template search path in the pipeline or feed empty template features.

b. Limited MSA: Artificially subsample the full MSA to a specified number of effective sequences (e.g., 10, 100).

c. Sequence Only: Provide only the target sequence and a dummy, single-sequence MSA.

- Evaluation: Compare the predicted structure for each condition against the experimental ground truth using metrics like GDT_TS, RMSD (Root Mean Square Deviation), and TM-score. Plot the degradation of accuracy across conditions.
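The MSA conditions of the ablation can be prepared with a small helper. The sketch below keeps the query in the first row and draws a reproducible random sample of the remaining rows. It subsamples raw sequences rather than effective sequences, which is a simplification of the protocol above, and both function names are hypothetical.

```python
import random


def subsample_msa(msa, max_sequences, seed=0):
    """Keep the query (row 0) plus a random sample of other rows, in order."""
    if max_sequences < 1:
        raise ValueError("max_sequences must be at least 1")
    if len(msa) <= max_sequences:
        return list(msa)
    rng = random.Random(seed)  # fixed seed makes the ablation reproducible
    keep = sorted(rng.sample(range(1, len(msa)), max_sequences - 1))
    return [msa[0]] + [msa[i] for i in keep]


def single_sequence_msa(msa):
    """The 'Sequence Only' condition: an MSA containing just the query."""
    return [msa[0]]


full_msa = [f"SEQ{i}" for i in range(10)]
print(subsample_msa(full_msa, 4, seed=1))
print(single_sequence_msa(full_msa))
```

Running each condition with several seeds and averaging the resulting accuracy would help separate the effect of MSA depth from the luck of a particular sample.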

Visualization of Input Processing Workflow

Diagram: AlphaFold2 Input Feature Generation and Integration Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational "Reagents" for Input Generation

| Item Name / Tool | Category | Function / Purpose | Key Parameter / Note |

|---|---|---|---|

| MMseqs2 | Software Suite | Ultra-fast, sensitive protein sequence searching and clustering for MSA construction. Enables scalable, iterative searches. | --num-iterations, --max-seqs control search depth. |

| HH-suite (HHblits/HHsearch) | Software Suite | Profile HMM-based searching for sensitive homology detection against sequence (HHblits) and structure (HHsearch) databases. | Critical for template finding; uses -id, -cov, and probability thresholds. |

| UniRef90 Database | Data Resource | Clustered non-redundant protein sequence database at 90% identity. Primary target for MSA homology searches. | Reduces search space while maintaining diversity. Must be kept updated. |

| PDB70 Database | Data Resource | A curated subset of the PDB, clustered at 70% sequence identity. Used for efficient template searching. | Pre-computed HMMs for each cluster accelerate HHsearch. |

| Kalign / MAFFT | Software Tool | Multiple sequence alignment algorithms. Used to create the final, accurate alignment from homologous sequences. | Choice affects alignment quality, especially for divergent sequences. |

| AlphaFold Data Pipeline | Software Scripts | Custom Python scripts that orchestrate the entire input feature generation process, calling the tools above. | Handles data flow, error checking, and final feature tensor assembly. |

| HMMER | Software Suite | Alternative tool for building profile HMMs and scanning sequence databases. Used in some pipeline variants. | hmmbuild and hmmscan are core functions. |


Within the broader thesis on the AlphaFold2 deep learning architecture, its revolutionary output paradigm is as significant as its novel neural network design. The system does not merely produce a single static 3D coordinate set; it provides a confidence-annotated structural model. This output, atomic coordinates paired with per-residue (pLDDT) and global (pTM) confidence metrics, transforms protein structure prediction from a speculative exercise into a quantifiably reliable tool for research and drug development. This guide dissects these outputs, their derivation from the architecture's confidence heads, and their critical interpretation.

Decoding the Output: pLDDT and pTM

AlphaFold2 generates two primary confidence scores that assess prediction reliability at different granularities.

pLDDT (predicted Local Distance Difference Test): A per-residue score (0-100) estimating the local accuracy of the predicted structure. It is produced by a dedicated confidence head that reads the Structure Module's final per-residue representation, and it reflects confidence in the local atomic environment.

pTM (predicted Template Modeling score): A global score (0-1) estimating the overall similarity of the predicted model to a hypothetical true structure, analogous to the TM-score used in structural biology. It is computed from the predicted aligned error (PAE) distribution, so it is available only from the pTM-enabled model variants.

Table 1: Interpretation of pLDDT Confidence Bands

| pLDDT Range | Confidence Band | Typical Structural Interpretation |

|---|---|---|

| 90 - 100 | Very high | Backbone atoms are placed with high accuracy. Side chains reliable. |

| 70 - 90 | Confident | Backbone placement is generally accurate. Side chain placement may vary. |

| 50 - 70 | Low | Caution advised. Potential topological errors in backbone. |

| < 50 | Very low | The prediction is unreliable, often corresponding to disordered regions. |
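
The bands in Table 1 can be applied programmatically. The sketch below is one way to do it; because the table's ranges share their endpoints, it assumes that a boundary value (90, 70, 50) belongs to the higher band. The function name is illustrative.

```python
def plddt_band(score: float) -> str:
    """Map a pLDDT value (0-100) to the confidence band from Table 1."""
    if not 0.0 <= score <= 100.0:
        raise ValueError(f"pLDDT must lie in [0, 100], got {score}")
    if score >= 90.0:
        return "Very high"
    if score >= 70.0:  # assumption: boundary values go to the higher band
        return "Confident"
    if score >= 50.0:
        return "Low"
    return "Very low"
```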

Table 2: Key Experimental Outputs from AlphaFold2

| Output Component | Format | Source in Architecture | Primary Use Case |

|---|---|---|---|

| Atomic 3D Coordinates | PDB/mmCIF file | Structure module (3D affine updates) | Visualization, docking, analysis |

| Per-residue pLDDT | B-factor column in PDB file | pLDDT confidence head | Identifying reliable regions, disorder |

| Predicted Aligned Error (PAE) | 2D JSON/PNG matrix | PAE head on the pair representation | Assessing domain placement accuracy |

| pTM score | Scalar (0-1) | Derived from the PAE head | Overall model quality assessment |
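
Since pLDDT is written to the B-factor column of the PDB output, it can be recovered with plain fixed-column parsing. The sketch below assumes standard PDB ATOM records (atom name in columns 13-16, chain in column 22, residue number in columns 23-26, B-factor in columns 61-66) and reads one value per residue from the Cα atom. The function name is illustrative, and libraries such as Biopython offer more robust parsing.

```python
def read_plddt_from_pdb(pdb_text: str) -> dict[tuple[str, int], float]:
    """Map (chain ID, residue number) to pLDDT, read from CA atom B-factors."""
    scores: dict[tuple[str, int], float] = {}
    for line in pdb_text.splitlines():
        # Only protein ATOM records; the CA atom carries the residue's pLDDT.
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            chain_id = line[21]
            res_num = int(line[22:26])
            scores[(chain_id, res_num)] = float(line[60:66])
    return scores
```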

Experimental Protocols for Validation

The validation of AlphaFold2's outputs, as per seminal papers and subsequent research, follows rigorous protocols.

Protocol 1: CASP Assessment (Critical Assessment of protein Structure Prediction)

- Input: Blind target protein sequences with unknown experimental structures.

- Prediction: AlphaFold2 generates 5 ranked models (ranked by confidence) per target.

- Validation Metric Calculation: Organizers calculate GDT_TS (Global Distance Test) and lDDT (local Distance Difference Test) by comparing predicted models to later-released experimental structures.

- Correlation: Compare per-residue pLDDT to experimental lDDT scores to validate the self-estimated accuracy.

Protocol 2: Predicted Aligned Error (PAE) Analysis for Domain Placement

- Run Inference: Generate the full AlphaFold2 output for a multi-domain protein.

- Extract PAE Matrix: Parse the N x N matrix where element (i, j) is the expected position error in Ångströms at one residue when the predicted and true structures are aligned on the other. The matrix is generally not symmetric.

- Visualization: Plot PAE as a heatmap. Low error (blue) within diagonal blocks indicates confident relative positioning within domains. Higher error (yellow/red) in off-diagonal blocks indicates uncertainty in the relative orientation of domains.

- Interpretation: Use PAE to decide if a single model is reliable or if an ensemble of conformations should be considered.
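
The interpretation step can be made quantitative by averaging the PAE over the off-diagonal block that links two domains. The sketch below assumes NumPy, a PAE matrix already loaded as an (N, N) array, and domain boundaries supplied by the user as slices; the function name is illustrative. It averages both directions because the matrix need not be symmetric.

```python
import numpy as np


def mean_interdomain_pae(pae: np.ndarray, domain_a: slice, domain_b: slice) -> float:
    """Mean PAE between two residue ranges, averaged over both directions."""
    a_to_b = pae[domain_a, domain_b]
    b_to_a = pae[domain_b, domain_a]
    return float((a_to_b.mean() + b_to_a.mean()) / 2.0)
```

A value close to the within-domain average suggests the relative domain placement is as trustworthy as the domains themselves; a much larger value suggests treating the domains as independently oriented.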

Visualization of Output Generation and Interpretation

Title: AlphaFold2 Architecture to Confidence-Scored Output

Title: Interpreting AlphaFold2 Output Files & Scores

Table 3: Essential Resources for AlphaFold2-Based Research

| Resource / Solution | Provider / Source | Function in Research |

|---|---|---|

| AlphaFold2 Open Source Code (v2.3.2) | DeepMind / GitHub | Local running of the full model for custom datasets. |

| ColabFold (AlphaFold2 + MMseqs2) | Seoul National Univ. / GitHub | Streamlined, faster pipeline with automated MSA generation via MMseqs2 servers. |

| AlphaFold Protein Structure Database | EMBL-EBI | Pre-computed predictions for >200 million proteins; primary resource for lookup. |

| PDB (Protein Data Bank) | RCSB | Source of experimental structures for validation and comparison against predictions. |

| UniProt Knowledgebase | UniProt Consortium | Source of canonical protein sequences and functional annotations for input. |

| PyMOL / ChimeraX | Schrödinger / UCSF | Visualization software for analyzing 3D coordinates, coloring by pLDDT, and examining PAE. |

| Biopython / BioPandas | Open Source | Python libraries for programmatic parsing and analysis of PDB files and prediction data. |

| AlphaFill | CMBI, Radboud Univ. | In silico tool for adding ligands, cofactors, and ions to AlphaFold2 models. |

Inside the Black Box: A Step-by-Step Walkthrough of the AlphaFold2 Pipeline

This document constitutes the first stage of a comprehensive technical analysis of the AlphaFold2 (AF2) architecture. The system's revolutionary accuracy in protein structure prediction is fundamentally predicated on the sophisticated and multi-faceted representation of input data. This section details the processes and biological data sources transformed into the numerical feature tensors that drive the deep learning model.

AF2 integrates information from multiple sequence and structural databases. The core input is a multiple sequence alignment (MSA) and a set of homologous templates.

Table 1: Core Input Data Sources & Features

| Data Source | Primary Feature | Description & Biological Significance |

|---|---|---|

| UniRef90 | Multiple Sequence Alignment (MSA) | Provides evolutionary constraints via residue co-evolution signals. Critical for inferring contact maps. |

| MGnify | MSA (environmental sequences) | Expands evolutionary context with metagenomic sequences, enhancing coverage for under-sampled families. |

| BFD (Big Fantastic Database) | Large-scale MSA | A massive, clustered sequence database used to generate rich, diverse MSAs for robust evolutionary feature extraction. |

| PDB (Protein Data Bank) | Template Structures | Provides high-resolution structural templates for proteins with known homologs, guiding initial folding. |

| HHblits/HHsearch | Profile HMMs & Pairwise Features | Tools used to search against databases (e.g., UniClust30) to generate position-specific scoring matrices (PSSMs) and template alignments. |

Experimental Protocol: Generating Input Features

The following protocol outlines the computational pipeline for generating AF2's input features from a target amino acid sequence.

Protocol: Input Feature Generation Pipeline

- Input: A single protein sequence (FASTA format).

- MSA Generation (Step A):

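- Tool: JackHMMER and HHblits in the reference AlphaFold2 pipeline; MMseqs2 in faster variants such as ColabFold.

- Process: The target sequence is searched against large sequence databases (UniRef90, BFD, MGnify).

- Output: A large, clustered MSA. Per-position residue frequencies and deletion statistics are computed from it; co-evolutionary couplings are not precomputed as a covariance matrix but are learned by the network from the MSA itself.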

- Template Search (Step B):

- Tool: HHsearch/HHblits.

- Process: The target sequence or its MSA-derived profile HMM is searched against a database of known PDB structures.

- Output: A list of potential template structures, their alignments to the target, and associated confidence scores.

- Feature Composition (Step C):

- MSA Features: One-hot encoding of the MSA, row-wise and column-wise profiles, and deletion statistics.

- Pairwise Features: Initialized from embeddings of the target sequence and of relative residue positions. Co-evolutionary information enters afterwards, as the Evoformer updates the pair representation from the MSA.

- Template Features: Distances and orientations between residues in aligned template structures, converted to frames and normalized distances.

- Extra Features: Residue indices. Predicted secondary structure (e.g., from PSIPRED) and solvent accessibility are used by some AF2-style pipelines but are not inputs to the reference AlphaFold2 pipeline.

- Output: A fixed-size feature dictionary containing all stacked features as multi-dimensional tensors, ready for input into the AF2 Evoformer network.
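
To make the MSA features in Step C concrete, the following sketch one-hot encodes a small alignment and derives a per-position frequency profile. It assumes NumPy; the 21-letter alphabet ordering and the choice to send unknown symbols to the gap channel are assumptions of this example, not the encoding used by the AlphaFold2 code.

```python
import numpy as np

# 20 standard amino acids plus gap; this ordering is an assumption of the sketch.
ALPHABET = "ACDEFGHIKLMNPQRSTVWY-"
INDEX = {aa: i for i, aa in enumerate(ALPHABET)}


def msa_one_hot(msa: list[str]) -> np.ndarray:
    """Encode aligned sequences as an (n_seq, n_res, 21) one-hot array."""
    n_seq, n_res = len(msa), len(msa[0])
    encoded = np.zeros((n_seq, n_res, len(ALPHABET)), dtype=np.float32)
    for s, seq in enumerate(msa):
        for r, aa in enumerate(seq):
            # Unknown symbols fall back to the gap channel in this sketch.
            encoded[s, r, INDEX.get(aa, INDEX["-"])] = 1.0
    return encoded


def column_profile(one_hot: np.ndarray) -> np.ndarray:
    """Per-position residue frequencies, shape (n_res, 21)."""
    return one_hot.mean(axis=0)
```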

Visualization of the Feature Generation Workflow

Title: AlphaFold2 Input Feature Generation Pipeline

Table 2: Essential Computational Tools & Databases for AF2-Style Feature Generation

| Tool/Resource | Category | Function in Pipeline |

|---|---|---|

| MMseqs2 | Software Suite | Rapid, sensitive protein sequence searching and clustering for large-scale MSA construction. |

| HH-suite (HHblits/HHsearch) | Software Suite | Profile hidden Markov model (HMM)-based tools for sensitive sequence and template searches. |

| JackHMMER | Software Suite | Alternative HMM-based search tool for building MSAs iteratively. |

| UniRef90 | Protein Database | Clustered non-redundant sequence database providing evolutionary diversity. |

| BFD | Protein Database | Extremely large clustered sequence dataset for capturing deep homology. |

| PDB | Structure Database | Primary repository of experimentally-determined 3D protein structures for templating. |

| PSIPRED | Prediction Tool | Predicts secondary structure; an optional extra input in some AF2-style pipelines, not used by the reference AlphaFold2 pipeline. |

| NumPy/PyTorch/JAX | Libraries | Numerical and deep learning frameworks used to implement feature processing and model logic. |

Within the AlphaFold2 deep learning architecture, the Evoformer stands as a revolutionary module for reasoning about evolutionary relationships. It processes a Multiple Sequence Alignment (MSA) and a pair representation of the target sequence to generate refined, information-rich embeddings. This whitepaper details its technical mechanisms, experimental validation, and significance for structural biology and drug discovery.

AlphaFold2's breakthrough in protein structure prediction stems from its end-to-end deep learning architecture. A core thesis of this architecture is that accurate geometric structure can be inferred from co-evolutionary signals embedded within MSAs and from physical constraints inherent to protein folding. The Evoformer is the engine that realizes the first part of this thesis, transforming raw MSA data into a structured, interpretable representation of evolutionary constraints.

Architectural Deep Dive

The Evoformer operates on two primary data representations:

- MSA Representation (m × s × c_m): A 3D tensor with m sequences of length s, each with c_m channels.

- Pair Representation (s × s × c_z): A 3D tensor encoding relationships between residues, with s × s residue pairs and c_z channels.

The module is composed of stacked Evoformer blocks, each featuring two core communication pathways.

Core Mechanisms

- MSA Column-wise Self-Attention: Updates each column (i.e., all residues at a specific position across the MSA) by allowing residues to attend to all other residues in the same column across different sequences. This captures vertical, cross-sequence dependencies.

- MSA Row-wise Self-Attention with Pair Bias: Updates each row (i.e., a full protein sequence) by allowing intra-sequence attention. Crucially, the attention weights are biased by the current pair representation, injecting evolutionary coupling information.

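- Triangular Updates on Pairs: Updates the pair representation so that each edge (i, j) is informed by the other two edges of every triangle (i, j, k). Two triangular multiplicative updates are applied, followed by triangular self-attention around the starting and ending nodes:

- Outgoing Edges: The update for pair (i, j) combines the edges leaving i and j toward a third residue k, i.e., the product of edge (i, k) and edge (j, k), summed over k.

- Incoming Edges: The update for pair (i, j) combines the edges arriving at i and j from a third residue k, i.e., the product of edge (k, i) and edge (k, j), summed over k.

This encourages geometric consistency (e.g., if residue i is close to j and j to k, then i is likely close to k).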

- Transition Layers: Standard feed-forward networks applied to both representations.
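
The core of the triangular multiplicative update can be written as a single contraction over the third residue k. The sketch below shows the outgoing-edge variant in NumPy. It is deliberately simplified: the layer normalization and sigmoid gates of the published algorithm are omitted, and the weight matrices `w_a`, `w_b`, and `w_out` are placeholder names for learned projections.

```python
import numpy as np


def triangle_multiply_outgoing(z: np.ndarray, w_a: np.ndarray,
                               w_b: np.ndarray, w_out: np.ndarray) -> np.ndarray:
    """Simplified outgoing-edge update for pair features z of shape (s, s, c).

    w_a, w_b have shape (c, h); w_out has shape (h, c).
    Gating and layer normalization are omitted in this sketch.
    """
    a = z @ w_a  # projected edge features, indexed as a[i, k]
    b = z @ w_b  # projected edge features, indexed as b[j, k]
    # For each pair (i, j): sum over k of a[i, k] * b[j, k], per channel.
    combined = np.einsum("ikc,jkc->ijc", a, b)
    # Project back to c channels and add as a residual update.
    return z + combined @ w_out
```

The incoming-edge variant differs only in the contraction pattern, which becomes `"kic,kjc->ijc"`.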

Communication Pathways Diagram

Diagram Title: Evoformer Block Dataflow & Core Mechanisms

Experimental Protocols & Validation

The efficacy of the Evoformer was validated within the full AlphaFold2 model using CASP14 benchmarks. Key ablation studies were performed.

Key Ablation Experiment Protocol

- Objective: Isolate and measure the contribution of the Evoformer's communication mechanisms to prediction accuracy.

- Method:

- Train multiple variants of AlphaFold2 from scratch on the same dataset.

- Variant A (Baseline): Full Evoformer architecture.

- Variant B: Remove triangular self-attention from pair representation.

- Variant C: Remove pair bias from the MSA row-wise self-attention.

- Variant D: Replace all Evoformer blocks with standard Transformer blocks acting only on the MSA representation.

- Evaluate all variants on the CASP14 free modeling targets using the Global Distance Test (GDT_TS) metric.

- Metrics: GDT_TS (0-100), lDDT (0-1), and TM-score (0-1).

Key Quantitative Results

Table 1: Impact of Evoformer Components on CASP14 Accuracy

| Model Variant | Mean GDT_TS (± stdev) | Mean lDDT (± stdev) | Key Change |

|---|---|---|---|

| Full AlphaFold2 (with Evoformer) | 87.5 (± 8.2) | 0.89 (± 0.07) | N/A (Complete baseline) |

| Variant B (No Triangular Attn.) | 72.1 (± 12.4) | 0.75 (± 0.13) | Pair representation loses geometric consistency. |

| Variant C (No Pair Bias in MSA) | 80.3 (± 10.1) | 0.82 (± 0.10) | MSA update decoupled from pair constraints. |

| Variant D (Standard Transformer) | 65.4 (± 14.7) | 0.68 (± 0.15) | Loss of integrated MSA-Pair reasoning. |

Table 2: Evoformer Computational Profile

| Parameter | Typical Value (Training) | Description |

|---|---|---|

| Number of Evoformer Blocks | 48 | Depth of the processing stack. |

| MSA Sequence Depth (m) | 512 | Number of clustered homologue sequences processed. |

| Target Sequence Length (s) | 256 (up to ~2700) | Residues in the target protein. |

| Channels in MSA Rep (c_m) | 256 | Feature dimension per MSA position. |

| Channels in Pair Rep (c_z) | 128 | Feature dimension per residue pair. |
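
As a worked example of what these dimensions imply, the sketch below counts the values held in each representation for the typical training settings in the table. The 4 bytes per value (float32) is an assumption, and the figures cover only the two representations themselves, not attention intermediates or gradients.

```python
def representation_sizes(m: int, s: int, c_m: int, c_z: int,
                         bytes_per_value: int = 4) -> dict[str, int]:
    """Element and byte counts for the MSA (m x s x c_m) and pair (s x s x c_z) tensors."""
    msa_values = m * s * c_m
    pair_values = s * s * c_z
    return {
        "msa_values": msa_values,
        "pair_values": pair_values,
        "msa_bytes": msa_values * bytes_per_value,
        "pair_bytes": pair_values * bytes_per_value,
    }


sizes = representation_sizes(m=512, s=256, c_m=256, c_z=128)
# msa_values = 33,554,432 (128 MiB at float32); pair_values = 8,388,608 (32 MiB)
```

Because the pair tensor grows with the square of the sequence length, it overtakes the MSA tensor for long targets even though it has fewer channels.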

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for MSA & Evolutionary Analysis

| Item | Function & Explanation | Example/Source |

|---|---|---|

| MSA Generation Software | Creates the primary input for the Evoformer by searching genomic databases for homologous sequences. | HHblits, JackHMMER, MMseqs2 |

| Protein Structure Datasets | High-quality experimental structures for training and benchmarking. | Protein Data Bank (PDB), PDB-70, CATH, SCOP |

| Evolutionary Coupling Tools | Provides independent validation of contacts predicted from the Evoformer's pair representation. | plmDCA, GREMLIN, EVcouplings |

| Deep Learning Framework | Environment for implementing and experimenting with Evoformer-like architectures. | JAX, PyTorch, TensorFlow |

| Hardware (AI Accelerator) | Enables training of large models on massive MSA datasets. | NVIDIA A100/H100 GPUs, Google TPU v4/v5 Pods |


Experimental Workflow Diagram

Diagram Title: AlphaFold2 Workflow Featuring the Evoformer

Implications for Drug Development

The Evoformer's output directly informs critical drug discovery tasks:

- Binding Site Prediction: The refined pair representation highlights evolutionarily coupled residues, often defining functional cores and binding sites.

- Mutation Effect Analysis: By examining changes in the MSA and pair representations upon in silico mutation, researchers can predict stability and binding affinity changes (deep mutational scanning).

- Protein-Protein Interaction (PPI) Interface Prediction: The principles of the Evoformer can be extended to model two interacting MSAs, providing a powerful tool for predicting and analyzing PPIs—a key target class for therapeutics.

The Evoformer is not merely a neural network component; it is a computational embodiment of evolutionary biology principles. By enabling seamless, iterative communication between sequence and pair information, it successfully extracts the physical and evolutionary constraints needed to predict protein structure with atomic accuracy. Its design underscores the thesis that integrating diverse biological data streams within a learned reasoning framework is paramount to solving complex scientific problems, paving the way for accelerated drug discovery and protein design.

AlphaFold2 represents a paradigm shift in protein structure prediction. Its architecture can be conceptualized as a sequential, multi-stage deep learning pipeline. After the Evoformer has processed the MSA and template inputs, the network holds refined single and pair representations that implicitly encode inter-residue geometry. The Structure Module is the final, critical stage that acts as a geometric engine, transforming these abstract representations into an accurate, all-atom 3D model. It performs iterative refinement, starting from a trivial initialization in which all residue frames coincide at the origin and progressively moving them into place. This stage embodies the integration of learned physical constraints into a differentiable, three-dimensional structure.

Core Architecture & Iterative Refinement Mechanism

The Structure Module is an SE(3)-equivariant neural network. Its key innovation is the use of Invariant Point Attention (IPA), which enables it to reason about spatial relationships in 3D space while remaining invariant to global rotations and translations—a property essential for meaningful structural refinement.

The refinement is performed over N iterative cycles (typically N=8). Each cycle uses the evolving atomic coordinates and the invariant features from the Evoformer to update the structure.

Invariant Point Attention (IPA) Explained

IPA computes attention between residues based on both their feature representations and their current spatial positions. It generates a weighted update to each residue's frame of reference (defined by its backbone N, Cα, C atoms).

The Refinement Cycle

Each iteration follows a strict sequence:

- IPA Layer: Updates local frame orientations and positions based on global spatial context.

- Backbone Update: Adjusts the positions of backbone atoms (N, Cα, C) from the updated frames.

- Sidechain Inference: Predicts sidechain torsion angles (χ angles) from the updated backbone and features.

- All-Atom Construction: Uses ideal bond lengths and angles, along with predicted torsions, to build full atomic coordinates.

- Loss Computation: Calculates the FAPE (Frame Aligned Point Error) loss between the current structure and ground truth (during training), backpropagating through the entire cycle.

Key Experimental Protocols & Data

Protocol: Training the Structure Module

The module is trained end-to-end as part of AlphaFold2, but its loss is specifically designed for 3D accuracy.

Methodology:

- Input: Processed multiple sequence alignment (MSA) and pair representations from the Evoformer stack; initial residue frames, which in the reference implementation all start as the identity rotation at the origin.

- Iteration: Pass inputs through N refinement cycles.

- Loss Function: Use the Frame Aligned Point Error (FAPE) as the primary loss. FAPE measures the distance between corresponding atoms after aligning the predicted and true local residue frames, making it invariant to global orientation.

- Auxiliary Losses: Include losses on predicted sidechain torsion angle distributions (negative log-likelihood) and violations of bond geometry.

- Optimization: Trained using gradient descent (Adam optimizer) with gradient clipping.
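
The FAPE loss described in this protocol can be sketched in a few lines of NumPy. The version below expresses every atom in every residue frame, compares predicted and true local coordinates, clamps the distances, and averages. The clamp and scale of 10 Å and the small epsilon follow the values reported for AlphaFold2; the function names and the unbatched array layout are assumptions of this example, which is not the reference implementation.

```python
import numpy as np


def to_local(rot: np.ndarray, trans: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Express points (A, 3) in each of F frames; returns (F, A, 3).

    rot: (F, 3, 3) rotation matrices; trans: (F, 3) frame origins.
    """
    diff = points[None, :, :] - trans[:, None, :]
    # x_local = R^T (x - t), applied per frame.
    return np.einsum("fji,faj->fai", rot, diff)


def fape(pred_rot, pred_trans, pred_pts, true_rot, true_trans, true_pts,
         clamp: float = 10.0, scale: float = 10.0, eps: float = 1e-4) -> float:
    """Clamped, frame-aligned point error averaged over all frame/atom pairs."""
    pred_local = to_local(pred_rot, pred_trans, pred_pts)
    true_local = to_local(true_rot, true_trans, true_pts)
    dist = np.sqrt(((pred_local - true_local) ** 2).sum(axis=-1) + eps)
    return float(np.minimum(dist, clamp).mean() / scale)
```

Because both structures are compared in local frames, applying the same global rotation and translation to the predicted frames and atoms leaves the loss unchanged.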

Protocol: Ablation Study on Iteration Count

A key experiment validates the necessity of iterative refinement.

Methodology:

- Train multiple AlphaFold2 variants where the Structure Module's iteration count N is varied (e.g., N=0, 1, 4, 8).

- Evaluate each variant on standard test sets (CASP14, PDB).

- Measure accuracy metrics: Global Distance Test (GDT_TS), lDDT (local Distance Difference Test), and RMSD (Root Mean Square Deviation).

Quantitative Results:

Table 1: Impact of Refinement Iterations on Prediction Accuracy (CASP14 Average)

| Iteration Count (N) | GDT_TS (↑) | lDDT (↑) | RMSD (Å) (↓) | Inference Time (Relative) |

|---|---|---|---|---|

| 0 (Single Pass) | 72.1 | 79.2 | 4.52 | 1.0x |

| 1 | 83.5 | 85.7 | 2.31 | 1.2x |

| 4 | 88.2 | 89.4 | 1.58 | 1.8x |

| 8 (Default) | 92.4 | 92.9 | 1.10 | 3.0x |

Protocol: Evaluating Equivariance

Testing the SE(3)-equivariance property ensures robust predictions.

Methodology:

- Take an input protein representation.

- Apply a random global rotation and translation to the initial coordinates fed to the Structure Module.

- Run the forward pass.

- Apply the inverse transformation to the output coordinates.

- Compare the resulting structure with the output generated from the untransformed input. The two should be identical (within numerical precision).
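
The five steps above translate directly into a small test harness. In the sketch below, `toy_update` is a hypothetical stand-in for the Structure Module (it simply pulls points toward their centroid, which is an equivariant operation); any callable that maps (N, 3) coordinates to (N, 3) coordinates could be substituted. NumPy is assumed.

```python
import numpy as np


def toy_update(coords: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a structure update: move points halfway to the centroid."""
    centroid = coords.mean(axis=0, keepdims=True)
    return coords + 0.5 * (centroid - coords)


def equivariance_error(update_fn, coords: np.ndarray,
                       rot: np.ndarray, trans: np.ndarray) -> float:
    """Max absolute deviation between f(x) and T^-1 f(T x) for a rigid transform T."""
    # Steps 2-3: transform the input, then run the forward pass.
    transformed_out = update_fn(coords @ rot.T + trans)
    # Step 4: undo the transform; for row vectors x = (x' - t) R.
    recovered = (transformed_out - trans) @ rot
    # Step 5: compare with the output from the untransformed input.
    return float(np.abs(recovered - update_fn(coords)).max())
```

For an equivariant function the returned error should sit at the level of floating-point round-off; a function that adds a fixed offset along one axis, by contrast, fails the check.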

Quantitative Results:

Table 2: Equivariance Error Measurement

| Metric | Mean Error (Å) |

|---|---|

| Cα Atom Position Difference | < 1e-6 |

| Backbone Frame Orientation | < 1e-5 radians |

Visualization of the Structure Module Workflow

Structure Module Iterative Refinement

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for AlphaFold2 Structure Module Research

| Item / Resource | Function / Purpose | Example / Specification |

|---|---|---|

| AlphaFold2 Open Source Code | Reference implementation for studying and modifying the Structure Module. | Jumper et al., 2021. Available on GitHub (DeepMind). |

| PyTorch / JAX Framework | Deep learning frameworks with automatic differentiation, essential for implementing the differentiable refinement. | PyTorch 1.9+, JAX 0.2.25+. |

| Protein Data Bank (PDB) | Source of high-resolution experimental structures for training (FAPE loss) and validation. | Requires local mirror or API access for large-scale work. |

| SE(3)-Transformers Library | Pre-built layers for equivariant deep learning, useful for custom implementations or modifications of IPA. | e.g., se3-transformer-pytorch. |

| Rosetta Relax Protocol | Optional post-processing step after AlphaFold2 prediction to relieve steric clashes and optimize physical energy. | Run separately with Rosetta; AlphaFold2 and ColabFold themselves relax with AMBER. |

| Molecular Visualization Software | For analyzing and comparing the iteratively refined output structures. | PyMOL, ChimeraX, VMD. |

| CASP Dataset | Standard benchmark for rigorous, blind evaluation of prediction accuracy (GDT_TS, lDDT). | CASP14, CASP15 results and targets. |


Within the broader thesis on the deep learning architecture of AlphaFold2, the transition from accessible cloud platforms to controlled local deployment is a critical operational step. This guide details the technical workflow for executing AlphaFold2 predictions, from the simplified ColabFold interface to a full-scale local server installation, enabling reproducible, high-throughput, and secure protein structure prediction essential for research and drug development.

Core Deployment Pathways: A Quantitative Comparison

Table 1: AlphaFold2 Execution Platforms: Specifications & Requirements

| Platform | Hardware Requirements | Typical Runtime (Single Protein) | Key Advantage | Primary Limitation |

|---|---|---|---|---|

| ColabFold (Google Colab) | Free: 1x T4 GPU (16GB), ~12GB RAM. Pro: 1x A100/V100 GPU | 5-30 minutes | Zero setup; integrated MMseqs2 server for fast homologs. | Session limits, data privacy concerns, no customization. |

| Local Server (Docker) | 1x High-end GPU (RTX 3090/A100, 24GB+ VRAM), 32GB+ RAM, 3TB+ SSD | 20-90 minutes | Full control, batch processing, custom databases, offline use. | Significant upfront hardware/software investment. |

| HPC Cluster | Multiple GPUs/node, vast CPU/RAM resources, parallel filesystem | Variable (massively parallel) | Extreme throughput for large-scale studies (e.g., proteome-scale). | Queue systems, complex module environments, requires sysadmin support. |

Experimental Protocol: From Sequence to Structure

Protocol 1: Running AlphaFold2 via ColabFold

- Input Preparation: Compile target protein sequence(s) in FASTA format.

- Environment Access: Navigate to the ColabFold GitHub repository (github.com/sokrypton/ColabFold) and launch the "AlphaFold2" notebook on Google Colab.

- Parameter Configuration: In the notebook cell, specify:

- sequence: Your target sequence.

- msa_mode: Choose "MMseqs2 (UniRef+Environmental)" for speed, or "single_sequence" for no templates/MSA.

- model_type: Select auto (automated), alphafold2_ptm, or ColabFold (distilled model).

- num_relax: Set to 1 for AMBER relaxation of the top-ranked model.

- Execution: Run all notebook cells. The runtime environment (GPU) is provisioned automatically.

- Output Retrieval: Download the resulting ZIP file containing predicted PDB files, confidence scores (pLDDT, pTM), and aligned multiple sequence alignments (MSAs).

Protocol 2: Deploying AlphaFold2 on a Local Server

This protocol follows the standard installation via Docker, as per DeepMind's and the Josh Berson Lab's recommendations.

System Preparation:

- Hardware: Ensure NVIDIA GPU with driver >= 495.29.05, 64GB RAM, and ample SSD storage for databases (~3TB).

- Software: Install Docker, NVIDIA Container Toolkit, and CUDA 11.x+ drivers.

Database Download: Use the provided scripts/download_all_data.sh script to download required genetic databases (UniRef90, BFD, MGnify, etc.) and model parameters to a designated path (e.g., /data/alphafold_dbs).

Running the Docker Container: Execute prediction using the Docker run script. Key flags: --db_preset (full_dbs or reduced_dbs), --model_preset (monomer, monomer_casp14, multimer).

Post-processing: Local outputs include unrelaxed/relaxed PDBs, per-residue and per-chain confidence metrics, and visualization JSONs for tools like PyMOL or ChimeraX.
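
For batch use it can help to assemble the run command programmatically. The sketch below builds an argument list and validates the two presets named above. It assumes the launcher is `docker/run_docker.py` from the DeepMind repository and that it accepts `--fasta_paths`, `--max_template_date`, `--data_dir`, and `--output_dir`; these names should be checked against the installed version before use. The helper name and the example paths are hypothetical.

```python
import shlex

ALLOWED_MODEL_PRESETS = {"monomer", "monomer_casp14", "multimer"}
ALLOWED_DB_PRESETS = {"full_dbs", "reduced_dbs"}


def build_run_docker_command(fasta_path: str, data_dir: str, output_dir: str,
                             max_template_date: str,
                             model_preset: str = "monomer",
                             db_preset: str = "full_dbs") -> list[str]:
    """Assemble the argument list for the (assumed) docker/run_docker.py launcher."""
    if model_preset not in ALLOWED_MODEL_PRESETS:
        raise ValueError(f"unknown model_preset: {model_preset}")
    if db_preset not in ALLOWED_DB_PRESETS:
        raise ValueError(f"unknown db_preset: {db_preset}")
    return [
        "python3", "docker/run_docker.py",
        f"--fasta_paths={fasta_path}",
        f"--max_template_date={max_template_date}",
        f"--data_dir={data_dir}",
        f"--output_dir={output_dir}",
        f"--model_preset={model_preset}",
        f"--db_preset={db_preset}",
    ]


# Hypothetical paths; print the command for inspection rather than running it.
cmd = build_run_docker_command("target.fasta", "/data/alphafold_dbs",
                               "/data/af_out", "2022-01-01")
print(shlex.join(cmd))
```

The list form can be passed to `subprocess.run` directly, which avoids shell quoting issues with unusual file names.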

Diagram Title: AlphaFold2 Execution Decision & Workflow Pathways

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Key Software & Data Resources for AlphaFold2 Deployment

| Item | Function/Description | Typical Source |

|---|---|---|

| Genetic Databases (UniRef90, BFD, MGnify) | Provide evolutionary context via multiple sequence alignments (MSAs) and templates. | Google Cloud Public Datasets |

| PDB70 & PDB100 | Curated sets of protein structures from the RCSB PDB used for template-based modeling. | HH-suite repositories |

| AlphaFold2 Model Parameters | Pre-trained neural network weights (5 models for monomer, 5 for multimer). | DeepMind GitHub |

| Docker Container Image | Portable, dependency-managed environment containing AlphaFold2 code and all third-party software. | Josh Berson Lab / DeepMind |

| PyMOL/ChimeraX | Molecular visualization software for analyzing predicted 3D structures and confidence scores. | Schrödinger / UCSF |

| AMBER Force Field | Used for the relaxation step, refining steric clashes in the predicted protein backbone. | Integrated in AlphaFold2 |

| ColabFold Jupyter Notebook | Streamlined interface combining fast MMseqs2 search with a distilled AlphaFold2 model. | GitHub/sokrypton/ColabFold |


Diagram Title: AlphaFold2 Core Architecture & Information Flow

Deploying AlphaFold2 effectively, whether via ColabFold for initial investigations or on a local server for intensive research, is foundational to leveraging its predictive power within structural biology and drug discovery. This operational knowledge, contextualized within the architecture's thesis, empowers researchers to design robust, reproducible computational experiments, accelerating the path from genomic sequence to mechanistic hypothesis and therapeutic intervention.

The revolutionary AlphaFold2 deep learning architecture, which accurately predicts protein three-dimensional structures from amino acid sequences, has created a paradigm shift in structural biology. This whitepaper details how this capability is pragmatically applied to two critical phases in drug discovery: identifying novel, disease-relevant protein targets and elucidating the precise mechanism of action (MoA) for potential therapeutic compounds.

Target Identification via Structural Genomics

AlphaFold2’s proteome-scale predictions enable the structural characterization of previously "dark" proteins with no experimental structures.

Protocol: In Silico Saturation of the Druggable Proteome

- Query Definition: Compile a list of human proteins linked to a disease phenotype by genetic or biomarker evidence but lacking structural annotation.

- Structure Prediction: Use the local AlphaFold2 implementation or the AlphaFold Protein Structure Database to generate predicted structures for all targets.

- Pocket Detection: Apply algorithmic binding site detectors (e.g., fpocket, DeepSite) to each predicted structure to identify potential ligand-binding cavities.

- Druggability Assessment: Score identified pockets using empirical metrics like Druggability Score (D-score), pocket volume (Å³), and hydrophobicity. A D-score >0.5 typically suggests druggability.

- Prioritization: Rank targets based on a composite score integrating druggability metrics, genetic evidence strength, and novelty.

Table 1: Quantitative Druggability Assessment for Hypothetical Novel Targets

| Target Protein | UniProt ID | Predicted Confidence (pLDDT) | Top Pocket Volume (Å³) | Druggability Score (D-score) | Genetic Link (GWAS p-value) |

|---|---|---|---|---|---|

| Protein Kinase X | P12345 | 92 | 450 | 0.78 | 3.2e-09 |

| GPCR-Y | Q67890 | 88 | 1200 | 0.92 | 1.5e-12 |

| Metabolic Enzyme Z | A54321 | 85 | 280 | 0.45 | 4.7e-08 |
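
The prioritization step can be sketched as a weighted sum over the columns of Table 1. This is a minimal illustration, not a published scoring scheme: the weights, the cap on -log10(p), and the function name `composite_score` are all assumptions, and the novelty term from the protocol is left out because the table has no column for it.

```python
import math

# Hypothetical targets copied from Table 1 (illustrative values, not real data).
targets = [
    {"name": "Protein Kinase X", "plddt": 92, "d_score": 0.78, "gwas_p": 3.2e-09},
    {"name": "GPCR-Y", "plddt": 88, "d_score": 0.92, "gwas_p": 1.5e-12},
    {"name": "Metabolic Enzyme Z", "plddt": 85, "d_score": 0.45, "gwas_p": 4.7e-08},
]

# Assumed weights; they are not taken from any published scheme.
WEIGHTS = {"druggability": 0.5, "genetics": 0.3, "confidence": 0.2}
GWAS_CAP = 15.0  # assumed cap on -log10(p) so one tiny p-value cannot dominate


def composite_score(target):
    """Weighted sum of three terms, each scaled to the range 0..1."""
    druggability = target["d_score"]
    genetics = min(-math.log10(target["gwas_p"]), GWAS_CAP) / GWAS_CAP
    confidence = target["plddt"] / 100.0
    return (WEIGHTS["druggability"] * druggability
            + WEIGHTS["genetics"] * genetics
            + WEIGHTS["confidence"] * confidence)


ranked = sorted(targets, key=composite_score, reverse=True)
for t in ranked:
    print(f"{t['name']}: {composite_score(t):.3f}")
```

With these assumed weights the hypothetical GPCR-Y ranks first and Metabolic Enzyme Z last; changing the weights changes the ranking, so they should be set and justified per project.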

Mechanism of Action Studies through Molecular Docking

Predicted structures serve as high-quality templates for computational docking to hypothesize how a compound interacts with its target.

Protocol: Molecular Docking with AlphaFold2 Structures

- Structure Preparation: Refine the AlphaFold2 model using side-chain optimization tools (e.g., SCWRL4, RosettaFixBB). Add hydrogens and assign partial charges using molecular modeling software (e.g., UCSF Chimera, Schrödinger Maestro).

- Ligand Preparation: Generate 3D conformations of the compound of interest and optimize its geometry using force fields (e.g., MMFF94).

- Docking Simulation: Perform docking using programs like AutoDock Vina, Glide, or GOLD. Define a search grid centered on the identified binding pocket.

- Pose Scoring & Analysis: Cluster the top-ranking poses (e.g., by RMSD). Analyze key interactions: hydrogen bonds (<3.5 Å), hydrophobic contacts, pi-stacking, and salt bridges.

- Mutagenesis Planning: Based on the predicted binding mode, design point mutations (e.g., alanine scanning) at critical residues for experimental validation.
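
As one way to define the search grid in the docking step, the sketch below computes an axis-aligned box around a set of pocket-lining atoms and formats it with the `center_*` and `size_*` keys that AutoDock Vina reads from its configuration file. The function names, the 5 Å padding, and the coordinates are assumptions for illustration; real coordinates would come from the pocket detector's output.

```python
def docking_box(pocket_coords, padding=5.0):
    """Return (center, size) of an axis-aligned box enclosing the pocket atoms.

    pocket_coords: list of (x, y, z) tuples in Å.
    padding: assumed margin in Å added on every side of the box.
    """
    xs, ys, zs = zip(*pocket_coords)
    mins = (min(xs), min(ys), min(zs))
    maxs = (max(xs), max(ys), max(zs))
    center = tuple((lo + hi) / 2.0 for lo, hi in zip(mins, maxs))
    size = tuple((hi - lo) + 2.0 * padding for lo, hi in zip(mins, maxs))
    return center, size


def vina_config(center, size):
    """Format the box as AutoDock Vina configuration-file lines."""
    lines = []
    for axis, value in zip("xyz", center):
        lines.append(f"center_{axis} = {value:.3f}")
    for axis, value in zip("xyz", size):
        lines.append(f"size_{axis} = {value:.3f}")
    return "\n".join(lines)


# Made-up coordinates standing in for pocket-lining atoms.
pocket = [(10.0, 4.0, -2.0), (14.0, 8.0, 2.0), (12.0, 6.0, 0.0)]
center, size = docking_box(pocket)
print(vina_config(center, size))
```

A box that is too tight can exclude valid poses, while an oversized box slows the search, so the padding is worth tuning against a known ligand where one exists.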

Table 2: Key Docking Results for Compound C1 against GPCR-Y

| Docking Pose | Binding Affinity (ΔG, kcal/mol) | H-Bond Interactions | Hydrophobic Contacts | Predicted ΔΔG upon Mutation R120A |

|---|---|---|---|---|

| Pose 1 | -9.8 | D112, Y305 | F108, V204, W208 | +3.2 kcal/mol |

| Pose 2 | -8.5 | Y305 | V204, W208, L209 | +1.1 kcal/mol |

Visualizing the Integrated Workflow

Workflow for Target ID & MoA Studies

Mapping Allosteric Signaling Pathways

AlphaFold2 models, especially those of multimeric complexes, can suggest allosteric networks linking drug-binding sites to functional regions.

Protocol: Predicting Allosteric Communication Pathways

- Complex Modeling: Use AlphaFold-Multimer to predict the structure of the target protein in complex with a known binding partner.

- Network Construction: Represent the protein structure as a graph of residues (nodes) connected by non-covalent interactions (edges).

- Pathway Analysis: Use graph theory algorithms (e.g., shortest path, betweenness centrality) to identify potential communication routes between the ligand-binding site and active/catalytic site.

- Dynamic Correlation: Perform molecular dynamics simulations on the predicted structure to calculate residue-residue cross-correlation, validating stable communication paths.
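
The network construction and pathway analysis steps can be sketched with a simple contact graph. This is a toy illustration under stated assumptions: an 8 Å Cα-Cα cutoff stands in for "non-covalent interaction", edges are unweighted, and only the shortest-path step is shown (betweenness centrality is omitted). The function names and coordinates are hypothetical.

```python
import math
from collections import deque


def contact_graph(ca_coords, cutoff=8.0):
    """Build an adjacency map: residues are nodes, edges join Cα atoms within cutoff Å.

    ca_coords: dict mapping residue number -> (x, y, z).
    The 8 Å Cα cutoff is an assumed proxy for non-covalent contact.
    """
    residues = sorted(ca_coords)
    graph = {r: set() for r in residues}
    for idx, a in enumerate(residues):
        for b in residues[idx + 1:]:
            if math.dist(ca_coords[a], ca_coords[b]) <= cutoff:
                graph[a].add(b)
                graph[b].add(a)
    return graph


def shortest_path(graph, source, target):
    """Breadth-first search; returns the residue path or None if disconnected."""
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in sorted(graph[node]):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


# Toy coordinates: five residues spaced 6 Å apart along a line.
coords = {i: (6.0 * i, 0.0, 0.0) for i in range(1, 6)}
graph = contact_graph(coords)
print(shortest_path(graph, 1, 5))
```

In practice the source and target would be a ligand-contacting residue and a catalytic residue, and edges are often weighted (for example by interaction energy or MD cross-correlation) before path analysis.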

Predicted Allosteric Network in a Kinase

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Experimental Validation of Computational Predictions

| Reagent / Material | Function in Validation | Example Product / Assay |

|---|---|---|

| HEK293T Cells | Versatile mammalian expression system for producing recombinant human proteins. | Thermo Fisher Expi293F System |

| Baculovirus Expression System | Production of complex, post-translationally modified proteins (e.g., GPCRs, kinases). | Bac-to-Bac (Thermo Fisher) |

| Surface Plasmon Resonance (SPR) Chip | Label-free measurement of binding kinetics (KD, kon, koff) between drug and purified target. | Cytiva Series S Sensor Chip CM5 |

| TR-FRET Assay Kit | High-throughput screening for detecting ligand binding or functional activity changes. | Cisbio KinEASE TK or cAMP kits |

| Site-Directed Mutagenesis Kit | Generation of point mutations to validate predicted critical binding residues. | NEB Q5 Site-Directed Mutagenesis Kit |

| Cryo-EM Grids | High-resolution structure determination of drug-target complexes. | Quantifoil R 1.2/1.3 Au 300 mesh |

Integrating AlphaFold2's predictive power into established biophysical and biochemical pipelines provides an unprecedented, structure-first approach to demystifying drug targets and their engagement by small molecules. This accelerates the transition from genetic association to mechanistic understanding, de-risking early-stage drug discovery.

Refining Predictions and Integrating AlphaFold2 into Research Workflows

The revolutionary success of the AlphaFold2 (AF2) deep learning architecture in accurately predicting protein three-dimensional structures from amino acid sequences has transformed structural biology and drug discovery. However, the practical utility of any single prediction hinges on a researcher's ability to interpret the confidence metrics AF2 provides. Framed within the broader thesis on the AF2 architecture, this guide details how its confidence measures—notably the per-residue pLDDT and the paired predicted aligned error (PAE)—are generated, what they signify, and the specific experimental conditions under which they can be trusted to guide research.

Decoding AlphaFold2's Core Confidence Metrics

AlphaFold2 outputs two primary, quantitative measures of confidence for its predictions.

pLDDT: Per-Residue Local Confidence

The predicted Local Distance Difference Test (pLDDT) is a per-residue estimate (on a 0-100 scale) of the model's local accuracy. It is the model's own prediction of the lDDT-Cα score, a superposition-free metric that compares local interatomic distances in a model against those in a reference structure. It is derived from the output of the final structure module.

- Interpretation: Higher scores indicate higher confidence in the local atomic positioning of that residue.

- Standard Confidence Bands: The following table summarizes the canonical interpretation, though domain-specific validation is essential.

Table 1: Standard Interpretation of pLDDT Scores

| pLDDT Range | Color Code (AF2) | Confidence Level | Typical Structural Interpretation |

|---|---|---|---|

| 90 - 100 | Dark Blue | Very High | Backbone atom positioning is highly reliable. Side chains can often be trusted for docking. |

| 70 - 90 | Light Blue | High | Backbone is generally reliable. Useful for analyzing fold and core structure. |

| 50 - 70 | Yellow | Low | The prediction is potentially ambiguous. Caution required; regions may be disordered or flexible. |

| 0 - 50 | Orange | Very Low | Prediction should not be trusted. Often corresponds to intrinsically disordered regions (IDRs). |
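
AlphaFold2 writes pLDDT into the B-factor field of its PDB output, so the bands above can be applied with a few lines of parsing. The sketch below is illustrative: `plddt_band`, `read_plddt`, and the toy record builder `ca_record` are hypothetical names, and treating each band's lower bound as inclusive is an assumption, since the table's ranges share their boundary values.

```python
def plddt_band(score):
    """Map a pLDDT value to the confidence bands of the table above.

    Lower bounds are treated as inclusive (an assumption).
    """
    if score >= 90:
        return "Very High"
    if score >= 70:
        return "High"
    if score >= 50:
        return "Low"
    return "Very Low"


def read_plddt(pdb_lines):
    """Read per-residue pLDDT from the B-factor field of Cα ATOM records.

    Returns a dict keyed by (chain ID, residue number).
    """
    scores = {}
    for line in pdb_lines:
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            chain = line[21]
            resnum = int(line[22:26])
            scores[(chain, resnum)] = float(line[60:66])
    return scores


def ca_record(serial, resname, chain, resnum, plddt):
    """Build a toy fixed-width Cα ATOM record with dummy coordinates."""
    return (f"ATOM  {serial:>5}  CA  {resname:>3} {chain}{resnum:>4}    "
            f"{0.0:8.3f}{0.0:8.3f}{0.0:8.3f}{1.0:6.2f}{plddt:6.2f}")


# Two made-up residues standing in for a real AlphaFold2 PDB file.
lines = [ca_record(2, "MET", "A", 1, 42.10), ca_record(10, "LYS", "A", 2, 93.25)]
for key, score in read_plddt(lines).items():
    print(key, score, plddt_band(score))
```

For a real model, `pdb_lines` would simply be the lines of the predicted PDB file, for example from `open(path).read().splitlines()`.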

Predicted Aligned Error (PAE): Inter-Residue Relational Confidence

The predicted aligned error (PAE) represents AlphaFold2's self-estimated positional error (in Ångströms) for residue i when the predicted and true structures are aligned on residue j. It is output as a 2D N x N matrix, which is not necessarily symmetric.

- Interpretation: Low PAE values (e.g., <10 Å) between two residues indicate high confidence in their relative spatial placement. High values (>20 Å) indicate low confidence in their relationship.

- Primary Use: PAE is critical for assessing the confidence in domain-domain orientations and identifying possible errors in quaternary structure assembly from multimer predictions.

Table 2: Interpreting Predicted Aligned Error (PAE)

| PAE Value (Å) | Confidence in Inter-Residue Relationship | Structural Implication |

|---|---|---|

| < 10 | High | Relative spatial positioning of the two residues is predicted with high accuracy. |

| 10 - 15 | Medium | Moderate confidence. Relative position may have some uncertainty. |

| > 15 | Low | Low confidence in the distance/orientation between the two residues. Suggests flexible linker or incorrect domain packing. |

Diagram 1: Origin of confidence metrics in AlphaFold2

Experimental Protocols for Validating Confidence Metrics

The following methodologies are standard for empirically testing the correlation between AF2's predicted confidence and experimental reality.

Protocol: Benchmarking pLDDT Against Experimental B-Factors

Objective: To quantify the correlation between predicted confidence (pLDDT) and experimental measures of structural flexibility/uncertainty (Crystallographic B-factors).

- Dataset Curation: Select a diverse set of high-resolution (<2.0 Å) X-ray crystal structures from the PDB, ensuring they are not part of the AF2 training set.

- Prediction Generation: Run the target protein sequences through a standard, non-fine-tuned AlphaFold2 inference pipeline to obtain predicted structures and pLDDT scores.

- Data Alignment & Processing:

- Superimpose the AF2 prediction onto the experimental structure using a global alignment tool (e.g., TM-align).

- Extract the pLDDT value for each residue.

- From the experimental PDB file, extract the B-factor for the Cα atom of the corresponding residue.

- Convert B-factors to normalized values or predicted RMSD estimates for direct comparison if needed.

- Statistical Analysis: Calculate the per-chain Pearson/Spearman correlation coefficient between the pLDDT and the B-factor. Plot pLDDT vs. B-factor as a scatter plot for visual inspection of the inverse relationship.
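
The statistical analysis step can be sketched in plain Python as follows. The per-residue values are made up for illustration, and the helper names are hypothetical; in a real benchmark the two lists would hold the aligned pLDDT and Cα B-factor values for one chain.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def ranks(values):
    """Average ranks (1-based), with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Made-up per-residue values for one chain (not real data).
plddt = [95.0, 91.0, 84.0, 72.0, 55.0, 40.0]
b_factor = [12.0, 15.0, 22.0, 30.0, 48.0, 70.0]
print(f"Pearson  r   = {pearson(plddt, b_factor):.3f}")
print(f"Spearman rho = {spearman(plddt, b_factor):.3f}")
```

Both coefficients are negative for this toy data, reflecting the inverse relationship the protocol looks for. Spearman is often the safer choice here because the pLDDT to B-factor relationship need not be linear.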

Protocol: Assessing Domain Orientation Confidence Using PAE

Objective: To determine if low-confidence inter-domain PAE signals correspond to genuine flexibility or prediction error.

- Target Selection: Identify proteins with two or more clearly defined structural domains from a source like CATH or SCOP.

- AF2 Prediction: Run the full-length sequence through AlphaFold2, using the AlphaFold-Multimer pipeline when the domains of interest lie on separate chains.

- PAE Matrix Analysis: Generate the PAE plot. Define domain boundaries and calculate the average PAE value between domains versus within domains.

- Comparative Structural Analysis:

- If an experimental structure exists: Compare the inter-domain angle in the prediction to the experiment. A high average inter-domain PAE often coincides with large angular deviations.

- If no structure exists: Perform molecular dynamics (MD) simulations on the predicted model. High inter-domain PAE regions often show elevated flexibility in MD root-mean-square fluctuation (RMSF) plots.

- Conclusion: A high inter-domain PAE does not necessarily mean the prediction is "wrong," but rather that the model is uncertain. This often indicates a flexible or dynamic orientation in solution, a critical insight for functional studies.
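
The PAE matrix analysis step reduces to averaging blocks of the matrix. The sketch below assumes the PAE matrix is already loaded as a list of lists and that domain boundaries are given as 0-based index ranges; the function names and the toy matrix are illustrative only.

```python
def mean_pae(pae, rows, cols):
    """Mean of pae[i][j] over i in rows and j in cols (0-based indices)."""
    values = [pae[i][j] for i in rows for j in cols]
    return sum(values) / len(values)


def domain_pae_summary(pae, domain_a, domain_b):
    """Average PAE within each domain and between the two domains.

    The inter-domain value averages both off-diagonal blocks because the
    PAE matrix is not guaranteed to be symmetric. Intra-domain means
    include the diagonal entries.
    """
    inter = (mean_pae(pae, domain_a, domain_b)
             + mean_pae(pae, domain_b, domain_a)) / 2.0
    return {
        "intra_a": mean_pae(pae, domain_a, domain_a),
        "intra_b": mean_pae(pae, domain_b, domain_b),
        "inter": inter,
    }


# Toy 4-residue matrix in Å: residues 0-1 form domain A, residues 2-3 domain B.
pae = [
    [0.5, 2.0, 18.0, 20.0],
    [2.0, 0.5, 19.0, 21.0],
    [17.0, 18.0, 0.5, 3.0],
    [19.0, 22.0, 3.0, 0.5],
]
summary = domain_pae_summary(pae, range(0, 2), range(2, 4))
print(summary)
```

Low intra-domain values combined with a high inter-domain value, as in this toy matrix, is the pattern the protocol associates with confidently folded domains whose relative orientation is uncertain.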

Diagram 2: Decision tree for using AF2 confidence metrics

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for AlphaFold2 Confidence Analysis & Validation

| Item / Solution | Function & Relevance to Confidence Assessment |

|---|---|

| AlphaFold Protein Structure Database | Provides immediate access to pre-computed AF2 models for most proteomes. Serves as a first-point reference for pLDDT and PAE. |

| ColabFold (Google Colab Notebook) | A streamlined, accessible implementation of AF2. Essential for running custom predictions, generating confidence metrics, and performing quick iterations (e.g., with different MSAs). |

| LocalAlphaFold (Docker Container) | A local installation solution for high-throughput or sensitive prediction runs, allowing full control over inference parameters which can affect confidence metrics. |

| PyMOL / ChimeraX w/ AF2 Plugins | Visualization software with plugins to directly color structures by pLDDT and display PAE matrices. Critical for intuitive interpretation. |

| P2Rank | A tool for predicting ligand-binding pockets. Used to check whether low-pLDDT regions overlap predicted binding sites, where a low confidence score may understate a functionally important region. |

| SWISS-MODEL Template Identification | Used to check if a low-confidence (low pLDDT/high PAE) region has a homologous template in the PDB. Its absence suggests a novel fold/interface with higher uncertainty. |

| GROMACS / AMBER | Molecular Dynamics simulation suites. Used to validate high-PAE regions by testing the stability and flexibility of predicted domain orientations. |

| SAXS (Small-Angle X-Ray Scattering) | An experimental technique to validate the overall shape and flexibility of a solution-state protein, providing a key check on quaternary structures implied by PAE. |

When to Distrust: Key Limitations and Artifacts

Trust in predictions must be tempered by understanding the architecture's limitations:

- Novel Folds without Evolutionary Signals: AF2 confidence plummets for proteins with few homologous sequences, as its core logic is built on co-evolution.