From Single Layers to Systems Biology: A Complete Guide to Modern Multi-Omics Analysis for Drug Discovery

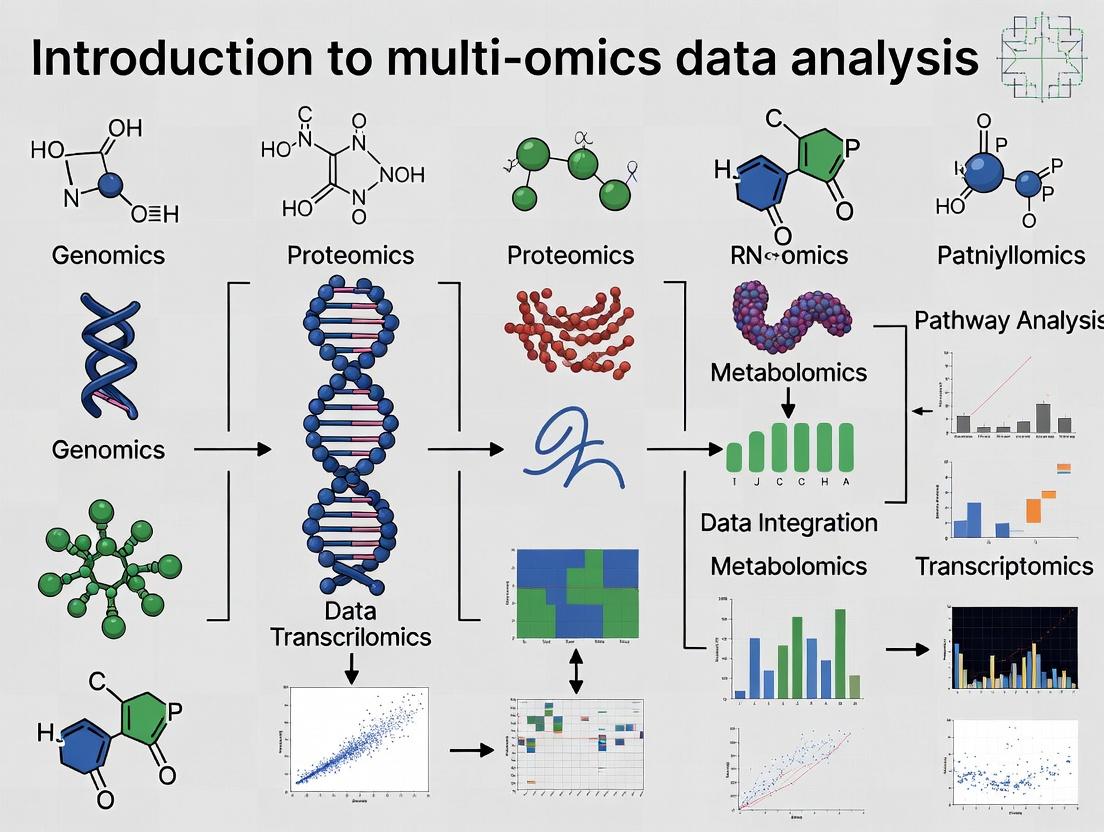


Abstract

This comprehensive guide introduces researchers, scientists, and drug development professionals to the integrated analysis of multi-omics data. We begin by defining the core 'omics' layers—genomics, transcriptomics, proteomics, and metabolomics—and explaining the power of their integration for uncovering complex biological mechanisms. We then navigate through current methodologies, including batch effect correction, dimensionality reduction, and network analysis, with a focus on real-world applications in biomarker discovery and target identification. A dedicated section addresses common pitfalls in data integration, quality control, and statistical power, providing actionable troubleshooting strategies. Finally, we evaluate methods for validating multi-omics findings and comparing analysis tools. This article provides a foundational yet advanced roadmap for implementing robust multi-omics strategies to accelerate translational research.

What is Multi-Omics? Demystifying Genomics, Transcriptomics, Proteomics, and Metabolomics for Systems Biology

The systematic analysis of biological systems requires an integrated approach that goes beyond single data layers. This section details the core omics tiers (genomics, transcriptomics, proteomics, and metabolomics) that form the foundational data strata. Integrating these layers is essential for constructing comprehensive biological network models and for identifying translatable biomarkers in complex disease and drug development.

The Hierarchical Omics Landscape: Core Definitions & Technologies

The flow of biological information from genotype to phenotype is captured through successive omics layers. Each layer employs distinct technologies for large-scale measurement.

Table 1: The Core Omics Tiers: Scope, Primary Technologies, and Output

| Omics Layer | Analytical Scope | Core Technology | Primary Output | Typical Sample Input |
|---|---|---|---|---|
| Genomics | DNA sequence, structure, variation | Next-Generation Sequencing (NGS), Microarrays | Sequence variants (SNPs, Indels), structural variants, epigenetic marks | DNA (genomic, bisulfite-treated) |
| Transcriptomics | RNA abundance & sequence | RNA-Seq, Microarrays, qRT-PCR | Gene expression levels, splice variants, non-coding RNA profiles | Total RNA, mRNA |
| Proteomics | Protein identity, quantity, modification | Mass Spectrometry (LC-MS/MS), Antibody Arrays | Protein identification, abundance, post-translational modifications (PTMs) | Proteins/Peptides (cell lysate, biofluid) |
| Metabolomics | Small-molecule metabolite profiles | Mass Spectrometry (GC-MS, LC-MS), NMR Spectroscopy | Metabolite identification and relative/absolute concentration | Serum, plasma, urine, tissue extract |

Detailed Experimental Protocols for Key Omics Analyses

Protocol: Whole-Transcriptome RNA Sequencing (RNA-Seq)

Objective: To profile the complete transcriptome, quantifying gene expression levels and identifying splice variants.

- RNA Extraction & QC: Isolate total RNA using TRIzol or silica-membrane kits. Assess integrity (RIN > 8.0) via Bioanalyzer.

- Library Preparation: Deplete ribosomal RNA or enrich poly-A mRNA. Fragment RNA, synthesize cDNA, and ligate sequencing adapters. Amplify via PCR.

- Sequencing: Load library onto an NGS platform (e.g., Illumina NovaSeq) for paired-end sequencing (e.g., 2x150 bp). Target 30-50 million reads per sample.

- Bioinformatics Analysis: Align reads to a reference genome (STAR, HISAT2). Quantify gene-level counts (featureCounts). Perform differential expression analysis (DESeq2, edgeR).
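Before differential testing, DESeq2 scales raw counts by per-sample size factors estimated with the median-of-ratios method. The sketch below is a simplified NumPy re-implementation meant only to build intuition; in practice DESeq2's own `estimateSizeFactors` should be used. It assumes a genes x samples matrix of raw counts and, as the standard estimator does, ignores genes with a zero count in any sample.

```python
import numpy as np

def median_of_ratios_size_factors(counts: np.ndarray) -> np.ndarray:
    """Estimate per-sample size factors from a genes x samples count matrix.

    Simplified sketch of the median-of-ratios estimator used by DESeq2.
    """
    counts = np.asarray(counts, dtype=float)
    # Keep only genes with a non-zero count in every sample (log of 0 is undefined).
    expressed = np.all(counts > 0, axis=1)
    log_counts = np.log(counts[expressed])
    # The log geometric mean of each gene across samples acts as a pseudo-reference sample.
    log_geo_means = log_counts.mean(axis=1, keepdims=True)
    # Size factor = median ratio of each sample to the pseudo-reference.
    return np.exp(np.median(log_counts - log_geo_means, axis=0))

# Toy example: sample 2 was sequenced exactly twice as deeply as sample 1.
counts = np.array([[10, 20], [100, 200], [5, 10], [0, 3]])
sf = median_of_ratios_size_factors(counts)
normalized = counts / sf  # divide each column by its size factor
print(np.round(sf, 3))  # -> [0.707 1.414]
```

Using the median rather than the total count makes the factors robust to a handful of very highly expressed or strongly differentially expressed genes.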

Protocol: Label-Free Quantitative Proteomics (LC-MS/MS)

Objective: To identify and quantify proteins in complex biological samples.

- Protein Extraction & Digestion: Lyse cells/tissue in RIPA buffer with protease inhibitors. Reduce (DTT), alkylate (IAA), and digest proteins with trypsin overnight at 37°C.

- Desalting: Desalt peptides using C18 solid-phase extraction (SPE) columns.

- LC-MS/MS Analysis: Separate peptides on a reverse-phase C18 nano-UHPLC column with a 60-90 min organic gradient. Analyze eluting peptides with a high-resolution tandem mass spectrometer (e.g., Q-Exactive) in data-dependent acquisition (DDA) mode.

- Data Processing: Identify proteins by searching MS/MS spectra against a protein database (MaxQuant, Proteome Discoverer). Quantify based on precursor ion intensity.
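Label-free precursor intensities are usually log-transformed and normalized across runs before proteins are compared. One simple option, assumed here for illustration rather than prescribed by the protocol, is to median-center the log2 intensities of each sample. The sketch takes a proteins x samples matrix and treats zero intensities as missing (NaN).

```python
import numpy as np

def log2_median_normalize(intensities: np.ndarray) -> np.ndarray:
    """Log2-transform a proteins x samples intensity matrix and median-center each sample.

    Zeros are treated as missing values (NaN), as is common for label-free data.
    """
    x = np.asarray(intensities, dtype=float)
    x = np.where(x > 0, x, np.nan)
    log_x = np.log2(x)
    # Shift each sample so that its median equals the mean of all sample medians.
    sample_medians = np.nanmedian(log_x, axis=0)
    return log_x - sample_medians + np.nanmean(sample_medians)

# Hypothetical intensities: sample 2 had twice the total signal of sample 1.
raw = np.array([[1e6, 2e6], [4e6, 8e6], [2e6, 4e6]])
norm = log2_median_normalize(raw)
```

After normalization, the two columns in this toy example become identical, because the only difference between them was a global loading factor.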

Protocol: Untargeted Metabolomics via LC-MS

Objective: To comprehensively profile small molecules in a biological sample.

- Metabolite Extraction: Add cold methanol/acetonitrile/water (4:4:2) to sample for protein precipitation. Vortex, centrifuge, and collect supernatant.

- LC-MS Analysis: Analyze in both positive and negative ionization modes. Use HILIC chromatography for polar metabolites and C18 for lipids. Employ a high-resolution mass spectrometer (e.g., Orbitrap) in full-scan mode (m/z 70-1000).

- Data Preprocessing: Perform peak picking and alignment using software such as XCMS or MS-DIAL, then annotate metabolites against public spectral libraries (mzCloud, GNPS).

- Statistical Analysis: Use multivariate analysis (PCA, PLS-DA) to identify differentially abundant metabolites.
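The PCA step can be sketched with NumPy alone. The example assumes a samples x metabolites matrix of already log-transformed peak areas (hypothetical random data here). It autoscales each feature to mean 0 and unit variance, then derives scores and explained-variance ratios from a singular value decomposition. PLS-DA is supervised, needs class labels, and is not shown.

```python
import numpy as np

def pca_autoscaled(x: np.ndarray, n_components: int = 2):
    """Return PCA scores and explained-variance ratios for a samples x features matrix."""
    x = np.asarray(x, dtype=float)
    # Autoscale: center each feature and divide by its sample standard deviation.
    z = (x - x.mean(axis=0)) / x.std(axis=0, ddof=1)
    u, s, vt = np.linalg.svd(z, full_matrices=False)
    scores = u[:, :n_components] * s[:n_components]
    explained = s**2 / np.sum(s**2)
    return scores, explained[:n_components]

rng = np.random.default_rng(0)
# Hypothetical data: 6 samples x 4 metabolites, first 3 samples shifted on two features.
x = rng.normal(size=(6, 4))
x[:3, :2] += 5.0
scores, explained = pca_autoscaled(x)
print(np.round(explained, 2))
```

Autoscaling gives low- and high-abundance metabolites equal weight. Without it, PCA would be dominated by the most intense features.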

Visualizing Omics Relationships and Workflows

Diagram 1: Central Dogma & Omics Flow

Diagram 2: Multi-Omics Analysis Pipeline

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Reagents and Kits for Core Omics Workflows

| Item Name | Category | Function in Omics Research |
|---|---|---|
| QIAGEN DNeasy/RNeasy Kits | Genomics/Transcriptomics | Silica-membrane technology for high-purity, rapid isolation of genomic DNA or total RNA from various sample types. |
| Illumina TruSeq RNA Library Prep Kit | Transcriptomics | For preparation of stranded, paired-end RNA-seq libraries from total RNA, with mRNA enrichment or rRNA depletion. |
| Thermo Fisher Pierce BCA Protein Assay Kit | Proteomics | Colorimetric detection and quantification of total protein concentration, critical for sample normalization prior to MS analysis. |
| Trypsin, Sequencing Grade (Promega) | Proteomics | Protease that cleaves specifically C-terminal to lysine and arginine residues, generating peptides for LC-MS/MS analysis. |
| C18 Solid-Phase Extraction (SPE) Cartridges | Metabolomics/Proteomics | Desalting and purification of peptides or metabolites from complex biological extracts prior to mass spectrometry. |
| Deuterated Internal Standards (e.g., Cambridge Isotope Laboratories) | Metabolomics | Stable isotope-labeled compounds spiked into samples for quality control and to improve quantification accuracy in MS. |
| Bio-Rad Protease & Phosphatase Inhibitor Cocktails | General | Added to lysis buffers to prevent protein degradation and preserve post-translational modification states during extraction. |


In the field of multi-omics data analysis, a paradigm shift is underway from single-omics investigations to integrative approaches. This whitepaper posits that the strategic integration of genomics, transcriptomics, proteomics, and metabolomics data uncovers systemic biological insights that are fundamentally inaccessible through the analysis of any single layer in isolation. This emergent property—where the integrated whole is greater than the sum of its individual omics parts—is the Core Hypothesis of modern systems biology. We validate this through current evidence, provide a technical framework for integration, and outline its critical application in accelerating therapeutic discovery.

Quantitative Evidence for Integrative Superiority

Empirical studies consistently demonstrate that multi-omics integration yields a more complete and accurate picture of biological systems than unimodal analysis.

Table 1: Comparative Performance of Single vs. Multi-Omics Analyses in Disease Subtyping

| Study Focus | Single-Omics Approach (Best) | Classification Accuracy | Multi-Omics Integrated Approach | Classification Accuracy | Key Integrated Insight |
|---|---|---|---|---|---|
| Breast Cancer Subtypes | Transcriptomics (RNA-Seq) | 82-88% | RNA-Seq + DNA Methylation + miRNA | 94-97% | Revealed epigenetic drivers of transcriptional heterogeneity |
| Alzheimer's Disease Progression | Proteomics (Mass Spec) | 75-80% | GWAS + RNA-Seq + Proteomics + Metabolomics | 89-92% | Linked genetic risk loci to downstream metabolic pathway dysfunction |
| Colorectal Cancer Prognosis | Genomics (Mutation Panel) | 70-78% | WES + Transcriptomics + Immunohistochemistry | 91-95% | Identified immune-cold tumors masked by mutational load alone |

Table 2: Increase in Mechanistically Interpretable Findings from Integration

| Research Goal | Number of Significant Hits (Single-Omics) | Number of Significant Hits (Integrated) | Fold Increase | Nature of Gained Insights |
|---|---|---|---|---|
| Biomarker Discovery for NSCLC | 12 candidate proteins | 38 multi-omic features | 3.2x | Protein-metabolite complexes as superior early detectors |
| Pathway Elucidation in IBD | 3 dysregulated pathways | 11 coherent inter-omic pathways | 3.7x | Cascade from SNP->splicing->protein activity->metabolite output |
| Drug Target Prioritization | 5 high-interest genes | 15 ranked target modules | 3.0x | Contextualized druggable proteins within active network neighborhoods |

Foundational Methodologies for Multi-Omics Integration

Integration strategies are commonly grouped by when the omics layers are combined (early, intermediate, or late) and by whether they draw on a priori biological knowledge or are purely data-driven.

3.1 Early Integration (Data-Driven)

- Protocol: Concatenation-Based Fusion for Predictive Modeling

- Data Preprocessing: Independently normalize and scale each omics dataset (e.g., Z-score for RNA, Min-Max for methylation beta-values).

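- Feature Reduction: Apply omics-specific dimensionality reduction (e.g., PCA fitted separately to each omics block). Retain the top components explaining >85% of variance; nonlinear embeddings such as UMAP do not report explained variance, so this criterion applies only to linear methods like PCA.

- Matrix Concatenation: Horizontally concatenate the reduced matrices from n samples across k omics layers to form a unified feature matrix of size n x (p1+p2+...+pk), where pi is the number of features retained for layer i.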

- Joint Analysis: Feed the concatenated matrix into a machine learning model (e.g., Random Forest, Neural Network) for classification or regression.

- Validation: Use strict cross-validation in which all data from a single patient are kept within the same fold, and fit scaling and dimensionality-reduction steps on the training folds only, to prevent data leakage.
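The concatenation and leakage-safe validation steps above can be sketched as follows. The sketch makes several assumptions. Each omics block is a samples x features NumPy array with rows already aligned by sample. `patient_ids` is a hypothetical grouping array. Both blocks are z-scored here for brevity (the protocol's min-max option for methylation could be substituted). PCA reduction and the downstream model are omitted. The helpers `grouped_folds` and `zscore_fit_apply` are illustrative names, not library functions.

```python
import numpy as np

def grouped_folds(patient_ids, n_folds=3):
    """Assign whole patients to folds so that no patient spans train and test."""
    unique = np.unique(patient_ids)
    fold_of_patient = {p: i % n_folds for i, p in enumerate(unique)}
    return np.array([fold_of_patient[p] for p in patient_ids])

def zscore_fit_apply(train, test):
    """Z-score both splits using statistics from the training split only."""
    mu, sd = train.mean(axis=0), train.std(axis=0)
    sd = np.where(sd == 0, 1.0, sd)  # guard against constant features
    return (train - mu) / sd, (test - mu) / sd

rng = np.random.default_rng(1)
rna, meth = rng.normal(size=(8, 5)), rng.uniform(size=(8, 3))  # hypothetical blocks
patient_ids = np.array(["p1", "p1", "p2", "p2", "p3", "p3", "p4", "p4"])
folds = grouped_folds(patient_ids)

for k in range(3):
    test_mask = folds == k
    blocks_train, blocks_test = [], []
    for block in (rna, meth):
        tr, te = zscore_fit_apply(block[~test_mask], block[test_mask])
        blocks_train.append(tr)
        blocks_test.append(te)
    # Horizontal concatenation: n x (p1 + p2) feature matrices for this fold.
    x_train, x_test = np.hstack(blocks_train), np.hstack(blocks_test)
    assert x_train.shape[1] == rna.shape[1] + meth.shape[1]
```

Fitting the scaler inside each fold matters because test-set means and variances would otherwise leak into training, inflating cross-validated accuracy.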

3.2 Late Integration (Knowledge-Driven)

- Protocol: Pathway-Centric Integration Using Public Databases

- Individual Analysis: Perform differential expression/abundance analysis per omics layer. Generate lists of significant entities (e.g., genes, proteins, metabolites).

- Identifier Mapping: Map all entities to standard identifiers (e.g., Ensembl ID, Uniprot ID, HMDB ID) using tools like g:Profiler or MetaboAnalyst.

- Pathway Enrichment: Conduct over-representation analysis (ORA) or gene set enrichment analysis (GSEA) per omics list against curated databases (KEGG, Reactome).

- Consensus Scoring: Integrate pathway scores using statistical meta-analysis methods (e.g., Fisher's combined probability test) or rank-aggregation (e.g., Robust Rank Aggregation).

- Causal Inference: Use prior knowledge graphs (e.g., directed, signed interactions from OmniPath; largely undirected associations from STRING) to propose directional flow from genomic variants to metabolomic changes, filling omics gaps with established interactions.
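Fisher's combined probability test, named in the consensus-scoring step, combines k p-values for the same pathway through X = -2 Σ ln p_i. Under the joint null, X follows a chi-squared distribution with 2k degrees of freedom. Because the degrees of freedom are even, the survival function has a closed form, so this sketch needs only the standard library. It assumes the per-omics p-values are independent, which is often only approximately true for biologically linked layers.

```python
import math

def fisher_combined_pvalue(pvalues):
    """Combine independent p-values with Fisher's method.

    Statistic X = -2 * sum(ln p_i) ~ chi-squared with 2k degrees of freedom.
    For even df the chi-squared survival function is
    exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!.
    """
    k = len(pvalues)
    if k == 0 or any(not 0 < p <= 1 for p in pvalues):
        raise ValueError("need at least one p-value in (0, 1]")
    half_stat = -sum(math.log(p) for p in pvalues)  # this is X / 2
    term, total = 1.0, 1.0
    for i in range(1, k):
        term *= half_stat / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half_stat) * total

# Hypothetical per-layer p-values for one pathway (transcriptome, proteome, metabolome).
combined = fisher_combined_pvalue([0.04, 0.10, 0.03])
```

With a single p-value, the function returns that p-value unchanged, which is a quick sanity check on the formula.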

3.3 Intermediate/Hybrid Integration

- Protocol: Multi-Omics Factor Analysis (MOFA+)

- Data Input: Prepare omics datasets as a list of matrices, aligned by common samples.

- Model Training: Run MOFA+, a statistical framework that decomposes the data into a set of latent factors that capture shared and specific variations across omics types.

- Factor Interpretation: Correlate factors with sample covariates (e.g., disease status, survival) to interpret biological meaning.

- View-Specific Weights: Analyze the weight of each feature (gene, protein) in each factor per omics view to identify key drivers of inter-omic patterns.
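MOFA+ is a Bayesian model fitted with its own software (the `mofapy2` Python package and the `MOFA2` R/Bioconductor package). The sketch below is a deliberately simplified stand-in, not MOFA+. It runs an SVD on the concatenated, per-view scaled data to convey the idea behind factor interpretation and view-specific weights, then reports what share of each factor's loadings falls in each view. It has no sparsity priors, no view-specific noise model, and no handling of missing samples. The data and the simulated `latent` driver are hypothetical.

```python
import numpy as np

def joint_svd_factors(views: dict, n_factors: int = 2):
    """Simplified joint factorization of sample-aligned views (not MOFA+).

    Each view is a samples x features array; rows must refer to the same samples.
    """
    names = list(views)
    scaled = []
    for name in names:
        v = np.asarray(views[name], dtype=float)
        v = v - v.mean(axis=0)
        # Divide by the block's Frobenius norm so large views do not dominate.
        scaled.append(v / np.linalg.norm(v))
    x = np.hstack(scaled)
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    factors = u[:, :n_factors] * s[:n_factors]  # sample scores per factor
    weights = vt[:n_factors].T                  # feature loadings per factor
    # Split loadings back into views and measure each view's share per factor.
    bounds = np.cumsum([0] + [v.shape[1] for v in scaled])
    share = {name: np.sum(weights[bounds[i]:bounds[i + 1]] ** 2, axis=0)
             for i, name in enumerate(names)}
    return factors, weights, share

rng = np.random.default_rng(2)
latent = rng.normal(size=(20, 1))  # hypothetical shared driver
views = {"rna": latent @ rng.normal(size=(1, 6)) + 0.1 * rng.normal(size=(20, 6)),
         "protein": latent @ rng.normal(size=(1, 4)) + 0.1 * rng.normal(size=(20, 4))}
factors, weights, share = joint_svd_factors(views)
# Correlate factor 1 with a sample covariate (here the simulated driver itself).
r = np.corrcoef(factors[:, 0], latent[:, 0])[0, 1]
```

Because each loading vector has unit length, the per-view shares of a factor sum to 1. A factor dominated by one view is view-specific; a factor with substantial shares in several views captures shared variation.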

Visualization of Core Integration Concepts

Multi-Omics Integration Reveals Latent Drivers

A Causally Linked Multi-Omics Cascade

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Reagents and Platforms for Multi-Omics Research

| Category | Product/Platform Example | Core Function in Integration |
|---|---|---|
| Sample Prep (Nucleic Acids) | PAXgene Blood RNA/DNA System | Enables simultaneous stabilization of RNA and DNA from single blood sample, preserving molecular relationships. |
| Sample Prep (Proteins) | TMTpro 18-plex Isobaric Label Reagents | Allows multiplexed quantitative proteomics of up to 18 samples, directly aligning with transcriptomic cohorts. |
| Single-Cell Multi-Omics | 10x Genomics Multiome ATAC + Gene Expression | Profiles chromatin accessibility (ATAC) and transcriptome (RNA) from the same single nucleus. |
| Spatial Multi-Omics | NanoString GeoMx Digital Spatial Profiler | Enables region-specific, high-plex protein and RNA quantification from a single tissue section. |
| Mass Spectrometry | Thermo Scientific Orbitrap Astral Mass Spectrometer | Delivers deep-coverage proteomics and metabolomics, enabling direct correlation from a shared analytical platform. |
| Data Integration Software | QIAGEN OmicSoft Studio | Commercial platform for harmonizing, visualizing, and statistically analyzing disparate omics datasets. |
| Open-Source Analysis Suite | Snakemake or Nextflow Workflow Managers | Orchestrates reproducible, modular pipelines for each omics type and their integration. |


Key Technologies and Platforms Generating Each Data Type (NGS, Mass Spectrometry, Arrays)

In the burgeoning field of multi-omics data analysis, the integration of disparate biological data types is paramount for constructing a holistic understanding of complex systems. This technical guide details the core technologies and platforms that generate the primary data types of genomics, transcriptomics, proteomics, and metabolomics studies: next-generation sequencing (NGS), mass spectrometry, and arrays. A precise understanding of these data-generation engines is critical for designing robust integrative analyses in drug development and basic research.

Next-Generation Sequencing (NGS) Technologies

NGS platforms enable high-throughput, parallel sequencing of DNA and RNA, forming the bedrock of genomics and transcriptomics data.

Core Platforms & Technologies

| Platform (Vendor) | Core Technology | Key Output Data Type | Max Read Length | Throughput per Run (Approx.) | Primary Applications |
|---|---|---|---|---|---|
| NovaSeq X Series (Illumina) | Sequencing-by-Synthesis (SBS) with reversible terminators | Paired-end reads (FASTQ) | 2x 150 bp | Up to 16 Tb (X Plus) | Whole-genome, exome, transcriptome sequencing |
| Revio (PacBio) | Single Molecule, Real-Time (SMRT) Sequencing | HiFi reads (FASTQ) | 15-20 kb | 360 Gb | De novo assembly, variant detection, isoform sequencing |
| PromethION 2 (Oxford Nanopore) | Nanopore-based electronic sequencing | Long, direct reads (FAST5/FASTQ) | >4 Mb demonstrated | Up to 290 Gb | Ultra-long reads, real-time sequencing, direct RNA seq |

Detailed NGS Experimental Protocol: RNA-Sequencing

Objective: To generate a quantitative profile of the transcriptome.

Key Reagents & Kits: See "The Scientist's Toolkit" below.

Workflow:

- Total RNA Isolation: Extract RNA using guanidinium thiocyanate-phenol-chloroform (e.g., TRIzol) or column-based methods. Assess integrity (RIN > 8) via Bioanalyzer.

- Poly-A Selection or rRNA Depletion: Enrich for mRNA using oligo(dT) beads or remove ribosomal RNA using probe-based kits.

- cDNA Library Construction:

- Fragment RNA (or cDNA) to ~200-300 bp.

- Synthesize first-strand cDNA using reverse transcriptase and random hexamers/dT primers.

- Synthesize second-strand cDNA with DNA Polymerase I and RNase H.

- End-repair, A-tailing, and ligation of platform-specific adapters with sample indexes (barcodes).

- Library Amplification: Perform limited-cycle PCR to enrich for adapter-ligated fragments.

- Library QC: Quantify using fluorometry (Qubit) and assess size distribution (Bioanalyzer/TapeStation).

- Sequencing: Pool libraries at equimolar ratios and load onto the chosen NGS platform (e.g., Illumina NovaSeq) for cluster generation and sequencing-by-synthesis.
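Equimolar pooling requires converting each library's mass concentration into molarity. A common approximation takes about 660 g/mol per base pair of double-stranded DNA, giving nM = (ng/µL × 10^6) / (660 × mean fragment length in bp). The sketch below applies that formula to Qubit and Bioanalyzer/TapeStation readings and returns the volume of each library that contributes the same number of femtomoles. The 20 fmol target and the library readings are hypothetical.

```python
def library_molarity_nm(conc_ng_per_ul: float, mean_size_bp: float) -> float:
    """Convert dsDNA library concentration (ng/uL) to molarity (nM).

    Assumes ~660 g/mol per base pair of double-stranded DNA.
    """
    return conc_ng_per_ul * 1e6 / (660.0 * mean_size_bp)

def equimolar_volumes(libraries: dict, target_fmol: float = 20.0) -> dict:
    """Volume (uL) of each library supplying target_fmol; note 1 nM == 1 fmol/uL."""
    return {name: target_fmol / library_molarity_nm(conc, size)
            for name, (conc, size) in libraries.items()}

# Hypothetical QC readings: (Qubit ng/uL, mean fragment size in bp).
libs = {"S1": (3.3, 500), "S2": (6.6, 500), "S3": (2.0, 300)}
vols = equimolar_volumes(libs)
for name, vol in vols.items():
    print(f"{name}: {vol:.2f} uL")
# S1: 2.00 uL
# S2: 1.00 uL
# S3: 1.98 uL
```

Fragment size matters as much as mass concentration here: at equal ng/µL, a library of shorter fragments contains more molecules and needs a smaller volume.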

Diagram: Standard RNA-Seq Library Prep Workflow

Mass Spectrometry (MS) Platforms

MS platforms ionize molecules and separate the resulting ions by mass-to-charge ratio (m/z), generating proteomic and metabolomic data.

Core Platforms & Technologies

| Platform Category (Vendor Examples) | Ionization Source | Mass Analyzer(s) | Key Output Data Type | Key Applications |
|---|---|---|---|---|
| High-Resolution Tandem MS (Thermo Orbitrap Eclipse, Bruker timsTOF) | Electrospray (ESI), Nano-ESI | Quadrupole, Orbitrap, Time-of-Flight (TOF) | m/z spectra, fragmentation spectra (.raw, .d) | Discovery proteomics, phosphoproteomics, interactomics |
| MALDI-TOF/TOF (Bruker, SCIEX) | Matrix-Assisted Laser Desorption/Ionization (MALDI) | Time-of-Flight (TOF) | m/z peak lists | Microbial identification, imaging mass spec |
| GC-MS / LC-MS (Agilent, Waters) | EI/CI (GC), ESI (LC) | Quadrupole, Triple Quadrupole (QqQ) | Chromatograms & spectra | Targeted metabolomics, quantitation (MRM/SRM) |

Detailed MS Experimental Protocol: Bottom-Up Proteomics

Objective: To identify and quantify proteins in a complex sample.

Key Reagents & Kits: See "The Scientist's Toolkit" below.

Workflow:

- Protein Extraction & Quantification: Lyse cells/tissue in appropriate buffer (e.g., RIPA with protease inhibitors). Quantify via BCA or similar assay.

- Protein Digestion: Reduce disulfide bonds (DTT), alkylate cysteines (Iodoacetamide), and digest proteins into peptides using trypsin (typically overnight at 37°C).

- Peptide Cleanup/Desalting: Use C18 solid-phase extraction tips or columns to remove salts and detergents.

- Liquid Chromatography (LC): Separate peptides online via reversed-phase C18 column using a gradient of increasing organic solvent (acetonitrile).

- Mass Spectrometry Analysis (Data-Dependent Acquisition - DDA):

- Full MS Scan: The Orbitrap or TOF analyzer acquires a high-resolution MS1 spectrum.

- Peptide Selection: The most intense precursor ions are selected for fragmentation.

- Fragmentation: Selected ions are fragmented via Higher-energy Collisional Dissociation (HCD) or Collision-Induced Dissociation (CID).

- MS2 Scan: A high-resolution MS2 spectrum of the fragment ions is acquired.

- Data Output: Raw files containing paired MS1 and MS2 spectra for downstream database search.
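Trypsin's specificity (cleavage C-terminal to lysine or arginine) makes the downstream database search tractable, because search engines digest the protein database in silico in the same way. A minimal sketch follows. It assumes the common simplification that trypsin does not cleave before proline and allows a configurable number of missed cleavages. Real search engines offer more enzyme rules plus peptide length and mass filters, and the example sequence is hypothetical.

```python
def tryptic_digest(sequence: str, missed_cleavages: int = 0, min_length: int = 1):
    """Return peptides from an in-silico trypsin digest.

    Cleaves after K or R unless the next residue is P (a common simplification).
    """
    seq = sequence.upper()
    # Positions just after each cleavage site (the C-terminal residue is never a site).
    sites = [i + 1 for i, aa in enumerate(seq[:-1])
             if aa in "KR" and seq[i + 1] != "P"]
    bounds = [0] + sites + [len(seq)]
    peptides = []
    for start in range(len(bounds) - 1):
        # Join up to `missed_cleavages` adjacent fragments to model incomplete digestion.
        for extra in range(missed_cleavages + 1):
            end = start + 1 + extra
            if end >= len(bounds):
                break
            pep = seq[bounds[start]:bounds[end]]
            if len(pep) >= min_length:
                peptides.append(pep)
    return peptides

print(tryptic_digest("MAKPLRGEKAR"))  # -> ['MAKPLR', 'GEK', 'AR']
```

The K in "KP" is skipped, which is why the first peptide runs through to the following arginine.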

Diagram: Bottom-Up Proteomics DDA Workflow

Array-Based Platforms

Arrays provide a high-throughput, multiplexed approach for profiling known targets via hybridization or affinity binding.

Core Platforms & Technologies

| Platform Type (Vendor Examples) | Core Technology | Key Output Data Type | Key Features | Primary Applications |
|---|---|---|---|---|
| Microarray (Affymetrix GeneChip, Agilent SurePrint) | Hybridization of labeled nucleic acids to immobilized probes | Fluorescence intensity data (.CEL, .GPR) | High multiplexing, cost-effective for known targets | Gene expression (mRNA, miRNA), SNP genotyping |
| Bead-Based Array (Illumina Infinium) | Hybridization to beads, followed by single-base extension | Fluorescence intensity data (.IDAT) | Scalable, high sample throughput | Methylation profiling (EPIC), GWAS |
| Protein/Antibody Array (RayBiotech, R&D Systems) | Affinity binding to immobilized antibodies or antigens | Chemiluminescence/fluorescence signals | Direct protein measurement, no digestion needed | Cytokine screening, phospho-protein profiling |

Detailed Array Experimental Protocol: Gene Expression Microarray

Objective: To measure the relative abundance of thousands of transcripts simultaneously.

Key Reagents & Kits: See "The Scientist's Toolkit" below.

Workflow:

- Total RNA Isolation & QC: As described in the RNA-Sequencing protocol above. High-quality RNA is critical.

- cDNA Synthesis: Convert RNA into double-stranded cDNA using reverse transcriptase with a T7 promoter primer.

- cRNA Synthesis & Labeling: Perform in vitro transcription (IVT) from the cDNA template using T7 RNA polymerase and biotin- or Cy-labeled nucleotides to produce amplified, labeled cRNA.

- Fragmentation & Hybridization: Chemically fragment the labeled cRNA to uniform size and hybridize to the microarray under stringent conditions (16-20 hrs).

- Washing & Staining: Remove non-specifically bound material through a series of washes. Stain with a fluorescent conjugate (e.g., streptavidin-phycoerythrin for biotin) to detect bound target.

- Scanning & Data Acquisition: Scan the array with a confocal laser scanner to measure fluorescence intensity at each probe location.
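Raw probe intensities from the scanner are typically background-corrected, log2-transformed, and normalized across arrays before analysis; RMA, for example, uses quantile normalization. The NumPy sketch below shows quantile normalization alone on a hypothetical probes x arrays matrix. It assumes no tied values within an array; ties are broken by sort order here, whereas some implementations average them.

```python
import numpy as np

def quantile_normalize(x: np.ndarray) -> np.ndarray:
    """Force every column (array) to share the same intensity distribution."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, axis=0)
    # Reference distribution: mean of the sorted values across arrays, rank by rank.
    reference = np.sort(x, axis=0).mean(axis=1)
    out = np.empty_like(x)
    for j in range(x.shape[1]):
        # The k-th smallest probe on array j receives the k-th reference value.
        out[order[:, j], j] = reference
    return out

raw = np.array([[5.0, 4.0, 3.0],
                [2.0, 1.0, 4.0],
                [3.0, 4.5, 6.0],
                [4.0, 2.0, 8.0]])
qn = quantile_normalize(np.log2(raw))
```

After normalization, every array has an identical set of values; only the probe ranks within each array are preserved, which removes array-wide intensity differences from the scanner or labeling.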

The Scientist's Toolkit: Key Research Reagent Solutions

| Item Name (Example Vendor) | Field of Use | Function & Brief Explanation |
|---|---|---|
| TRIzol Reagent (Thermo Fisher) | NGS / Arrays | A monophasic solution of phenol and guanidine isothiocyanate for simultaneous cell lysis and RNA/DNA/protein isolation. Denatures RNases. |
| NEBNext Ultra II DNA Library Prep Kit (NEB) | NGS | A kit for converting fragmented DNA into sequencing-ready Illumina-compatible libraries, with end-prep, adapter ligation, and PCR modules (RNA input uses the companion NEBNext Ultra II RNA kits). |
| Trypsin, Sequencing Grade (Promega) | Mass Spectrometry | A proteolytic enzyme that cleaves peptide bonds C-terminal to lysine and arginine residues, generating peptides of ideal size for MS analysis. |
| Pierce BCA Protein Assay Kit (Thermo Fisher) | Mass Spectrometry | A colorimetric assay based on bicinchoninic acid (BCA) for accurate quantification of protein concentration. |
| GeneChip WT PLUS Reagent Kit (Thermo Fisher) | Arrays | Provides reagents for cDNA synthesis, IVT labeling, and fragmentation specifically optimized for Affymetrix whole-transcript expression arrays. |
| Hybridization Control Kit (CytoSure) | Arrays | Contains labeled synthetic oligonucleotides that bind to control spots on the array, allowing monitoring of hybridization efficiency and uniformity. |


Major Repositories and Public Databases for Multi-Omics Data (e.g., TCGA, GEO, PRIDE, Metabolomics Workbench)

The systematic integration of multiple molecular data layers (genomics, transcriptomics, proteomics, and metabolomics) is fundamental to modern systems biology and precision medicine. A critical first step in many multi-omics studies is acquiring high-quality, well-annotated public data. This guide provides an in-depth technical overview of the major repositories that serve as primary sources for such data and form the empirical foundation for integrative computational analyses and biological discoveries.

Public data repositories are specialized archives designed to store, standardize, and disseminate large-scale omics data. Most follow FAIR (Findable, Accessible, Interoperable, Reusable) principles, and many journals require deposition in them as a condition of publication.

Table 1: Major Multi-Omics Data Repositories: Core Characteristics

| Repository Name | Primary Omics Focus | Data Types & Scope | Key Features & Standards | Access Method & Tools |
|---|---|---|---|---|
| The Cancer Genome Atlas (TCGA) | Genomics, Transcriptomics, Epigenomics | DNA-seq, RNA-seq, miRNA-seq, Methylation arrays, clinical data from ~33 cancer types. | Harmonized data via GDC; high-quality controlled pipelines; linked clinical outcomes. | GDC Data Portal, GDC API, TCGAbiolinks (R), GDC Transfer Tool. |
| Gene Expression Omnibus (GEO) | Transcriptomics, Epigenomics | Microarray, RNA-seq, ChIP-seq, methylation, and non-array data. Over 7 million samples. | MIAME/MINSEQE compliant; flexible platform; Series (study) and Sample-centric organization. | Web interface, GEO2R, GEOquery (R), SRA Toolkit for sequences. |
| Sequence Read Archive (SRA) | Genomics, Transcriptomics | Raw sequencing reads (NGS) from all technologies. Over 40 petabases of data. | Part of INSDC; stores raw data in FASTQ, aligned data in BAM/CRAM. | SRA Toolkit (prefetch, fasterq-dump), AWS/GCP buckets, ENA browser. |
| Proteomics Identifications (PRIDE) | Proteomics, Metabolomics (MS) | Mass spectrometry-based proteomics and metabolomics data: raw, processed, identification results. | MIAPE compliant; supports mzML, mzIdentML, mzTab; reanalysis via ProteomeXchange. | PRIDE Archive website, PRIDE API, PRIDE Inspector tool suite. |
| Metabolomics Workbench | Metabolomics | MS and NMR spectroscopy data from targeted and untargeted studies. Over 1,000 studies. | Supports a wide range of metabolomics data formats; detailed experimental metadata. | Web-based search, REST API, data download in various processed formats. |
| dbGaP | Genomics, Phenomics | Genotype-phenotype interaction studies. Includes GWAS, clinical, and molecular data. | Controlled-access for sensitive human data; strict protocols for data access approval. | Authorized access via eRA Commons; phenotype and genotype association browsers. |
| ArrayExpress | Transcriptomics, Epigenomics | Functional genomics data, primarily microarray and NGS-based. MIAME/MINSEQE compliant. | Curated data with ontology annotations; cross-references to ENA and PRIDE. | Web interface, API, R/Bioconductor packages. |
| GNPS (Global Natural Products Social Molecular Networking) | Metabolomics | Tandem mass spectrometry (MS/MS) data for natural products and metabolomics. | Enables molecular networking, spectral library matching, and repository-scale analysis. | Web platform, MASST search, Feature-Based Molecular Networking workflows. |
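Programmatic retrieval from these repositories usually starts from accession numbers, whose prefixes identify the repository and record type. The sketch below routes common accession patterns to a repository label. The pattern list (GEO series/samples/platforms, SRA/ENA/DDBJ runs and studies, ProteomeXchange datasets such as PRIDE's, Metabolomics Workbench studies, MassIVE datasets used by GNPS, ArrayExpress experiments, dbGaP studies, and TCGA project codes in the GDC) reflects widely used formats but is an illustrative assumption, not an exhaustive or official specification.

```python
import re

# Illustrative accession patterns (assumed, not exhaustive).
ACCESSION_PATTERNS = [
    (r"^GSE\d+$", "GEO series"),
    (r"^GSM\d+$", "GEO sample"),
    (r"^GPL\d+$", "GEO platform"),
    (r"^[SED]RR\d+$", "SRA/ENA/DDBJ run"),
    (r"^[SED]RP\d+$", "SRA/ENA/DDBJ study"),
    (r"^PXD\d{6}$", "ProteomeXchange dataset (e.g., PRIDE)"),
    (r"^ST\d{6}$", "Metabolomics Workbench study"),
    (r"^MSV\d{9}$", "MassIVE dataset (used by GNPS)"),
    (r"^E-[A-Z]{4}-\d+$", "ArrayExpress experiment"),
    (r"^phs\d{6}(\.v\d+\.p\d+)?$", "dbGaP study"),
    (r"^TCGA-[A-Z]{2,4}$", "TCGA project (GDC)"),
]

def classify_accession(accession: str) -> str:
    """Return a repository label for an accession string, or 'unknown'."""
    acc = accession.strip()
    for pattern, label in ACCESSION_PATTERNS:
        if re.match(pattern, acc):
            return label
    return "unknown"

for acc in ["GSE62944", "SRR1234567", "PXD000001", "ST000001", "TCGA-BRCA", "XYZ1"]:
    print(acc, "->", classify_accession(acc))
```

A lookup table like this is a convenient first step before dispatching to repository-specific clients such as GEOquery, the SRA Toolkit, or TCGAbiolinks.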

Table 2: Quantitative Summary of Repository Contents (Representative Stats)

| Repository | Estimated Studies | Estimated Samples/Datasets | Primary Data Volume | Update Frequency |
|---|---|---|---|---|
| TCGA (via GDC) | 1 (pan-cancer program) | > 20,000 samples (~11,000 patients, multi-omic per case) | ~ 3.5 PB | Static, legacy archive. |
| GEO | > 150,000 | > 7,000,000 | Tens of PB | Daily submissions. |
| SRA | Millions of runs | > 40 Petabases of sequence data | > 40 PB | Continuous. |
| PRIDE | > 20,000 | > 1,000,000 datasets | ~ 1.5 PB | Weekly. |
| Metabolomics Workbench | > 1,200 | Not uniformly defined | ~ 50 TB | Regular submissions. |

Experimental Protocols and Data Generation Standards

The utility of public data hinges on the reproducibility of the underlying experiments. Below are generalized protocols for key omics technologies prevalent in these repositories.

Bulk RNA-Sequencing (Transcriptomics - representative for GEO, SRA, TCGA)

Protocol Title: Standard Workflow for Illumina Stranded Total RNA-Seq Library Preparation and Sequencing.

Key Steps:

- RNA Extraction & QC: Isolate total RNA using silica-membrane columns (e.g., RNeasy kit). Assess integrity via RIN (RNA Integrity Number) on Bioanalyzer. Require RIN > 7 for mammalian samples.

- rRNA Depletion: Use ribodepletion kits (e.g., Illumina Ribo-Zero Plus) to remove ribosomal RNA, enriching for mRNA and non-coding RNA.

- cDNA Synthesis & Library Prep: Fragment purified RNA. Synthesize first-strand cDNA with random hexamers and reverse transcriptase. Synthesize second strand incorporating dUTP for strand specificity. Perform end repair, A-tailing, and adapter ligation (using unique dual indices, UDIs).

- Library QC & Quantification: Purify libraries using SPRI beads. Quantify via fluorometry (Qubit). Assess size distribution via Bioanalyzer/TapeStation.

- Sequencing: Pool libraries equimolarly. Sequence on Illumina NovaSeq or HiSeq platform to a minimum depth of 30 million paired-end (2x150 bp) reads per sample.

Liquid Chromatography-Tandem Mass Spectrometry (LC-MS/MS) for Proteomics (representative for PRIDE)

Protocol Title: Data-Dependent Acquisition (DDA) Proteomics for Whole-Cell Lysate Analysis.

Key Steps:

- Protein Extraction & Digestion: Lyse cells in a strong denaturant (e.g., 8 M urea) or RIPA buffer. Reduce disulfide bonds with DTT (10 mM, 30 min; 56°C is suitable in urea-free buffers, but keep urea-containing samples at or below 37°C to avoid artifactual carbamylation). Alkylate with iodoacetamide (25 mM, 20 min, in the dark). Dilute to 1-2 M urea, then digest with sequencing-grade trypsin/Lys-C (1:50 enzyme:protein, 37°C, overnight).

- Peptide Desalting: Desalt peptides using C18 solid-phase extraction (SPE) tips or StageTips. Elute with 60% acetonitrile/0.1% formic acid.

- LC-MS/MS Analysis: Reconstitute peptides in 0.1% formic acid. Load onto a C18 reverse-phase nanoLC column. Separate using a 60-180 min gradient from 2% to 35% acetonitrile. Interface with MS via nano-electrospray.

- Mass Spectrometry: Operate instrument (e.g., Q-Exactive HF, timsTOF) in DDA mode. Perform full MS1 scan (e.g., 60k resolution, 300-1750 m/z). Select top N most intense precursor ions (charge states 2-7) for fragmentation via higher-energy collisional dissociation (HCD). Acquire MS2 spectra at 15-30k resolution.

- Data Output: Raw instrument files (.raw, .d) are converted to open formats (.mzML) for submission.
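The reagent amounts in the digestion step follow from two simple relations. C1·V1 = C2·V2 gives the dilution of the 8 M urea lysate, and the 1:50 (w/w) enzyme:protein ratio gives the enzyme mass. The helpers below are hypothetical conveniences for that arithmetic. The example uses 100 µg of protein in 50 µL and 1.5 M as one target inside the protocol's 1-2 M range.

```python
def dilution_volume_ul(start_molar: float, target_molar: float, sample_ul: float) -> float:
    """Buffer volume to add so that start_molar in sample_ul falls to target_molar (C1V1 = C2V2)."""
    if target_molar >= start_molar:
        raise ValueError("target must be below starting concentration")
    final_ul = start_molar * sample_ul / target_molar
    return final_ul - sample_ul

def enzyme_mass_ug(protein_ug: float, enzyme_to_protein: float = 1 / 50) -> float:
    """Trypsin/Lys-C mass for a given enzyme:protein (w/w) ratio."""
    return protein_ug * enzyme_to_protein

# Example: 100 ug protein in 50 uL of 8 M urea, diluted to 1.5 M.
add_ul = dilution_volume_ul(8.0, 1.5, 50.0)
enzyme_ug = enzyme_mass_ug(100.0)
print(f"add {add_ul:.1f} uL buffer, {enzyme_ug:.1f} ug enzyme")  # add 216.7 uL buffer, 2.0 ug enzyme
```

Because the enzyme ratio is by mass, the protein amount should come from a BCA (or similar) measurement taken before reduction and alkylation.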

Untargeted Metabolomics by LC-MS (representative for Metabolomics Workbench, GNPS)

Protocol Title: Global Metabolic Profiling of Plasma/Sera Using Reversed-Phase Chromatography and High-Resolution MS.

Key Steps:

- Sample Preparation: Deproteinize plasma/serum (e.g., 50 µL) with cold methanol or acetonitrile (1:4 ratio). Vortex, incubate at -20°C, centrifuge. Transfer supernatant and dry in a vacuum concentrator. Reconstitute in mobile phase starting conditions.

- Chromatographic Separation: Use a reversed-phase column (e.g., C18). Run a binary gradient: (A) Water + 0.1% formic acid; (B) Acetonitrile + 0.1% formic acid. Gradient from 2% B to 98% B over 15-25 minutes.

- Mass Spectrometry in Polarity Switching Mode: Use a high-resolution Q-TOF or Orbitrap mass spectrometer. Acquire data in both positive and negative electrospray ionization (ESI+/-) modes alternately. Acquire full-scan data at high resolution (> 50,000 FWHM) with a mass range of ~50-1200 m/z.

- Data-Dependent MS/MS: In parallel, acquire fragmentation spectra for top ions in each scan cycle to generate experimental MS/MS spectral libraries.

- Quality Controls: Inject pooled quality control (QC) samples repeatedly throughout the batch to monitor instrument stability.
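The pooled QC injections can then be used to filter out unreliable features. A feature whose relative standard deviation (RSD) across QC injections is high is dominated by technical rather than biological variation. The sketch assumes a QC-injections x features intensity matrix (hypothetical values) and uses a 30% RSD cut-off, a threshold often applied in untargeted LC-MS that should be adapted to the study.

```python
import numpy as np

def qc_rsd_filter(qc_intensities: np.ndarray, max_rsd: float = 0.30):
    """Return (mask of features to keep, per-feature RSD) from pooled-QC injections."""
    qc = np.asarray(qc_intensities, dtype=float)
    mean = qc.mean(axis=0)
    rsd = qc.std(axis=0, ddof=1) / mean
    # Drop features with undefined RSD (e.g., zero mean) or RSD above the cut-off.
    keep = np.isfinite(rsd) & (rsd <= max_rsd)
    return keep, rsd

# Hypothetical: 5 pooled-QC injections x 3 features.
qc = np.array([[100, 50, 10],
               [102, 80, 12],
               [ 98, 20, 11],
               [101, 65,  9],
               [ 99, 35, 10]])
keep, rsd = qc_rsd_filter(qc)
print(keep)  # -> [ True False  True]
```

The second feature fluctuates by roughly 47% across identical QC injections, so its apparent differences between study samples cannot be trusted.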

Visualizations of Data Flows and Relationships

Title: Multi-Omics Data Lifecycle from Sample to Repository

Title: Multi-Omics Analysis Workflow from Repositories to Insight

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents and Kits for Multi-Omics Data Generation

| Item Name | Vendor Examples | Function in Protocol |
|---|---|---|
| RNeasy Mini/Midi Kit | Qiagen | Silica-membrane based purification of high-quality total RNA from various samples; critical for transcriptomics. |
| KAPA HyperPrep Kit | Roche | A widely used library preparation kit for Illumina sequencing from DNA or RNA, offering robust performance for genomics/transcriptomics. |
| Illumina Stranded Total RNA Prep with Ribo-Zero Plus | Illumina | Integrated kit for ribodepletion and stranded RNA-seq library construction, ensuring comprehensive transcriptome coverage. |
| Trypsin/Lys-C Mix, Mass Spec Grade | Promega | Proteolytic enzyme for specific digestion of proteins into peptides; gold standard for bottom-up proteomics. |
| S-Trap or FASP Columns | Protifi, Expedeon | Filter-aided or column-based devices for efficient protein digestion and cleanup, ideal for detergent-containing lysates. |
| Pierce C18 Spin Tips | Thermo Fisher Scientific | For desalting and concentrating peptide samples prior to LC-MS/MS analysis, improving sensitivity. |
| Mass Spectrometry Internal Standards Kit | Cambridge Isotope Labs | Stable isotope-labeled compounds added to metabolomics samples for quality control and semi-quantitative analysis. |
| Bioanalyzer RNA Nano or High Sensitivity Kits | Agilent | Microfluidics-based electrophoresis for precise assessment of RNA or DNA library quality and quantity. |
| Qubit dsDNA HS/RNA HS Assay Kits | Thermo Fisher Scientific | Fluorometric quantification of nucleic acids, offering high specificity over spectrophotometric methods. |
| Unique Dual Index (UDI) Kits | Illumina, IDT | Oligonucleotide sets for multiplexing samples, ensuring accurate sample demultiplexing and reducing index hopping artifacts. |


The integration of multi-omics data (genomics, transcriptomics, proteomics, metabolomics) is central to modern systems biology. Effective visualization is not merely illustrative but an analytical tool for hypothesis generation, pattern recognition, and communicating complex biological narratives. This guide details three pivotal visualization techniques within the context of a multi-omics analysis research framework.

Core Visualization Techniques: Methods and Applications

Heatmaps: For Pattern Discovery and Clustering

Methodology: Heatmaps are matrix representations where individual values are colored. In multi-omics, they are essential for visualizing gene expression (RNA-seq), protein abundance, or metabolite levels across multiple samples.

- Data Normalization: Log2-transform count data (e.g., log2(CPM+1) for RNA-seq) to stabilize variance and, when displaying relative changes, additionally Z-score each row (feature) so that features on different scales become comparable.

- Clustering: Perform hierarchical clustering (using Euclidean or correlation distance and Ward's or average linkage) on both rows (features) and columns (samples) to group similar patterns.

- Color Scaling: Choose a diverging colormap (e.g., blue-white-red) for Z-scores or a sequential colormap (e.g., white to dark blue) for normalized abundance.

- Annotation: Add side-columns to annotate sample groups (e.g., disease vs. control) or feature metadata (e.g., gene pathway).
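The normalization and clustering steps above can be sketched in Python with NumPy and SciPy. This is a minimal illustration under stated assumptions: the input is a features x samples count matrix, the helper names (log_cpm, row_zscore, cluster_order) are hypothetical, and the counts are simulated toy data. Average linkage is paired with correlation distance because Ward's method is defined for Euclidean distances. Rows that are constant across samples should be filtered out before clustering, since their correlation distance is undefined.

```python
import numpy as np
from scipy.cluster.hierarchy import leaves_list, linkage
from scipy.spatial.distance import pdist


def log_cpm(counts, prior=1.0):
    """log2(CPM + prior) for a features x samples count matrix."""
    counts = np.asarray(counts, dtype=float)
    library_sizes = counts.sum(axis=0)            # total counts per sample
    cpm = counts / library_sizes * 1e6            # counts per million
    return np.log2(cpm + prior)


def row_zscore(X):
    """Z-score each row (feature) across samples."""
    mu = X.mean(axis=1, keepdims=True)
    sd = X.std(axis=1, ddof=1, keepdims=True)
    sd[sd == 0] = 1.0                             # constant rows become all zeros
    return (X - mu) / sd


def cluster_order(X, metric="correlation", method="average"):
    """Leaf order from hierarchical clustering of the rows of X."""
    condensed = pdist(X, metric=metric)           # pairwise row distances
    return leaves_list(linkage(condensed, method=method))


rng = np.random.default_rng(0)
counts = rng.poisson(lam=50, size=(20, 6)) + 1    # toy data: 20 genes x 6 samples
heat = row_zscore(log_cpm(counts))
row_order = cluster_order(heat)                   # cluster features
col_order = cluster_order(heat.T)                 # cluster samples
ordered = heat[np.ix_(row_order, col_order)]      # reordered matrix ready for plotting
```

The reordered matrix can then be drawn with any plotting library, for example matplotlib's imshow with a diverging colormap such as "RdBu_r" for Z-scores.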

Table 1: Common Clustering & Distance Metrics for Heatmaps

| Aspect | Option 1 | Option 2 | Use Case |
|---|---|---|---|
| Distance Metric | Euclidean Distance | Pearson Correlation | Euclidean for absolute magnitude, Correlation for pattern shape. |
| Linkage Method | Ward's Method | Average Linkage | Ward's minimizes within-cluster variance (and is defined for Euclidean distance); Average is less sensitive to outliers. |
| Normalization | Row Z-score | Log2(CPM+1) | Z-score for relative change; Log-CPM for sequencing count data. |

Circos Plots: For Genomic Context

Methodology: Circos plots display connections between genomic loci or data tracks in a circular layout, ideal for showing structural variants, copy number variations, or correlations between different omics layers on a chromosomal scale.

- Data Preparation: Format data into tracks (e.g., ideogram, scatter plot, histogram, link). Each track requires genomic coordinates (chromosome, start, end).

- Ideogram Setup: Define chromosomes as the plot's backbone using a genome reference file (e.g., hg38).

- Adding Tracks: Plot quantitative data (e.g., gene expression fold-change) as scatter points or histograms on outer tracks.

- Drawing Links: Represent relationships (e.g., fusion genes, chromatin interactions) as ribbons or lines connecting two genomic regions. Link thickness can encode a value like read pair support.

Pathway & Network Diagrams: For Functional Interpretation

Methodology: These diagrams contextualize omics data within biological pathways (e.g., KEGG, Reactome) or protein-protein interaction networks, translating gene lists into mechanistic insights.

- Overlay Data: Map differentially expressed genes or altered proteins onto a canonical pathway. Use a continuous color gradient on node symbols to represent fold-change or p-value.

- Enrichment Visualization: Create bubble charts or bar graphs in which bubble size (or bar length) encodes the number of genes, color encodes the enrichment p-value, and position groups related pathways.

- Custom Network Building: Use interaction databases (STRING, BioGRID) to build networks from significant hits, then apply layout algorithms (force-directed, circular) for clarity.
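The network-building step can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: interactions arrive as (node_a, node_b, score) tuples on a 0-1000 confidence scale, and the default cutoff of 700 mirrors the value commonly used as "high confidence" for STRING combined scores. Treat both as assumptions. The node IDs and scores below are hypothetical toy values, not real interactions. Hits without any qualifying partner are simply absent from the resulting network.

```python
from collections import defaultdict, deque


def build_hit_network(edges, hits, min_score=700):
    """Undirected adjacency sets restricted to significant hits.

    edges: iterable of (node_a, node_b, score) tuples; the 0-1000 score
    scale and the 700 cutoff are assumptions for this sketch.
    """
    hits = set(hits)
    adjacency = defaultdict(set)
    for a, b, score in edges:
        if score >= min_score and a in hits and b in hits and a != b:
            adjacency[a].add(b)
            adjacency[b].add(a)
    return dict(adjacency)


def connected_components(adjacency):
    """Breadth-first search; returns components, largest first."""
    seen, components = set(), []
    for start in adjacency:
        if start in seen:
            continue
        seen.add(start)
        queue, component = deque([start]), []
        while queue:
            node = queue.popleft()
            component.append(node)
            for neighbour in adjacency[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        components.append(sorted(component))
    return sorted(components, key=len, reverse=True)


# Hypothetical toy interactions and hit list (not real data).
edges = [("P1", "P2", 950), ("P2", "P3", 810), ("P4", "P5", 900),
         ("P1", "P4", 300), ("P6", "P7", 990)]
hits = {"P1", "P2", "P3", "P4", "P5"}
network = build_hit_network(edges, hits)
degree = {node: len(nbrs) for node, nbrs in network.items()}
modules = connected_components(network)
```

Node degree and connected modules are typical starting points before the network is exported to a layout tool such as Cytoscape. The low-scoring P1-P4 edge and the non-hit pair P6-P7 are filtered out here.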

Table 2: Key Reagents & Tools for Multi-Omics Visualization

| Item / Resource | Function / Purpose |
|---|---|
| R/Bioconductor | Primary platform for statistical analysis and generation of publication-quality heatmaps (pheatmap, ComplexHeatmap) and Circos plots (circlize). |
| Python (Matplotlib, Seaborn, Plotly) | Libraries for creating interactive and static visualizations, including advanced heatmaps and network graphs. |
| Cytoscape | Standalone software for powerful, customizable network visualization and analysis, especially for pathway diagrams. |
| Adobe Illustrator / Inkscape | Vector graphics editors for final polishing, annotation, and layout adjustment of figures for publication. |
| KEGG / Reactome / WikiPathways | Databases providing curated pathway maps in standardized formats (KGML, SBGN) for data overlay. |
| UCSC Genome Browser / IGV | Reference tools for visualizing genomic coordinates and aligning custom tracks, informing Circos plot design. |

Experimental Protocol: Integrative Multi-Omics Analysis Workflow

This protocol outlines a standard pipeline for generating data suitable for the visualizations described.

Title: Differential Analysis of Transcriptome and Proteome in Treatment vs. Control Cell Lines.

- Sample Preparation:

- Culture cells in triplicate for treated and control conditions.

- Harvest cells, divide aliquots for RNA and protein extraction.

- Multi-Omics Data Generation:

- RNA-seq: Extract total RNA (QIAGEN RNeasy), assess quality (RIN > 8, Bioanalyzer). Prepare libraries (Illumina TruSeq Stranded mRNA), sequence on NovaSeq (2x150bp).

- Proteomics (LC-MS/MS): Lyse protein pellets, digest with trypsin, label with TMTpro 16-plex reagents. Fractionate by high-pH reverse-phase HPLC, analyze on Orbitrap Eclipse.

- Bioinformatics Processing:

- Transcriptomics: Align reads to reference genome (hg38) with STAR. Quantify gene counts with featureCounts. Perform differential expression with DESeq2 (FDR < 0.05, |log2FC| > 1).

- Proteomics: Process raw files with MaxQuant. Search against the human UniProt database. Perform differential abundance analysis with limma on log2-transformed TMT intensities.

- Integrative Visualization:

- Heatmap: Create a unified heatmap of significant genes/proteins (Z-scores) across all samples.

- Pathway Analysis: Perform GSEA on both datasets. Visualize enriched pathways (e.g., "Apoptosis Signaling") as annotated diagrams.

- Circos Plot: Generate a plot showing chromosomal locations of key dysregulated genes and proteins, with links indicating cis-correlations.

Diagram: Multi-Omics Data Analysis & Visualization Workflow

Diagram: Key Immune Signaling Pathway (NF-κB)

Multi-Omics Integration in Action: Step-by-Step Workflows, Tools, and Applications in Biomarker Discovery

Multi-omics integrates diverse biological data sets—including genomics, transcriptomics, proteomics, and metabolomics—to construct a comprehensive model of biological systems. Framed within a broader thesis on introduction to multi-omics data analysis research, this technical guide provides a high-level overview of the end-to-end pipeline, from raw data generation to functional biological insight, targeting researchers and drug development professionals.

The canonical pipeline consists of four sequential, interconnected stages: Data Generation & Processing, Multi-Omics Integration, Biological Interpretation, and Validation & Insight.

Diagram Title: Multi-Omics Pipeline Core Stages

Stage 1: Data Generation & Processing

This stage involves converting biological samples into quantitative digital data. Each omics layer requires specific experimental and computational protocols.

Table 1: Core Omics Layers & Data Processing Tools

| Omics Layer | Core Technology | Primary Output | Key Processing Tools (Examples) | Typical Data Matrix |
|---|---|---|---|---|
| Genomics | Next-Generation Sequencing (NGS) | FASTQ files | BWA, GATK, SAMtools | Variant Call Format (VCF) |
| Transcriptomics | RNA-Seq, Microarrays | FASTQ or .CEL files | STAR, HISAT2, DESeq2, limma | Gene Expression Counts/FPKM |
| Proteomics | Mass Spectrometry (LC-MS/MS) | .raw spectra files | MaxQuant, MSFragger, DIA-NN | Peptide/Protein Abundance |
| Metabolomics | LC/GC-MS, NMR | .raw spectra files | XCMS, MS-DIAL, MetaboAnalyst | Metabolite Abundance |

Detailed Protocol: RNA-Seq Data Processing (Example)

- Quality Control (QC): Use FastQC to assess read quality. Trim adapters and low-quality bases with Trimmomatic or Cutadapt.

- Alignment: Map reads to a reference genome (e.g., GRCh38) using a splice-aware aligner like STAR. Output: BAM files.

- Quantification: Count reads mapping to genomic features (genes, exons) using featureCounts or HTSeq. Generate a counts matrix.

- Normalization & Differential Expression: Import counts into R/Bioconductor. Use DESeq2 to normalize for library size and composition, then perform statistical testing to identify differentially expressed genes (FDR < 0.05).

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in Multi-Omics |
|---|---|
| Poly(A) mRNA Magnetic Beads | Isolates eukaryotic mRNA from total RNA for RNA-Seq library prep. |
| Trypsin (Sequencing Grade) | Digests proteins into peptides for bottom-up LC-MS/MS proteomics. |
| TMT/Isobaric Tags | Allows multiplexed quantification of several samples in a single MS run (up to 16 with TMTpro 16-plex, 18 with TMTpro 18-plex). |
| Methanol (LC-MS Grade) | Extracts and preserves metabolites for metabolomics; high purity prevents ion suppression. |
| KAPA HyperPrep Kit | Robust library preparation kit for NGS, compatible with degraded inputs. |
| Phosphatase/Protease Inhibitors | Preserves post-translational modification states in proteomics samples. |

Stage 2: Multi-Omics Data Integration

Integration methods correlate features across omics layers to identify master regulators and unified signatures.

Table 2: Common Multi-Omics Integration Methods

| Method Type | Description | Key Algorithms/Tools | Use Case |
|---|---|---|---|
| Concatenation-Based | Merges datasets into a single matrix for joint analysis. | PCA or penalized regression (e.g., elastic net) on the combined matrix | Identifying multi-omics biomarkers for patient stratification. |
| Network-Based | Constructs correlation or regulatory networks. | WGCNA, miRLAB | Inferring gene-metabolite interaction networks. |
| Similarity-Based | Integrates via kernels or statistical similarity. | Similarity Network Fusion (SNF) | Cancer subtype discovery from complementary data. |
| Model-Based | Uses statistical models to infer latent factors. | MOFA, Integrative NMF | Deconvolving shared vs. dataset-specific variations. |

Diagram Title: Multi-Omics Data Integration Approaches

Stage 3: Biological Interpretation & Pathway Analysis

Integrated features are mapped to biological knowledge bases for functional insight.

Detailed Protocol: Overrepresentation Analysis (ORA)

- Input: A list of significant integrated features (e.g., genes and metabolites).

- Background Definition: Define the set of all features measured in the experiment.

- Statistical Test: Use a hypergeometric test or Fisher's exact test to assess if features from a specific pathway (e.g., from KEGG, Reactome) appear in your list more often than expected by chance.

- Multiple Testing Correction: Apply Benjamini-Hochberg procedure to control the False Discovery Rate (FDR). Pathways with FDR < 0.05 are considered significantly enriched.
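The four ORA steps can be sketched in Python with SciPy's hypergeometric distribution. This is a minimal illustration under stated assumptions: features are plain string IDs, pathways are given as a dictionary of member lists, and the function names (ora, bh_adjust) and the toy gene sets are hypothetical. The one-sided hypergeometric tail used here is equivalent to a one-sided ("greater") Fisher's exact test on the 2x2 table of hit/non-hit versus in/out of pathway.

```python
import numpy as np
from scipy.stats import hypergeom


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values (q-values)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    # Step-up: running minimum taken from the largest p-value downwards.
    scaled = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(scaled, 1.0)
    return adjusted


def ora(hits, background, pathways):
    """One-sided hypergeometric enrichment for each pathway.

    Returns {pathway: (overlap, p_value, q_value)}.
    """
    background = set(background)
    hits = set(hits) & background           # only measured features count
    M, N = len(background), len(hits)
    names, overlaps, pvals = [], [], []
    for name, members in pathways.items():
        members = set(members) & background
        k = len(hits & members)
        # P(X >= k), X ~ Hypergeom(M total, len(members) in pathway, N drawn)
        pvals.append(hypergeom.sf(k - 1, M, len(members), N))
        names.append(name)
        overlaps.append(k)
    qvals = bh_adjust(pvals)
    return {n: (k, p, q) for n, k, p, q in zip(names, overlaps, pvals, qvals)}


# Toy example: 100 measured genes, two hypothetical pathways, 10 hits.
background = [f"g{i}" for i in range(100)]
pathways = {"A": background[:10], "B": background[20:30]}
hits = background[:5] + background[50:55]
result = ora(hits, background, pathways)
```

Restricting both the hit list and the pathway members to the measured background is the point of the Background Definition step: it keeps unmeasured features from inflating or deflating the apparent enrichment.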

Diagram Title: Biological Interpretation from Integrated Signature

Stage 4: Validation & Translational Insight

Hypotheses generated in silico must be validated experimentally. Common approaches include:

- Targeted Assays: Using qPCR (genes), Western Blot/SRM (proteins), or targeted MS (metabolites) to confirm key findings in a new sample set.

- Functional Experiments: In vitro (knockdown/overexpression in cell lines) or in vivo studies to establish causal relationships.

- Clinical Correlation: Validating multi-omics biomarkers against patient outcomes in independent cohorts.

The final output is refined biological insight, which may include novel therapeutic targets, diagnostic biomarkers, or an advanced understanding of disease mechanisms, directly informing drug development pipelines.

Data Preprocessing and Normalization Strategies for Heterogeneous Datasets

Within the context of multi-omics data analysis research, the integration of heterogeneous datasets—spanning genomics, transcriptomics, proteomics, and metabolomics—presents a formidable challenge. Each omics layer is generated via distinct technologies, resulting in data with varying scales, distributions, missingness, and noise profiles. Effective preprocessing and normalization are not merely preliminary steps but are foundational to deriving biologically meaningful and statistically robust integrated models. This guide details current strategies to transform raw, disparate data into a coherent analytical framework.

Core Preprocessing Challenges in Multi-Omics Data

Each data type requires specific handling before cross-omics normalization can occur.

Table 1: Characteristic Challenges by Omics Data Type

| Data Type | Typical Format | Key Preprocessing Needs | Common Noise Sources |
|---|---|---|---|
| Genomics (e.g., SNP) | Variant counts/calls | Quality score filtering, linkage disequilibrium pruning, imputation. | Sequencing errors, batch effects. |
| Transcriptomics | RNA-seq read counts | Adapter trimming, quality control, alignment, count generation. | Library size, GC content, ribosomal RNA. |
| Proteomics | Mass spectrometry peaks | Peak detection/alignment, background correction, ion current normalization. | Ion suppression, instrument drift. |
| Metabolomics | NMR/LC-MS spectral peaks | Spectral alignment, baseline correction, solvent peak removal. | Matrix effects, day-to-day variability. |

Experimental Protocols for Key Preprocessing Steps

Protocol 3.1: RNA-seq Read Normalization (DESeq2 Median-of-Ratios Method)

- Input: Raw count matrix (genes x samples).

- Step 1 - Calculate gene-wise geometric mean: For each gene, compute the geometric mean of counts across all samples.

- Step 2 - Calculate sample-wise ratios: For each sample, divide each gene's count by the gene's geometric mean (creating a ratio). Genes with a geometric mean of zero (that is, a zero count in at least one sample) are excluded.

- Step 3 - Derive size factor: The size factor for each sample is the median of its non-excluded gene ratios.

- Step 4 - Normalize: Divide the raw counts for each sample by its calculated size factor.

- Output: Normalized count matrix suitable for between-sample comparison.
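Protocol 3.1 can be sketched in a few lines of NumPy. This is a minimal illustration, not DESeq2's own code (in R, DESeq2 performs this step via estimateSizeFactors). The function names size_factors and normalize are hypothetical, and the toy matrix simulates a second sample sequenced at twice the depth of the first. The geometric mean and the median are taken on the log scale, which is numerically safer than multiplying many counts together.

```python
import numpy as np


def size_factors(counts):
    """Median-of-ratios size factors for a genes x samples count matrix."""
    counts = np.asarray(counts, dtype=float)
    # Genes with a zero in any sample have a geometric mean of zero; drop them.
    usable = np.all(counts > 0, axis=1)
    log_counts = np.log(counts[usable])
    # Step 1: gene-wise geometric mean, computed on the log scale.
    log_geo_mean = log_counts.mean(axis=1, keepdims=True)
    # Step 2: per-sample ratios to the geometric-mean pseudo-reference.
    log_ratios = log_counts - log_geo_mean
    # Step 3: size factor = median ratio per sample.
    return np.exp(np.median(log_ratios, axis=0))


def normalize(counts):
    """Step 4: divide each sample's counts by its size factor."""
    sf = size_factors(counts)
    return np.asarray(counts, dtype=float) / sf, sf


counts = np.array([[10, 20], [20, 40], [30, 60], [0, 5]])  # sample 2 = 2x depth
normalized, sf = normalize(counts)
```

Here the last gene is excluded from size-factor estimation because of its zero count, the two size factors differ by exactly a factor of two, and the normalized counts of the first three genes agree across samples.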

Protocol 3.2: Probabilistic Quotient Normalization (PQN) for Metabolomics

- Input: Pre-aligned spectral intensity matrix (features x samples).

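- Step 1 - Select Reference: Calculate the median spectrum (feature-wise median across all samples) as the reference. In the original formulation of PQN, each spectrum is first normalized to its total integral before this step.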

- Step 2 - Calculate Quotients: For each sample, compute the quotient of each feature's intensity divided by the corresponding reference intensity.

- Step 3 - Determine Dilution Factor: Calculate the median of all quotients for that sample. This is the estimated dilution factor.

- Step 4 - Normalize: Divide all feature intensities in the sample by its dilution factor.

- Output: Concentration-corrected intensity matrix, reducing urine/serum dilution variability.
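The four PQN steps can be sketched in NumPy. This is a minimal illustration under stated assumptions: the input is a features x samples intensity matrix that may contain NaN for missing features, no preceding total-area normalization is applied, and the function name pqn_normalize is hypothetical. Features with a zero or missing reference value are skipped when forming quotients.

```python
import numpy as np


def pqn_normalize(X, reference=None):
    """Probabilistic quotient normalization of a features x samples matrix.

    Returns the normalized matrix and the per-sample dilution factors.
    NaN entries (missing features) are ignored in the medians.
    """
    X = np.asarray(X, dtype=float)
    if reference is None:
        reference = np.nanmedian(X, axis=1)               # Step 1: median spectrum
    usable = np.isfinite(reference) & (reference > 0)     # avoid dividing by 0 or NaN
    quotients = X[usable] / reference[usable, None]       # Step 2: quotients
    dilution = np.nanmedian(quotients, axis=0)            # Step 3: median quotient
    return X / dilution, dilution                         # Step 4: rescale samples


base = np.array([1.0, 2.0, 3.0, 4.0])
X = np.column_stack([base, 2 * base, 0.5 * base])         # toy samples scaled by 1, 2, 0.5
X_norm, dilution = pqn_normalize(X)
```

Because the median quotient is robust to a minority of genuinely changed features, PQN is preferred over total-signal scaling when a few very abundant metabolites shift between conditions.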

Normalization Strategies for Dataset Integration

After each layer has been preprocessed individually, the following strategies are applied to enable cross-omics analysis.

Table 2: Cross-Platform Normalization Strategies

| Strategy | Principle | Best For | Key Limitation |
|---|---|---|---|
| Quantile Normalization | Forces all sample distributions (per platform) to be identical. | Technical replicate harmonization. | Can remove genuine biological differences in overall distribution between samples. |
| ComBat / limma | Adjusts for known batch effects (ComBat uses an empirical Bayes framework; limma's removeBatchEffect fits a linear model). | Removing strong, known batch covariates. | Requires careful model specification. |
| Mean-Centering & Scaling (Auto-scaling) | Subtract mean, divide by standard deviation per feature. | Making features unit variance for downstream ML. | Amplifies noise in low-variance features. |
| Domain-Specific Normalization | Apply optimal single-omics method (e.g., DESeq2 for RNA-seq, PQN for metabolomics) separately before concatenation. | Preserving data-type-specific biological signals. | Does not correct for inter-omics scale differences. |
| Singular Value Decomposition (SVD) | Removes dominant orthogonal components assumed to represent technical noise. | Unsupervised batch effect removal. | Risk of removing biologically relevant signal. |
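Quantile normalization, the first strategy in the table, can be sketched in NumPy. This is a minimal illustration under stated assumptions: the input is a features x samples matrix, ties are broken by order of appearance rather than averaged (a simplification relative to some implementations), and the function name quantile_normalize is hypothetical.

```python
import numpy as np


def quantile_normalize(X):
    """Give every column (sample) of a features x samples matrix the same distribution.

    Ties are broken by order of appearance (a simplification).
    """
    X = np.asarray(X, dtype=float)
    # 0-based rank of each value within its column.
    ranks = np.argsort(np.argsort(X, axis=0, kind="stable"), axis=0)
    # Reference distribution: mean of the k-th smallest value across samples.
    reference = np.sort(X, axis=0).mean(axis=1)
    return reference[ranks]


X = np.array([[5, 4, 3], [2, 1, 4], [3, 4, 6], [4, 2, 8]])
Q = quantile_normalize(X)
```

After normalization every column holds exactly the same set of values, which illustrates the table's caveat: any real global shift between samples is erased along with the technical one.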

Visualization of Workflows and Relationships

Multi-Omics Data Preprocessing and Normalization Pipeline

Decision Guide for Selecting a Normalization Strategy

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Multi-Omics Preprocessing

| Item / Reagent | Function in Preprocessing/Normalization |
|---|---|
| FastQC / MultiQC | FastQC assesses raw sequencing read quality; MultiQC aggregates QC reports across tools, samples, and omics layers into a single report. |
| Trim Galore! / Trimmomatic | Removes adapter sequences and low-quality bases from NGS reads, critical for accurate alignment. |
| DESeq2 (R/Bioconductor) | Performs median-of-ratios normalization and differential expression analysis for count-based RNA-seq data. |
| limma (R/Bioconductor) | Applies linear models to microarray or RNA-seq data for differential expression and batch effect removal. |
| ComBat (sva R package) | Empirical Bayes method to adjust for batch effects in high-dimensional data across platforms. |
| MetaboAnalyst | Web-based platform offering multiple normalization protocols (e.g., PQN, sample-specific) for metabolomics. |
| SIMCA-P+ / Eigenvector Solo | Commercial software with advanced tools for multiplicative scatter correction (MSC) in spectral data. |
| Python Scikit-learn | Provides StandardScaler and RobustScaler (feature-wise scaling) and Normalizer (per-sample scaling to unit norm) for use after integration. |


Within the burgeoning field of multi-omics data analysis research, the integration of disparate biological data layers—such as genomics, transcriptomics, proteomics, and metabolomics—is paramount for constructing a holistic understanding of complex biological systems and disease mechanisms. This technical guide details four core methodological paradigms for multi-omics integration: Concatenation, Correlation, Network, and Machine Learning-Based methods. Each approach presents unique advantages, challenges, and appropriate contexts for application, directly supporting the central thesis that sophisticated integration is the key to unlocking translational insights in biomedical research and drug development.

Concatenation-Based Methods

Concatenation, or early integration, involves merging raw or processed data matrices from multiple omics layers into a single, combined matrix prior to analysis.

Methodology

The core protocol involves:

- Data Preprocessing & Normalization: Each omics dataset is independently normalized (e.g., using variance stabilizing transformation for RNA-seq, quantile normalization for microarrays, or probabilistic quotient normalization for metabolomics) and scaled (e.g., Z-score) to ensure comparability across features with vastly different dynamic ranges.

- Feature Space Union: The normalized matrices can in principle be stacked either way, but the most common approach is sample-wise concatenation: with samples as rows, the matrices are joined column-wise on matched samples, creating a unified matrix X_integrated of dimensions (n_samples, n_features_omics1 + n_features_omics2 + ...).

- Dimensionality Reduction & Analysis: The high-dimensional concatenated matrix is subjected to multivariate analysis. Principal Component Analysis (PCA) or Multiple Factor Analysis (MFA) are frequently employed to project the data into a lower-dimensional space where samples can be visualized and clusters identified.
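The concatenation workflow can be sketched in NumPy. This is a minimal illustration under stated assumptions: each block is already normalized, samples are rows and already aligned across blocks, and the data are simulated. As an additional assumption, each auto-scaled block is divided by the square root of its feature count so that every block contributes the same total variance. Without this step, a block with many features, such as a 20,000-gene transcriptome, would dominate the PCA. MFA uses a related idea but weights each block by its first singular value instead. The function names are hypothetical.

```python
import numpy as np


def autoscale(X):
    """Mean-center each feature and divide by its standard deviation."""
    X = np.asarray(X, dtype=float)
    sd = X.std(axis=0, ddof=1)
    sd[sd == 0] = 1.0                      # leave constant features at zero
    return (X - X.mean(axis=0)) / sd


def concatenate_blocks(blocks, block_weighting=True):
    """Sample-wise concatenation of omics blocks (samples x features each)."""
    scaled = []
    for X in blocks:
        Z = autoscale(X)
        if block_weighting:
            Z = Z / np.sqrt(Z.shape[1])    # each block now has total variance 1
        scaled.append(Z)
    return np.hstack(scaled)               # n_samples x sum of feature counts


def pca_scores(X, n_components=2):
    """Sample scores on the leading principal components via SVD."""
    Xc = X - X.mean(axis=0)
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :n_components] * S[:n_components]


rng = np.random.default_rng(1)
rna = rng.normal(size=(10, 50))            # toy transcriptomics block
prot = rng.normal(size=(10, 5))            # toy proteomics block
X = concatenate_blocks([rna, prot])
scores = pca_scores(X)
```

The resulting scores can be plotted as a standard PCA sample map. Setting block_weighting=False reproduces plain auto-scaling followed by concatenation.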

Table 1: Quantitative Comparison of Key Concatenation Analysis Tools

| Tool/Method | Key Algorithm | Input Data Type | Primary Output | Typical Runtime for N=100, p=10k |
|---|---|---|---|---|
| MOFA+ | Factor Analysis | Multi-modal matrices | Latent factors, weights | ~10-30 minutes |
| Multiple Factor Analysis (MFA) | Generalized PCA | Quantitative matrices | Combined sample factors | <5 minutes |
| iCluster | Joint Latent Variable | Discrete/Continuous | Integrated clusters | ~15-60 minutes |

Multi-Omics Concatenation and Analysis Workflow

Research Reagent Solutions

- Benchmarking Datasets: Pre-processed, gold-standard multi-omics datasets (e.g., TCGA Pan-Cancer, CPTAC) are essential for validating concatenation pipelines.

- Normalization Reagents: Spike-in controls (e.g., stable isotope-labeled internal standards for metabolomics, ERCC RNA spike-ins for transcriptomics) support within-platform normalization and quality control before the layers are combined.

- Integrated Analysis Software: Licensed software like SIMCA-P (for MFA) or dedicated R/Python packages (e.g., mointegrator, omicade4) provide the computational environment.

Correlation-Based Methods

Correlation, or pairwise integration, identifies statistical relationships between features across different omics datasets, often measured on the same samples.

Methodology

A standard protocol for cross-omics correlation analysis:

- Dataset Preparation: Generate paired datasets where X (e.g., mRNA expression, dimensions n x p) and Y (e.g., protein abundance, dimensions n x q) are measured on the same n biological samples.

- Correlation Matrix Computation: Calculate all pairwise correlations between features in X and Y. Common metrics are Pearson's r (for linear relationships) and Spearman's ρ (for monotonic relationships). For high-dimensional data, sparse canonical correlation analysis (sCCA) is an alternative that finds correlated linear combinations of features rather than testing every pair.

- Statistical Inference & Multiple Testing Correction: Assess the significance of each correlation (e.g., via a t-test for Pearson's r) and apply a correction such as the Benjamini-Hochberg FDR procedure to control false discoveries.

- Biological Interpretation: Significant cross-omics feature pairs (e.g., gene-protein) are mapped to pathways (KEGG, Reactome) using enrichment analysis tools.
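The correlation and multiple-testing steps can be sketched with NumPy and SciPy. This is a minimal illustration under stated assumptions: X and Y are samples x features arrays whose rows refer to the same samples, Pearson's r is used, significance comes from the t-statistic t = r·sqrt((n-2)/(1-r²)) with n-2 degrees of freedom, and the function names and simulated data are hypothetical. All p x q correlations are computed at once as a matrix product of standardized columns.

```python
import numpy as np
from scipy import stats


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values (q-values)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    scaled = np.minimum.accumulate(scaled[::-1])[::-1]   # enforce monotonicity
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(scaled, 1.0)
    return adjusted


def cross_omics_correlation(X, Y):
    """Pearson r, p-values and BH q-values for every (X feature, Y feature) pair."""
    n = X.shape[0]
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    Yz = (Y - Y.mean(axis=0)) / Y.std(axis=0, ddof=1)
    r = np.clip(Xz.T @ Yz / (n - 1), -1.0, 1.0)        # p x q correlation matrix
    with np.errstate(divide="ignore"):                  # |r| = 1 gives t = inf, p = 0
        t = r * np.sqrt((n - 2) / (1 - r ** 2))
    pvals = 2 * stats.t.sf(np.abs(t), df=n - 2)         # two-sided p-values
    qvals = bh_adjust(pvals.ravel()).reshape(r.shape)
    return r, pvals, qvals


rng = np.random.default_rng(4)
X = rng.normal(size=(30, 4))                            # toy mRNA block
Y = np.column_stack([2 * X[:, 0] + 0.1 * rng.normal(size=30),
                     rng.normal(size=30)])              # toy protein block
r, p, q = cross_omics_correlation(X, Y)
```

For Spearman's ρ, the same function can be applied to column-wise ranks (e.g., scipy.stats.rankdata with axis=0). The t-based p-value is then an approximation.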

Cross-Omics Correlation Analysis Pipeline

Network-Based Methods

Network approaches model biological systems as graphs, where nodes represent biomolecules from various omics layers and edges represent functional or physical interactions.

Experimental Protocol

Protocol for Multi-Layer Network Construction:

- Layer-Specific Network Inference: Construct individual omics networks (e.g., gene co-expression via WGCNA, protein-protein interaction from STRING).

- Integration via Similarity or Propagation: Fuse networks using methods like Similarity Network Fusion (SNF):

a. For each omics data type $v$, construct a sample similarity network (affinity matrix $W^{(v)}$).

b. Normalize each network: $P^{(v)} = D^{-1} W^{(v)}$, where $D$ is the diagonal degree matrix with $D_{ii} = \sum_j W^{(v)}_{ij}$.

c. Iteratively update each network for $t$ iterations:

$$P^{(v)} \leftarrow S^{(v)} \left( \frac{\sum_{k \neq v} P^{(k)}}{V-1} \right) \left(S^{(v)}\right)^{\top}$$

where $S^{(v)}$ is the local similarity matrix for view $v$ (each sample's affinities restricted to its nearest neighbours and row-normalized) and $V$ is the number of views.

d. Fuse the stabilized networks: $P_{\text{fused}} = \frac{1}{V} \sum_{v=1}^{V} P^{(v)}$.

- Cluster Detection & Analysis: Perform spectral clustering on $P_{\text{fused}}$ to identify multi-omics patient subtypes. Analyze differential features across clusters.
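Steps a-d can be sketched in NumPy. This is a simplified illustration, not the reference SNF implementation. Assumptions: the affinity uses a Gaussian kernel whose width is the median squared distance (SNF itself uses neighbourhood-based local scaling), and each updated network is row-normalized to keep values on a comparable scale. All function names and the two-group toy data are hypothetical. Spectral clustering of the fused matrix is left out. One option is scikit-learn's SpectralClustering with affinity="precomputed", applied after symmetrizing the matrix as (P + P.T) / 2.

```python
import numpy as np


def affinity(X, scale=1.0):
    """Gaussian affinity between samples (rows of X), global kernel width."""
    X = np.asarray(X, dtype=float)
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2 * X @ X.T, 0.0)
    width = scale * np.median(d2[d2 > 0])    # simplification of local scaling
    return np.exp(-d2 / width)


def row_normalize(W):
    return W / W.sum(axis=1, keepdims=True)


def local_similarity(W, n_neighbors):
    """S: keep each row's n_neighbors largest affinities, then row-normalize."""
    S = np.zeros_like(W)
    for i, row in enumerate(W):
        nearest = np.argsort(row)[::-1][:n_neighbors]   # includes the sample itself
        S[i, nearest] = row[nearest]
    return row_normalize(S)


def snf(affinities, n_neighbors=5, n_iter=10):
    V = len(affinities)
    P = [row_normalize(W) for W in affinities]          # step b: P = D^-1 W
    S = [local_similarity(W, n_neighbors) for W in affinities]
    for _ in range(n_iter):                             # step c
        updated = []
        for v in range(V):
            others = sum(P[k] for k in range(V) if k != v) / (V - 1)
            # Row re-normalization after each update is a choice of this sketch.
            updated.append(row_normalize(S[v] @ others @ S[v].T))
        P = updated                                     # all views updated together
    return sum(P) / V                                   # step d: P_fused


rng = np.random.default_rng(2)
groups = np.repeat([0, 1], 10)                          # two toy patient groups
view1 = rng.normal(size=(20, 3)) + 6 * groups[:, None]
view2 = rng.normal(size=(20, 8)) + 4 * groups[:, None]
fused = snf([affinity(view1), affinity(view2)])
```

In this toy setting, within-group entries of the fused matrix exceed between-group entries, which is the structure spectral clustering then recovers.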

Table 2: Network Integration Tools and Performance

| Tool | Integration Strategy | Network Types Supported | Key Output | Approximate Scalability |
|---|---|---|---|---|
| Similarity Network Fusion (SNF) | Iterative Message Passing | Sample similarity | Fused network, clusters | ~1,000 samples |
| MOGAMUN | Multi-Objective Genetic Algorithm | PPI + Expression | Subnetworks | ~500 genes |
| OmicsIntegrator | Prize-Collecting Steiner Forest | PPI + any omics | Context-specific networks | ~10,000 nodes |

Multi-Layer Network Fusion and Clustering

Research Reagent Solutions

- Reference Interaction Databases: Curated knowledge bases (e.g., STRING, BioGRID, Recon3D metabolic model) serve as scaffold networks.

- Network Visualization Software: Tools like Cytoscape with dedicated plugins (Omics Visualizer, enhancedGraphics) are widely used for interpretation.

- High-Performance Computing (HPC) Resources: Network algorithms are computationally intensive, requiring access to HPC clusters with adequate RAM (≥64 GB) and multi-core processors.

Machine Learning-Based Methods

ML methods, particularly supervised and deep learning models, learn complex, non-linear patterns from integrated omics data for predictive modeling.

Detailed Methodology

Protocol for a Deep Learning-Based Multi-Omics Classifier (e.g., for Disease Prediction):

- Data Partitioning & Input Engineering: Split samples into training (70%), validation (15%), and test (15%) sets. For each omics type, design an input encoding layer (e.g., a dense layer for molecular features).

- Model Architecture Definition: Implement a multi-modal neural network.

- Input Layers: Separate input tensors for each omics type.

- Encoder Branches: Each branch contains dense layers with batch normalization, ReLU activation, and dropout (e.g., 0.5) for feature extraction.

- Integration Layer: Concatenate the outputs of all branches.

- Classifier Head: Dense layers culminating in a softmax output for classification.

- Model Training & Validation: Train using the Adam optimizer with a categorical cross-entropy loss. Monitor validation loss for early stopping. Use feature attribution methods (e.g., SHAP, Integrated Gradients) on the trained model to identify influential features across omics layers.
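The data-partitioning step is where leakage most often creeps in, so it is worth making concrete. The sketch below is a minimal NumPy illustration under stated assumptions: labels are stratified so that class proportions are similar across the 70/15/15 splits, and each omics input is standardized with statistics estimated on the training samples only, one scaler per input branch. The function names and simulated data are hypothetical. The neural network itself is omitted because it depends on the deep learning framework chosen.

```python
import numpy as np


def stratified_split(y, fractions=(0.70, 0.15, 0.15), seed=0):
    """Return (train, val, test) index arrays, stratified by class label."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    train, val, test = [], [], []
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        n_train = int(round(fractions[0] * idx.size))
        n_val = int(round(fractions[1] * idx.size))
        train.extend(idx[:n_train])
        val.extend(idx[n_train:n_train + n_val])
        test.extend(idx[n_train + n_val:])            # remainder goes to test
    return tuple(np.sort(np.asarray(s, dtype=int)) for s in (train, val, test))


def fit_scaler(X_train):
    """Per-feature mean/SD estimated on the training set only, to avoid leakage."""
    mu = X_train.mean(axis=0)
    sd = X_train.std(axis=0, ddof=1)
    sd = np.where(sd == 0, 1.0, sd)
    return lambda X: (X - mu) / sd


rng = np.random.default_rng(3)
y = np.repeat([0, 1], [40, 60])                       # toy class labels
views = {"rna": rng.normal(size=(100, 200)), "protein": rng.normal(size=(100, 30))}
train, val, test = stratified_split(y)
scaled = {}
for name, X in views.items():
    scale = fit_scaler(X[train])                      # one scaler per omics input branch
    scaled[name] = (scale(X[train]), scale(X[val]), scale(X[test]))
```

Each entry of scaled then feeds the corresponding input branch of the multi-modal network, with the validation split reserved for early stopping and the test split touched only once at the end.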

Table 3: Comparison of ML Integration Approaches

| Method Class | Example Algorithms | Handles High Dimensionality | Models Non-linearity | Interpretability |
|---|---|---|---|---|
| Supervised (Late Integration) | Stacked Generalization, MOFA + Classifier | Moderate | Yes | Moderate |
| Deep Learning (Hybrid) | Multi-modal Autoencoders, DeepION | Yes (with regularization) | High | Low (requires XAI) |
| Kernel Methods | Multiple Kernel Learning (MKL) | Yes | Yes | Low |

Deep Learning Model for Multi-Omics Integration

Research Reagent Solutions

- Curated Benchmark Suites: Frameworks like

MultiBenchprovide standardized datasets and protocols for fair ML model comparison. - Explainable AI (XAI) Tools: Software libraries (e.g.,

SHAP,Captum,LIME) are indispensable for interpreting "black-box" model predictions. - Specialized ML Platforms: Cloud-based AI platforms (Google Vertex AI, NVIDIA CLARA) offer optimized environments for developing and deploying large multi-omics models.

The selection of a multi-omics integration approach—concatenation, correlation, network, or machine learning—is contingent upon the specific biological question, data characteristics, and desired outcome. Concatenation and correlation offer intuitive starts, while network and ML methods provide powerful, albeit complex, frameworks for uncovering deep biological insights. As the field matures, hybrid methods that combine the strengths of these paradigms will be central to advancing the thesis of multi-omics research, ultimately accelerating biomarker discovery and therapeutic development.

Essential Software and R/Python Packages (e.g., MixOmics, MOFA, OmicsPlayground)

Multi-omics data integration is a cornerstone of modern systems biology, enabling researchers to derive a holistic understanding of biological systems. This guide, framed within a broader thesis on multi-omics data analysis, provides an in-depth technical overview of essential software and packages for researchers, scientists, and drug development professionals. We focus on three pivotal tools: MixOmics, MOFA, and OmicsPlayground.

Core Packages and Software

Quantitative Comparison of Core Tools

The following table summarizes key quantitative and functional attributes of the featured tools.

Table 1: Comparison of Multi-Omics Integration Tools

| Feature | MixOmics (R) | MOFA (R/Python) | OmicsPlayground (R/Web) |
|---|---|---|---|
| Primary Method | Projection (PLS, sPLS, DIABLO) | Factor Analysis (Bayesian) | Exploratory Analysis & Visualization Suite |
| Omics Types Supported | Transcriptomics, Metabolomics, Proteomics, Microbiome | Any (Designed for heterogeneous data) | Transcriptomics, Proteomics, Metabolomics, Single-cell |
| Key Strength | Dimensionality reduction, supervised integration | Unsupervised discovery of latent factors | Interactive GUI, no-code analysis, extensive preprocessing |
| Integration Model | Multi-block, multivariate | Statistical, factor-based | Modular, workflow-based |
| Typical Output | Component plots, loadings, network inferences | Factor values, weights, variance decomposition | Interactive plots, biomarker lists, pathway maps |
| Best For | Class prediction, biomarker discovery, correlation | Uncovering hidden sources of variation across datasets | Rapid hypothesis generation, data exploration, validation |
| License | GPL-2/3 | LGPL-3 | Freemium (Academic/Commercial) |
| Latest Version (as of 2024) | 6.24.0 | 2.0 (MOFA2) / 1.6.0 (MOFA+) | 3.0 |

The Scientist's Toolkit: Research Reagent Solutions

This table details essential computational "reagents" for conducting multi-omics integration studies.

Table 2: Essential Research Reagent Solutions for Multi-Omics Analysis

| Item | Function/Explanation |
|---|---|
| High-Performance Compute (HPC) Cluster or Cloud Credits | Essential for running resource-intensive integration algorithms and large-scale permutations. |
| Curated Reference Databases (e.g., KEGG, STRING, Reactome) | Provide biological context for interpreting integrated results (pathways, interactions). |
| Sample Metadata Manager (e.g., REDCap, LabKey) | Critical for ensuring accurate sample pairing across omics layers and covariate tracking. |
| Containerization Software (Docker/Singularity) | Guarantees reproducibility by encapsulating software, dependencies, and environment. |
| Normalization & Batch Correction Algorithms (e.g., ComBat, SVA) | The computational counterpart of wet-lab reagents; essential for removing technical noise before integration. |
| Benchmarking Dataset (e.g., TCGA multi-omics, simulated data) | Serves as a positive control to validate the integration pipeline and method performance. |

Detailed Methodologies and Experimental Protocols

Protocol: Multi-Omics Integrative Analysis using DIABLO (MixOmics)

Objective: To identify multi-omics biomarkers predictive of a phenotypic outcome (e.g., disease vs. control).

- Data Preprocessing: Independently normalize and filter each omics dataset (e.g., RNA-seq, metabolomics). Scale each variable to zero mean and unit variance.

- Experimental Design Check: Verify that samples are aligned (same samples, same order) across datasets. Format the data as a named list of matrices (Xlist) and a factor vector for the outcome (Y).

- Parameter Tuning (tune.block.splsda):

  - Perform 5-fold cross-validation to determine the optimal number of components and the number of features to select per component and per omics type.

  - The tuning criterion is the balanced error rate.

- Model Training (block.splsda): Run the DIABLO model using the tuned parameters. The model finds components that maximize the covariance between the selected features of the omics datasets (with block connections specified in a design matrix) and with the outcome.

- Performance Evaluation (perf): Assess the model's prediction accuracy using repeated cross-validation to estimate generalizability.

- Visualization & Interpretation: Generate sample plots (2D/3D), correlation circle plots, and loading plots to interpret the selected multi-omics features and their associations.
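DIABLO itself runs in R (mixOmics), so the following is only an illustrative Python sketch of two steps named above: scaling each feature to zero mean and unit variance, and the balanced error rate (BER) used as the tuning criterion. The helper names `standardize_columns` and `balanced_error_rate` are hypothetical, and each omics block is assumed to be a list of sample rows. BER averages the per-class error rates, so a classifier that ignores a minority class is penalized even when its overall accuracy looks acceptable.

```python
from statistics import mean, stdev


def standardize_columns(matrix):
    """Scale each feature (column) of a samples-x-features block to mean 0, unit variance.

    Uses the sample standard deviation (n - 1 denominator), as R's scale() does.
    Constant features are mapped to 0 rather than divided by zero.
    """
    columns = list(zip(*matrix))  # transpose: one tuple per feature
    scaled = []
    for col in columns:
        mu, sd = mean(col), stdev(col)
        scaled.append([(x - mu) / sd if sd > 0 else 0.0 for x in col])
    return [list(row) for row in zip(*scaled)]  # back to samples x features


def balanced_error_rate(y_true, y_pred):
    """Average of the per-class misclassification rates."""
    per_class = []
    for cls in sorted(set(y_true)):
        idx = [i for i, y in enumerate(y_true) if y == cls]
        wrong = sum(1 for i in idx if y_pred[i] != cls)
        per_class.append(wrong / len(idx))
    return mean(per_class)


if __name__ == "__main__":
    # Imbalanced toy outcome: 8 controls, 2 disease samples,
    # and a model that always predicts "control".
    y_true = ["control"] * 8 + ["disease"] * 2
    y_pred = ["control"] * 10
    overall_error = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
    print(overall_error)                        # 0.2
    print(balanced_error_rate(y_true, y_pred))  # 0.5
```

The overall error of 0.2 hides the fact that every disease sample is misclassified; the BER of 0.5 exposes it, which is why a balanced criterion is preferred when tuning on unbalanced phenotypes.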

Protocol: Unsupervised Integration using MOFA+

Objective: To discover latent factors that capture shared and unique sources of biological variation across multiple omics assays.

- Data Preparation: Format the data as one matrix per view (omics type) with matching samples. Missing values do not need to be imputed; MOFA+ accommodates them natively.

- Model Creation (create_mofa): Initialize the MOFA object, then specify a likelihood for each view in the model options (Gaussian for continuous, Bernoulli for binary, Poisson for count data).

- Model Training (run_mofa):

  - Set the model and training options (e.g., number of factors, convergence criteria).

  - The model uses variational inference to decompose each data matrix into Factors (samples x latent factors), Weights (features x factors), and an intercept.

- Variance Decomposition Analysis (plot_variance_explained): Quantify the proportion of variance explained by each factor in each view. This distinguishes factors that are global (active in many views) from those that are view-specific.

- Factor Interpretation:

  - Correlate factor values with known sample covariates (e.g., clinical traits).

  - Examine the top-weighted features for each factor to infer its biological meaning (e.g., a factor whose top weights fall on cell-cycle genes).

- Downstream Analysis: Use factor values as reduced-dimension covariates in survival analysis, or to stratify samples into molecular subgroups.
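The variance decomposition step can be illustrated with a small, self-contained Python sketch. It assumes one view's data matrix Y (samples x features, already centered), the shared factor matrix Z (samples x factors) and that view's weight matrix W (features x factors), and computes for each factor k the coefficient of determination R² = 1 - Σ(Y - z_k w_kᵀ)² / ΣY². This is the standard R² formulation written out by hand, not a call into MOFA2; the function name and toy data are hypothetical.

```python
def variance_explained_per_factor(Y, Z, W):
    """Fraction of variance (R^2) explained by each factor in a single view.

    Y: samples x features, assumed centered per feature.
    Z: samples x K factor values (shared across views).
    W: features x K weights for this view.
    """
    n_samples, n_features, n_factors = len(Y), len(Y[0]), len(Z[0])
    ss_total = sum(Y[i][j] ** 2 for i in range(n_samples) for j in range(n_features))
    r2 = []
    for k in range(n_factors):
        # Residual after reconstructing the view from factor k alone: Y - z_k w_k^T
        ss_resid = sum(
            (Y[i][j] - Z[i][k] * W[j][k]) ** 2
            for i in range(n_samples)
            for j in range(n_features)
        )
        r2.append(1.0 - ss_resid / ss_total)
    return r2


if __name__ == "__main__":
    # Two toy factors over four samples.
    Z = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
    # View A is driven only by factor 1.
    W_A = [[2, 0], [1, 0]]
    Y_A = [[2, 1], [-2, -1], [2, 1], [-2, -1]]
    # View B (one feature) is driven equally by factors 1 and 2.
    W_B = [[1, 1]]
    Y_B = [[2], [0], [0], [-2]]
    print(variance_explained_per_factor(Y_A, Z, W_A))  # [1.0, 0.0]
    print(variance_explained_per_factor(Y_B, Z, W_B))  # [0.5, 0.5]
```

In this toy case factor 1 is active in both views (global) while factor 2 only explains variance in view B (view-specific), which is the pattern a variance-explained plot is meant to reveal.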

Visualizations of Workflows and Relationships

Diagram 1: Generic Multi-Omics Integration Workflow

Diagram 2: MOFA+ Factor Model Decomposition Logic

Within the broader thesis on Introduction to multi-omics data analysis research, this case study illustrates the translational power of multi-omics in oncology. Traditional single-omics approaches often fail to capture the complex, adaptive nature of cancer. Multi-omics, the integrative analysis of genomics, transcriptomics, proteomics, metabolomics, and epigenomics, provides a systems-level view of tumor biology, enabling more precise identification of novel, druggable targets and predictive biomarkers.

Foundational Multi-Omics Technologies and Workflow

A standard multi-omics workflow for target identification involves sequential and parallel data generation, integration, and validation.

Experimental Protocols for Key Omics Layers:

Whole Genome/Exome Sequencing (Genomics):

- Method: DNA is extracted from tumor and matched normal tissue. Libraries are prepared, followed by sequencing on platforms like Illumina NovaSeq. Somatic variants (SNVs, indels) are called using tools like GATK Mutect2 and annotated for functional impact.

- Key Reagent: Hybridization capture probes (e.g., IDT xGen Pan-Cancer Panel) for exome/targeted sequencing enrich disease-relevant genomic regions.

RNA Sequencing (Transcriptomics):

- Method: Total RNA is extracted, and ribosomal RNA is removed (by rRNA depletion or poly(A) mRNA selection) before cDNA libraries are constructed. Reads are aligned with STAR, and gene expression (counts), fusion genes (Arriba, STAR-Fusion), and alternative splicing events are quantified.
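Raw read counts are not directly comparable between libraries of different sequencing depth, so they are normally transformed before integration. One common choice is log2 counts-per-million (log-CPM). The sketch below assumes a genes-x-samples list of lists and a simple pseudocount of 1; it is a simplified illustration, not the exact edgeR or DESeq2 normalization, which additionally adjust for library composition. The function name `log2_cpm` is hypothetical.

```python
import math


def log2_cpm(counts, pseudocount=1.0):
    """Convert a genes x samples matrix of raw counts to log2(CPM + pseudocount)."""
    n_genes, n_samples = len(counts), len(counts[0])
    # Library size = total reads assigned to genes in each sample (column sums).
    lib_sizes = [sum(counts[g][s] for g in range(n_genes)) for s in range(n_samples)]
    return [
        [math.log2(counts[g][s] / lib_sizes[s] * 1e6 + pseudocount) for s in range(n_samples)]
        for g in range(n_genes)
    ]


if __name__ == "__main__":
    # Sample 2 was sequenced twice as deeply as sample 1 but has the same composition.
    counts = [
        [300, 600],  # gene A
        [100, 200],  # gene B
    ]
    for gene, row in zip(["A", "B"], log2_cpm(counts)):
        print(gene, [round(v, 3) for v in row])
```

After the transformation each gene has the same value in both samples, showing that the twofold difference in raw counts was purely a depth effect.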

Mass Spectrometry-Based Proteomics & Phosphoproteomics:

- Method: Proteins are extracted from tissue and digested with trypsin, and the resulting peptides are fractionated. For phosphoproteomics, phosphopeptides are enriched from the digest with TiO2 or IMAC magnetic beads before MS. Liquid chromatography-tandem mass spectrometry (LC-MS/MS) is performed (e.g., on a Thermo Fisher Orbitrap Eclipse), and the data are processed with MaxQuant for identification and quantification.

Reverse-Phase Protein Array (RPPA - Targeted Proteomics):

- Method: Lysates are printed on nitrocellulose-coated slides, probed with validated primary antibodies against specific proteins or post-translational modifications, and detected by chemiluminescence. The assay provides quantitative, pathway-centric data.

Integrative Analysis: From Data to Candidate Targets

The core challenge is data integration. Methods include:

- Multi-Omics Factor Analysis (MOFA): A statistical model that identifies latent factors driving variation across all omics datasets.

- Pathway-Centric Integration: Tools like PARADIGM or Ingenuity Pathway Analysis combine omics alterations to infer pathway activity.

- Machine Learning: Supervised models (e.g., random forests) can integrate features from multiple layers to predict drug response or vulnerability.
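As a deliberately simple illustration of the pathway-centric idea (not PARADIGM's probabilistic model or IPA's scoring), the sketch below z-scores every pathway member in each omics layer across samples and averages those z-scores into one activity score per sample. The nested-dictionary layout, the function names and the example gene set are hypothetical.

```python
from statistics import mean, stdev


def zscore(values):
    """Standardize one feature across samples; constant features become all zeros."""
    mu, sd = mean(values), stdev(values)
    return [(v - mu) / sd if sd > 0 else 0.0 for v in values]


def pathway_scores(layers, pathway_members):
    """Naive multi-layer pathway activity score.

    layers: {layer_name: {feature_name: [value per sample, same sample order]}}
    pathway_members: set of feature names (e.g., gene symbols) in the pathway.
    Returns, per sample, the mean z-score of all pathway features found in any layer.
    """
    member_z = [
        zscore(values)
        for layer in layers.values()
        for feature, values in layer.items()
        if feature in pathway_members
    ]
    if not member_z:
        raise ValueError("none of the pathway members were found in any layer")
    n_samples = len(member_z[0])
    return [mean(z[s] for z in member_z) for s in range(n_samples)]


if __name__ == "__main__":
    layers = {
        "rna": {"PARP1": [1.0, 2.0, 3.0], "ATM": [4.0, 4.0, 7.0], "GAPDH": [9.0, 9.5, 9.0]},
        "protein": {"PARP1": [10.0, 20.0, 30.0]},
    }
    ddr_like_set = {"PARP1", "ATM"}  # hypothetical gene set, for illustration only
    print(pathway_scores(layers, ddr_like_set))
```

Averaging z-scores gives each measured feature equal weight regardless of layer; dedicated tools instead model how alterations propagate through the pathway topology.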

Visualization of the Core Multi-Omics Integration Workflow:

Diagram Title: Multi-Omics Workflow for Target Discovery

Case Study: Identifying a Synthetic Lethal Target in Pancreatic Ductal Adenocarcinoma (PDAC)

A recent study integrated genomic, transcriptomic, and proteomic data from PDAC patient samples and cell lines.

Key Findings from Integrative Analysis:

- Genomics identified frequent KRAS and TP53 mutations.

- Proteomics revealed consistent overexpression of the DNA repair protein PARP1, even in tumors without genomic signatures of homologous recombination deficiency (HRD).

- Phosphoproteomics identified hyperactivation of the ATM/ATR DNA damage response (DDR) pathway.

Hypothesis: PDAC cells with KRAS/TP53 co-mutations exhibit a latent DNA repair defect and rely on PARP1-mediated backup repair, creating a context-specific vulnerability.

Visualization of the Identified Signaling Axis:

Diagram Title: PDAC Synthetic Lethality Hypothesis

Validation Protocol:

- Genetic Knockdown: siRNA-mediated PARP1 knockdown in PDAC cell lines (with KRAS/TP53 mutations) led to significant loss of viability vs. controls.

- Pharmacological Inhibition: Treatment with PARP inhibitors (Olaparib, Talazoparib) selectively killed PDAC cells, correlating with proteomic PARP1 levels, not genomic HR status.

- In Vivo Validation: Patient-derived xenograft (PDX) models with high proteomic PARP1 levels showed marked tumor growth inhibition under PARPi treatment, supporting PARP1 as an actionable target beyond BRCA-mutant contexts.

Table 1: Multi-Omics Data Yield from PDAC Cohort (n=50)

| Omics Layer | Platform | Key Metrics | Median Coverage/Depth |

|---|---|---|---|

| Genomics | WES (Illumina) | 12,500 somatic variants; 45% KRAS mut; 60% TP53 mut | 150x tumor, 60x normal |

| Transcriptomics | RNA-Seq (Poly-A) | 18,000 genes expressed; 5,000 differentially expressed | 50M paired-end reads |

| Proteomics | LC-MS/MS (TMT) | 8,500 proteins quantified; PARP1 >2x overexpressed in 70% | N/A |

| Phosphoproteomics | LC-MS/MS (TiO2) | 25,000 phosphosites; DDR pathway enriched (p<0.001) | N/A |

Table 2: Validation Experiment Results

| Experiment | Model System | Intervention | Key Result (vs Control) | p-value |

|---|---|---|---|---|

| PARP1 Knockdown | MIA PaCa-2 Cell Line | siRNA PARP1 | 75% reduction in viability | < 0.001 |

| PARP Inhibition | 10 PDAC Cell Lines | Olaparib (10µM, 72h) | IC50 correlated with PARP1 protein (R=0.82) | 0.003 |

| In Vivo PDX Study | 5 PARP1-High PDX Models | Talazoparib (1mg/kg, 21d) | 80% tumor growth inhibition | < 0.001 |
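The significance of a Pearson correlation such as the R = 0.82 reported across 10 cell lines is usually assessed with the statistic t = r·√(n - 2) / √(1 - r²), compared against a t distribution with n - 2 degrees of freedom. The minimal Python sketch below computes r and t; the function names are illustrative, and in practice a statistics library would also supply the p-value from the t distribution.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def t_statistic_from_r(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


if __name__ == "__main__":
    # Values reported in Table 2: R = 0.82 across 10 cell lines.
    t = t_statistic_from_r(0.82, 10)
    print(f"t = {t:.2f} on {10 - 2} degrees of freedom")  # t = 4.05 on 8 degrees of freedom
```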

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Reagents for Multi-Omics Target Discovery

| Reagent/Solution | Vendor Examples | Primary Function in Workflow |

|---|---|---|

| AllPrep DNA/RNA/Protein Kit | Qiagen | Simultaneous isolation of intact multi-omic molecules from a single tissue sample. |

| xGen Pan-Cancer Hybridization Panel | Integrated DNA Technologies (IDT) | For targeted sequencing, enriching cancer-related genes for efficient variant detection. |

| Poly(A) mRNA Magnetic Beads | NEB, Thermo Fisher | Isolation of polyadenylated mRNA from total RNA for RNA-Seq library prep. |

| TMTpro 16plex Isobaric Label Reagent Set | Thermo Fisher | Multiplexing up to 16 samples in one MS run for high-throughput, quantitative proteomics. |

| Phosphopeptide Enrichment TiO2 Magnetic Beads | GL Sciences, MilliporeSigma | Selective enrichment of phosphopeptides from complex peptide mixtures for phosphoproteomics. |

| Validated Primary Antibodies for RPPA/WB | CST, Abcam | Target-specific protein detection and quantification for orthogonal validation. |

| PARP Inhibitors (Olaparib, Talazoparib) | Selleckchem, MedChemExpress | Pharmacological probes for validating PARP1 target dependency in in vitro and in vivo assays. |

This case study demonstrates that multi-omics integration moves beyond correlative genomics to reveal functional, context-dependent drug targets. The identification of PARP1 as a target in a molecularly defined PDAC subset, driven by proteomic rather than genomic alterations, underscores the necessity of layered data. This approach, framed within systematic multi-omics research, is reshaping oncology drug discovery by identifying novel targets, defining responsive patient populations, and accelerating the development of precision therapies.

Solving the Multi-Omics Puzzle: Troubleshooting Batch Effects, Statistical Power, and Integration Challenges

Identifying and Correcting for Batch Effects and Technical Variation Across Platforms

Within the broader thesis on Introduction to multi-omics data analysis research, a fundamental challenge emerges when integrating datasets generated across different laboratories, times, or technological platforms. This challenge is the introduction of non-biological, systematic technical variation, commonly termed "batch effects." These artifacts can be of greater magnitude than the biological signals of interest, leading to spurious findings, reduced statistical power, and irreproducible results. This guide provides an in-depth technical examination of methodologies for identifying, diagnosing, and correcting for these pervasive variations.

Batch effects arise from a multitude of sources, which vary by platform.

Primary Sources of Variation:

- Platform/Technology: Differences between microarray vs. RNA-seq, LC-MS vs. GC-MS, or different instrument manufacturers.

- Reagent Lots: Variation in antibody lots, sequencing kits, or chromatography columns.

- Operator & Protocol: Differences in sample handling, library preparation, and data acquisition personnel.

- Temporal Runs: Experiments processed on different days or in different sequential orders.

Diagnosis is the critical first step. Principal Component Analysis (PCA) and hierarchical clustering are standard exploratory tools; a batch effect typically shows up as samples clustering by batch rather than by biological condition. Quantitative approaches such as Surrogate Variable Analysis (SVA), which estimates hidden sources of variation, or a Percent Variance Explained (PVE) calculation can then quantify the proportion of variance attributable to batch.
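A per-feature PVE by batch can be computed as a one-way ANOVA variance decomposition, PVE = 100 × SS_between / SS_total, where SS_between measures how far each batch mean sits from the grand mean. The Python sketch below assumes one numeric value per sample for each feature and one batch label per sample; averaging PVE over features is one simple, assumed way to summarize a whole dataset and is not necessarily the procedure behind the figures in Table 1. Function names are hypothetical.

```python
from statistics import mean


def pve_by_batch(values, batches):
    """Percent of one feature's variance explained by batch (one-way ANOVA decomposition)."""
    grand_mean = mean(values)
    ss_total = sum((v - grand_mean) ** 2 for v in values)
    if ss_total == 0:
        return 0.0  # a constant feature has no variance to explain
    ss_between = 0.0
    for batch in set(batches):
        group = [v for v, b in zip(values, batches) if b == batch]
        ss_between += len(group) * (mean(group) - grand_mean) ** 2
    return 100.0 * ss_between / ss_total


def mean_pve_by_batch(features, batches):
    """Average per-feature PVE; features is a list of per-sample value lists."""
    return mean(pve_by_batch(f, batches) for f in features)


if __name__ == "__main__":
    batches = ["run1", "run1", "run2", "run2"]
    features = [
        [1.0, 1.2, 3.0, 3.1],  # shifts with the run: mostly batch-driven
        [1.0, 3.0, 1.1, 2.9],  # varies within runs: little batch signal
    ]
    for f in features:
        print(round(pve_by_batch(f, batches), 1))
    print(round(mean_pve_by_batch(features, batches), 1))
```

When batch is confounded with a biological condition, a high PVE cannot separate the two, which is why balanced study designs matter before any computational correction.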

Table 1: Percent Variance Explained by Batch in Example Multi-Omics Datasets

| Omics Type | Platform A | Platform B | PVE by Batch (%) | Method Used |

|---|---|---|---|---|

| Transcriptomics | Illumina HiSeq | Illumina NovaSeq | 35% | SVA (Leek, 2014) |

| Proteomics | Thermo TMT-10plex | Bruker label-free | 50% | ANOVA-PVE |

| Metabolomics | Agilent GC-TOFMS | Waters LC-HRMS | 28% | PCA-based PVE |

| Methylomics | Illumina 450K | Illumina EPIC | 22% | ComBat (Johnson, 2007) |

Correction Methodologies and Experimental Protocols

Correction strategies are divided into study design-based and computational approaches.