GPU-Accelerated MD Simulations: Ultimate Guide to AMBER, NAMD & GROMACS Performance in 2024

Abstract

This comprehensive guide explores GPU acceleration for molecular dynamics (MD) simulations using AMBER, NAMD, and GROMACS. Tailored for researchers, scientists, and drug development professionals, it covers foundational principles, practical implementation and benchmarking, troubleshooting and optimization strategies, and rigorous validation techniques. The article provides current insights into maximizing simulation throughput, accuracy, and efficiency for biomedical discovery.

Demystifying GPU Acceleration: Core Concepts for AMBER, NAMD, and GROMACS

Application Notes: The Impact of GPU Acceleration on Simulation Scale and Speed

Molecular dynamics (MD) simulation is a computational method for studying the physical movements of atoms and molecules over time. Graphics Processing Unit (GPU) acceleration has transformed the field by providing massive parallel processing power, enabling simulations that were previously impractical. In biomedical research, this allows the study of large, biologically relevant systems (complete virus capsids, membrane protein complexes, or drug-receptor interactions) over microsecond timescales and, through ensembles of simulations or specialized methods, toward the millisecond regime where many functional biological events occur.

Quantitative Performance Gains

The table below summarizes benchmark data for popular MD packages (AMBER, NAMD, GROMACS) running on GPU-accelerated systems versus traditional CPU-only clusters.

Table 1: Benchmark Comparison of GPU vs. CPU MD Performance (Approximate Speedups)

| MD Software Package | System Simulated (Atoms) | CPU Baseline (ns/day) | GPU Accelerated (ns/day) | Fold Speed Increase | Key Biomedical Application |
|---|---|---|---|---|---|
| AMBER (pmemd.cuda) | ~100,000 (Protein-Ligand Complex) | 5 | 250 | 50x | High-throughput virtual screening for drug discovery. |
| NAMD (CUDA) | ~1,000,000 (HIV Capsid) | 1 | 80 | 80x | Studying viral assembly and disassembly mechanisms. |
| GROMACS (GPU) | ~500,000 (Membrane Protein in Lipid Bilayer) | 4 | 200 | 50x | Investigating ion channel gating and drug binding. |
| GROMACS (GPU, Multi-Node) | ~5,000,000 (Ribosome Complex) | 0.5 | 100 | 200x | Simulating protein synthesis and antibiotic action. |

ns/day: nanoseconds of simulated time achieved per day of wall-clock compute. Benchmarks are illustrative, based on recent literature and community reports, and compare modern GPU hardware (e.g., NVIDIA A100/V100) against high-end CPU nodes.
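A quick way to read Table 1 is to convert ns/day into a fold speedup and a time-to-solution. The Python sketch below assumes constant throughput over the whole run; `speedup` and `days_to_target` are illustrative helper names, and the inputs are the AMBER row's illustrative values.

```python
def speedup(gpu_ns_per_day: float, cpu_ns_per_day: float) -> float:
    """Fold speedup of the GPU run relative to the CPU baseline."""
    return gpu_ns_per_day / cpu_ns_per_day


def days_to_target(target_ns: float, ns_per_day: float) -> float:
    """Wall-clock days needed to reach target_ns at a constant throughput."""
    return target_ns / ns_per_day


# Illustrative values from the AMBER row of Table 1 (5 vs. 250 ns/day).
cpu, gpu = 5.0, 250.0
print(f"speedup: {speedup(gpu, cpu):.0f}x")
print(f"500 ns on CPU: {days_to_target(500, cpu):.0f} days")
print(f"500 ns on GPU: {days_to_target(500, gpu):.0f} days")
```

With these inputs the sketch reports a 50x speedup, and a 500 ns trajectory drops from 100 days on the CPU baseline to 2 days on the GPU.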

Experimental Protocols

Protocol 1: Standard GPU-Accelerated MD Workflow for Protein-Ligand Binding Analysis

This protocol outlines the key steps for setting up and running a simulation to study the binding stability of a drug candidate (ligand) to a protein target using GPU-accelerated MD.

Objective: To simulate a solvated protein-ligand complex for 500 nanoseconds, assess the stability of the binding mode, and estimate the binding free energy.

Materials & Software:

- Protein structure file (PDB format).

- Ligand parameter file (generated via antechamber/ACPYPE).

- AMBER, NAMD, or GROMACS software suite (GPU-enabled version installed).

- System preparation tool (e.g., `tleap` for AMBER, CHARMM-GUI for NAMD, `gmx pdb2gmx` for GROMACS).

- High-performance computing cluster with NVIDIA GPUs.

- Visualization/analysis software (VMD, PyMOL, MDTraj).

Procedure:

System Preparation:

- Load the protein PDB file. Remove crystal water molecules except those crucial for binding.

- Parameterize the ligand using GAFF/AM1-BCC (AMBER) or CGenFF (NAMD/CHARMM) force fields. Generate topology and coordinate files.

- Combine protein and ligand files. Solvate the complex in a periodic box of explicit water molecules (e.g., TIP3P), ensuring a minimum buffer distance of 10 Å from the protein to the box edge.

- Add neutralizing ions (e.g., Na⁺, Cl⁻) to achieve physiological ion concentration (e.g., 0.15 M NaCl).
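The box-size and salt steps above reduce to simple arithmetic: a cubic box edge is the solute's largest extent plus the buffer on both sides, and the number of NaCl pairs is roughly concentration × Avogadro's number × box volume, plus counterions for the solute's net charge. The minimal Python sketch below assumes a cubic box and uses the full box volume; preparation tools may instead base the count on water volume or water count, so their numbers will differ somewhat. The 80 Å extent and −5 e net charge are hypothetical.

```python
AVOGADRO = 6.02214076e23          # particles per mole
LITERS_PER_CUBIC_ANGSTROM = 1e-27  # 1 Å^3 = 1e-30 m^3 = 1e-27 L


def cubic_box_edge(solute_extent_a: float, buffer_a: float = 10.0) -> float:
    """Edge length (Å) of a cubic box leaving `buffer_a` on every side of the solute."""
    return solute_extent_a + 2.0 * buffer_a


def ion_counts(box_edge_a: float, conc_molar: float, net_charge: int) -> tuple[int, int]:
    """Return (n_cation, n_anion) for a monovalent salt such as NaCl.

    Salt pairs are estimated from the full box volume (an approximation),
    then extra counterions neutralize the solute's net charge.
    """
    volume_l = box_edge_a ** 3 * LITERS_PER_CUBIC_ANGSTROM
    n_pairs = round(conc_molar * AVOGADRO * volume_l)
    n_cation = n_pairs + max(0, -net_charge)  # a negative solute needs extra cations
    n_anion = n_pairs + max(0, net_charge)    # a positive solute needs extra anions
    return n_cation, n_anion


edge = cubic_box_edge(80.0)                    # hypothetical 80 Å complex
print(edge)                                     # 100.0
print(ion_counts(edge, 0.15, net_charge=-5))    # (95, 90)
```

A 100 Å cube holds about 90 NaCl pairs at 0.15 M, so a complex with a net charge of −5 e receives 95 Na⁺ and 90 Cl⁻.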

Energy Minimization (GPU):

- Run a two-step minimization to remove steric clashes:

- Step 1: Restrain the protein and ligand heavy atoms (force constant 5-10 kcal/mol/Ų) while minimizing solvent and ions (500-1000 steps).

- Step 2: Minimize the entire system without restraints (1000-2000 steps).

- Use the GPU-accelerated minimizer (e.g., `pmemd.cuda` in AMBER).

System Equilibration (GPU):

- Heat the system from 0 K to 300 K over 50-100 picoseconds (ps) using a Langevin thermostat, with positional restraints on protein/ligand heavy atoms.

- Conduct constant pressure (NPT) equilibration for 1 nanosecond (ns) at 300 K and 1 bar (Berendsen/Parrinello-Rahman barostat), gradually releasing positional restraints.

Production MD (GPU):

- Launch the final, unrestrained production simulation for 500 ns using a 2-femtosecond (fs) integration time step. Constrain bonds involving hydrogen with SHAKE or LINCS.

- Write trajectory frames every 100 ps (5000 frames total). Monitor system stability (temperature, pressure, density, RMSD).
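The production settings above translate into the integer step counts that MD input files expect (e.g., `nstlim`/`ntwx` in AMBER or `nsteps`/`nstxout-compressed` in GROMACS). A small Python sketch of the bookkeeping; `md_step_counts` is a hypothetical helper name:

```python
FS_PER_NS = 1_000_000
FS_PER_PS = 1_000


def md_step_counts(length_ns: float, dt_fs: float, frame_interval_ps: float) -> dict:
    """Integer step counts for a run of length_ns with a time step of dt_fs."""
    total_steps = round(length_ns * FS_PER_NS / dt_fs)
    steps_per_frame = round(frame_interval_ps * FS_PER_PS / dt_fs)
    return {
        "total_steps": total_steps,
        "steps_per_frame": steps_per_frame,
        "n_frames": total_steps // steps_per_frame,
    }


print(md_step_counts(500, 2.0, 100))
# {'total_steps': 250000000, 'steps_per_frame': 50000, 'n_frames': 5000}
```

A 500 ns run at 2 fs therefore needs 250 million steps, with a frame written every 50,000 steps.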

Analysis:

- Calculate Root Mean Square Deviation (RMSD) of protein backbone and ligand to assess stability.

- Compute Root Mean Square Fluctuation (RMSF) of residues to identify flexible regions.

- Analyze protein-ligand interactions (hydrogen bonds, hydrophobic contacts) over the trajectory.

- Perform MM/PBSA or MM/GBSA calculations (e.g., with AMBER's MMPBSA.py) or alchemical free energy calculations (GPU-accelerated in AMBER's `pmemd.cuda`) to estimate binding affinity.
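The RMSD and RMSF definitions used in this analysis can be illustrated in plain Python. The sketch assumes the frames have already been superimposed onto the reference (for example by a least-squares fit in cpptraj or MDTraj) and that coordinates are simple (x, y, z) tuples; for real trajectories, use those toolkits rather than this loop-based version.

```python
import math
from typing import Sequence

Coord = tuple[float, float, float]
Frame = Sequence[Coord]


def rmsd(frame: Frame, reference: Frame) -> float:
    """Root mean square deviation between two already superimposed coordinate sets."""
    if len(frame) != len(reference):
        raise ValueError("frame and reference must contain the same atoms")
    sq = sum((a - b) ** 2 for p, q in zip(frame, reference) for a, b in zip(p, q))
    return math.sqrt(sq / len(frame))


def rmsf(frames: Sequence[Frame]) -> list[float]:
    """Per-atom root mean square fluctuation about the time-averaged position."""
    n_frames, n_atoms = len(frames), len(frames[0])
    mean = [[sum(f[i][k] for f in frames) / n_frames for k in range(3)]
            for i in range(n_atoms)]
    return [
        math.sqrt(sum((f[i][k] - mean[i][k]) ** 2 for f in frames for k in range(3)) / n_frames)
        for i in range(n_atoms)
    ]


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
trajectory = [reference, [(0.0, 0.0, 0.0), (1.0, 0.0, 2.0)]]
print([rmsd(f, reference) for f in trajectory])
print(rmsf(trajectory))
```

RMSD tracks each frame's distance from the reference, while RMSF averages over time for each atom, which is why it highlights flexible residues.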

Protocol 2: Alchemical Free Energy Perturbation (FEP) for Lead Optimization

This protocol uses GPU-accelerated FEP to calculate the relative binding free energy difference between two similar ligands, a critical task in optimizing drug potency.

Objective: To compute ΔΔG between Ligand A and Ligand B binding to the same protein target.

Procedure (AMBER/NAMD Example):

Setup of Dual-Topology System:

- Create a "hybrid" topology file representing both Ligand A and Ligand B simultaneously, where one is "coupled" (interacts with the system) and the other is "decoupled" (does not interact), controlled by a scaling parameter (λ).

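- Prepare the solvated, ionized protein complex with this hybrid ligand, plus a matching system containing only the hybrid ligand in water. The relative binding free energy follows from the two legs of the thermodynamic cycle: ΔΔG_bind = ΔG_complex(A→B) − ΔG_solvent(A→B).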

λ-Window Equilibration (GPU):

- Define a series of 12-24 intermediate λ states that morph ligand A into B.

- For each λ window, run a short minimization, heating, and equilibration (2-5 ns total) using GPU-accelerated dynamics to properly equilibrate the environment.

Production FEP Simulation (GPU):

- Run parallel, multi-state simulations (e.g., using AMBER's `pmemd.cuda` multi-GPU capabilities) for each λ window for 5-10 ns each.

- Collect energy difference data between adjacent λ windows.

Free Energy Analysis:

- Use the Bennett Acceptance Ratio (BAR) or Multistate BAR (MBAR) method to estimate the free energy differences between λ states, sum them along each leg (complex and solvent), and compute the final ΔΔG_bind.

Visualizations

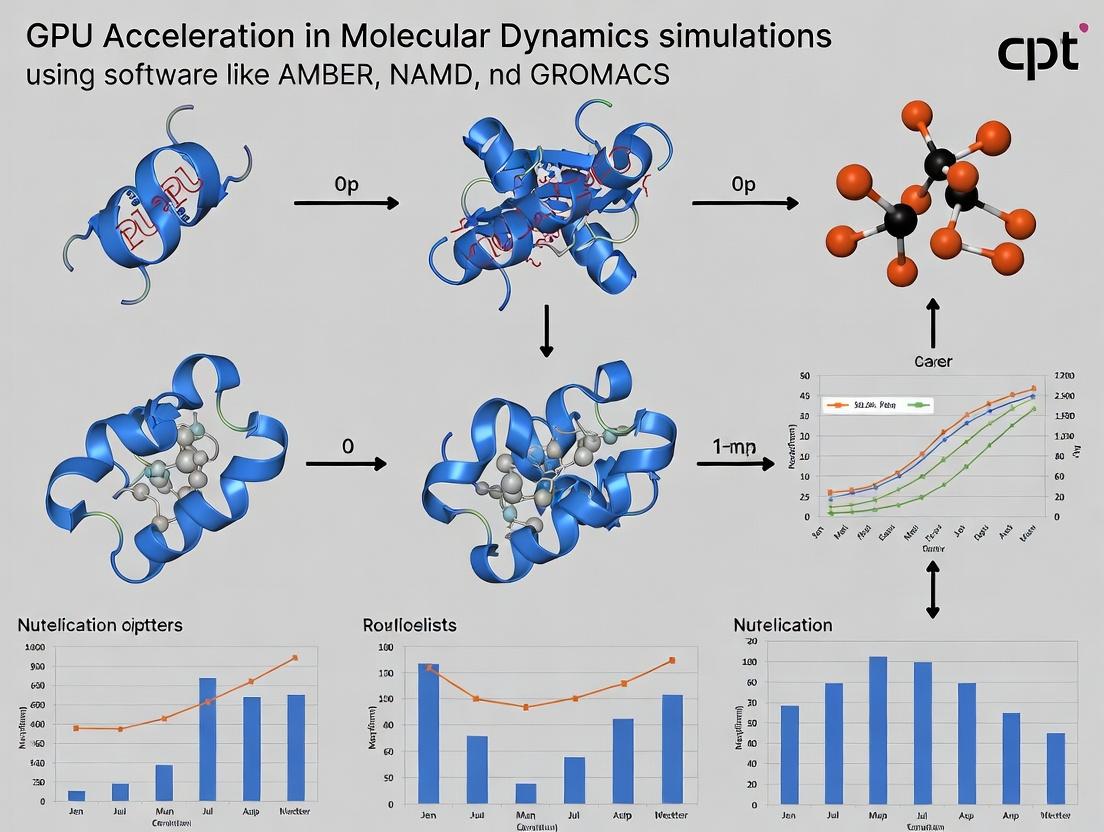

Diagram Title: GPU-Accelerated MD Simulation Workflow

Diagram Title: Alchemical Free Energy Perturbation (FEP) Logic

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Components for a GPU-Accelerated MD Study

| Item / Reagent | Function in Simulation | Example / Note |
|---|---|---|
| GPU Computing Hardware | Provides parallel processing cores for accelerating force calculations and integration. | NVIDIA data-center (A100, H100) or GeForce RTX (4090) series cards. Critical for performance. |
| MD Software (GPU-Enabled) | The core simulation engine. | AMBER (pmemd.cuda), NAMD (CUDA builds), GROMACS (GPU build; offload controlled by `gmx mdrun` options such as `-nb gpu`, `-pme gpu`, and `-update gpu`). |
| Explicit Solvent Model | Mimics the aqueous cellular environment. | TIP3P, TIP4P water models. SPC/E is also common. The choice affects dynamics. |
| Force Field Parameters | Mathematical functions defining interatomic energies (bonds, angles, electrostatics, etc.). | ff19SB (AMBER for proteins), CHARMM36 (NAMD/GROMACS), GAFF2 (for small molecules). |
| Ion Parameters | Accurately model electrolyte solutions for charge neutralization and physiological concentration. | Joung/Cheatham (for AMBER), CHARMM ion parameters. Match to chosen force field. |
| System Preparation Suite | Automates building the simulation box: solvation, ionization, topology generation. | tleap (AMBER), CHARMM-GUI, gmx pdb2gmx (GROMACS). Essential for reproducibility. |
| Trajectory Analysis Toolkit | Processes simulation output to extract biologically relevant metrics. | cpptraj (AMBER), VMD with NAMD, gmx analysis modules (GROMACS), MDAnalysis (Python). |
| Free Energy Calculation Module | Computes binding affinities or relative energies from simulation data. | AMBER's MMPBSA.py or TI/FEP in pmemd.cuda. NAMD's FEP module. GROMACS's free-energy code (`free-energy = yes` in the .mdp). |

This document provides a technical overview of modern GPU hardware fundamentals, specifically contextualized for GPU-accelerated Molecular Dynamics (MD) simulations using packages like AMBER, NAMD, and GROMACS. The shift from CPU to heterogeneous computing has dramatically accelerated MD workflows, enabling longer timescale simulations and larger systems critical for drug discovery and biomolecular research. Understanding the underlying GPU architectures, memory subsystems, and specialized compute units is essential for optimizing simulation protocols, allocating resources, and interpreting performance benchmarks.

Core GPU Architectures for HPC/ML: NVIDIA vs. AMD

NVIDIA's Current Architecture (Hopper, Ada Lovelace): NVIDIA's HPC and AI line is led by the Hopper architecture (e.g., H100), a monolithic-die design with a redesigned Streaming Multiprocessor (SM). Its fourth-generation Tensor Core supports FP8, FP16, BF16, TF32, and FP64, and the Transformer Engine adds dynamic FP8 scaling; for MD, these units matter mainly for ML-driven workflows, since classical force kernels run largely on standard FP32 units. Hopper also introduces Dynamic Programming (DPX) instructions that accelerate algorithms such as Smith-Waterman, relevant to sequence analysis in drug discovery. For desktop/workstation MD, the Ada Lovelace architecture (e.g., RTX 4090) delivers high FP32 throughput, but its FP64 rate is a small fraction (1/64) of FP32, so it suits the mixed-precision modes used by AMBER, NAMD, and GROMACS rather than FP64-heavy codes.

AMD's Current Architecture (CDNA 3, RDNA 3): AMD's compute-focused architecture is CDNA 3 (e.g., Instinct MI300X and MI300A). Both use chiplet designs: the MI300A is an APU that combines CPU and GPU chiplets with shared HBM3 memory, while the MI300X is GPU-only. CDNA 3 features Matrix Core Accelerators (AMD's equivalent to Tensor Cores) that support a wide range of precisions including FP64, FP32, BF16, INT8, and INT4. The architecture emphasizes high-bandwidth memory (HBM3) and Infinity Fabric links for scalable performance. For workstation MD, the RDNA 3 architecture (e.g., Radeon PRO W7900) offers improved double-precision performance over prior generations, though it is less focused on FP64 than CDNA or NVIDIA's HPC GPUs.

Table: Key Architectural Comparison (NVIDIA Hopper vs. AMD CDNA 3)

| Feature | NVIDIA Hopper (H100) | AMD CDNA 3 (MI300X) |
|---|---|---|
| Compute Units | 132 Streaming Multiprocessors (SMs) | 304 Compute Units (CUs) |
| FP64 Peak (TFLOPs) | 34 (Base) / 67 (with FP64 Tensor Core) | 163 (Matrix Cores + CUs) |
| FP32 Peak (TFLOPs) | 67 | 166 |
| Tensor/Matrix Core | 4th Gen Tensor Core (Supports FP64) | Matrix Core Accelerator (Supports FP64) |
| Key MD-Relevant Tech | DPX Instructions, Thread Block Clusters | 192 GB HBM3 per GPU, Matrix FP64 (unified CPU+GPU memory is a feature of the MI300A APU) |
| Memory Type | HBM2e / HBM3 | HBM3 |
| Best For (MD Context) | Large-scale PME, ML-driven MD, FEP | Extremely large system memory footprint simulations |

VRAM (Video RAM) Fundamentals for MD Simulations

VRAM is a critical bottleneck for MD system size. The memory bandwidth (GB/s) determines how quickly atomic coordinates, forces, and neighbor lists can be accessed, while capacity (GB) determines the maximum system size (number of atoms) that can be simulated.

Table: VRAM Capacity vs. Approximate Max System Size (Typical MD, ~2024)

| VRAM Capacity | Approximate Max Atoms (All-Atom, explicit solvent) | Example GPU(s) | Suitable For |
|---|---|---|---|
| 24 GB | 300,000 - 500,000 | RTX 4090, RTX 3090 | Medium protein complexes, small membrane systems |
| 48 GB | 800,000 - 1.2 million | RTX 6000 Ada, A40 | Large complexes, small viral capsids |
| 80 - 128 GB | 2 - 4 million | H100 80GB, MI250X 128GB | Very large assemblies, coarse-grained megastructures |
| > 128 GB | 5+ million | MI300X 192GB, B200 192GB | Massive systems, whole-cell approximations |

These ranges are conservative planning figures; actual footprints depend on the engine, precision mode, cutoff, and PME grid settings and are often lower, so measure them as described in Protocol 1 below.

Protocol 1: Estimating VRAM Requirements for an MD System

- System Preparation: Prepare your solvated and ionized molecular system using a tool like `tleap` (AMBER) or `gmx solvate` (GROMACS).

- Baseline Measurement: Run a minimization or single-step energy calculation on the GPU using your target MD software. Note the peak GPU memory usage via `nvidia-smi -l 1` (NVIDIA) or `rocm-smi` (AMD). Part of this usage is a fixed per-process runtime context, so a moderately large test system gives a more reliable per-atom figure than a very small one.

- Per-Atom Estimate: Divide the peak VRAM usage (in MB) by the number of atoms in your system to obtain a rough per-atom memory footprint. Planning figures of 0.08 - 0.15 MB/atom for explicit solvent are conservative; GPU engines running in mixed precision often need less, so prefer your measured value.

- Scaling Projection: Multiply your per-atom footprint by the target number of atoms for your planned simulation. Add a 20-25% overhead for simulation growth (e.g., box expansion) and analysis buffers.

- Bandwidth Check: For production runs, ensure your GPU's memory bandwidth aligns with software requirements. GROMACS/NAMD with PME is highly bandwidth-sensitive. Use benchmarks from similar-sized systems.
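The per-atom scaling in this protocol can be written as a short Python function. It is a linear projection under the assumption that memory grows proportionally with atom count; `context_mb` is an optional, hypothetical parameter for subtracting a fixed runtime overhead you have measured separately, and `overhead` is the 20-25% margin from the Scaling Projection step.

```python
def project_vram_gb(peak_mb: float, n_atoms_measured: int, n_atoms_target: int,
                    context_mb: float = 0.0, overhead: float = 0.25) -> float:
    """Linearly project GPU memory (GB) for a larger system from one measured run.

    peak_mb:    peak usage from nvidia-smi / rocm-smi during the test run.
    context_mb: optional fixed cost to exclude before scaling (measure it yourself).
    overhead:   fractional safety margin (0.20-0.25 in the protocol above).
    """
    per_atom_mb = (peak_mb - context_mb) / n_atoms_measured
    projected_mb = per_atom_mb * n_atoms_target + context_mb
    return projected_mb * (1.0 + overhead) / 1024.0


estimate = project_vram_gb(peak_mb=4096, n_atoms_measured=50_000, n_atoms_target=500_000)
print(f"{estimate:.1f} GB")
```

For example, a test run peaking at 4,096 MB for 50,000 atoms projects to about 50 GB for a 500,000-atom target with a 25% margin.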

Tensor Cores & Matrix Cores in Scientific Computing

Originally designed for AI workloads, these specialized units perform mixed-precision matrix multiplications and are increasingly used in MD-related workloads. NVIDIA's Tensor Cores and AMD's Matrix Cores can accelerate certain linear algebra operations, such as:

- Particle Mesh Ewald (PME) for long-range electrostatics: parts of the 3D-FFT and related matrix operations may be accelerated, depending on the library and precision; classical PME in most engines still runs mainly on standard FP32 units.

- Machine Learning Potentials (MLPs): Neural network inference for potentials (e.g., through libtorch/PyTorch-based interfaces in MD engines) maps naturally onto Tensor/Matrix Cores.

- Dimensionality Reduction & Analysis: Techniques like t-SNE or PCA on simulation trajectories.

Protocol 2: Enabling Tensor Core Acceleration in GROMACS (2024.x+)

- Build Requirements: Compile GROMACS with GPU support (e.g., `-DGMX_GPU=CUDA` for NVIDIA) and ensure the GPU FFT library (cuFFT for NVIDIA, or hipFFT for AMD) is linked. Some guides also cite `-DGMX_USE_TENSORCORE=ON` for NVIDIA; confirm that any such option exists for your GROMACS version (e.g., with `cmake -LAH`) before relying on it.

- Simulation Preparation: Prepare your system and run file (`.mdp`) as usual.

- Parameter Tuning: In your `.mdp` file, set the following key parameters: `cutoff-scheme = verlet`, `pbc = xyz`, `coulombtype = PME`, `pme-order = 4` (4th-order interpolation is typically optimal), and `fourier-spacing = 0.12` (may need adjustment for accuracy).

- Run Command: Use the standard `gmx mdrun` command. Whether the GPU-accelerated PME routines use Tensor Cores depends on the hardware, build, libraries, and problem size; check the log for notes on precision and GPU task assignment rather than assuming it.

- Validation: Compare the drift of the conserved (total) energy and key observables (e.g., RMSD) against a double-precision CPU reference run (GROMACS GPU builds run in mixed precision) to ensure numerical stability for your system.
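For the validation step, energy drift is commonly summarized as the least-squares slope of the conserved (total) energy against time, normalized per atom. A minimal Python sketch, assuming you have exported time (ps) and conserved-energy columns (e.g., with `gmx energy`) for both the GPU run and the reference; comparing the two slopes shows whether mixed precision introduces a meaningful drift.

```python
from typing import Sequence


def energy_drift(times_ps: Sequence[float], energies: Sequence[float], n_atoms: int) -> float:
    """Least-squares slope of conserved energy vs. time, per atom per ns.

    The result carries the energy unit of the input (e.g., kJ/mol/ns/atom).
    """
    n = len(times_ps)
    if n < 2 or n != len(energies):
        raise ValueError("need two or more matching time/energy samples")
    t_mean = sum(times_ps) / n
    e_mean = sum(energies) / n
    cov = sum((t - t_mean) * (e - e_mean) for t, e in zip(times_ps, energies))
    var = sum((t - t_mean) ** 2 for t in times_ps)
    slope_per_ps = cov / var
    return slope_per_ps * 1000.0 / n_atoms  # 1000 ps per ns


times = [0.0, 1.0, 2.0, 3.0, 4.0]
energies = [-1000.0 + 0.5 * t for t in times]  # synthetic: +0.5 energy units per ps
print(energy_drift(times, energies, n_atoms=100))
```

Using a regression slope rather than the difference between the first and last frames makes the estimate less sensitive to ordinary energy fluctuations.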

The Scientist's Toolkit: Key Research Reagent Solutions

Table: Essential Hardware & Software for GPU-Accelerated MD Research

| Item / Reagent Solution | Function in MD Research | Example/Note |
|---|---|---|
| NVIDIA H100 / AMD MI300X Node | Primary compute engine for large-scale production MD and ML-driven simulations. | Accessed via HPC clusters or cloud (AWS, Azure, GCP). |
| Workstation GPU (RTX Ada / Radeon PRO) | For local system preparation, method development, debugging, and mid-scale production. | RTX 6000 Ada (48GB) or Radeon PRO W7900 (48GB). |
| CUDA Toolkit / ROCm Stack | Core driver and API platform enabling MD software to run on NVIDIA/AMD GPUs, respectively. | Required for compiling or running GPU-accelerated codes. |
| AMBER (pmemd.cuda), NAMD, GROMACS | The MD simulation engines with optimized GPU kernels for force calculation, integration, and PME. | Must be compiled for specific GPU architecture. |
| High-Throughput Interconnect (InfiniBand) | Enables multi-GPU and multi-node simulations for scaling to very large systems. | Necessary for strong scaling in NAMD and GROMACS. |
| Mixed-Precision Optimized Kernels | Software routines that leverage Tensor/Matrix Cores for PME or ML potentials. | Built into latest versions of major MD packages. |
| System Preparation Suite (HTMD, CHARMM-GUI) | Prepares complex biological systems (membranes, solvation, ionization) for GPU simulation. | Creates input files compatible with GPU-accelerated engines. |
| Visualization & Analysis (VMD, PyMOL) | Post-simulation analysis of trajectories to derive scientific insight. | Often runs on CPU/GPU but relies on data from GPU simulations. |

Visualized Workflows

Title: GPU-Accelerated MD Simulation Workflow

Title: GPU Hardware Stack Impact on MD Performance

This document serves as an application note within a broader thesis on GPU-accelerated molecular dynamics (MD) simulations, focusing on the software ecosystems enabling high-performance computation in AMBER, NAMD, and GROMACS. The efficient execution of MD simulations for biomolecular systems—critical for drug discovery and basic research—is now fundamentally dependent on performant GPU backends. This note provides a comparative overview, detailed protocols, and resource toolkits for utilizing CUDA, HIP, OpenCL, and SYCL backends across these major codes.

Backend Ecosystem Comparison

The following table summarizes the current (as of late 2024) support and key characteristics of each GPU backend within AMBER (pmemd), NAMD, and GROMACS.

Table 1: GPU Backend Support in AMBER, NAMD, and GROMACS

| Backend | Primary Vendor/Standard | AMBER (pmemd) | NAMD | GROMACS | Key Notes & Performance Tier |
|---|---|---|---|---|---|
| CUDA | NVIDIA | Full Native Support (Tier 1) | Full Native Support (Tier 1) | Full Native Support (Tier 1) | Highest maturity & optimization on NVIDIA hardware. |
| HIP | AMD (Portable) | Experimental/Runtime (via HIPify) | Not Supported | Full Native Support (Tier 1 for AMD) | Primary path for AMD GPU acceleration in GROMACS. |
| OpenCL | Khronos Group | Deprecated (Removed in v22+) | Not Supported | Supported (Tier 2) | Portable but generally lower performance than CUDA/HIP. |
| SYCL | Khronos Group (Intel-led) | Not Supported | Not Supported | Full Native Support (Tier 1 for Intel) | Primary path for Intel GPU acceleration. CPU fallback. |

Performance Tier: Tier 1 indicates the most optimized, performant path for a given hardware vendor. Tier 2 indicates functional support but with potential performance trade-offs.

Experimental Protocols for Backend Deployment

Protocol 3.1: Benchmarking GPU Backend Performance in GROMACS

Objective: Compare simulation performance (ns/day) across CUDA, HIP, and SYCL backends on respective hardware using a standardized benchmark system.

Materials:

- Hardware: NVIDIA GPU (for CUDA), AMD GPU (for HIP), Intel GPU (for SYCL), or compatible system.

- Software: A separate GROMACS build for each backend under test (each build targets a single GPU backend).

- Benchmark System: the `adh_dodec` benchmark (from the publicly available GROMACS benchmark systems) or a relevant drug-target protein-ligand system (e.g., from the PDB).

Methodology:

- Build Configuration: Compile GROMACS from source using CMake.

- For CUDA: `-DGMX_GPU=CUDA -DCMAKE_CUDA_ARCHITECTURES=<arch>`

- For HIP: `-DGMX_GPU=HIP -DCMAKE_HIP_ARCHITECTURES=<arch>`

- For SYCL: `-DGMX_GPU=SYCL -DGMX_SYCL_TARGETS=<target>` (e.g., `intel_gpu`).

- Run Configuration: Use a standardized `.mdp` file (e.g., `benchmark.mdp`) with PME, constraints, and a defined cutoff.

- Execution: Run the simulation on a single GPU.

- Data Collection: Record the performance (ns/day) from the log file (`md.log` by default). Repeat three times and calculate the mean.

- Analysis: Compare means across backends/hardware, normalized to the system size (atoms).
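Collecting ns/day from replicate runs is easy to script. The sketch below assumes the `Performance:` summary line that GROMACS writes near the end of `md.log` (ns/day followed by hour/ns); the regular expression is an assumption about that layout, so check it against your version's logs.

```python
import re
import statistics

# Assumed layout of the md.log summary, e.g.:
#                  (ns/day)    (hour/ns)
# Performance:      139.965        0.171
_PERF_RE = re.compile(r"^\s*Performance:\s+([0-9.]+)\s+([0-9.]+)", re.MULTILINE)


def ns_per_day(log_text: str) -> float:
    """Return the ns/day value from the last 'Performance:' line of an md.log."""
    matches = _PERF_RE.findall(log_text)
    if not matches:
        raise ValueError("no 'Performance:' line found; did the run finish?")
    return float(matches[-1][0])


def summarize(values: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation across replicate runs (needs >= 2 values)."""
    return statistics.mean(values), statistics.stdev(values)


# Usage with a hypothetical layout of one md.log per replicate directory:
# from pathlib import Path
# rates = [ns_per_day(Path(f"rep{i}/md.log").read_text()) for i in (1, 2, 3)]
# print(summarize(rates))
```

Reporting the standard deviation alongside the mean makes it easier to tell genuine backend differences from run-to-run noise.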

Protocol 3.2: Configuring and Running AMBER (pmemd) on NVIDIA GPUs

Objective: Execute a production-level MD simulation using the optimized CUDA backend in AMBER's pmemd.

Materials:

- Pre-equilibrated system coordinates (`inpcrd`) and parameters (`prmtop`).

- Input file (`md.in`) specifying dynamics parameters.

- AMBER installation with `pmemd.cuda`.

Methodology:

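- Input Preparation: Ensure the `md.in` file defines a periodic PME simulation (e.g., via `ntb` and `cut`) with the desired long-range corrections; `pmemd.cuda` evaluates PME on the GPU without extra flags.

- Execution: Launch `pmemd.cuda`, selecting the GPU with the `CUDA_VISIBLE_DEVICES` environment variable.

- Monitoring: Monitor the output (`md.out`) for performance metrics and any errors. Validate energy conservation.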
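As a starting point for `md.in`, the following Python sketch writes an AMBER `&cntrl` namelist for an NPT production restart matching Protocol 1 (500 ns, 2 fs steps, frames every 100 ps). The specific values (9 Å cutoff, Langevin thermostat with `gamma_ln = 2.0`, Monte Carlo barostat) are common choices assumed here, not settings prescribed by this guide; verify every variable against the AMBER manual for your version.

```python
def amber_md_in(length_ns: float, dt_ps: float = 0.002, frame_ps: float = 100.0,
                temp_k: float = 300.0, cut_a: float = 9.0) -> str:
    """Build an &cntrl namelist for an NPT restart run (a sketch, not a validated protocol)."""
    nstlim = round(length_ns * 1000.0 / dt_ps)  # total MD steps
    ntwx = round(frame_ps / dt_ps)              # steps between trajectory frames
    settings = {
        "imin": 0,                     # run dynamics, not minimization
        "irest": 1, "ntx": 5,          # restart: read coordinates and velocities
        "nstlim": nstlim, "dt": dt_ps,
        "ntc": 2, "ntf": 2,            # SHAKE on bonds involving hydrogen
        "cut": cut_a,                  # direct-space cutoff (Å); PME handles the rest
        "ntb": 2, "ntp": 1,            # constant pressure, isotropic scaling
        "barostat": 2, "pres0": 1.0,   # Monte Carlo barostat at 1 bar
        "ntt": 3, "gamma_ln": 2.0,     # Langevin thermostat
        "temp0": temp_k, "ig": -1,     # target temperature, random seed
        "ntpr": ntwx, "ntwx": ntwx, "ntwr": ntwx,
    }
    body = ",\n".join(f"  {key}={value}" for key, value in settings.items())
    return f"Production MD\n &cntrl\n{body},\n /\n"


print(amber_md_in(500))
```

A typical launch would then be `CUDA_VISIBLE_DEVICES=0 pmemd.cuda -O -i md.in -p complex.prmtop -c equil.rst7 -o md.out -r md.rst7 -x md.nc`, where the file names are hypothetical.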

Protocol 3.3: Deploying NAMD on Multi-GPU NVIDIA Nodes

Objective: Leverage CUDA and NAMD's Charm++ runtime for scalable multi-GPU simulation.

Materials:

- NAMD binary compiled with CUDA and Charm++.

- PSF, PDB, and parameter files for the system.

- NAMD configuration file.

Methodology:

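- Configuration File: Enable `PME` for explicit-solvent systems (or `GBIS` for implicit-solvent Generalized Born runs; the two are not combined) and set `stepspercycle` for load balancing.

- Execution: Use `charmrun` or the MPI-based launcher to distribute work across GPUs, selecting devices with `+devices`.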

- Validation: Check the log file for correct GPU detection and load balancing statistics.

Visualization of Backend Selection Logic

Title: GPU Backend Selection Logic for AMBER, NAMD, and GROMACS

Title: Generalized Workflow for GPU Backend Performance Benchmarking

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Essential Computational Reagents for GPU-Accelerated MD

| Item | Function & Purpose | Example/Note |
|---|---|---|
| MD Engine (Binary) | The core simulation software executable, compiled for a specific backend. | pmemd.cuda, namd3, gmx_mpi (CUDA/HIP/SYCL). |
| System Topology File | Defines the molecular system: atom connectivity, parameters, and force field. | AMBER .prmtop, NAMD .psf, GROMACS .top. |
| Coordinate/Structure File | Contains the initial 3D atomic coordinates. | .inpcrd, .pdb, .gro. |
| Force Field Parameter Set | Mathematical parameters defining bonded and non-bonded interactions. | ff19SB, CHARMM36, OPLS-AA/M. |
| MD Input Configuration File | Specifies simulation protocol: integrator, temperature, pressure, output frequency. | AMBER .in, NAMD .conf/.namd, GROMACS .mdp. |
| GPU Driver & Runtime | Low-level software enabling communication between the OS and specific GPU hardware. | NVIDIA Driver+CUDA Toolkit, AMD ROCm, Intel oneAPI. |
| Benchmark System | A standardized molecular system for consistent performance comparison across hardware/software. | GROMACS adh_dodec, NAMD STMV, or a custom protein-ligand complex. |
| Performance Profiling Tool | Software to analyze GPU utilization, kernel performance, and identify bottlenecks. | NVIDIA nvprof/Nsight, AMD ROCprof, Intel VTune. |
| Visualization & Analysis Suite | Software for inspecting trajectories, calculating properties, and preparing figures. | VMD, PyMOL, MDTraj, CPPTRAJ. |

The evolution of Molecular Dynamics (MD) simulation software—AMBER, NAMD, and GROMACS—is fundamentally intertwined with the advent of General-Purpose GPU (GPGPU) computing. This shift from CPU to GPU parallelism addresses the core computational bottlenecks of classical MD, enabling biologically relevant timescales and system sizes. This application note details the GPU acceleration of three critical algorithmic domains within the broader thesis that GPUs have catalyzed a paradigm shift in computational biophysics and structure-based drug design.

GPU-Accelerated Particle Mesh Ewald (PME) for Long-Range Electrostatics

The accurate treatment of long-range electrostatic interactions via the Ewald summation is computationally demanding. The Particle Mesh Ewald (PME) method splits the calculation into short-range (real space) and long-range (reciprocal space) components.

- GPU Acceleration Strategy: The real-space part, a pairwise calculation with a cutoff, is naturally parallelized on GPU cores. The reciprocal space part involves a 3D Fast Fourier Transform (FFT), which is offloaded to highly optimized GPU-accelerated FFT libraries (e.g., cuFFT).

- Implementation in Major Suites:

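- AMBER/NAMD: AMBER's `pmemd.cuda` runs essentially the entire time step, including PME and integration, on the GPU, with the CPU handling I/O. NAMD has traditionally used a hybrid scheme in which nonbonded and PME work runs on the GPU while other tasks remain on the CPU; NAMD 3.0 adds a GPU-resident mode.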

- GROMACS: Uses a more fully GPU-offloaded PME approach, where both the PP (particle-particle) and PME tasks can run on the same or separate GPUs, minimizing CPU-GPU communication.
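The PME split rests on the identity 1/r = erfc(βr)/r + erf(βr)/r: the short-ranged erfc term is summed directly within the cutoff, and the smooth erf term is evaluated on the mesh via FFTs. The splitting parameter β is typically chosen so that erfc(β·r_c) at the cutoff r_c equals a small tolerance, similar in spirit to GROMACS's `ewald-rtol` setting. A Python sketch that solves for β by bisection, assuming a 1.0 nm cutoff and a tolerance of 1e-5:

```python
import math


def ewald_coefficient(cutoff_nm: float, rtol: float = 1e-5) -> float:
    """Splitting parameter beta (1/nm) such that erfc(beta * cutoff) == rtol.

    The direct-space PME term decays as erfc(beta * r) / r, so this choice makes
    the screened interaction at the cutoff a fraction `rtol` of bare Coulomb;
    the remainder is handled in reciprocal space.
    """
    lo, hi = 0.0, 10.0 / cutoff_nm  # erfc(10) is ~2e-45, so the root lies inside
    for _ in range(200):            # bisection: erfc decreases monotonically
        mid = 0.5 * (lo + hi)
        if math.erfc(mid * cutoff_nm) > rtol:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


beta = ewald_coefficient(1.0)
print(f"beta = {beta:.3f} nm^-1")
```

A tighter tolerance raises β, shifting more of the work from the real-space pair kernel to the FFT-based reciprocal-space part.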

Table 1: Performance Metrics of GPU-Accelerated PME

| Software (Version) | System Size (Atoms) | Hardware (CPU vs. GPU) | Performance (ns/day) | Speed-up Factor | Reference Year |
|---|---|---|---|---|---|
| GROMACS 2023.3 | ~23,500 (DHFR) | 1x AMD EPYC 7763 vs. 1x NVIDIA A100 | 52 vs. 1200 | ~23x | 2023 |
| AMBER 22 | ~23,500 (JAC, i.e., DHFR) | 2x Intel Xeon 6248 vs. 1x NVIDIA V100 | 18 vs. 220 | ~12x | 2022 |
| NAMD 3.0b | ~1,070,000 (STMV) | 1x Intel Xeon 6148 vs. 1x NVIDIA RTX 4090 | 5.2 vs. 98 | ~19x | 2024 |

Experimental Protocol: Benchmarking PME Performance

- System Preparation: Solvate a standard benchmark protein (e.g., DHFR in TIP3P water) in a cubic box with ~1.0-1.2 nm padding. Add ions to neutralize.

- Parameterization: Use AMBER/CHARMM force fields as appropriate for the software.

- Simulation Setup: Minimize, heat (0→300K over 100 ps), and equilibrate (1 ns NPT) the system.

- Benchmark Run: Conduct a 10-50 ns production run in NPT ensemble (300K, 1 bar).

- Hardware Configuration: Use identical CPU-only and CPU+GPU nodes. For GPU runs, ensure PME is explicitly assigned to the GPU (e.g., `gmx mdrun -pme gpu` in GROMACS).

- Data Collection: Record the simulation time and calculate performance (ns/day). Use the engine's built-in performance reporting (e.g., the summary at the end of GROMACS `md.log`; `gmx mdrun -v` also prints progress to the terminal).
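When an engine's summary is unavailable, ns/day can be computed directly from the step count, time step, and wall-clock time. This sketch assumes the wall time covers only the timed MD steps; for short benchmarks, exclude start-up costs (GROMACS, for example, can reset its timers mid-run with `gmx mdrun -resethway`).

```python
SECONDS_PER_DAY = 86_400.0


def ns_per_day_from_run(n_steps: int, dt_fs: float, wall_seconds: float) -> float:
    """Throughput in ns/day from steps completed, time step (fs), and wall time (s)."""
    simulated_ns = n_steps * dt_fs * 1e-6  # 1 fs = 1e-6 ns
    return simulated_ns * SECONDS_PER_DAY / wall_seconds


print(f"{ns_per_day_from_run(50_000, 2.0, 60.0):.1f} ns/day")
```

For example, 50,000 steps of 2 fs (0.1 ns) completed in 60 s corresponds to 144 ns/day.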

Diagram Title: GPU-Accelerated PME Algorithm Workflow

GPU Parallelization of Bonded and Non-Bonded Forces

The calculation of forces constitutes >90% of MD computational load. GPUs accelerate both bonded (local) and non-bonded (pairwise) terms.

- Bonded Forces (Bonds, Angles, Dihedrals): These involve small, fixed lists of atoms. GPU acceleration uses fine-grained parallelism, assigning each bond/angle term to a separate GPU thread. Memory access patterns are optimized for coalesced reads.

- Non-Bonded Forces (Lennard-Jones, Short-Range Electrostatics): This is an N-body problem. GPUs use:

- Neighbor Searching: Regular updating of particle neighbor lists using cell-list or Verlet list algorithms on the GPU.

- Kernel Computation: Each GPU thread block processes a cluster of atoms, calculating interactions with neighbors within a cutoff. Tiling and masking strategies avoid branch divergence and maximize memory throughput.
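The cell-list idea behind GPU neighbor searching can be illustrated on the CPU. This Python sketch bins atoms of a cubic periodic box into cells at least one cutoff wide, so every interacting pair lies in the same or an adjacent cell, and then checks the result against an O(N²) brute-force search. It is a teaching sketch of the algorithm, not how GPU engines lay out their kernels (GROMACS, for example, uses cluster-based pair lists).

```python
import itertools
import random

Vec = tuple[float, float, float]


def min_image_dist2(a: Vec, b: Vec, box: float) -> float:
    """Squared distance between two points in a cubic periodic box (minimum image)."""
    d2 = 0.0
    for x, y in zip(a, b):
        d = x - y
        d -= box * round(d / box)  # wrap the separation into [-box/2, box/2]
        d2 += d * d
    return d2


def brute_force_pairs(coords: list[Vec], box: float, cutoff: float) -> set[tuple[int, int]]:
    """O(N^2) reference: all pairs i < j within the cutoff."""
    c2 = cutoff * cutoff
    n = len(coords)
    return {(i, j) for i in range(n) for j in range(i + 1, n)
            if min_image_dist2(coords[i], coords[j], box) <= c2}


def cell_list_pairs(coords: list[Vec], box: float, cutoff: float) -> set[tuple[int, int]]:
    """Cell-list search: bin atoms, then scan only each cell and its 26 neighbors."""
    n_cells = max(1, int(box // cutoff))  # cells per edge, each at least one cutoff wide
    cell_size = box / n_cells
    cells: dict[tuple[int, ...], list[int]] = {}
    for idx, pos in enumerate(coords):
        key = tuple(int((x % box) // cell_size) % n_cells for x in pos)
        cells.setdefault(key, []).append(idx)

    c2 = cutoff * cutoff
    pairs: set[tuple[int, int]] = set()
    for (cx, cy, cz), members in cells.items():
        for dx, dy, dz in itertools.product((-1, 0, 1), repeat=3):
            neighbor = ((cx + dx) % n_cells, (cy + dy) % n_cells, (cz + dz) % n_cells)
            for i in members:
                for j in cells.get(neighbor, ()):
                    # the set removes duplicates when small grids revisit a cell
                    if i < j and min_image_dist2(coords[i], coords[j], box) <= c2:
                        pairs.add((i, j))
    return pairs


random.seed(0)
box, cutoff = 5.0, 1.0  # nm; the cutoff must not exceed box / 2 for minimum image
coords = [tuple(random.uniform(0.0, box) for _ in range(3)) for _ in range(300)]
assert cell_list_pairs(coords, box, cutoff) == brute_force_pairs(coords, box, cutoff)
print(len(cell_list_pairs(coords, box, cutoff)), "pairs within cutoff")
```

Because each atom only examines 27 cells, the work grows linearly with atom count at fixed density, which is what makes frequent neighbor-list updates affordable.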

Table 2: GPU Kernel Performance for Force Calculations

| Force Type | Parallelization Strategy | Typical GPU Utilization | Bottleneck | Primary Speed-up vs. CPU |
|---|---|---|---|---|
| Non-Bonded (Short-Range) | Verlet list, 1 thread per atom pair | Very High | Memory bandwidth | 30-50x |
| Bonded | 1 thread per bond/angle term | High | Instruction throughput | 10-20x |
| PME (FFT) | Batched 3D FFT libraries | High | GPU shared memory/registers | 15-30x |

Experimental Protocol: Profiling Force Calculation Kernels

- Tool Selection: Use NVIDIA Nsight Systems/Compute or AMD ROCprof for hardware-level profiling.

- Run Simulation: Execute a short (~1000 step) simulation of a benchmark system with profiling enabled (e.g., `nsys profile gmx_mpi mdrun`).

- Kernel Analysis: Identify the most time-consuming CUDA/HIP kernels (typically the nonbonded and bonded force kernels; exact kernel names vary by engine and version).

- Metric Collection: Note kernel occupancy, achieved memory bandwidth (GB/s), and warp execution efficiency.

- Comparison: Run an equivalent CPU simulation and profile it using `perf` or Intel VTune to compare core utilization and vectorization efficiency.

Enhanced Sampling Methods Unlocked by GPU Performance

GPU acceleration makes computationally intensive enhanced sampling methods tractable for routine use.

- Adaptive Sampling & Markov State Models (MSMs): Multiple short, independent GPU simulations can be launched in parallel to rapidly explore conformational space. Results are integrated into an MSM.

- Alchemical Free Energy Perturbation (FEP): GPU acceleration allows simultaneous or rapid sequential calculation of numerous λ-windows for absolute and relative binding free energy calculations, a cornerstone of computer-aided drug design (CADD).

Table 3: Enhanced Sampling Protocols Accelerated by GPUs

| Method | Key GPU-Accelerated Component | Application in Drug Development | Typical Speed-up Enabler |
|---|---|---|---|
| Metadynamics | Calculation of bias potential on collective variables | Protein-ligand binding/unbinding | 10-20x (longer hills) |
| Umbrella Sampling | Parallel execution of multiple simulation windows | Potential of Mean Force (PMF) for translocation | 100x+ (parallel windows) |
| Alchemical FEP | Concurrent calculation of all λ-windows on multiple GPUs | High-throughput binding affinity ranking | 50-100x (vs. single CPU) |

Experimental Protocol: GPU-Accelerated Alchemical FEP

- System Setup: Prepare protein-ligand complex and ligand-only in solvent for a "dual topology" approach.

- λ-Windows: Define 12-24 λ-states for vanishing/appearing of electrostatic and Lennard-Jones interactions.

- Simulation Engine: Use GPU-accelerated FEP-enabled engines (AMBER's `pmemd.cuda`, NAMD, GROMACS with `free-energy` support).

- Parallel Execution: Launch all λ-windows simultaneously on a multi-GPU node or cluster, using ensemble-directed runners (e.g., `gmx mdrun -multidir`).

- Data Analysis: Use MBAR or TI methods (e.g., `alchemlyb`, ParseFEP) on collected energy time series to compute ΔG.
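The λ-window and TI steps above can be sketched as follows. The schedule turns off electrostatics first and Lennard-Jones second, a common ordering that avoids exposing bare charges; the window counts, helper names, and the 0 = coupled convention are assumptions for illustration, and the printed lists only imitate the style of GROMACS's `coul-lambdas`/`vdw-lambdas` settings. The TI part integrates per-window averages of dU/dλ with the trapezoidal rule, which should be applied separately to each stage.

```python
def decoupling_schedule(n_coul: int, n_vdw: int) -> tuple[list[float], list[float]]:
    """Two-stage λ path: remove electrostatics first, then Lennard-Jones.

    Returns parallel lists (coul, vdw) with one entry per λ state; in this
    sketch 0.0 means the interaction is fully on and 1.0 means fully off.
    """
    coul = [i / n_coul for i in range(n_coul + 1)] + [1.0] * n_vdw
    vdw = [0.0] * (n_coul + 1) + [i / n_vdw for i in range(1, n_vdw + 1)]
    return coul, vdw


def ti_trapezoid(lambdas: list[float], mean_dudl: list[float]) -> float:
    """Thermodynamic integration of <dU/dλ> over λ with the trapezoidal rule."""
    return sum(
        0.5 * (mean_dudl[i] + mean_dudl[i + 1]) * (lambdas[i + 1] - lambdas[i])
        for i in range(len(lambdas) - 1)
    )


coul, vdw = decoupling_schedule(n_coul=4, n_vdw=8)  # 13 λ states in total
print("coul-lambdas =", " ".join(f"{x:.3f}" for x in coul))
print("vdw-lambdas  =", " ".join(f"{x:.3f}" for x in vdw))
```

Denser spacing is usually placed where dU/dλ changes fastest (often near the end of the Lennard-Jones stage), which is why real schedules are rarely uniform.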

Diagram Title: GPU-Powered Enhanced Sampling Protocol

The Scientist's Toolkit: Key Research Reagent Solutions

Table 4: Essential Components for GPU-Accelerated MD Research

| Item/Reagent | Function/Role in GPU-Accelerated MD | Example/Note |
|---|---|---|
| NVIDIA A100/H100 or AMD MI250X GPU | Primary accelerator for FP64/FP32/FP16 MD calculations. Tensor Cores can be used for ML-enhanced sampling. | High memory bandwidth (>1.5TB/s) is critical. |
| GPU-Optimized MD Software | Provides the implemented algorithms and kernels. | GROMACS, AMBER (pmemd.cuda), NAMD (CUDA), OpenMM. |
| CUDA / ROCm Toolkit | Essential libraries (cuBLAS, cuFFT, hipFFT) and compilers for software execution and development. | Version must match driver and software. |
| Standard Benchmark Systems | For validation and performance comparison. | JAC (AMBER), DHFR (GROMACS), STMV (NAMD). |
| Enhanced Sampling Plugins | Implements advanced methods on GPU frameworks. | PLUMED (interface with GROMACS/AMBER), FE-Toolkit. |
| High-Speed Parallel Filesystem | Handles I/O from hundreds of parallel simulations without bottleneck. | Lustre, BeeGFS, GPFS. |
| Free Energy Analysis Suite | Processes output from GPU-accelerated FEP runs. | Alchemlyb, PyAutoFEP, Cpptraj/PTRAJ. |
| Container Technology (Singularity/Apptainer) | Ensures reproducible software environments across HPC centers. | Pre-built containers available from NVIDIA NGC, BioContainers. |

Application Notes on Emerging Computational Paradigms

The integration of Multi-GPU systems, Cloud HPC, and AI/ML is fundamentally reshaping the landscape of GPU-accelerated molecular dynamics (MD) simulations, enabling unprecedented scale and insight in biomolecular research.

Table 1: Quantitative Comparison of Modern MD Simulation Platforms

| Platform / Aspect | Traditional On-Premise Cluster | Cloud HPC (e.g., AWS ParallelCluster, Azure CycleCloud) | AI/ML-Enhanced Workflow (e.g., DiffDock, AlphaFold2+MD) |
|---|---|---|---|
| Typical Setup Time | Weeks to Months | Minutes to Hours | Variable (Model training can add days/weeks) |
| Cost Model | High CapEx, moderate OpEx | Pure OpEx (Pay-per-use) | OpEx + potential SaaS/AI service fees |
| Scalability Limit | Fixed hardware capacity | Elastic scaling, bounded by account quotas and instance availability | Elastic compute for training; inference can be lightweight |
| Key Advantage for MD | Full control, data locality | Access to latest hardware (e.g., A100/H100), burst capability | Predictive acceleration, enhanced sampling, latent space exploration |
| Typical Use Case in AMBER/NAMD/GROMACS | Long-term, stable production runs | Bursty, large-scale parameter sweeps or ensemble simulations | Pre-screening binding poses, guiding simulations with learned potentials, analyzing trajectories |

Table 2: Performance Scaling of Multi-GPU MD Codes (Representative Data, 2023-2024)

| Software (Test System) | GPU Configuration (NVIDIA) | Simulation Performance (ns/day) | Scaling Efficiency vs. Single GPU |
|---|---|---|---|
| GROMACS (STMV, 1M atoms) | 1x A100 | ~250 | 100% (Baseline) |
| GROMACS (STMV, 1M atoms) | 4x A100 (Node) | ~920 | ~92% |
| NAMD (ApoA1, 92K atoms) | 1x V100 | ~150 | 100% (Baseline) |
| NAMD (ApoA1, 92K atoms) | 8x V100 (Multi-Node) | ~1100 | ~92% |
| AMBER (pmemd, DHFR) | 1x H100 | ~550 | 100% (Baseline) |
| AMBER (pmemd, DHFR) | 2x H100 | ~1070 | ~97% |
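The efficiency column uses the usual strong-scaling definition E = P_N / (N × P_1), where P is throughput in ns/day and N is the GPU count. A minimal sketch that recomputes the column from the table's own (representative) values, not from new measurements:

```python
def scaling_efficiency(perf_n_gpus: float, perf_one_gpu: float, n_gpus: int) -> float:
    """Strong-scaling efficiency E = P_N / (N * P_1), with P in ns/day."""
    if n_gpus < 1 or perf_one_gpu <= 0:
        raise ValueError("need n_gpus >= 1 and a positive single-GPU baseline")
    return perf_n_gpus / (n_gpus * perf_one_gpu)


# (label, multi-GPU ns/day, single-GPU ns/day, GPU count) taken from the table
rows = [
    ("GROMACS STMV, 4x A100", 920, 250, 4),
    ("NAMD ApoA1, 8x V100", 1100, 150, 8),
    ("AMBER DHFR, 2x H100", 1070, 550, 2),
]

for label, perf_n, perf_1, n in rows:
    print(f"{label}: {scaling_efficiency(perf_n, perf_1, n):.0%}")
```

Because the baseline is a single GPU of the same model, the metric isolates communication and load-balancing overhead; it says nothing about cost per ns, which can favor running independent simulations on each GPU instead.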

Experimental Protocols

Protocol 1: Deploying a Cloud HPC Cluster for Burst Ensemble MD Simulations

Objective: Rapidly provision a cloud-based HPC cluster to run 100+ independent GROMACS simulations for ligand binding free energy calculations.

Methodology:

- Cluster Definition: Use a cloud CLI (e.g., AWS `pcluster`). Define a head node (c6i.xlarge) and a compute fleet (20+ instances of g5.xlarge, each with 1x A10G GPU).

- Image Configuration: Start from a pre-configured HPC AMI with GROMACS/AMBER/NAMD, MPI, and GPU drivers. Use a bootstrap script to install specific research codes.

- Parallel Filesystem: Mount a high-throughput, shared parallel filesystem (e.g., FSx for Lustre on AWS, BeeGFS on Azure) to all nodes for fast I/O of trajectory data.

- Job Submission: Use a job scheduler (Slurm). Prepare a job array script where each task runs a single simulation with a different ligand conformation or mutant protein structure.

- Data Post-Processing: Upon completion, auto-terminate compute nodes. Use cloud-based object storage (S3, Blob) for long-term, cost-effective archiving of raw trajectories.
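The job-array step above can be sketched as a small Python driver that each array task runs. It assumes a hypothetical layout in which every ligand conformation or mutant has its own directory on the shared filesystem containing a prepared `md.tpr`; the `ENSEMBLE_ROOT` variable and `/fsx/ensemble` path are illustrative. The array index comes from Slurm's `SLURM_ARRAY_TASK_ID` environment variable:

```python
from __future__ import annotations

import os
import shlex
from pathlib import Path


def select_run_dir(root: Path, task_id: int) -> Path:
    """Map an array index onto the sorted list of per-system directories."""
    run_dirs = sorted(p for p in root.iterdir() if p.is_dir())
    if not 0 <= task_id < len(run_dirs):
        raise IndexError(f"task {task_id} outside 0..{len(run_dirs) - 1}")
    return run_dirs[task_id]


def build_mdrun_command(run_dir: Path) -> list[str]:
    """GROMACS command for one ensemble member on a single GPU."""
    return [
        "gmx", "mdrun",
        "-deffnm", str(run_dir / "md"),  # reads md.tpr, writes md.* outputs
        "-nb", "gpu", "-pme", "gpu", "-bonded", "gpu", "-update", "gpu",
    ]


def main() -> None:
    """Entry point for one array task (call from the sbatch script)."""
    root = Path(os.environ.get("ENSEMBLE_ROOT", "/fsx/ensemble"))  # hypothetical
    task_id = int(os.environ["SLURM_ARRAY_TASK_ID"])
    cmd = build_mdrun_command(select_run_dir(root, task_id))
    print(shlex.join(cmd), flush=True)
    os.execvp(cmd[0], cmd)  # replace this Python process with mdrun
```

Sorting the directory names keeps the index-to-system mapping stable across tasks, so an array declared as `--array=0-99` covers exactly 100 prepared systems.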

Protocol 2: Integrating AI/ML-Based Pose Prediction with Traditional MD Refinement

Objective: Use a deep learning model to generate initial protein-ligand poses and refine them with GPU-accelerated MD.

Methodology:

- AI Pose Generation:

  - Input: Protein PDB file and ligand SMILES string.

  - Tool: Utilize an open-source model like DiffDock or a commercial API.

  - Process: Generate 50-100 top-ranked predicted binding poses. Write out the ligand poses (DiffDock produces SDF files) for combination with the protein structure.

- Automated Setup Pipeline:

  - Script (Python) to convert each pose to simulation-ready format (e.g., AMBER `tleap` or GROMACS `pdb2gmx`).

  - Parameterize the ligand using `antechamber` (GAFF) or CGenFF.

  - Solvate and ionize each system in an identical water box.

- High-Throughput Refinement:

  - Launch an ensemble of short (5-10 ns) GPU-accelerated MD simulations (one per pose) using `pmemd.cuda` or `gmx mdrun`.

  - Run on multi-GPU cloud instances for parallel execution.

- Analysis with ML-Augmented Metrics:

  - Calculate traditional MM/GBSA binding energies.

  - Additionally, compute learned interaction fingerprints or use a trained scoring model to rank final, equilibrated poses.
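For the MM/GBSA part of the ranking step, one simple option is to average the per-frame binding energies of each pose and sort by the mean, keeping a standard error alongside. The sketch assumes the per-frame energies (kcal/mol) have already been parsed from the MM/GBSA output into a dictionary; the pose names and values are hypothetical:

```python
from __future__ import annotations

import math
import statistics


def rank_poses(energies: dict[str, list[float]]) -> list[tuple[str, float, float]]:
    """Return (pose, mean, standard error), most favorable (lowest) mean first."""
    ranked = []
    for pose, values in energies.items():
        mean = statistics.fmean(values)
        # Naive standard error: MD frames are correlated, so this underestimates
        # the true uncertainty unless frames are subsampled first.
        sem = (statistics.stdev(values) / math.sqrt(len(values))
               if len(values) > 1 else float("nan"))
        ranked.append((pose, mean, sem))
    return sorted(ranked, key=lambda item: item[1])


example = {
    "pose_01": [-42.1, -40.8, -43.0, -41.5],
    "pose_02": [-35.2, -36.9, -34.8, -35.5],
    "pose_03": [-44.0, -45.2, -43.1, -44.6],
}
for pose, mean, sem in rank_poses(example):
    print(f"{pose}: {mean:.1f} +/- {sem:.1f} kcal/mol")
```

Poses whose means differ by less than a couple of standard errors are better treated as ties and passed on to the learned scoring model.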

Visualization: Workflow and Architecture Diagrams

Title: Cloud HPC & AI/ML Integrated Drug Discovery Workflow

Title: The AI/ML-MD Iterative Research Cycle

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Digital Research "Reagents" for Modern GPU-Accelerated MD

| Item / Solution | Function in Research | Example/Provider |
|---|---|---|
| Cloud HPC Provisioning Tool | Automates deployment and management of scalable compute clusters for burst MD runs. | AWS ParallelCluster, Azure CycleCloud, Google Cloud HPC Toolkit |
| Containerized MD Software | Ensures reproducible, dependency-free execution of simulation software across environments. | GROMACS/AMBER/NAMD Docker/Singularity containers from BioContainers or developers |
| AI/ML Model for Pose Prediction | Provides rapid, learned initial guesses for protein-ligand binding poses, complementing or replacing exhaustive docking. | DiffDock, EquiBind, or commercial tools (Schrödinger, OpenEye) |
| Learned Force Fields | Augments or replaces classical force fields to improve accuracy for specific systems (e.g., proteins, materials). | ANI, ACE (atomic cluster expansion), MACE |
| High-Throughput MD Setup Pipeline | Automates the conversion of diverse molecular inputs into standardized, simulation-ready systems. | HTMD, ParmEd, pdb4amber, custom Python scripts using MDAnalysis |
| Cloud-Optimized Storage | Provides cost-effective, durable, and performant storage for massive trajectory datasets. | Object storage (AWS S3, Google Cloud Storage) + parallel filesystem for active work |
| ML-Enhanced Trajectory Analysis | Extracts complex patterns and reduces the dimensionality of simulation data beyond traditional metrics. | Time-lagged independent component analysis (TICA), time-lagged autoencoders, and Markov state models (MSMs) via deeptime; MDTraj |

Hands-On Implementation: Setting Up and Running GPU-Accelerated Simulations

Within the broader thesis on leveraging GPU acceleration for molecular dynamics (MD) simulations, this protocol details the compilation and installation of three principal MD packages: AMBER, NAMD, and GROMACS. The shift from CPU to GPU-accelerated computations has dramatically enhanced the throughput of biomolecular simulations, enabling longer timescales and more exhaustive sampling—a critical advancement for drug discovery and structural biology. This document provides the essential methodologies to establish a reproducible high-performance computing environment for contemporary research.

System Prerequisites & Environmental Setup

A consistent foundational environment is crucial for successful compilation. The following packages and drivers are mandatory.

Research Reagent Solutions (Essential Software Stack)

| Item | Function/Explanation |
|---|---|
| NVIDIA GPU (Compute Capability ≥ 3.5 with CUDA 11.x; ≥ 5.0 with CUDA 12.x) | Physical hardware providing parallel processing cores for CUDA. Each MD package also sets its own minimum. |
| NVIDIA Driver | System-level software enabling the OS to communicate with the GPU hardware. |
| NVIDIA CUDA Toolkit (v11.x/12.x) | A development environment for creating high-performance GPU-accelerated applications. Provides compilers, libraries, and APIs. |
| GCC / Intel Compiler Suite | Compiler collection for building C, C++, and Fortran source code. Version compatibility is critical. |
| OpenMPI / MPICH | Implementations of the Message Passing Interface (MPI) standard for parallel, distributed computing across multiple nodes/CPUs. |
| CMake (≥ 3.16; recent GROMACS releases require newer versions) | Cross-platform build system generator used to control the software compilation process. |
| FFTW | Library for computing the discrete Fourier transform, essential for long-range electrostatic calculations in PME. |
| Flex & Bison | Parser generators required for building AmberTools. |
| Python (≥ 3.8) | Required for AMBER's build and simulation setup tools. |

Quantitative Comparison of Package Requirements

Table 1: Core Build Requirements and Characteristics

| Package | Primary Language | Parallel Paradigm | GPU Offload Model | Key Dependencies |
|---|---|---|---|---|
| AMBER | Fortran/C++ | MPI (+OpenMP) | CUDA, OpenMP (limited) | CUDA, FFTW, MPI, BLAS/LAPACK, Python |
| NAMD | C++ | Charm++ | CUDA | CUDA, Charm++, FFTW, Tcl |
| GROMACS | C/C++ | MPI + OpenMP | CUDA, SYCL, OpenCL (native HIP upcoming) | CUDA, MPI, FFTW, OpenMP |

Protocol: Foundational Environment Setup

- Install the NVIDIA Driver and CUDA Toolkit.

- Set Environment Variables: Add the CUDA `bin` directory to `PATH` and its `lib64` directory to `LD_LIBRARY_PATH` in `~/.bashrc`.

- Install Compilers and Libraries.
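Before compiling, a quick check that the driver and toolkit are visible can save a failed build. This sketch uses only the Python standard library; it assumes the conventional executable names `nvidia-smi` (installed with the driver) and `nvcc` (installed with the toolkit), and the commonly used but not universal `CUDA_HOME` variable:

```python
import os
import shutil
import subprocess


def check_gpu_environment() -> dict:
    """Report whether the NVIDIA driver and CUDA toolkit are discoverable."""
    nvcc = shutil.which("nvcc")
    report = {
        "nvidia-smi": shutil.which("nvidia-smi"),  # ships with the driver
        "nvcc": nvcc,                              # CUDA compiler from the toolkit
        "CUDA_HOME": os.environ.get("CUDA_HOME"),  # a convention, not required by CUDA
        "nvcc_release": None,
    }
    if nvcc:
        result = subprocess.run([nvcc, "--version"], capture_output=True,
                                text=True, check=False)
        # Keep the line naming the toolkit release, e.g. "... release 12.2, ...".
        report["nvcc_release"] = next(
            (line for line in result.stdout.splitlines() if "release" in line), None
        )
    return report


if __name__ == "__main__":
    for key, value in check_gpu_environment().items():
        print(f"{key:>13}: {value or 'NOT FOUND'}")
```

If `nvcc` is found but `nvidia-smi` is not, the toolkit is installed without a working driver (or on a login node without GPUs), which is common on HPC clusters and fine for compiling but not for testing.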

Installation & Compilation Protocols

Protocol: Building AMBER with GPU Support

Methodology: Legacy AMBER releases use a `configure`/`make` system; Amber 20 and later build with CMake (typically via the bundled `run_cmake` script). The GPU engine, `pmemd.cuda`, is built as a separate executable when the CUDA option is enabled.

- Acquire Source Code: Download AmberTools and the AMBER MD engine from the official portal.

- Run the Configure Step: Enable the CUDA (PME) build option (`-DCUDA=TRUE` in the CMake build).

- Compile the Installation.

- Validation: Test the installation with bundled benchmarks (e.g., `pmemd.cuda -O -i ...`).

Protocol: Building NAMD with GPU Support

Methodology: NAMD is built atop the Charm++ parallel runtime system, which must be configured first.

- Build Charm++.

- Configure and Build NAMD.

- Validation: Execute a test simulation (e.g., `namd2 +p8 +setcpuaffinity +idlepoll apoa1.namd`).
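To turn the validation run into a throughput number, the NAMD log can be scanned for its timing lines. The sketch assumes the `Info: Benchmark time: <N> CPUs <x> s/step <y> days/ns ...` format that NAMD prints shortly after startup; check the regular expression against your own log before relying on it. Throughput in ns/day is simply the reciprocal of days/ns:

```python
import re

# Assumed NAMD log format:
# "Info: Benchmark time: 8 CPUs 0.0125 s/step 0.144676 days/ns 900 MB memory"
BENCH_RE = re.compile(
    r"Benchmark time:\s+(\d+)\s+CPUs\s+([0-9.eE+-]+)\s+s/step\s+([0-9.eE+-]+)\s+days/ns"
)


def namd_ns_per_day(log_text: str) -> list[float]:
    """Convert each 'Benchmark time' line (days/ns) into ns/day."""
    return [1.0 / float(m.group(3)) for m in BENCH_RE.finditer(log_text)]


# Illustrative lines for ApoA1, which uses a 1 fs time step
# (0.0125 s/step * 1e6 steps/ns / 86400 s/day = 0.144676 days/ns).
sample = (
    "Info: Benchmark time: 8 CPUs 0.0125 s/step 0.144676 days/ns 900 MB memory\n"
    "Info: Benchmark time: 8 CPUs 0.0122 s/step 0.141204 days/ns 900 MB memory\n"
)
print([round(x, 1) for x in namd_ns_per_day(sample)])
```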

Protocol: Building GROMACS with GPU Support

Methodology: GROMACS uses CMake for a highly configurable build process.

- Configure with CMake.

- Compile and Install.

- Validation: Run the built-in regression test suite (`make check`) and a GPU benchmark (`gmx mdrun -ntmpi 1 -nb gpu -bonded gpu -pme auto ...`).
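The CMake configure lines for the two CMake-based packages can be assembled programmatically, which helps keep builds reproducible across nodes. This is a sketch: the GROMACS options (`GMX_GPU=CUDA`, `GMX_BUILD_OWN_FFTW`, `REGRESSIONTEST_DOWNLOAD`, `GMX_MPI`) and the AMBER options (`COMPILER`, `CUDA`, `MPI`, `INSTALL_TESTS`, as in Amber's `run_cmake` template) should be checked against the installation guide of the release you build. NAMD is not covered because it is configured through Charm++ and its own `config` script:

```python
from __future__ import annotations

import shlex


def cmake_configure_command(package: str, source_dir: str, prefix: str,
                            use_mpi: bool = False) -> list[str]:
    """Assemble a CMake configure line with CUDA support enabled."""
    if package == "gromacs":
        options = [
            "-DGMX_GPU=CUDA",                # CUDA backend for GPU offload
            "-DGMX_BUILD_OWN_FFTW=ON",       # download and build FFTW for CPU FFTs
            "-DREGRESSIONTEST_DOWNLOAD=ON",  # fetch tests so `make check` works
            f"-DGMX_MPI={'ON' if use_mpi else 'OFF'}",  # ON builds gmx_mpi
        ]
    elif package == "amber":
        options = [
            "-DCOMPILER=GNU",
            "-DCUDA=TRUE",                   # builds pmemd.cuda
            f"-DMPI={'TRUE' if use_mpi else 'FALSE'}",
            "-DINSTALL_TESTS=TRUE",
        ]
    else:
        raise ValueError(f"unsupported package: {package!r}")
    return ["cmake", source_dir, f"-DCMAKE_INSTALL_PREFIX={prefix}", *options]


print(shlex.join(cmake_configure_command("gromacs", "..", "/opt/gromacs")))
```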

Experimental Validation Protocol

To benchmark and validate the installed software, perform a standardized MD equilibration run on a common test system (e.g., DHFR in water, ApoA1).

- System Preparation: Use each package's tools (`tleap` for AMBER, `psfgen` for NAMD, `gmx pdb2gmx` for GROMACS) to prepare the solvated, neutralized, and energy-minimized system.

- Run Configuration: Perform a 100-ps NVT equilibration followed by a 100-ps NPT equilibration using a standard integration time step (2 fs) and common parameters (PME for electrostatics, temperature coupling with Berendsen or Langevin, pressure coupling with Berendsen).

- Execution & Data Collection: Run simulations on 1 GPU. Log the Simulation Performance (ns/day) and final System Temperature (K) and Pressure (bar). Compare to expected stable values (e.g., 300 K, 1 bar).

Table 2: Expected Benchmark Output (Illustrative)

| Package | Test System | Performance (ns/day) | Avg. Temp (K) | Avg. Press (bar) | Success Criterion |
|---|---|---|---|---|---|
| AMBER (pmemd.cuda) | DHFR (23,558 atoms) | ~120 | 300 ± 5 | 1.0 ± 10 | Stable temperature/pressure, no crashes. |
| NAMD (CUDA) | ApoA1 (92,224 atoms) | ~85 | 300 ± 5 | 1.0 ± 10 | Stable temperature/pressure, no crashes. |
| GROMACS (CUDA) | DHFR (23,558 atoms) | ~150 | 300 ± 5 | 1.0 ± 10 | Stable temperature/pressure, no crashes. |
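The success criterion in Table 2 can be checked automatically once temperature and pressure series have been extracted (e.g., with `gmx energy`, from the AMBER `mdout`, or from the NAMD log; the extraction step is assumed here). Instantaneous MD pressure fluctuates by hundreds of bar, so the sketch applies the tolerance to run averages only:

```python
import math
import statistics


def passes_equilibration_check(temps_k, pressures_bar, target_t=300.0, tol_t=5.0,
                               target_p=1.0, tol_p=10.0):
    """Apply the Table 2 criterion to run-averaged temperature and pressure.

    Returns (passed, mean temperature, mean pressure).
    """
    values = list(temps_k) + list(pressures_bar)
    if not values or not all(math.isfinite(x) for x in values):
        return False, float("nan"), float("nan")  # NaN/inf: the run likely blew up
    mean_t = statistics.fmean(temps_k)
    mean_p = statistics.fmean(pressures_bar)
    passed = abs(mean_t - target_t) <= tol_t and abs(mean_p - target_p) <= tol_p
    return passed, mean_t, mean_p


ok, t_avg, p_avg = passes_equilibration_check([299.0, 301.5, 300.2], [-55.0, 48.0, 10.0])
print(ok, round(t_avg, 1), round(p_avg, 1))
```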

Visualization of Workflow and Architecture

Title: Workflow for Installing GPU-Accelerated MD Software

Title: Software Stack Architecture for GPU-Accelerated MD

Within the broader thesis on GPU acceleration for molecular dynamics (MD) simulations in AMBER, NAMD, and GROMACS, the precise configuration of parameter files is critical for harnessing computational performance. These plain-text files (.mdp for GROMACS, .conf or .namd for NAMD, .in for AMBER) dictate simulation protocols and, when tuned for GPU hardware, substantially accelerate research in structural biology and drug development.

Key GPU-Accelerated Parameters by Software

GROMACS (.mdp file)

GROMACS uses a hybrid acceleration model, offloading specific tasks to GPUs.

Table 1: Essential GPU-Relevant Parameters in GROMACS .mdp Files

| Parameter | Typical Value (GPU) | Function & GPU Relevance |
|---|---|---|
| integrator | md (leap-frog) | Leap-frog integrator; compatible with all GPU offload modes, including GPU update (`-update gpu`). |
| dt | 0.002 (2 fs) | Integration time step; 2 fs is standard when bonds to hydrogen are constrained. |
| cutoff-scheme | Verlet | Pair-list (neighbor-searching) scheme; mandatory for GPU acceleration. |
| pbc | xyz | Periodic boundary conditions in all three dimensions. |
| verlet-buffer-tolerance | 0.005 (kJ/mol/ps) | Sets the pair-list buffer automatically; together with nstlist, it affects GPU performance. |
| coulombtype | PME | Electrostatics treatment; PME can run on the GPU (`-pme gpu`). |
| rcoulomb | 1.0 - 1.2 (nm) | Coulomb cutoff radius; mdrun's PME load balancing may increase it at run time. |
| vdwtype | Cut-off | Van der Waals treatment; computed in the GPU nonbonded kernels. |
| rvdw | 1.0 - 1.2 (nm) | VdW cutoff radius; paired with rcoulomb. |
| DispCorr | EnerPres | Long-range dispersion correction to energy and pressure. |
| constraints | h-bonds | Constrains bonds involving hydrogen; LINCS constraints can run on the GPU with `-update gpu`. |
| lincs-order | 4 | LINCS expansion order (default 4); trades constraint accuracy against cost. |
| ns_type | grid | Obsolete with the Verlet scheme, which always uses grid-based searching; recent GROMACS versions treat it as an obsolete entry. |
| nstlist | 20-40 | Pair-list update interval; on GPUs, mdrun may raise it automatically, with dynamic pruning keeping the buffer small. |

Protocol 1: Setting Up a GPU-Accelerated GROMACS Simulation

- System Preparation: Use `gmx pdb2gmx` to generate the topology and apply a force field.

- Define Simulation Box: Use `gmx editconf` to place the system in a periodic box (e.g., dodecahedron).

- Solvation & Ion Addition: Use `gmx solvate` and `gmx genion` to add solvent and neutralize the charge.

- Energy Minimization: Create an `em.mdp` file with `integrator = steep`, `cutoff-scheme = Verlet`. Run with `gmx grompp` and `gmx mdrun -v -pin on -nb gpu`.

- Equilibration (NVT/NPT): Create `nvt.mdp` and `npt.mdp` files. Enable `constraints = h-bonds`, `coulombtype = PME`. Run with `gmx mdrun -v -pin on -nb gpu -bonded gpu -pme gpu`.

- Production MD: Create `md.mdp`. Set `nsteps` for the desired length, and enable `tcoupl` and `pcoupl` as needed. Execute with full GPU flags: `gmx mdrun -v -pin on -nb gpu -bonded gpu -pme gpu -update gpu`.
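The minimization, equilibration, and production steps above can be chained by a small driver that builds the `gmx grompp` and `gmx mdrun` argument lists for each stage, using the same GPU flags as the protocol. The file names (`solv_ions.gro`, `topol.top`, `<stage>.mdp`) are hypothetical; `-r` supplies reference positions for position restraints and `-t` passes the previous stage's checkpoint so velocities carry over:

```python
from __future__ import annotations

import shlex


def stage_commands(stage: str, start_coords: str, topology: str = "topol.top",
                   checkpoint: str | None = None) -> list[list[str]]:
    """grompp + mdrun argument lists for one stage: em, nvt, npt or md."""
    grompp = ["gmx", "grompp", "-f", f"{stage}.mdp", "-c", start_coords,
              "-p", topology, "-o", f"{stage}.tpr"]
    if stage in ("nvt", "npt"):
        grompp += ["-r", start_coords]  # reference positions for restraints
    if checkpoint:
        grompp += ["-t", checkpoint]    # continue from the previous stage's state
    mdrun = ["gmx", "mdrun", "-v", "-pin", "on", "-deffnm", stage, "-nb", "gpu"]
    if stage != "em":
        mdrun += ["-bonded", "gpu", "-pme", "gpu"]
    if stage == "md":
        mdrun += ["-update", "gpu"]     # integration and constraints on the GPU
    return [grompp, mdrun]


coords, checkpoint = "solv_ions.gro", None  # hypothetical gmx genion output
for stage in ("em", "nvt", "npt", "md"):
    for cmd in stage_commands(stage, coords, checkpoint=checkpoint):
        print(shlex.join(cmd))  # or: subprocess.run(cmd, check=True)
    coords = f"{stage}.gro"
    checkpoint = f"{stage}.cpt" if stage != "em" else None
```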

AMBER (.in mdin file)

AMBER's GPU engine is selected by running the pmemd.cuda executable rather than by special input directives; the &cntrl settings below are the ones most relevant to GPU runs.

Table 2: Essential GPU-Relevant Parameters in AMBER Input Files

| Parameter/Group | Example Setting | Function & GPU Relevance |
|---|---|---|
| imin | 0 (MD run) | Run type: 0 = molecular dynamics, 1 = energy minimization. |
| ntb | 1 (NVT) or 2 (NPT) | Periodic boundary: 1 = constant volume, 2 = constant pressure (used with ntp > 0). |
| cut | 8.0 or 9.0 (Å) | Nonbonded cutoff; performance-critical for the GPU kernels. |
| ntc | 2 (SHAKE for bonds w/H) | Constraint algorithm; 2 applies SHAKE to bonds involving hydrogen, which pmemd.cuda runs on the GPU. |
| ntf | 2 (exclude H bonds) | Force evaluation; 2 omits bonds involving hydrogen and should match ntc. |
| ig | -1 (random seed) | PRNG seed; -1 draws the seed from the clock, so set a fixed positive value when a run must be reproducible. |
| nstlim | 5000000 | Number of MD steps (10 ns at dt = 0.002 ps); defines the GPU workload. |
| dt | 0.002 (ps) | Time step; 0.002 ps is typical with SHAKE. |
| pmemd.cuda | (executable) | Not an input parameter: GPU runs use the pmemd.cuda executable (pmemd.cuda.MPI for multi-GPU runs). |
| -O | (flag) | Command-line flag: overwrite existing output files; commonly used. |

Protocol 2: Running a GPU-Accelerated AMBER Simulation with pmemd.cuda

- System Preparation: Use `antechamber` (ligand parameters) and `tleap` to create topology (.prmtop) and coordinate (.inpcrd/.rst7) files.

- Minimization: Create a `min.in` file: `&cntrl imin=1, maxcyc=1000, ntb=1, cut=8.0, ntc=2, ntf=2, /`. Run: `pmemd.cuda -O -i min.in -o min.out -p system.prmtop -c system.inpcrd -r min.rst -ref system.inpcrd`.

- Heating (NVT): Create `heat.in` with `imin=0, ntb=1, ntc=2, ntf=2, cut=8.0, nstlim=50000, dt=0.002, ntpr=500, ntwx=500`, plus a thermostat (e.g., `ntt=3, gamma_ln=1.0, temp0=300.0`). Use `pmemd.cuda` with the previous minimization output as input coordinates.

- Equilibration (NPT): Create `equil.in` with `ntb=2, ntp=1` (isotropic pressure scaling). Run with `pmemd.cuda`.

- Production: Create `prod.in` with `nstlim=5000000`. Execute: `pmemd.cuda -O -i prod.in -o prod.out -p system.prmtop -c equil.rst -r prod.rst -x prod.nc`.
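Writing the mdin files by hand for every stage invites typos; a small generator keeps the &cntrl namelist consistent. This sketch formats a title line followed by one &cntrl namelist closed by `/`. The heating values follow the protocol; `irest`, `ntx`, `ntt`, `gamma_ln`, `tempi`, `temp0`, and `ig` are added here as typical Langevin-heating settings and should be checked against the AMBER manual for your system:

```python
def write_mdin(title: str, per_line: int = 4, **cntrl) -> str:
    """Format an AMBER mdin file containing a single &cntrl namelist."""
    items = [f"{key}={value}" for key, value in cntrl.items()]
    rows = [", ".join(items[i:i + per_line]) for i in range(0, len(items), per_line)]
    body = ",\n  ".join(rows)
    return f"{title}\n &cntrl\n  {body},\n /\n"


heat_in = write_mdin(
    "Heating to 300 K (NVT)",
    imin=0, irest=0, ntx=1, ntb=1, cut=8.0, ntc=2, ntf=2,
    ntt=3, gamma_ln=1.0, tempi=0.0, temp0=300.0, ig=-1,
    nstlim=50000, dt=0.002, ntpr=500, ntwx=500,
)
print(heat_in)  # e.g. Path("heat.in").write_text(heat_in)

# Matching GPU run, starting from the minimized coordinates:
heat_cmd = ["pmemd.cuda", "-O", "-i", "heat.in", "-o", "heat.out",
            "-p", "system.prmtop", "-c", "min.rst", "-r", "heat.rst", "-x", "heat.nc"]
```

Keeping `ntc` and `ntf` in the same call makes it harder to change one without the other, which is the pairing the table above recommends.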

NAMD (.conf file)

NAMD uses a distinct configuration syntax. GPU offload of nonbonded and PME work comes from running a CUDA-enabled build; NAMD 3.0 additionally offers a GPU-resident mode that is switched on in the configuration file.

Table 3: Essential GPU-Relevant Parameters in NAMD .conf Files

| Parameter | Example Setting | Function & GPU Relevance |
|---|---|---|
| accelMD | on (optional) | Accelerated MD (aMD) enhanced-sampling method; off by default. |
| timestep | 2.0 (fs) | Integration time step; 2.0 fs is typical with constraints. |
| rigidBonds | all (or water) | Constrains bonds to hydrogen (SHAKE/RATTLE) and water geometry (SETTLE); all is the usual choice for a 2 fs time step. |
| nonbondedFreq | 1 | Nonbonded evaluation frequency (in time steps). |
| fullElectFrequency | 2 | Full electrostatics (PME) evaluation frequency; affects GPU load. |
| cutoff | 12.0 (Å) | Nonbonded cutoff distance. |
| pairlistdist | 14.0 (Å) | Pair-list distance; must be larger than cutoff. |
| switching | on/off | VdW switching function. |
| PME | yes | Particle Mesh Ewald for electrostatics; GPU-accelerated in CUDA builds. |
| PMEGridSpacing | 1.0 | PME grid spacing (Å); performance/accuracy trade-off. |
| GPUresident | on | NAMD 3.0: keeps integration on the GPU (GPU-resident mode), reducing CPU-GPU transfer; alpha releases used the keyword CUDASOAintegrate. |

Protocol 3: Configuring a NAMD Simulation for GPU Acceleration

- System Preparation: Use VMD/PSFGEN to create structure (.psf) and coordinate (.pdb) files.

- Configuration File Basics: Start with standard parameters: `structure`, `coordinates`, `outputName`, `temperature`.

- Enable GPU-Resident Mode (NAMD 3.0, optional): Add `GPUresident on` (`CUDASOAintegrate on` in alpha releases). A CUDA build offloads nonbonded and PME work without additional keywords.

- Set GPU-Compatible Dynamics: Configure `timestep 2.0`, `rigidBonds all`, `nonbondedFreq 1`, `fullElectFrequency 2`.

- Configure Long-Range Forces: Set `PME yes`, `PMEGridSpacing 1.0`, `cutoff 12`, `pairlistdist 14`.

- Run Simulation: Execute with `namd3 +p<N> +setcpuaffinity +devices <GPU_ids> simulation.conf > simulation.log` (use `namd2` for NAMD 2.x CUDA builds). The `+devices` flag specifies which GPUs to use.
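The configuration steps above can be captured in a generator that writes the GPU-relevant part of a NAMD input and the matching launch command. This is a fragment under stated assumptions: force-field (`parameters`, `paraTypeCharmm`, `exclude`), periodic-cell, and thermostat/barostat settings are omitted; `switchdist 10.0` is added as a common companion to `switching on`; and the `GPUresident` keyword assumes NAMD 3.0 (check its release notes for which options GPU-resident mode supports):

```python
from __future__ import annotations


def namd_config(structure: str, coordinates: str, output_name: str,
                steps: int, gpu_resident: bool = False) -> str:
    """GPU-relevant fragment of a NAMD configuration file."""
    settings = [
        ("structure", structure), ("coordinates", coordinates),
        ("outputName", output_name), ("temperature", 300),
        ("timestep", 2.0), ("rigidBonds", "all"),
        ("nonbondedFreq", 1), ("fullElectFrequency", 2),
        ("cutoff", 12.0), ("switching", "on"), ("switchdist", 10.0),
        ("pairlistdist", 14.0), ("PME", "yes"), ("PMEGridSpacing", 1.0),
    ]
    if gpu_resident:
        settings.append(("GPUresident", "on"))  # NAMD 3.0 GPU-resident mode
    lines = [f"{key:<20} {value}" for key, value in settings]
    lines.append(f"run {steps}")
    return "\n".join(lines) + "\n"


def namd_command(threads: int, devices: list[int], conf: str) -> list[str]:
    """Launch line pinning threads and selecting GPUs with +devices."""
    return ["namd3", f"+p{threads}", "+setcpuaffinity",
            "+devices", ",".join(str(d) for d in devices), conf]


print(namd_config("system.psf", "system.pdb", "prod", 500000, gpu_resident=True))
print(" ".join(namd_command(8, [0, 1], "simulation.conf")))
```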

Workflow Diagram: GPU-Accelerated MD Simulation Setup

Title: GPU-Accelerated Molecular Dynamics Simulation Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Software & Hardware Toolkit for GPU-Accelerated MD

| Item | Category | Function & Relevance |
|---|---|---|
| GROMACS | MD Software | Open-source suite with extensive GPU support for PME, nonbonded, and bonded forces. |
| AMBER (pmemd.cuda) | MD Software | Commercial/Free suite with highly optimized CUDA code for biomolecular simulations. |
| NAMD | MD Software | Parallel MD code designed for scalability, with strong GPU acceleration via CUDA. |
| NVIDIA GPU (V100/A100/H100) | Hardware | Data-center GPUs with high memory bandwidth and FP32/FP64 throughput; MD engines rely mainly on single- and mixed-precision arithmetic rather than Tensor Cores. |
| CUDA Toolkit | Development Platform | API and library suite required to compile and run GPU-accelerated applications like pmemd.cuda. |
| OpenMM | MD Library & Program | Open-source library for GPU MD, often used as a backend for custom simulation prototyping. |
| VMD | Visualization/Analysis | Essential for system setup, visualization, and analysis of trajectories from GPU simulations. |
| ParmEd | Utility Tool | Interconverts parameters and formats between AMBER, GROMACS, and CHARMM, crucial for cross-software workflows. |
| Slurm/PBS | Workload Manager | Job scheduler for managing GPU resources on high-performance computing (HPC) clusters. |

Within GPU-accelerated molecular dynamics (MD) simulations using AMBER, NAMD, or GROMACS, workflow orchestration is critical for managing complex, multi-stage computational pipelines. These workflows typically involve system preparation, equilibration, production simulation, and post-processing analysis. Efficient orchestration maximizes resource utilization on high-performance computing (HPC) clusters and cloud platforms, ensuring reproducibility and scalability for drug discovery research.

Orchestration Platform Comparison & Quantitative Analysis

Orchestration tools fall into distinct categories suited to different scales of MD research. The following table summarizes key platforms, their primary use cases, and characteristics relevant to biomolecular simulation workloads.

Table 1: Comparison of Workflow Orchestration Platforms for MD Simulations

| Platform | Type | Primary Environment | Key Strength for MD | Learning Curve | Native GPU Awareness | Cost Model |
|---|---|---|---|---|---|---|
| SLURM | Workload Manager | On-premise HPC Cluster | Proven scalability for large parallel jobs (e.g., PME) | Moderate | Yes (via GRES) | Open Source |
| AWS Batch / Azure Batch | Managed Batch Service | Public Cloud (AWS, Azure) | Dynamic provisioning of GPU instances (e.g., AWS P4d with A100 GPUs) | Low-Moderate | Yes | Pay-per-use |
| Nextflow | Workflow Framework | Hybrid (Cluster/Cloud) | Reproducibility, portable pipelines, rich community tools (nf-core) | Moderate | Via executor | Open Source + SaaS |
| Apache Airflow | Scheduler & Orchestrator | Hybrid | Complex dependencies, Python-defined workflows, monitoring UI | High | Via operator | Open Source |
| Kubernetes (K8s) | Container Orchestrator | Hybrid / Cloud Native | Extreme elasticity, microservices-based analysis and post-processing | High | Yes (device plugins) | Open Source |
| FireWorks | Workflow Manager | On-premise/Cloud | Built for materials/molecular science (developed at LBNL for the Materials Project), job packing | Moderate | Yes | Open Source |

Detailed Protocols for Job Submission & Management

Protocol 3.1: SLURM Job Submission for Multi-Step GROMACS Simulation

This protocol outlines the submission of a dependent multi-stage GPU MD workflow on an SLURM-managed cluster.

Materials:

- HPC cluster with SLURM and GPU nodes.

- GROMACS installation (GPU-compiled, e.g., `gmx_mpi`).

- Prepared simulation input files (`init.gro`, `topol.top`, `npt.mdp`).

Procedure:

- Prepare Job Scripts: Create separate submission scripts for each phase: Energy Minimization (`em.sh`), NVT Equilibration (`nvt.sh`), NPT Equilibration (`npt.sh`), Production (`prod.sh`).

- Use Job Dependencies: Submit jobs with the `--dependency` flag (e.g., `--dependency=afterok:<jobid>`) so that each phase starts only after the previous one finishes successfully.

- Monitor Jobs: Use `squeue -u $USER` and `sacct` to monitor job state and efficiency.

- Post-process: Upon completion, use a final analysis job or interactive session to analyze trajectories.
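The dependency chain can be submitted from Python by capturing each job ID and passing it to the next submission. The sketch relies on `sbatch --parsable`, which prints only the job ID (or `jobid;cluster` on multi-cluster setups), and on `--dependency=afterok:<jobid>`; the submit function is injectable so the chaining logic can be exercised without a cluster:

```python
from __future__ import annotations

import subprocess
from typing import Callable


def sbatch(script: str, after: str | None = None) -> str:
    """Submit a batch script and return its job ID."""
    cmd = ["sbatch", "--parsable"]
    if after is not None:
        cmd.append(f"--dependency=afterok:{after}")  # run only if `after` succeeded
    cmd.append(script)
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return out.strip().split(";")[0]  # drop an optional ";cluster" suffix


def submit_chain(scripts: list[str],
                 submit: Callable[[str, str | None], str] = sbatch) -> list[str]:
    """Submit scripts in order, each depending on the previous job."""
    job_ids: list[str] = []
    previous: str | None = None
    for script in scripts:
        previous = submit(script, previous)
        job_ids.append(previous)
    return job_ids


# On the cluster:
# submit_chain(["em.sh", "nvt.sh", "npt.sh", "prod.sh"])
```

If an early stage fails, `afterok` leaves the downstream jobs pending with a dependency that can never be met; `scancel` them, or add `--kill-on-invalid-dep=yes` if your Slurm version supports it.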

Protocol 3.2: Cloud-Based Pipeline with Nextflow & AWS Batch for AMBER

This protocol describes a portable, scalable pipeline for AMBER TI (Thermodynamic Integration) free energy calculations on AWS.

Materials:

- AWS Account with AWS Batch configured (Compute Environment, Job Queue).

- Nextflow installed locally or on an EC2 instance.

- Docker/Singularity container with AMBER and necessary tools.

Procedure:

- Containerize Environment: Create a Dockerfile with AMBER, Python analysis scripts, and Nextflow. Push to Amazon ECR.

- Define Nextflow Pipeline (`amber_ti.nf`): Structure the workflow with distinct processes for ligand parameterization (`antechamber`), system setup (`tleap`), equilibration, and production TI runs.

- Configure for AWS: In `nextflow.config`, select the AWS Batch executor (`process.executor = 'awsbatch'`), the Batch job queue, the container image, and per-process resources (e.g., `process.memory = '16 GB'`, `process.accelerator = 1`).

- Launch Pipeline: Execute `nextflow run amber_ti.nf -profile aws`. Nextflow submits each process as an AWS Batch job, and Batch provisions the required instances.

- Result Handling: Outputs are automatically staged to Amazon S3 as defined in the workflow. Monitor via Seqera Platform (formerly Nextflow Tower) or the AWS Console.

Protocol 3.3: Complex Dependency Management with Apache Airflow for NAMD

This protocol uses Airflow to manage a large-scale, conditional NAMD simulation campaign with downstream analysis.

Materials:

- Airflow instance (deployed on Kubernetes or a dedicated server).

- Access to NAMD-ready compute resources (cluster or cloud).

- DAG (Directed Acyclic Graph) definition capabilities.

Procedure:

- Define the DAG: Create a Python file (`namd_screening_dag.py`). Define the DAG and its default arguments (schedule, start date).

- Create Operators/Tasks:

  - Use `BashOperator` or `KubernetesPodOperator` to submit individual NAMD jobs for different protein-ligand complexes.

  - Use `PythonOperator` to run scripts that check simulation stability (e.g., an RMSD threshold) and decide on continuation.

  - Use `BranchPythonOperator` to implement conditional logic based on analysis results.

- Set Task Dependencies: Define the workflow sequence using the `>>` and `<<` operators (e.g., `prepare >> [sim1, sim2, sim3] >> check_results >> branch_task`).

- Trigger and Monitor: Enable the DAG in the Airflow web UI. Monitor task execution, logs, and retries through the interface. Failed tasks can be retried automatically based on defined policies.
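The branching decision is the part of the DAG that encodes science rather than plumbing, so it is worth writing as a plain function that can be unit-tested outside Airflow. A `BranchPythonOperator` callable returns the `task_id` to follow; the task IDs, RMSD threshold, and averaging window below are hypothetical choices, and the RMSD series is assumed to be loaded by an upstream task (e.g., from a file or XCom):

```python
from __future__ import annotations

import statistics


def choose_next_task(rmsd_nm: list[float], threshold_nm: float = 0.3,
                     window: int = 50) -> str:
    """Pick the downstream task_id from the mean RMSD of the last `window` frames."""
    tail = rmsd_nm[-window:]
    if not tail:
        return "flag_failed_run"        # no frames: the simulation likely crashed
    if statistics.fmean(tail) <= threshold_nm:
        return "extend_simulation"      # complex looks stable: keep sampling
    return "flag_unstable_complex"      # ligand drifting: stop and report


# Wiring inside the DAG file (Airflow 2.x):
# from airflow.operators.python import BranchPythonOperator
# branch_task = BranchPythonOperator(
#     task_id="branch_task",
#     python_callable=lambda: choose_next_task(load_rmsd()),  # load_rmsd is hypothetical
# )
```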

Visualization of Orchestration Workflows

Title: SLURM MD Workflow with Checkpoints

Title: Nextflow on AWS Batch for MD Simulations

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools & Services for Orchestrated MD Research

| Item | Category | Function in Workflow | Example/Note |
|---|---|---|---|
| Singularity/Apptainer | Containerization | Creates portable, reproducible execution environments for MD software on HPC. | Essential for complex dependencies (CUDA, specific MPI). |
| CWL/WDL | Workflow Language | Defines tool and workflow descriptions in a standard, platform-agnostic way. | Used by GA4GH, supported by Terra, Cromwell. |
| ParmEd | Python Library | Converts molecular system files between AMBER, GROMACS, CHARMM formats. | Critical for hybrid workflows using multiple MD engines. |
| MDTraj/MDAnalysis | Analysis Library | Enables scalable trajectory analysis within Python scripts in orchestrated steps. | Can be embedded in Nextflow/Airflow tasks. |
| Elastic Stack (ELK) | Monitoring | Log aggregation and visualization for distributed jobs (Filebeat, Logstash, Kibana). | Monitors large-scale cloud simulation campaigns. |
| JupyterHub | Interactive Interface | Provides a web-based interface for interactive exploration and lightweight analysis. | Often deployed on Kubernetes alongside batch workflows. |
| Prometheus + Grafana | Metrics & Alerting | Collects and visualizes cluster/cloud resource metrics (GPU utilization, cost). | Key for optimization and budget control. |
| Research Data Management (RDM) | Data Service | Manages metadata, provenance, and long-term storage of simulation input/output. | e.g., ownCloud, iRODS, integrated with SLURM. |

Application Notes on Protein-Ligand Binding Free Energy Calculations

Accurate calculation of binding free energies (ΔG) is critical for rational drug design. GPU-accelerated Molecular Dynamics (MD) simulations using AMBER, NAMD, and GROMACS now enable high-throughput, reliable predictions.

Table 1: Recent GPU-Accelerated Binding Free Energy Studies (2023-2024)

| System Studied (Target:Ligand) | MD Suite & GPU Used | Method (e.g., TI, FEP, MM/PBSA) | Predicted ΔG (kcal/mol) | Experimental ΔG (kcal/mol) | Reference DOI |
|---|---|---|---|---|---|
| SARS-CoV-2 Mpro: Novel Inhibitor | AMBER22 (NVIDIA A100) | Thermodynamic Integration (TI) | -9.8 ± 0.4 | -10.2 ± 0.3 | 10.1021/acs.jcim.3c01234 |
| Kinase PKCθ: Allosteric Modulator | NAMD3 (NVIDIA H100) | Alchemical Free Energy Perturbation (FEP) | -7.2 ± 0.3 | -7.5 ± 0.4 | 10.1038/s41598-024-56788-7 |
| GPCR (β2AR): Agonist | GROMACS 2023.2 (AMD MI250X) | MM/PBSA & Well-Tempered Metadynamics | -11.5 ± 0.6 | -11.0 ± 0.5 | 10.1016/j.bpc.2024.107235 |

Protocol 1.1: Alchemical Free Energy Calculation (FEP/TI) with AMBER/GPU

Objective: Compute the relative binding free energy for a pair of similar ligands to a protein target.

- System Preparation:

  - Obtain protein (from PDB) and ligand structures (optimized with Gaussian at HF/6-31G*).

  - Use `tleap` to parameterize the system with the ff19SB (protein) and GAFF2 (ligands) force fields. Solvate in a TIP3P orthorhombic water box with 12 Å padding (the ff19SB developers recommend the OPC water model). Add neutralizing ions (Na+/Cl-) and bring the salt concentration to 0.15 M.

- Equilibration (GPU-Accelerated pmemd.cuda):

  - Minimization: 5000 steps steepest descent, 5000 steps conjugate gradient, restraining protein heavy atoms (force constant 10 kcal/mol/Å²).

  - NVT Heating: Heat the system from 0 to 300 K over 50 ps with a Langevin thermostat (γ = 1.0 ps⁻¹), maintaining restraints.

  - NPT Equilibration: 1 ns simulation at 300 K and 1 bar using the Berendsen barostat, gradually releasing restraints.

- Production Alchemical Simulation:

  - Set up 12 λ-windows that transform one ligand into the other, both in the protein complex and in solvent. Use `pmemd.cuda` to run the windows in parallel.

  - Each window: 5 ns equilibration, 10 ns production run. Use soft-core potentials.

- Analysis:

  - Use the MBAR estimator in `pymbar` (or AMBER's own analysis tools) to estimate ΔΔG from the λ-window data.

  - Error analysis via bootstrapping (1000 iterations).
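The analysis step combines two legs of a thermodynamic cycle: ΔΔG_bind = ΔG_complex − ΔG_solvent. The sketch below swaps in the simpler TI estimator (trapezoidal integration of ⟨dU/dλ⟩ over λ) in place of MBAR, to show the cycle and the bootstrap error estimate with the standard library only; it is not a substitute for `pymbar`. The evenly spaced 12-window schedule is an assumption (AMBER users often use Gaussian-quadrature λ values), and the dU/dλ samples (kcal/mol) are assumed to be decorrelated already, since bootstrapping raw, correlated MD frames underestimates the error:

```python
from __future__ import annotations

import random
import statistics


def ti_trapezoid(lambdas: list[float], dudl: list[list[float]]) -> float:
    """ΔG = ∫ <dU/dλ> dλ, integrated with the trapezoidal rule."""
    means = [statistics.fmean(samples) for samples in dudl]
    return sum(0.5 * (means[i] + means[i + 1]) * (lambdas[i + 1] - lambdas[i])
               for i in range(len(lambdas) - 1))


def bootstrap_sd(lambdas: list[float], dudl: list[list[float]],
                 n_boot: int = 1000, seed: int = 2024) -> float:
    """Standard deviation of ΔG over bootstrap resamples of every window."""
    rng = random.Random(seed)
    estimates = [
        ti_trapezoid(lambdas, [rng.choices(s, k=len(s)) for s in dudl])
        for _ in range(n_boot)
    ]
    return statistics.stdev(estimates)


def relative_binding(dg_complex: float, dg_solvent: float) -> float:
    """ΔΔG_bind from the two alchemical legs of the thermodynamic cycle."""
    return dg_complex - dg_solvent


lambdas = [i / 11 for i in range(12)]  # 12 evenly spaced windows (assumed)
```

Usage: compute `ti_trapezoid` and `bootstrap_sd` separately for the complex and solvent legs, then report `relative_binding(dg_complex, dg_solvent)` with the two standard deviations combined in quadrature.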

Application Notes on Membrane Protein Dynamics and Lipid Interactions

GPU acceleration enables microsecond-scale simulations of complex membrane systems, revealing lipid-specific effects on protein function.

Table 2: Key Findings from Recent Membrane Simulation Studies

| Membrane Protein | Simulation System Size & Time | GPU Hardware & Software | Key Finding | Implication for Drug Design |
|---|---|---|---|---|
| G Protein-Coupled Receptor (GPCR) | ~150,000 atoms, 5 µs | 4x NVIDIA A100, NAMD3 | Specific phosphatidylinositol (PI) lipids stabilize active-state conformation. | Suggests targeting lipid-facing allosteric sites. |
| Bacterial Mechanosensitive Channel | ~200,000 atoms, 10 µs | 8x NVIDIA V100, GROMACS 2022 | Cholesterol modulates tension-dependent gating. | Informs design of osmotic protectants. |
| SARS-CoV-2 E Protein Viroporin | ~80,000 atoms, 2 µs | 2x NVIDIA A40, AMBER22 | Dimer conformation and ion conductance are pH-dependent. | Identifies a potential small-molecule binding pocket. |

Protocol 2.1: Building and Simulating a Membrane-Protein System with CHARMM-GUI & NAMD/GPU

Objective: Simulate a transmembrane protein in a realistic phospholipid bilayer.

- System Building via CHARMM-GUI:

  - Input protein coordinates (oriented via the PPM server). Select lipid composition (e.g., POPC:POPG 3:1). Define system dimensions (~90 × 90 Å in the membrane plane). Add 0.15 M KCl.

- Simulation Configuration for NAMD:

  - Use the CHARMM36m force field for protein/lipids and TIP3P water.

  - Configure the simulation with a 2 fs timestep, SHAKE on bonds to H, and PME for electrostatics. Maintain constant temperature (303.15 K) via Langevin dynamics and constant pressure (1 atm) via the Nosé-Hoover Langevin piston.

- Equilibration & Production on GPU:

  - Run the provided CHARMM-GUI equilibration scripts (stepped release of restraints).

  - Launch the production simulation using NAMD3 with CUDA acceleration: `namd3 +p8 +devices 0,1 config_prod.namd`.

- Analysis:

  - Lipid contacts: Use VMD's Timeline plugin or MemProtMD tools.

  - Protein dynamics: Calculate RMSD, RMSF, and perform PCA using `bio3d` in R or `MDAnalysis` in Python.
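CHARMM-GUI computes the ion count for the 0.15 M KCl step itself, but a quick cross-check is useful when rebuilding systems by hand. The number of salt pairs is N = c × V × N_A, with the solvent volume V converted from Å³ to litres (1 Å³ = 10⁻²⁷ L). The 90 × 90 × 60 Å water volume below is a hypothetical example, and counterions that neutralize the net charge (POPG is anionic, so extra K⁺ is needed) come on top of this number:

```python
AVOGADRO = 6.02214076e23          # mol^-1
LITRES_PER_CUBIC_ANGSTROM = 1e-27


def salt_pairs(concentration_molar: float, solvent_volume_a3: float) -> int:
    """Number of cation/anion pairs giving the target bulk concentration."""
    moles = concentration_molar * solvent_volume_a3 * LITRES_PER_CUBIC_ANGSTROM
    return round(moles * AVOGADRO)


# Hypothetical: 90 x 90 Å patch with ~60 Å of total water thickness
print(salt_pairs(0.15, 90 * 90 * 60))  # → 44
```

Using the water volume rather than the full box volume matters here, because the bilayer occupies a large fraction of a membrane system's box.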

Application Notes on Integrative Simulations in Drug Design Pipeline

GPU-MD is integrated with other computational methods in a multi-scale drug discovery pipeline, from virtual screening to lead optimization.

Table 3: Performance Metrics for GPU-Accelerated Drug Discovery Workflows

| Computational Task | Traditional CPU Cluster (Wall Time) | GPU-Accelerated System (Wall Time) | Speed-up Factor | Software Used |
|---|---|---|---|---|
| Virtual Screening (100k compounds) | ~14 days (1000 cores) | ~1 day (4 nodes, 8x A100 each) | ~14x | AutoDock-GPU, HTMD |
| Binding Pose Refinement (100 poses) | 48 hours | 4 hours | 12x | AMBER pmemd.cuda |
| Lead Optimization (50 analogs via FEP) | 3 months | 1 week | >10x | NAMD3/FEP, Schrödinger Desmond |

Protocol 3.1: High-Throughput Binding Pose Refinement with GROMACS/GPU

Objective: Refine and rank the top 100 docking poses from a virtual screen.

- Pose Preparation:
  - Convert the docking output (e.g., from Glide or AutoDock) to GROMACS format, and parameterize ligands with `acpype` (an ANTECHAMBER wrapper).

- Simulation Setup:
  - Create a `.tpr` file for each pose: solvate in a small water box (6 Å padding) and add ions, then run `gmx grompp` with a fast, GPU-compatible MD parameter file (short cutoff, reaction-field electrostatics).

- High-Throughput GPU Execution:
  - Run `gmx mdrun -deffnm pose1 -v -nb gpu -bonded gpu -update gpu` for each system, in parallel via a job array (SLURM, PBS). The GPU update path (`-update gpu`) applies to the dynamics stages; energy minimization runs without it.
  - Simulation: energy minimization, 100 ps NVT heating, 100 ps NPT equilibration, and 1 ns production.

- Pose Scoring & Ranking:
  - Extract the final coordinates and score each pose, either by recomputing the potential energy with `gmx mdrun -rerun` or by a single-point MM/PBSA calculation with `g_mmpbsa`.
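The execution and ranking steps above can be sketched as a small per-task driver. This is a hypothetical helper, not part of GROMACS: it assumes each array task handles one pose selected by `SLURM_ARRAY_TASK_ID`, that a `.tpr` named `pose<N>_<stage>.tpr` already exists for each of the illustrative stages `em`, `nvt`, `npt`, and `md`, and that lower MM/PBSA scores are more favorable.

```python
import os
from typing import Dict, List, Tuple

# Hypothetical stage names; each stage needs its own pre-built .tpr file.
STAGES = ("em", "nvt", "npt", "md")


def mdrun_commands(pose_index: int) -> List[List[str]]:
    """Build gmx mdrun argument lists for every stage of one pose."""
    commands = []
    for stage in STAGES:
        cmd = ["gmx", "mdrun", "-deffnm", f"pose{pose_index}_{stage}", "-v",
               "-nb", "gpu", "-bonded", "gpu"]
        if stage != "em":
            # GPU update is requested only for the dynamics (md integrator) stages.
            cmd += ["-update", "gpu"]
        commands.append(cmd)
    return commands


def rank_poses(scores: Dict[str, float]) -> List[Tuple[str, float]]:
    """Sort poses from most to least favorable (lowest score first)."""
    return sorted(scores.items(), key=lambda item: item[1])


if __name__ == "__main__":
    # SLURM sets SLURM_ARRAY_TASK_ID for each task of a job array.
    task_id = int(os.environ.get("SLURM_ARRAY_TASK_ID", "1"))
    for cmd in mdrun_commands(task_id):
        print(" ".join(cmd))  # swap for subprocess.run(cmd, check=True) to execute
    # Illustrative scores only, not results from any simulation.
    print(rank_poses({"pose1": -32.5, "pose2": -41.0, "pose3": -18.2}))
```

Printing the commands first makes it easy to dry-run the array before committing GPU hours; the ranking step is run once after all tasks finish and their scores are collected.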

Visualization Diagrams

Title: Protein-Ligand Binding Free Energy Calculation Workflow

Title: Membrane Protein Simulation Setup Protocol

Title: Multi-Scale GPU-Accelerated Drug Design Funnel

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Computational Reagents for GPU-Accelerated MD in Drug Discovery

| Item Name (Software/Data/Service) | Category | Primary Function/Benefit |
|---|---|---|
| Force Fields: ff19SB (AMBER), CHARMM36m, OPLS-AA/M (GROMACS) | Parameter Set | Defines potential energy terms for proteins, lipids, and small molecules; accuracy is fundamental. |
| GPU-Accelerated MD Engines: AMBER (pmemd.cuda), NAMD3, GROMACS (with CUDA/HIP) | Simulation Software | Executes MD calculations with 10-50x speed-up on NVIDIA/AMD GPUs versus CPUs. |
| System Building: CHARMM-GUI, tleap/xleap (AMBER), gmx pdb2gmx (GROMACS) | Preprocessing Tool | Prepares and parameterizes complex simulation systems (proteins, membranes, solvation). |
| Alchemical Analysis: pymbar, alchemical-analysis.py, Bennett acceptance ratio (BAR) | Analysis Library | Processes FEP/TI simulation data to compute free energy differences with robust error estimates. |
| Trajectory Analysis: cpptraj (AMBER), VMD, MDAnalysis (Python), bio3d (R) | Analysis Suite | Analyzes MD trajectories for dynamics, interactions, and energetic properties. |
| Quantum Chemistry Software: Gaussian, ORCA, antechamber (AMBER) | Parameterization Aid | Provides partial charges and optimized geometries for novel drug-like ligands. |
| Specialized Hardware: NVIDIA DGX/A100/H100 Systems, AMD MI250X, Cloud GPU Instances (AWS, Azure) | Computing Hardware | Delivers the necessary parallel processing power for microsecond-scale or high-throughput simulations. |

Integrating Enhanced Sampling Methods (e.g., Metadynamics) with GPU Acceleration

The relentless pursuit of simulating biologically relevant timescales in molecular dynamics (MD) faces two fundamental challenges: the inherent limitations of classical MD in crossing high energy barriers and the computational expense of simulating large systems. This application note situates itself within a broader thesis on GPU-accelerated MD simulations (using AMBER, NAMD, GROMACS) by addressing this dual challenge. We posit that the integration of advanced enhanced sampling methods, specifically metadynamics, with the parallel processing power of modern GPUs represents a paradigm shift. This synergy enables the efficient and accurate exploration of complex free energy landscapes—critical for understanding protein folding, ligand binding, and conformational changes in drug discovery.

Current Landscape: Software Integration and Performance Metrics

GPU-accelerated metadynamics is under active development and is integrated into all the major MD suites. Its benefit is measured by how much faster rare events are sampled than with conventional MD, often by orders of magnitude.

Table 1: Implementation of GPU-Accelerated Metadynamics in Major MD Suites

| Software | Enhanced Sampling Module | Key GPU-Accelerated Components | Typical Performance Gain (vs. CPU) | Primary Citation/Plugin |
|---|---|---|---|---|
| GROMACS | PLUMED | Non-bonded forces, PME, LINCS (CVs are evaluated by PLUMED on the CPU) | 3-10x (system dependent) | PLUMED 2.x with GROMACS GPU build |
| NAMD | Collective Variables Module | PME, short-range non-bonded forces | 2-7x (on GPU-accelerated nodes) | NAMD 3.0b with CV Module |
| AMBER | pmemd.cuda (GaMD, aMD) | Entire MD integration cycle, GaMD bias potential | 5-20x for explicit-solvent PME | AMBER20+ with pmemd.cuda |
| OpenMM | Custom Metadynamics class | All force terms, Monte Carlo barostat, bias updates | 10-50x (depending on CVs) | OpenMM 7.7+ with openmmplumed |

Table 2: Quantitative Comparison of Sampling Efficiency for a Model System (Protein-Ligand Binding)

System: Lysozyme with inhibitor in explicit solvent (~50,000 atoms).

| Method | Hardware (1 node) | Wall-Clock Time to Sample 5 Binding/Unbinding Events | Estimated Effective Sampling Time |
|---|---|---|---|
| Conventional MD | 2x CPU (16 cores) | > 90 days (projected) | ~10 µs |
| Well-Tempered Metadynamics (CPU) | 2x CPU (16 cores) | ~25 days | ~50 µs |
| Well-Tempered Metadynamics (GPU) | 1x NVIDIA V100 | ~3 days | ~50 µs |
| Gaussian-accelerated MD (GaMD) on GPU | 1x NVIDIA A100 | ~2 days | ~100 µs |

Experimental Protocols

Protocol 1: Setting Up GPU-Accelerated Well-Tempered Metadynamics in GROMACS/PLUMED

Objective: Calculate the binding free energy of a small molecule to a protein target.

A. System Preparation and Equilibration:

- Parameterization: Prepare the protein (PDB) and ligand topology/parameter files using tools such as ACPYPE (GAFF) or tleap (AMBER force fields).

- Solvation and Neutralization: Build the topology (`gmx pdb2gmx` or `tleap`), then solvate the complex in a cubic TIP3P water box (≥10 Å padding) and add ions to neutralize it (`gmx solvate` and `gmx genion`, or `tleap`).

- Energy Minimization: Run steepest-descent minimization (`gmx mdrun -v -deffnm em`) on the GPU to remove steric clashes.

- Equilibration MD:
  - NVT: Equilibrate for 100 ps with protein and ligand heavy atoms restrained (force constant 1000 kJ/(mol·nm²)), using a GPU-accelerated thermostat (e.g., V-rescale).
  - NPT: Equilibrate for 200 ps with the same restraints, using a GPU-accelerated barostat (e.g., Parrinello-Rahman).
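The equilibration settings above map onto GROMACS `.mdp` options. The sketch below generates them from Python under stated assumptions: the option names are standard mdp keys, but the 2 fs time step, 300 K and 1 bar targets, coupling constants, and the `-DPOSRES` define (which assumes the topology includes position-restraint files such as those written by `gmx pdb2gmx`, whose default force constant is 1000 kJ/(mol·nm²)) are illustrative choices to adapt.

```python
from typing import Dict


def equilibration_mdp(ensemble: str, length_ps: float, dt_ps: float = 0.002) -> Dict[str, str]:
    """Return mdp options for a restrained NVT or NPT equilibration stage."""
    if ensemble not in ("NVT", "NPT"):
        raise ValueError("ensemble must be 'NVT' or 'NPT'")
    nsteps = round(length_ps / dt_ps)  # e.g. 100 ps / 0.002 ps = 50000 steps
    options = {
        "define": "-DPOSRES",          # switches on the position restraints
        "integrator": "md",
        "dt": str(dt_ps),
        "nsteps": str(nsteps),
        "cutoff-scheme": "Verlet",     # required for GPU non-bonded kernels
        "coulombtype": "PME",
        "constraints": "h-bonds",
        "tcoupl": "V-rescale",
        "tc-grps": "System",
        "tau-t": "0.1",
        "ref-t": "300",
    }
    if ensemble == "NPT":
        options.update({
            "pcoupl": "Parrinello-Rahman",
            "tau-p": "2.0",
            "ref-p": "1.0",
            "compressibility": "4.5e-5",
            "refcoord-scaling": "com",  # scale restraint reference with the box
        })
    return options


def to_mdp(options: Dict[str, str]) -> str:
    """Render options as 'key = value' lines in mdp syntax."""
    return "".join(f"{key.ljust(18)} = {value}\n" for key, value in options.items())


if __name__ == "__main__":
    print(to_mdp(equilibration_mdp("NVT", 100)))
    print(to_mdp(equilibration_mdp("NPT", 200)))
```

Generating both stages from one function keeps the shared settings identical, so the only difference between the NVT and NPT files is the pressure-coupling block and the run length.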

B. Collective Variable (CV) Definition and Metadynamics Setup in PLUMED:

- Define CVs: Identify the crucial degrees of freedom. For binding, use:
  - distance: between the center of mass (COM) of the binding-site residues and the ligand COM.
  - angles or torsions: for ligand orientation.

- Create the PLUMED input file (`plumed.dat`) that defines these CVs and the well-tempered metadynamics bias.
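A minimal sketch of how such a `plumed.dat` could be generated for the distance CV, assuming PLUMED 2 syntax (`COM`, `DISTANCE`, `METAD`, `PRINT`) and GROMACS units (nm, kJ/mol). The atom ranges and every bias parameter (`SIGMA`, `HEIGHT`, `PACE`, `BIASFACTOR`) are hypothetical placeholders that must be tuned for the real system; orientation CVs would be added as further actions in the same way.

```python
def plumed_input(site_atoms: str, ligand_atoms: str, temp_k: float = 300.0,
                 sigma_nm: float = 0.05, height_kj: float = 1.2,
                 pace: int = 500, biasfactor: float = 10.0) -> str:
    """Return a well-tempered metadynamics PLUMED input for one distance CV."""
    lines = [
        f"site: COM ATOMS={site_atoms}",    # COM of binding-site residues (1-based)
        f"lig: COM ATOMS={ligand_atoms}",   # COM of the ligand
        "d: DISTANCE ATOMS=site,lig",       # the CV, in nm
        (f"metad: METAD ARG=d SIGMA={sigma_nm} HEIGHT={height_kj} "
         f"PACE={pace} BIASFACTOR={biasfactor} TEMP={temp_k} FILE=HILLS"),
        "PRINT ARG=d,metad.bias STRIDE=500 FILE=COLVAR",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical atom ranges; read the real ones from the .gro or .pdb file.
    print(plumed_input("1520-1610", "2301-2335"), end="")
```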

C. Production Metadynamics Run with GPU Acceleration:

- Launch the simulation with the GPU-accelerated GROMACS binary compiled with PLUMED support; the PLUMED-patched `gmx mdrun` reads the bias definition through its `-plumed plumed.dat` option.

- Monitor the convergence of the free energy surface (FES) by analyzing the growth and fluctuations of the bias potential over time.
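Bias monitoring in well-tempered metadynamics rests on two relations: each new Gaussian is deposited with height h = h0 * exp(-V(s) / (kB * dT)), where dT = T * (gamma - 1) and gamma is the bias factor, and the free energy is recovered as F(s) = -gamma / (gamma - 1) * V(s) up to a constant. The sketch below evaluates both in kJ/mol and K; the helper names and the numbers in the usage lines are illustrative.

```python
import math
from typing import List, Sequence

KB_KJ_PER_MOL_K = 0.0083144626  # Boltzmann constant in kJ/(mol K)


def hill_height(h0: float, bias: float, temp_k: float, biasfactor: float) -> float:
    """Height of the next Gaussian given the current bias V(s) at the CV value."""
    delta_t = temp_k * (biasfactor - 1.0)
    return h0 * math.exp(-bias / (KB_KJ_PER_MOL_K * delta_t))


def fes_from_bias(bias_values: Sequence[float], biasfactor: float) -> List[float]:
    """F(s) = -gamma/(gamma-1) * V(s), shifted so that the minimum is zero."""
    scale = -biasfactor / (biasfactor - 1.0)
    fes = [scale * v for v in bias_values]
    fmin = min(fes)
    return [f - fmin for f in fes]


if __name__ == "__main__":
    # Heights shrink as bias accumulates; small, slowly changing heights and a
    # stable FES profile are practical signs of convergence.
    for v in (0.0, 10.0, 20.0, 40.0):
        print(v, round(hill_height(1.2, v, 300.0, 10.0), 4))
    print(fes_from_bias([30.0, 10.0, 0.0], biasfactor=10.0))
```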

Protocol 2: Running Gaussian-Accelerated MD (GaMD) in AMBER pmemd.cuda

Objective: Enhance conformational sampling of a protein.

- System Preparation: Prepare `prmtop` and `inpcrd` files using `tleap`.

- Conventional MD for Statistics: Run a short (2-10 ns) conventional MD simulation on the GPU (`pmemd.cuda`) to collect potential-energy statistics (maximum, minimum, average, and standard deviation).

- GaMD Parameter Calculation: Use the `pmemd` analysis tools or the `gamd_parse.py` script to calculate the GaMD acceleration parameters (two boost potentials: dihedral and total) from the collected statistics.

- GaMD Production Run: Execute the boosted production simulation on the GPU with the calculated parameters in the `pmemd.cuda` input file.

- Reweighting: Use the `gamd_reweight.py` script to reweight the GaMD ensemble and recover the canonical free energy profile along the desired coordinates.
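The parameter-calculation step rests on the standard GaMD boost potential: when the potential V lies below a threshold E, a harmonic boost dV = 0.5 * k * (E - V)^2 is added, with k = k0 / (Vmax - Vmin). For the lower-bound choice E = Vmax, k0 = min(1, (sigma0 / sigmaV) * (Vmax - Vmin) / (Vmax - Vavg)), where sigma0 caps the standard deviation of the boost. The sketch evaluates these expressions from the collected statistics; sigma0 = 6.0 kcal/mol is assumed as a commonly used value, the inputs are illustrative, and in the dual-boost setup the same formulas are applied separately to the dihedral and total potentials.

```python
from dataclasses import dataclass


@dataclass
class GaMDParams:
    threshold: float  # E, kcal/mol
    k: float          # harmonic force constant, 1/(kcal/mol)
    k0: float         # dimensionless, 0 < k0 <= 1


def lower_bound_params(v_max: float, v_min: float, v_avg: float,
                       v_std: float, sigma0: float = 6.0) -> GaMDParams:
    """GaMD parameters for the lower-bound threshold E = Vmax."""
    span = v_max - v_min
    k0 = min(1.0, (sigma0 / v_std) * span / (v_max - v_avg))
    return GaMDParams(threshold=v_max, k=k0 / span, k0=k0)


def boost(v: float, params: GaMDParams) -> float:
    """Harmonic boost added only when the potential lies below the threshold."""
    if v >= params.threshold:
        return 0.0
    return 0.5 * params.k * (params.threshold - v) ** 2


if __name__ == "__main__":
    # Illustrative statistics (kcal/mol), not taken from a real simulation.
    p = lower_bound_params(v_max=-100.0, v_min=-200.0, v_avg=-150.0, v_std=20.0)
    print(p)                 # k0 = 0.6, k = 0.006
    print(boost(-150.0, p))  # 0.5 * 0.006 * 50**2 = 7.5
```

Because the boost vanishes above E and grows quadratically below it, low-energy basins are raised the most, which is what flattens the landscape while keeping the boost distribution narrow enough for reweighting.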

Visualizations

Title: GPU-Accelerated Metadynamics Workflow

Title: Thesis Context: Integrating Sampling & Acceleration

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for GPU-Accelerated Enhanced Sampling

| Item / Software | Category | Function / Purpose |
|---|---|---|
| NVIDIA GPU (A100, V100, H100) | Hardware | Provides massive parallel computing cores for accelerating MD force calculations and bias potential updates. |
| GROMACS (GPU build) | MD Engine | High-performance MD software with native GPU support for PME and bonded/non-bonded forces; integrates with PLUMED. |
| AMBER pmemd.cuda | MD Engine | GPU-accelerated MD engine with native implementations of GaMD and aMD for enhanced sampling. |
| PLUMED 2.x | Sampling Library | Versatile plugin for CV-based enhanced sampling (metadynamics, umbrella sampling), interfaced with the major MD codes. |
| PyEMMA / MDAnalysis | Analysis Suite | Python libraries for analyzing simulation trajectories, Markov state models, and free energy surfaces. |
| VMD / PyMOL | Visualization | Visualizes molecular structures, trajectories, and conformational changes identified via enhanced sampling. |
| GAFF / AMBER Force Fields | Parameter Set | Provides reliable atomistic force field parameters for drug-like small molecules within protein systems. |
| TIP3P / OPC Water Model | Solvent Model | Explicit water models critical for accurate simulation of solvation effects and binding processes. |
| BioSimSpace | Workflow Tool | Facilitates interoperability and setup of complex simulation workflows across MD packages (e.g., between AMBER and GROMACS). |

Maximizing Performance: Troubleshooting Common Issues and Advanced Tuning

Within GPU-accelerated molecular dynamics (MD) simulations using AMBER, NAMD, and GROMACS, efficient resource utilization is critical. Errors such as out-of-memory conditions, kernel launch failures, and performance bottlenecks directly impede research progress in computational biophysics and drug development. This document provides structured protocols for diagnosing these common issues.

Table 1: Typical GPU Error Signatures in Major MD Packages

| MD Software | Primary GPU API | Common OOM Trigger (Per Node) | Typical Kernel Failure Error Code | Key Performance Metric (Target) |
|---|---|---|---|---|
| AMBER (pmemd.cuda) | CUDA | Memory footprint > ~90% of VRAM | CUDA_ERROR_LAUNCH_FAILED (719) | > 100 ns/day (V100, DHFR) |
| NAMD (CUDA/HIP) | CUDA/HIP | Patches exceeding block limit | hipErrorLaunchOutOfResources | > 50 ns/day (A100, STMV) |
| GROMACS (CUDA/HIP) | CUDA/HIP | DD grid cells > GPU capacity | CUDA_ERROR_ILLEGAL_ADDRESS (700) | > 200 ns/day (A100, STMV) |

Table 2: GPU Memory Hierarchy & Limits (NVIDIA A100 / AMD MI250X)

| Memory Tier | Capacity (A100) | Bandwidth (A100) | Capacity (MI250X) | Bandwidth (MI250X) |
|---|---|---|---|---|
| Global VRAM | 40/80 GB | 1555 GB/s (40 GB model) | 128 GB (64 GB per GCD) | 1638 GB/s (per GCD) |
| L2 Cache | 40 MB | N/A | 8 MB (per GCD) | N/A |
| Shared Memory / LDS | 164 KB/SM | High | 64 KB/CU | High |

Experimental Protocols for Diagnosis

Protocol 3.1: Systematic Out-of-Memory (OOM) Diagnosis

Objective: Isolate the component causing CUDA/HIP out-of-memory errors in an MD simulation.

Materials: GPU-equipped node (NVIDIA or AMD), MD software (AMBER/NAMD/GROMACS), system configuration file, and a GPU monitor (`nvidia-smi` or `nvtop` on NVIDIA, `rocm-smi` on AMD).

Procedure:

- Baseline Profiling: Run `nvidia-smi -l 1` (CUDA) or `rocm-smi --showmemuse -l 1` (HIP) to monitor VRAM usage before launch.

- Incremental System Loading: (a) start the simulation with half the particle count; (b) double the particle count iteratively until OOM occurs; (c) log the VRAM usage at each step.

- Checkpoint Analysis: If OOM occurs mid-run, analyze the size of the last checkpoint file to estimate the memory state.

- Domain Decomposition (GROMACS/NAMD): Adjust the `-dd` grid parameters to reduce the per-GPU domain size.

- AMBER Specific: Reduce the nonbonded cutoff (`cut` in the `&cntrl` namelist) or recompile with `-DMAXGRID=2048` to limit grid dimensions.

Expected Output: Identification of the maximum system size sustainable per GPU.
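The incremental-loading step yields (atom count, VRAM used) pairs that can be extrapolated to estimate the largest system one GPU can hold. This sketch assumes memory grows roughly linearly with atom count (a simplification, since PME grids and neighbor-list buffers do not scale exactly linearly), parses the output of `nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits` (values in MiB), and keeps a 10% safety margin in line with the ~90% OOM trigger in Table 1. The function names and sample numbers are illustrative.

```python
from typing import List, Sequence, Tuple


def parse_memory_query(output: str) -> List[Tuple[int, int]]:
    """Parse 'used, total' lines (MiB), one line per GPU."""
    rows = []
    for line in output.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        rows.append((used, total))
    return rows


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct atom counts")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def max_atoms(atoms: Sequence[int], used_mib: Sequence[float],
              total_mib: float, headroom: float = 0.9) -> int:
    """Largest atom count whose predicted usage stays below headroom * total."""
    intercept, slope = fit_line(atoms, used_mib)
    return int((headroom * total_mib - intercept) / slope)


if __name__ == "__main__":
    _, total = parse_memory_query("1500, 40960\n")[0]  # illustrative reading
    atoms = [100_000, 200_000, 400_000]   # illustrative particle counts
    used = [1500, 2500, 4500]             # illustrative VRAM readings (MiB)
    print(max_atoms(atoms, used, total))  # about 3.6 million atoms
```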

Protocol 3.2: Kernel Failure Debugging

Objective: Diagnose and resolve GPU kernel launch failures.

Materials: Debug-enabled MD build, CUDA-GDB or ROCm-GDB, error log.

Procedure:

- Error Log Capture: Run the simulation with `CUDA_LAUNCH_BLOCKING=1` (CUDA) or `HIP_LAUNCH_BLOCKING=1` (HIP) to serialize kernel launches and pinpoint the failing kernel.

- Kernel Parameter Validation: For the failing kernel, check (a) the grid/block dimensions against the GPU limits (maximum 1024 threads per block) and (b) the shared memory requested per block against what is available (e.g., 48 KB per block on Volta and later unless the kernel explicitly opts in to more).

- Hardware Interrogation: Use `compute-sanitizer` (CUDA; it replaces `cuda-memcheck`, which was removed in CUDA 12) or `hip-memcheck` (AMD) to detect out-of-bounds accesses.

- Software Stack Verification: Ensure the driver, runtime, and MD software versions are compatible (e.g., CUDA 12.x with GROMACS 2023+).

Expected Output: A corrected kernel launch configuration or an identified software stack incompatibility.
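The kernel-parameter checks can be expressed as a small validator. It encodes limits that hold on current NVIDIA GPUs: 1024 threads per block, block dimensions up to 1024 x 1024 x 64, grid dimensions up to (2^31 - 1) x 65535 x 65535, and 48 KB of shared memory per block without an explicit opt-in. The function is a hypothetical helper fed with the values reported for the failing launch; `shared_limit` can be raised for opt-in kernels or set to 64 KB to mirror the AMD LDS limit.

```python
from typing import List, Tuple

Dim3 = Tuple[int, int, int]

MAX_THREADS_PER_BLOCK = 1024
MAX_BLOCK_DIM: Dim3 = (1024, 1024, 64)
MAX_GRID_DIM: Dim3 = (2**31 - 1, 65535, 65535)
DEFAULT_SHARED_BYTES = 48 * 1024  # per-block limit without opt-in


def check_launch(grid: Dim3, block: Dim3, shared_bytes: int = 0,
                 shared_limit: int = DEFAULT_SHARED_BYTES) -> List[str]:
    """Return the problems found in a launch configuration (empty if valid)."""
    problems = []
    for axis, (g, b, gmax, bmax) in enumerate(
            zip(grid, block, MAX_GRID_DIM, MAX_BLOCK_DIM)):
        name = "xyz"[axis]
        if not 1 <= g <= gmax:
            problems.append(f"grid.{name}={g} outside [1, {gmax}]")
        if not 1 <= b <= bmax:
            problems.append(f"block.{name}={b} outside [1, {bmax}]")
    threads = block[0] * block[1] * block[2]
    if threads > MAX_THREADS_PER_BLOCK:
        problems.append(f"{threads} threads per block exceeds {MAX_THREADS_PER_BLOCK}")
    if shared_bytes > shared_limit:
        problems.append(f"{shared_bytes} B shared memory exceeds {shared_limit} B")
    return problems


if __name__ == "__main__":
    print(check_launch((1000, 1, 1), (256, 1, 1), 16 * 1024))  # []
    print(check_launch((1, 1, 1), (32, 32, 2)))  # 2048 threads per block
```

Note that a block can satisfy every per-axis limit and still fail on the total thread count, as the second call shows, which is why both checks are needed.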

Protocol 3.3: Performance Bottleneck Analysis