Optimization Algorithms for Parameter Estimation in Drug Development: From Foundational Principles to AI-Driven Applications

Abstract

This article provides a comprehensive exploration of optimization algorithms for parameter estimation, tailored for researchers and drug development professionals. It covers the foundational principles of parameter estimation within Model-Informed Drug Development (MIDD) and fit-for-purpose modeling frameworks. The piece delves into specific methodological applications, including AI and machine learning integration, advanced hybrid algorithms like HSAPSO, and their use in pharmacokinetic/pharmacodynamic (PK/PD) modeling and ADMET prediction. It also addresses critical troubleshooting strategies for overcoming common challenges such as data quality issues and model overfitting, and concludes with rigorous validation techniques and comparative analyses of different algorithmic approaches to ensure regulatory readiness and robust model performance.

The Core Principles: Understanding Parameter Estimation in Modern Drug Development

The Pivotal Role of Parameter Estimation in Model-Informed Drug Development (MIDD)

Technical Support: Frequently Asked Questions (FAQs)

FAQ 1: Why are my parameter estimates unstable or associated with unacceptably high variance?

- Problem Explanation: This is a classic symptom of multicollinearity: strong correlations between explanatory variables inflate the variance of the parameter estimates, which can produce coefficients with unexpected signs and make the fitted model unreliable [1].

- Solution:

- For Generalized Linear Models (e.g., Poisson Regression): Replace the standard Poisson Maximum Likelihood Estimator (PMLE) with a robust biased estimator. The proposed Robust Poisson Two-Parameter Estimator (PMT-PTE) is designed to handle both multicollinearity and outliers simultaneously, providing more stable estimates [1].

- General Practice: Incorporate regularization techniques (e.g., ridge regression) that introduce a small bias to achieve a substantial reduction in the variance of the estimates.
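
The bias-variance trade-off behind the ridge recommendation can be illustrated with a small simulation. This is a minimal sketch rather than the estimator from [1]: the two-predictor model, the noise levels, and the penalty value `ridge=1.0` are all assumptions chosen for illustration.

```python
import random

def fit_two_predictors(x1, x2, y, ridge=0.0):
    """Solve (X'X + ridge*I) b = X'y for two predictors (no intercept)."""
    s11 = sum(a * a for a in x1) + ridge
    s22 = sum(b * b for b in x2) + ridge
    s12 = sum(a * b for a, b in zip(x1, x2))
    t1 = sum(a * c for a, c in zip(x1, y))
    t2 = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)

def coefficient_spread(ridge, n_sims=200, n_obs=30, seed=0):
    """Sample variance of the first coefficient across simulated datasets."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_sims):
        x1 = [rng.gauss(0, 1) for _ in range(n_obs)]
        # x2 is almost a copy of x1: strong multicollinearity
        x2 = [a + rng.gauss(0, 0.05) for a in x1]
        # True coefficients are (1, 1) with unit-variance noise
        y = [a + b + rng.gauss(0, 1) for a, b in zip(x1, x2)]
        estimates.append(fit_two_predictors(x1, x2, y, ridge)[0])
    mean = sum(estimates) / n_sims
    return sum((e - mean) ** 2 for e in estimates) / (n_sims - 1)

ols_var = coefficient_spread(ridge=0.0)
ridge_var = coefficient_spread(ridge=1.0)
print(f"variance of b1: unpenalized={ols_var:.2f}, ridge={ridge_var:.3f}")
```

Both calls use the same seed, so the two estimators see identical simulated datasets and the difference in spread comes from the penalty alone.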

FAQ 2: How can I efficiently perform covariate selection for a Nonlinear Mixed-Effects (NLME) model without repeated, time-consuming model runs?

- Problem Explanation: Traditional stepwise covariate selection requires manually testing numerous covariate-parameter combinations, which is computationally expensive and inefficient [2].

- Solution:

- Adopt a novel Generative AI framework using Variational Autoencoders (VAEs). This method replaces the standard Evidence Lower Bound objective with one based on the corrected Bayesian information criterion.

- This allows for the simultaneous evaluation of all potential covariate-parameter combinations, enabling automated, joint estimation of population parameters and covariate selection in a single run [2].

FAQ 3: My model's performance is highly sensitive to outliers in the dataset. What robust methods are available?

- Problem Explanation: Outliers can disproportionately influence parameter estimates, distorting results and reducing predictive accuracy. This is a known issue in both linear regression and models like Poisson Regression [1].

- Solution:

- Use robust regression estimators. For Poisson regression, the Transformed M-estimator (MT) can be combined with biased estimators (like the proposed PMT-PTE) to effectively mitigate the influence of outliers while also addressing multicollinearity [1].

- These methods reduce the weight of influential data points, leading to more reliable parameter estimates that better represent the majority of the data.
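
The down-weighting idea can be seen in a generic Huber M-estimator of location. This is a sketch of the general principle only, not the Transformed M-estimator (MT) or PMT-PTE from [1]; the tuning constant `c=1.345` and the sample values are assumptions.

```python
import statistics

def huber_location(values, c=1.345, tol=1e-8, max_iter=100):
    """Huber M-estimate of location via iteratively reweighted averaging."""
    mu = statistics.median(values)
    # Robust scale: median absolute deviation, rescaled for normal data
    scale = 1.4826 * statistics.median([abs(v - mu) for v in values])
    weights = [1.0] * len(values)
    for _ in range(max_iter):
        # Points within c scale units keep full weight; others are shrunk
        weights = []
        for v in values:
            r = abs(v - mu) / scale
            weights.append(1.0 if r <= c else c / r)
        new_mu = sum(w * v for w, v in zip(weights, values)) / sum(weights)
        if abs(new_mu - mu) < tol:
            return new_mu, weights
        mu = new_mu
    return mu, weights

# Hypothetical measurements with one gross outlier (45.0)
sample = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3, 45.0]
estimate, weights = huber_location(sample)
print(f"mean={statistics.mean(sample):.2f}, huber={estimate:.2f}, "
      f"outlier weight={weights[-1]:.3f}")
```

The ordinary mean is pulled toward the outlier, while the Huber estimate stays near the bulk of the data because the outlier's weight is close to zero.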

FAQ 4: What is a "Fit-for-Purpose" approach in MIDD, and how does it guide parameter estimation?

- Problem Explanation: Selecting an overly complex or overly simplistic model can lead to poor predictions and misguided decisions [3].

- Solution:

- Align your modeling and parameter estimation strategy directly with the Key Question of Interest (QOI) and Context of Use (COU) [3].

- For early discovery (e.g., target identification), simpler models may be sufficient.

- For critical decisions (e.g., dose optimization for regulatory submission), more rigorous methods like Population PK/PD modeling or Quantitative Systems Pharmacology (QSP) are required. The model's level of complexity and the robustness of parameter estimation should be justified by its intended impact on development decisions [3].

FAQ 5: How can I enhance the predictive power and credibility of my mechanistic model?

- Problem Explanation: Models that focus solely on quantitative fit may miss key qualitative, system-level behaviors (e.g., bistability), limiting their predictive value and biological plausibility [4].

- Solution:

- Ensure your model captures emergent properties across biological scales, from molecular interactions to organ-level function [4].

- Integrate foundational biomedical knowledge from physiology and molecular biology into the model structure.

- Proactively and cautiously adapt existing literature models to your specific context through a "learn and confirm" paradigm, critically assessing their biological assumptions and parameter sources before applying them to new data [4].

Experimental Protocols & Methodologies

Protocol: Automated Covariate Selection using a Variational Autoencoder (VAE) Framework

This protocol details the methodology for streamlining covariate selection in NLME models, as presented in the research "Redefining Parameter Estimation and Covariate Selection via Variational Autoencoders" [2].

- Objective: To enable joint estimation of population parameters and covariate selection in a single, automated run.

- Background: NLME models are central to pharmacometrics but traditionally require manual, stepwise covariate model building.

- Materials/Software:

- NLME dataset

- VAE software framework as described in the primary research [2]

- Method Steps:

- Model Setup: Define the base NLME model without covariates.

- VAE Integration: Implement the VAE framework, which is designed to learn structured representations from complex, high-dimensional data.

- Objective Function Modification: Replace the standard VAE evidence lower bound (ELBO) objective function with an objective function based on the corrected Bayesian information criterion (BIC).

- Simultaneous Evaluation: Allow the modified VAE framework to evaluate all potential covariate-parameter combinations concurrently within the model optimization process.

- Output: The algorithm outputs the final model, which includes the identified significant covariate-parameter relationships and the associated population parameter estimates.

- Key Outcomes: This approach eliminates the need for manual selection and repeated model fitting, outperforming traditional procedures in efficiency while maintaining high-quality results [2].
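
To give a feel for how an information-criterion objective penalizes extra covariates, the sketch below scores three candidate models with the standard BIC. It does not reproduce the corrected BIC or the VAE framework of [2]; the model names, log-likelihoods, and sample size are hypothetical.

```python
import math

def bic(log_likelihood, n_params, n_obs):
    """Standard Bayesian information criterion (lower is better)."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood

# Hypothetical fits: (maximized log-likelihood, number of estimated parameters)
candidates = {
    "base": (-512.4, 5),
    "base + WT on CL": (-498.1, 6),
    "base + WT on CL + AGE on V": (-497.6, 7),
}
n_obs = 240

scores = {name: bic(ll, k, n_obs) for name, (ll, k) in candidates.items()}
best = min(scores, key=scores.get)
for name, score in scores.items():
    print(f"{name:28s} BIC = {score:.2f}")
print("selected:", best)
```

In this made-up example the second covariate improves the log-likelihood by less than its penalty, so the one-covariate model is selected.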

Protocol: Implementing a Robust Poisson Two-Parameter Estimator (PMT-PTE)

This protocol outlines the procedure for applying the PMT-PTE to manage outliers and multicollinearity in Poisson regression, based on the work by Lukman et al. [1].

- Objective: To obtain stable and reliable parameter estimates for a Poisson regression model in the presence of multicollinearity and outliers.

- Background: The Poisson Maximum Likelihood Estimator (PMLE) is highly sensitive to both multicollinearity and outliers, which can distort results.

- Materials/Software:

- Dataset with count-based response variable and potentially correlated predictors.

- Statistical software capable of implementing custom estimation routines (e.g., R, Python).

- Method Steps:

- Diagnostics: Confirm the presence of multicollinearity (e.g., via Variance Inflation Factors) and identify potential outliers.

- Estimator Formulation: The proposed PMT-PTE estimator combines the Transformed M-estimator (MT) to handle outliers with a two-parameter biased estimation technique to handle multicollinearity. The form of the estimator is:

$\hat{\beta}_{\text{PMT-PTE}} = (D + kI)^{-1}(D + dI)\,\hat{\beta}_{MT}$

where $\hat{\beta}_{MT}$ is the robust Transformed M-estimator, $D = X^\prime \hat{U} X$, and $k$ and $d$ are the biasing parameters [1].

- Parameter Selection: Optimize the biasing parameters $k$ and $d$ to minimize the Mean Squared Error (MSE), typically via Monte Carlo simulation or cross-validation on the specific dataset.

- Model Fitting: Solve for the regression coefficients using the proposed PMT-PTE estimator instead of the standard PMLE.

- Key Outcomes: Simulation results indicate that the PMT-PTE estimator outperforms other estimators (like PMLE, Poisson Ridge, etc.) in scenarios with coexisting multicollinearity and outliers, as measured by a lower MSE [1].
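
The shrinkage step of this protocol, applying (D + kI)^{-1}(D + dI) to a robust starting estimate, can be sketched in a few lines. This assumes D and the MT estimate have already been computed; the matrix `D`, the vector `beta_mt`, and the values of `k` and `d` below are hypothetical, and the small linear solver is included only to keep the example self-contained.

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def two_parameter_shrink(d_matrix, beta_mt, k, d):
    """Apply (D + kI)^{-1} (D + dI) to a robust initial estimate beta_mt."""
    n = len(beta_mt)
    # Right-hand side: (D + dI) @ beta_mt
    rhs = [sum(d_matrix[i][j] * beta_mt[j] for j in range(n)) + d * beta_mt[i]
           for i in range(n)]
    # Left-hand side matrix: D + kI
    lhs = [[d_matrix[i][j] + (k if i == j else 0.0) for j in range(n)]
           for i in range(n)]
    return solve(lhs, rhs)

# Hypothetical ill-conditioned D = X' U X and robust starting estimate
D = [[10.0, 9.9], [9.9, 10.0]]
beta_mt = [2.5, -1.5]
shrunk = two_parameter_shrink(D, beta_mt, k=0.5, d=0.1)
print("MT estimate:", beta_mt)
print("shrunk     :", [round(b, 4) for b in shrunk])
```

When `k` equals `d` the two factors cancel and the starting estimate is returned unchanged; with `k` larger than `d`, the component along the poorly determined direction of `D` is shrunk the most.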

Table: Comparison of Poisson Regression Estimators

The following table summarizes the performance characteristics of various estimators for Poisson regression models under different data challenges, as evaluated in a Monte Carlo simulation study [1].

| Estimator Name | Acronym | Primary Strength | Limitations | Reported Performance (MSE) |

|---|---|---|---|---|

| Poisson Maximum Likelihood Estimator | PMLE | Standard, unbiased estimator | Highly sensitive to multicollinearity & outliers | Highest MSE in adverse conditions [1] |

| Poisson Ridge Estimator | - | Handles multicollinearity | Does not address outliers | Higher MSE than robust estimators when outliers exist [1] |

| Transformed M-estimator (MT) | MT | Robust against outliers | Does not fully address multicollinearity | Improved over PMLE, but outperformed by combined methods [1] |

| Robust Poisson Two-Parameter Estimator | PMT-PTE | Handles both multicollinearity & outliers | Requires optimization of two parameters | Lowest MSE when both problems are present [1] |

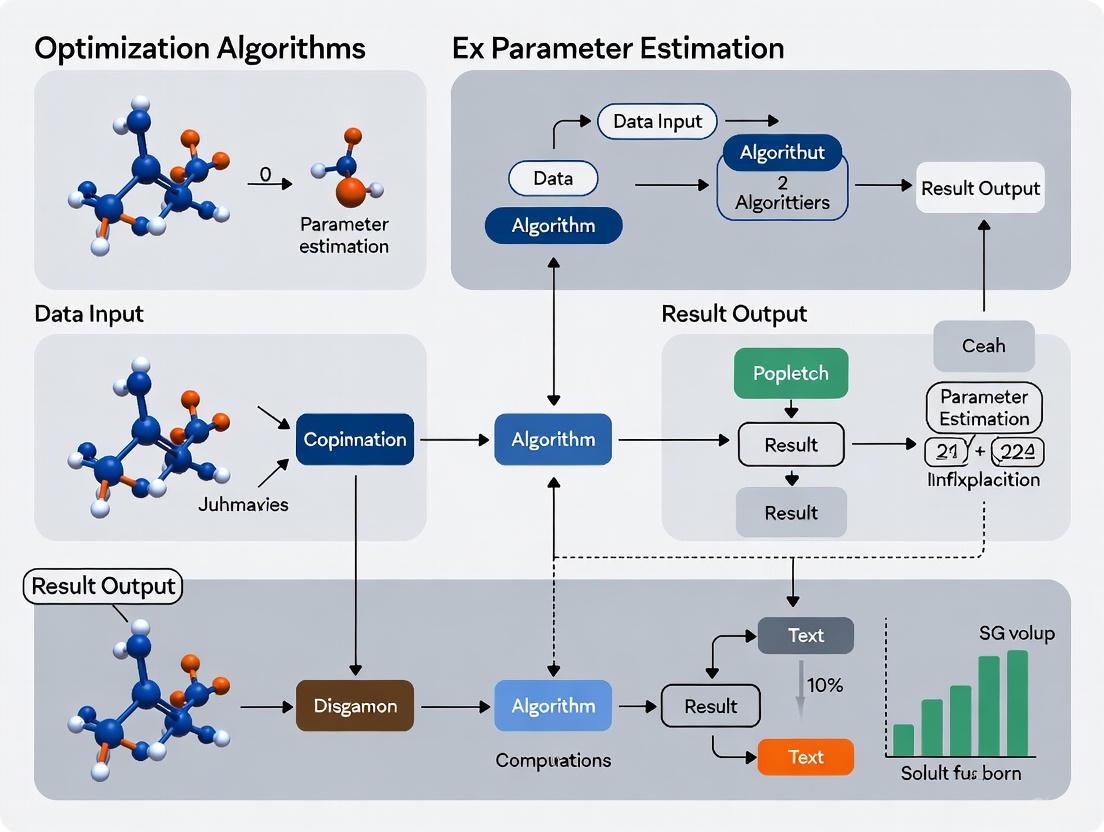

Workflow and Pathway Visualizations

VAE for Covariate Selection Workflow

Multiscale Emergent Properties

Robust Parameter Estimation Logic

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table: Key MIDD and Optimization Tools

This table details essential methodological "tools" and their functions in parameter estimation and model-informed drug development [5] [3] [4].

| Tool / Methodology | Function in Parameter Estimation & MIDD |

|---|---|

| Nonlinear Mixed Effects (NLME) Modeling | A foundational framework for quantifying fixed effects (typical values) and random effects (variability) of parameters in a population [5]. |

| Variational Autoencoder (VAE) | A generative AI framework used to automate and streamline complex tasks like covariate selection and parameter estimation in a single run [2]. |

| Robust Biased Estimators (e.g., PMT-PTE) | A class of statistical estimators designed to provide stable parameter estimates when data suffers from multicollinearity and/or outliers [1]. |

| Quantitative Systems Pharmacology (QSP) | Integrative, multiscale modeling that uses prior knowledge and experimental data to estimate system-specific parameters, helping to predict clinical efficacy and toxicity [3] [4]. |

| Physiologically Based Pharmacokinetic (PBPK) Modeling | A mechanistic approach to estimate and predict drug absorption, distribution, metabolism, and excretion (ADME) based on physiology, drug properties, and experimental data [3]. |

| Model-Based Meta-Analysis (MBMA) | Quantitatively integrates summary results from multiple clinical trials to estimate overall treatment effects and understand between-study heterogeneity [3]. |

| Bayesian Inference | A probabilistic approach to parameter estimation that combines prior knowledge with newly collected data to produce a posterior distribution of parameter values [3]. |

| Iteratively Reweighted Least Squares (IRLS) | The standard algorithm used to compute parameter estimates for Generalized Linear Models, such as the Poisson regression model [1]. |
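
The IRLS entry in the table can be made concrete for the simplest Poisson regression, a log-linear model with an intercept and one predictor. This is a minimal sketch with hypothetical dose levels and counts; production fits would normally rely on an established statistics package.

```python
import math

def poisson_irls(x, y, n_iter=25, tol=1e-10):
    """Fit log(mu) = b0 + b1*x by IRLS (Fisher scoring) for a Poisson GLM."""
    b0, b1 = math.log(sum(y) / len(y)), 0.0
    for _ in range(n_iter):
        eta = [b0 + b1 * xi for xi in x]
        mu = [math.exp(e) for e in eta]
        # Working response and weights for the log link
        z = [e + (yi - m) / m for e, yi, m in zip(eta, y, mu)]
        w = mu
        # Weighted least squares of z on (1, x): 2x2 normal equations
        sw = sum(w)
        swx = sum(wi * xi for wi, xi in zip(w, x))
        swxx = sum(wi * xi * xi for wi, xi in zip(w, x))
        swz = sum(wi * zi for wi, zi in zip(w, z))
        swxz = sum(wi * xi * zi for wi, xi, zi in zip(w, x, z))
        det = sw * swxx - swx * swx
        new_b0 = (swxx * swz - swx * swxz) / det
        new_b1 = (sw * swxz - swx * swz) / det
        converged = abs(new_b0 - b0) + abs(new_b1 - b1) < tol
        b0, b1 = new_b0, new_b1
        if converged:
            break
    return b0, b1

# Hypothetical dose levels and observed event counts
dose = [0, 1, 2, 3, 4, 5]
counts = [2, 3, 6, 7, 8, 9]
b0, b1 = poisson_irls(dose, counts)
print(f"intercept={b0:.4f}, slope={b1:.4f}")
```

Each iteration is an ordinary weighted least-squares fit, which is why multicollinearity in the predictors destabilizes the Poisson estimates in the same way it does in linear regression.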

In modern drug development and parameter estimation research, the "Fit-for-Purpose" (FFP) paradigm ensures that modeling approaches are strategically aligned with specific scientific questions and contexts. FFP modeling provides a structured framework for selecting computational tools that directly address Key Questions of Interest (QOI) within a defined Context of Use (COU). This approach emphasizes that models must be appropriate for their intended application, with validation rigor proportional to the decision-making stakes [3].

A model is considered FFP when it properly defines the COU, ensures data quality, and completes appropriate verification, calibration, and validation. Conversely, models become non-FFP through oversimplification, insufficient data quality, unjustified complexity, or failure to properly define the COU [3]. For researchers in parameter estimation, adopting FFP principles means matching algorithmic complexity to the specific questions being investigated, whether in early discovery, preclinical testing, clinical trials, regulatory submission, or post-market surveillance [3].

Core Concepts and Definitions

Key Questions of Interest (QOI): These are the specific scientific or clinical questions that a model aims to address. In parameter estimation research, QOIs might include identifying optimal dosing strategies, predicting patient population responses, or understanding compound behavior under specific physiological conditions [3].

Context of Use (COU): The COU explicitly defines how the model will be applied, including the specific conditions, populations, and decision points it will inform. This encompasses the intended application within the drug development pipeline or research workflow [3] [6].

Fit-for-Purpose (FFP): This principle ensures that the selected modeling methodology, its implementation, and validation level are appropriate for the specific QOI and COU. The FFP approach balances scientific rigor with practical considerations, avoiding both oversimplification and unnecessary complexity [3].

Troubleshooting Guides and FAQs

FAQ 1: How do I determine if my model is truly "fit-for-purpose"?

Answer: A truly FFP model must meet several criteria. First, it must be precisely aligned with your QOI and have a clearly defined COU. Second, the model must undergo appropriate verification and validation for its intended use. Third, it should utilize data of sufficient quality and quantity. Finally, the model's complexity should be justified—neither oversimplified to the point of being inaccurate nor unnecessarily complex [3].

Troubleshooting Guide:

- Problem: Model predictions consistently deviate from experimental observations.

- Potential Cause: Model may not be FFP due to oversimplification of biological processes.

- Solution: Re-evaluate QOI and COU. Consider incorporating additional mechanistic elements or switching to a more appropriate modeling framework.

- Problem: Model performs well on training data but poorly on validation data.

- Potential Cause: Overfitting or insufficient model validation for the COU.

- Solution: Implement cross-validation, reduce model complexity if necessary, or collect additional validation data specific to the COU.
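
As an illustration of the cross-validation step, the sketch below builds k-fold splits and reports the average held-out error. The helper names (`k_fold_indices`, `cv_mse`, `fit_line`, `predict_line`) and the straight-line model are assumptions for the example; any model exposing a fit and a predict function could be substituted.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, val in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val

def cv_mse(xs, ys, fit, predict, k=5):
    """Average held-out mean squared error across the k folds."""
    fold_errors = []
    for train, val in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in val]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k

def fit_line(xs, ys):
    """Ordinary least-squares straight line; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope

def predict_line(model, x):
    intercept, slope = model
    return intercept + slope * x

xs = [float(i) for i in range(20)]
ys = [2.0 + 0.5 * x for x in xs]  # noise-free, so held-out error is near zero
score = cv_mse(xs, ys, fit_line, predict_line)
print(f"5-fold CV mean squared error: {score:.3e}")
```

A large gap between training error and this held-out error is the practical signature of the overfitting described above.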

FAQ 2: What are the most common reasons for model rejection in regulatory submissions?

Answer: Regulatory agencies may reject models that lack a clearly defined COU, have insufficient validation for the intended use, or fail to demonstrate clinical relevance. Specifically for digital endpoints, regulatory feedback has emphasized challenges in interpreting clinical significance when the connection between model outputs and meaningful patient benefits isn't established [6]. One case study involving a novel digital endpoint for Alzheimer's disease received regulatory feedback that although the instrument was sensitive for detecting cognitive changes, the clinical significance of intervention effects was unclear [6].

Troubleshooting Guide:

- Problem: Regulatory questions about clinical meaningfulness of model endpoints.

- Potential Cause: Insufficient connection established between model output and patient-centric outcomes.

- Solution: Conduct additional studies to demonstrate clinical relevance, engage with patient groups to establish meaningfulness, and seek early regulatory consultation.

FAQ 3: How does the "fit-for-purpose" requirement change across drug development stages?

Answer: The FFP requirements evolve significantly throughout the drug development lifecycle. Early discovery stages may utilize simpler models with lower validation requirements, while models supporting regulatory decisions or label claims require the highest level of validation evidence [3] [6].

Table: Evolution of FFP Requirements Across Development Stages

| Development Stage | Typical QOIs | FFP Validation Level | Common Methodologies |

|---|---|---|---|

| Discovery | Target identification, compound optimization | Low to Moderate | QSAR, AI/ML approaches [3] |

| Preclinical | FIH dose prediction, toxicity assessment | Moderate | PBPK, QSP, semi-mechanistic PK/PD [3] |

| Clinical Trials | Dose optimization, patient stratification | Moderate to High | PPK/ER, clinical trial simulation [3] |

| Regulatory Submission | Efficacy confirmation, safety assessment | High | Model-based meta-analysis, virtual population simulation [3] |

| Post-Market | Label updates, population expansion | High | Bayesian inference, adaptive designs [3] |

FAQ 4: What are the key considerations when selecting between different parameter estimation algorithms?

Answer: Algorithm selection should be guided by multiple factors including data structure (sparse vs. rich), model complexity, computational resources, and regulatory acceptance. Bayesian methods are particularly valuable for parameter estimation in complex biological systems with sparse data, as they naturally incorporate prior knowledge and quantify uncertainty [7].

Troubleshooting Guide:

- Problem: Parameter estimates with unacceptably wide confidence intervals.

- Potential Cause: Insufficient data to inform parameters, or structural model identifiability issues.

- Solution: Consider Bayesian approaches with informative priors, redesign experiments to collect more informative data, or simplify model structure.

- Problem: Algorithm convergence failures or excessively long computation times.

- Potential Cause: Model too complex for available data, or inappropriate algorithm selection.

- Solution: Simplify model structure, utilize more efficient optimization algorithms, or increase computational resources.
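
The effect of an informative prior on interval width can be shown with the simplest conjugate case, a normal mean with known observation standard deviation. The observations, the assumed `sigma`, and both priors below are hypothetical; real applications would typically involve hierarchical models fitted by sampling.

```python
import math

def normal_posterior(prior_mean, prior_sd, data, sigma):
    """Posterior (mean, sd) for a normal mean with known observation SD."""
    n = len(data)
    prior_prec = 1.0 / prior_sd ** 2
    data_prec = n / sigma ** 2
    post_var = 1.0 / (prior_prec + data_prec)
    data_mean = sum(data) / n
    post_mean = post_var * (prior_prec * prior_mean + data_prec * data_mean)
    return post_mean, math.sqrt(post_var)

# Hypothetical log-clearance observations from three subjects
log_cl = [1.9, 2.3, 2.1]
sigma = 0.4

vague = normal_posterior(0.0, 10.0, log_cl, sigma)
informative = normal_posterior(2.0, 0.2, log_cl, sigma)
for label, (m, s) in (("vague prior", vague), ("informative prior", informative)):
    print(f"{label:18s} 95% interval: {m - 1.96 * s:.2f} to {m + 1.96 * s:.2f}")
```

The informative prior narrows the interval because prior precision and data precision add; the price is that the result is only as trustworthy as the justification for that prior.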

FAQ 5: How can I assess and mitigate risks associated with model-informed decisions?

Answer: Implement a comprehensive model risk assessment framework that evaluates the impact of model uncertainty on decision-making. This includes sensitivity analysis, uncertainty quantification, and scenario testing. The higher the stakes of the decision being informed, the more robust the risk assessment should be [3].

Troubleshooting Guide:

- Problem: Stakeholder reluctance to accept model-informed decisions.

- Potential Cause: Insufficient communication of model limitations and uncertainties.

- Solution: Develop clear visualizations of uncertainty, implement decision-theoretic frameworks that explicitly incorporate risk, and conduct prospective validation studies.

Methodological Framework and Workflows

The following diagram illustrates the decision process for implementing fit-for-purpose modeling in research and development:

Research Reagent Solutions: Essential Methodologies for Parameter Estimation

Table: Key Methodologies for Parameter Estimation in Biological Modeling

| Methodology | Primary Function | Typical Applications | Regulatory Consideration |

|---|---|---|---|

| Bayesian Inference [7] | Integrates prior knowledge with observed data using probabilistic frameworks | Parameter estimation from sparse data, uncertainty quantification | Well-suited for formal regulatory submissions when priors are well-justified |

| Population PK/PD [3] | Characterizes variability in drug exposure and response across individuals | Dose optimization, covariate effect identification | Established regulatory acceptance with standardized practices |

| PBPK Modeling [3] | Mechanistic modeling of drug disposition based on physiology | Drug-drug interaction prediction, special population dosing | Increasing regulatory acceptance for specific COUs |

| QSP Modeling [3] | Integrates systems biology with pharmacology to simulate drug effects | Target validation, biomarker strategy, combination therapy | Emerging regulatory pathways, early engagement recommended |

| AI/ML Approaches [3] | Pattern recognition and prediction from large, complex datasets | Biomarker discovery, patient stratification, lead optimization | Evolving regulatory landscape, requires rigorous validation |

Implementation Protocols for Common Scenarios

Protocol 1: Bayesian Parameter Estimation for Sparse Data

Background: Appropriate for parameter estimation when dealing with limited observational data, such as in HIV epidemic modeling or rare disease applications [7].

Procedure:

- Define hierarchical model structure incorporating prior knowledge

- Specify appropriate probability distributions for parameters and observables

- Implement Markov Chain Monte Carlo (MCMC) sampling or variational inference

- Conduct convergence diagnostics (Gelman-Rubin statistic, trace plots)

- Validate model using posterior predictive checks

- Quantify uncertainty in parameter estimates and model predictions

Validation: Use cross-validation techniques and compare posterior predictions to held-out data.
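
The sampling and convergence-diagnostic steps can be sketched with a random-walk Metropolis sampler and the Gelman-Rubin statistic. This is a toy example under stated assumptions: a single scalar parameter, a normal likelihood with known unit variance, a wide normal prior, and hypothetical data. It is not the hierarchical model of [7].

```python
import math
import random

def log_posterior(theta, data, sigma=1.0, prior_mean=0.0, prior_sd=10.0):
    """Unnormalized log posterior for a normal mean with a normal prior."""
    log_prior = -0.5 * ((theta - prior_mean) / prior_sd) ** 2
    log_lik = -0.5 * sum(((y - theta) / sigma) ** 2 for y in data)
    return log_prior + log_lik

def metropolis(data, start, n_steps, step_sd, rng):
    """Random-walk Metropolis sampler for one scalar parameter."""
    theta, current = start, log_posterior(start, data)
    chain = []
    for _ in range(n_steps):
        proposal = theta + rng.gauss(0.0, step_sd)
        candidate = log_posterior(proposal, data)
        # Accept with probability min(1, posterior ratio)
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        chain.append(theta)
    return chain

def gelman_rubin(chains):
    """Potential scale reduction factor (R-hat) for equal-length chains."""
    m, n = len(chains), len(chains[0])
    means = [sum(c) / n for c in chains]
    grand = sum(means) / m
    between = n / (m - 1) * sum((mu - grand) ** 2 for mu in means)
    within = sum(sum((x - mu) ** 2 for x in c) / (n - 1)
                 for c, mu in zip(chains, means)) / m
    pooled = (n - 1) / n * within + between / n
    return math.sqrt(pooled / within)

rng = random.Random(42)
data = [4.8, 5.3, 5.1, 4.6, 5.4, 5.0, 4.9, 5.2]  # hypothetical observations
chains = []
for start in (-5.0, 0.0, 5.0, 10.0):  # overdispersed starting points
    chain = metropolis(data, start, n_steps=4000, step_sd=0.8, rng=rng)
    chains.append(chain[1000:])  # discard burn-in
r_hat = gelman_rubin(chains)
posterior_mean = sum(map(sum, chains)) / sum(map(len, chains))
print(f"R-hat = {r_hat:.3f}, posterior mean = {posterior_mean:.3f}")
```

An R-hat close to 1 indicates that chains started from different points have mixed; values well above 1 suggest running longer or reparameterizing before interpreting the posterior.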

Protocol 2: PBPK Model Development for First-in-Human Dose Prediction

Background: Mechanistic modeling approach used to predict human pharmacokinetics from preclinical data [3].

Procedure:

- Collate physicochemical and pharmacokinetic properties of compound

- Develop system parameters for relevant physiology

- Incorporate in vitro-in vivo extrapolation (IVIVE) of clearance mechanisms

- Verify model using preclinical species data

- Predict human PK and determine safe starting dose

- Conduct sensitivity analysis to identify critical parameters

Validation: Compare predictions to observed clinical data as it becomes available.
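
The sensitivity-analysis step can be illustrated on a much simpler stand-in than a full PBPK model. The sketch below assumes a one-compartment intravenous bolus model and computes normalized (log-log) sensitivities by central differences; the parameter values and units are hypothetical.

```python
import math

def pk_metrics(params):
    """Exposure metrics for a one-compartment IV bolus model."""
    dose, cl, v = params["dose"], params["cl"], params["v"]
    return {
        "auc": dose / cl,                   # area under the curve
        "cmax": dose / v,                   # peak concentration at t = 0
        "half_life": math.log(2) * v / cl,  # elimination half-life
    }

def normalized_sensitivity(params, name, metric, rel_step=1e-4):
    """Central-difference estimate of d ln(metric) / d ln(parameter)."""
    up, down = dict(params), dict(params)
    up[name] = params[name] * (1 + rel_step)
    down[name] = params[name] * (1 - rel_step)
    delta = pk_metrics(up)[metric] - pk_metrics(down)[metric]
    return delta / (2 * rel_step * pk_metrics(params)[metric])

# Hypothetical values: dose in mg, clearance in L/h, volume in L
params = {"dose": 100.0, "cl": 5.0, "v": 50.0}
for metric in ("auc", "cmax", "half_life"):
    row = {p: round(normalized_sensitivity(params, p, metric), 3)
           for p in ("cl", "v")}
    print(metric, row)
```

A normalized sensitivity near 1 or -1 means a 1% change in the parameter moves the metric by about 1%, which flags the parameter as critical for that output; a value near 0 means the output is insensitive to it.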

Protocol 3: Exposure-Response Analysis for Dose Optimization

Background: Population modeling approach to characterize relationship between drug exposure and efficacy/safety outcomes [3].

Procedure:

- Develop population pharmacokinetic model to describe exposure variability

- Identify significant covariates influencing drug exposure

- Develop exposure-response models for primary efficacy and safety endpoints

- Simulate alternative dosing regimens and their predicted outcomes

- Identify optimal dosing strategy balancing efficacy and safety

- Quantify uncertainty in recommended dosing

Validation: Use visual predictive checks and bootstrap methods to evaluate model performance.
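
The regimen-simulation step can be sketched with an average steady-state concentration and an Emax relationship. Everything numeric here is hypothetical, including the use of the same Emax form for a safety score; a real analysis would simulate from the fitted population model with variability and uncertainty.

```python
def steady_state_average(dose, interval_h, clearance):
    """Average steady-state concentration for repeated dosing (F = 1 assumed)."""
    return dose / (clearance * interval_h)

def emax_effect(conc, emax, ec50):
    """Simple Emax exposure-response relationship."""
    return emax * conc / (ec50 + conc)

# Hypothetical parameters (L/h, % effect, mg/L)
CL, EMAX, EC50 = 5.0, 100.0, 2.0
TOX_EMAX, TOX_EC50 = 60.0, 8.0  # same functional form for a safety score

regimens = {
    "50 mg q12h": (50, 12),
    "100 mg q12h": (100, 12),
    "200 mg q12h": (200, 12),
    "200 mg q24h": (200, 24),
}

results = {}
for label, (dose, tau) in regimens.items():
    c_avg = steady_state_average(dose, tau, CL)
    efficacy = emax_effect(c_avg, EMAX, EC50)
    toxicity = emax_effect(c_avg, TOX_EMAX, TOX_EC50)
    results[label] = (c_avg, efficacy, toxicity)
    print(f"{label:12s} Cavg={c_avg:5.2f} mg/L  "
          f"efficacy={efficacy:5.1f}  toxicity={toxicity:5.1f}")
```

Because this sketch uses only the average concentration, regimens with the same daily dose look identical; distinguishing them would require simulating peak and trough concentrations as well.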

Frequently Asked Questions (FAQs)

FAQ 1: What are the most common signs that my gradient-based optimization is failing?

You may observe several clear indicators. The loss function converges too quickly to a poor solution with high error, showing little to no improvement over many epochs [8]. The training loss becomes unstable, oscillating erratically or even diverging, which often signals exploding gradients [9]. For recurrent neural networks specifically, a key sign is the model's inability to capture long-term dependencies in sequence data [9].

FAQ 2: When should I consider using an evolutionary algorithm over a gradient-based method?

Evolutionary algorithms are particularly advantageous in specific scenarios. They excel when optimizing non-convex objective functions commonly encountered in drug discovery and parameter estimation, where gradient-based methods often get trapped in local optima [10]. They are also highly effective when the objective function lacks differentiability, when dealing with discrete parameter spaces (like molecular structures), or when you need to perform global optimization without relying on derivative information [11] [12].

FAQ 3: How can I improve the convergence speed of my Genetic Algorithm?

Recent research demonstrates several effective approaches. The Gradient Genetic Algorithm incorporates gradient information from a differentiable objective function to guide the search direction, achieving up to a 25% improvement in Top-10 scores compared to vanilla genetic algorithms [11]. For Particle Swarm Optimization (PSO), using adaptive parameter tuning (APT) can systematically adjust parameters during the optimization process, significantly enhancing convergence rates [12].

FAQ 4: How do I prioritize which algorithm error to troubleshoot first?

A structured approach to prioritization is recommended. First, assess the impact of each error, focusing immediately on those causing system-wide failures or data corruption [13]. Next, consider error frequency, as issues that occur often and disrupt normal workflow should take precedence [13]. Finally, analyze dependencies, prioritizing errors in core components that other parts of your algorithm rely on, as fixing these may resolve multiple issues simultaneously [13].

Troubleshooting Guides

Issue 1: Vanishing or Exploding Gradients in Deep Neural Networks

Problem Description: During backpropagation, gradients become extremely small (vanishing) or excessively large (exploding), leading to slow learning in early layers or unstable training dynamics that prevent convergence [9].

Diagnosis Steps:

- Monitor Gradient Norms: Track the magnitudes (L2-norm) of gradients for each layer during training. A sharp decrease in early layers indicates vanishing gradients, while a sharp increase signals exploding gradients.

- Analyze Weight Updates: Check if weight updates are becoming infinitesimally small or disproportionately large.

- Observe Loss Behavior: A loss that stagnates for extended periods may indicate vanishing gradients. A loss that spikes or becomes NaN (Not a Number) often indicates exploding gradients [8].

Resolution Methods:

- Use Alternative Activation Functions: Replace saturating functions like Sigmoid or Tanh with non-saturating ones like ReLU, Leaky ReLU, or ELU to prevent gradients from shrinking [9].

- Apply Batch Normalization: Normalize the inputs to each layer to maintain zero mean and unit variance, which stabilizes and accelerates training [9].

- Employ Gradient Clipping: For exploding gradients, cap the gradients at a predefined threshold during backpropagation to prevent unstable updates [9].

- Use Proper Weight Initialization: Utilize initialization schemes (e.g., He, Xavier) that are designed to keep the scale of gradients consistent across layers [9].

Prevention Strategies:

- Implement a structured weight initialization strategy from the start.

- Incorporate Batch Normalization layers into your model architecture by default for deep networks.

- Continuously monitor gradient statistics throughout the training process.
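
Gradient-norm monitoring and clipping, as described in the diagnosis and resolution steps above, reduce to a few lines of arithmetic. The sketch below is framework-free and uses hypothetical per-layer gradient values; deep learning libraries provide their own utilities for the same operation.

```python
import math

def layer_norms(grads):
    """L2 norm of each layer's gradient (each layer is a list of floats)."""
    return [math.sqrt(sum(g * g for g in layer)) for layer in grads]

def global_norm(grads):
    """L2 norm taken across all layers' gradients together."""
    return math.sqrt(sum(g * g for layer in grads for g in layer))

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients so their global L2 norm is at most max_norm."""
    norm = global_norm(grads)
    if norm <= max_norm:
        return grads  # already within the threshold, leave untouched
    scale = max_norm / norm
    return [[g * scale for g in layer] for layer in grads]

# Hypothetical per-layer gradients; the global norm is 13
grads = [[3.0, 4.0], [12.0]]
clipped = clip_by_global_norm(grads, max_norm=1.0)
print("per-layer norms before:", layer_norms(grads))
print("global norm after clipping:", round(global_norm(clipped), 6))
```

Scaling every layer by the same factor preserves the direction of the update and only limits its size, which is why clipping stabilizes training without changing which way the parameters move.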

Issue 2: Poor Convergence in Evolutionary Algorithms

Problem Description: The algorithm fails to find a satisfactory solution, gets stuck in a local optimum, or converges unacceptably slowly.

Diagnosis Steps:

- Check Diversity: Monitor the population diversity. A rapid loss of diversity suggests the algorithm is converging prematurely to a suboptimal point.

- Analyze Parameter Settings: Evaluate if the algorithm's parameters (e.g., mutation rate, crossover rate, selection pressure) are appropriate for the problem landscape.

- Profile Computational Cost: Determine if the algorithm is hindered by excessive computational overhead per iteration [10].

Resolution Methods:

- Hybridize with Gradient Information: For problems where a differentiable objective function can be defined, incorporate gradient guidance to direct the evolutionary search, as seen in the Gradient Genetic Algorithm [11].

- Implement Adaptive Parameter Tuning: Use methods like Adaptive Parameter Tuning (APT) or Hierarchically Self-Adaptive PSO (HSAPSO) to dynamically adjust algorithm parameters during a run, improving performance and convergence [12] [10].

- Consider a Two-Stage Approach: For complex parameter estimation, algorithms like the Two-stage Differential Evolution (TDE) can be highly effective. The first stage uses a mutation strategy with historical solutions for broad exploration, while the second stage uses a strategy with inferior solutions for refined exploitation, balancing diversity and convergence speed [14].

Prevention Strategies:

- Conduct preliminary experiments to understand the problem landscape and select appropriate algorithm parameters.

- Design experiments with multiple random seeds to account for the stochastic nature of evolutionary algorithms.

- Consider using advanced, self-adaptive algorithms from the outset for complex optimization tasks.
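
For reference, a baseline evolutionary optimizer for SSE-based parameter estimation can be written compactly. The sketch below is a plain DE/rand/1/bin differential evolution, not the two-stage TDE of [14] or HSAPSO of [10]; the mono-exponential model, bounds, and control settings (`f=0.7`, `cr=0.9`) are assumptions, and the data are synthetic and noise-free.

```python
import math
import random

def sse(params, times, observed):
    """Sum of squared errors for the model y = a * exp(-k * t)."""
    a, k = params
    return sum((a * math.exp(-k * t) - y) ** 2 for t, y in zip(times, observed))

def differential_evolution(objective, bounds, pop_size=30, generations=200,
                           f=0.7, cr=0.9, seed=1):
    """DE/rand/1/bin with bound clipping; returns (best_vector, best_value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [objective(p) for p in pop]
    for _ in range(generations):
        for i in range(pop_size):
            others = [j for j in range(pop_size) if j != i]
            a, b, c = rng.sample(others, 3)
            forced = rng.randrange(dim)  # guarantees at least one mutated gene
            trial = []
            for d, (lo, hi) in enumerate(bounds):
                if d == forced or rng.random() < cr:
                    value = pop[a][d] + f * (pop[b][d] - pop[c][d])
                    value = min(max(value, lo), hi)  # keep within bounds
                else:
                    value = pop[i][d]
                trial.append(value)
            trial_score = objective(trial)
            if trial_score <= scores[i]:  # greedy one-to-one selection
                pop[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]

times = [0.5, 1, 2, 4, 8, 12]
observed = [10.0 * math.exp(-0.3 * t) for t in times]  # true a=10, k=0.3
best, best_sse = differential_evolution(
    lambda p: sse(p, times, observed),
    bounds=[(0.0, 20.0), (0.0, 2.0)],
)
print(f"a={best[0]:.4f}, k={best[1]:.4f}, SSE={best_sse:.2e}")
```

Repeating the run with several values of `seed` is a cheap way to follow the multiple-seed advice above and to see how much the result depends on the random initial population.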

Issue 3: Algorithm Selection for Parameter Estimation

Problem Description: Uncertainty in choosing the most suitable optimization algorithm for a specific parameter estimation problem in research, leading to suboptimal results or excessive computational cost.

Diagnosis Steps:

- Define Problem Characteristics: Determine if the problem is continuous or discrete, convex or non-convex, differentiable or non-differentiable.

- Identify Constraints: Clarify the nature of the constraints (e.g., linear, non-linear, box constraints).

- Assess Computational Budget: Evaluate the available computational resources and the cost of a single function evaluation.

Resolution Methods:

- Leverage Problem Structure: If the problem is differentiable and convex, gradient-based methods (e.g., SGD, Adam) are typically efficient. For non-convex, non-differentiable, or discrete problems, evolutionary methods (e.g., GA, DE, PSO) are often more robust [12] [15].

- Consult Performance Tables: Refer to comparative studies and performance tables (see Table 1 below) to understand the strengths and weaknesses of different algorithms on benchmark problems.

- Start Simple, Then Scale: Begin with a well-understood, simple algorithm to establish a baseline performance. Then, progress to more sophisticated methods like self-adaptive PSO [10] or TDE [14] if needed.

Prevention Strategies:

- Maintain a toolkit of different optimization algorithms.

- Stay updated on recent algorithmic advancements and their proven application domains.
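
As a concrete instance of the "start simple" advice, the sketch below establishes a gradient-descent baseline on a convex least-squares problem. The data, learning rate, and step count are hypothetical; on a convex, differentiable objective like this one, a plain gradient method is usually sufficient and a more elaborate optimizer is not needed.

```python
def gradient_descent(grad, start, learning_rate=0.1, n_steps=500):
    """Fixed-step gradient descent; returns the final parameter vector."""
    x = list(start)
    for _ in range(n_steps):
        g = grad(x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x

# Convex problem: fit y = b0 + b1 * t by minimizing mean squared error
t = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]  # generated exactly from y = 1 + 2 t

def mse_gradient(beta):
    """Gradient of the mean squared error with respect to (b0, b1)."""
    residuals = [beta[0] + beta[1] * ti - yi for ti, yi in zip(t, y)]
    n = len(t)
    return [2 * sum(residuals) / n,
            2 * sum(r * ti for r, ti in zip(residuals, t)) / n]

b0, b1 = gradient_descent(mse_gradient, start=[0.0, 0.0])
print(f"b0={b0:.6f}, b1={b1:.6f}")
```

If a baseline like this already reaches an acceptable fit, its result also serves as a reference point for judging whether a self-adaptive or hybrid method is worth the added computational cost.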

Performance Data Tables

Table 1: Quantitative Performance of Advanced Optimization Algorithms

This table summarizes the reported performance gains of several advanced algorithms over their traditional counterparts as cited in recent literature.

| Algorithm | Key Innovation | Benchmark/Application | Reported Improvement | Citation |

|---|---|---|---|---|

| Gradient GA | Incorporates gradient guidance into genetic algorithms | Molecular design benchmarks | Up to 25% improvement in Top-10 score vs. vanilla GA | [11] |

| TDE (Two-stage DE) | Novel mutation strategy using historical & inferior solutions | PEMFC parameter estimation (SSE minimization) | 41% reduction in SSE; 98% shorter runtime (0.23 s vs. 11.95 s) | [14] |

| HSAPSO-SAE | Hierarchically Self-Adaptive PSO for autoencoder tuning | Drug classification (DrugBank, Swiss-Prot) | 95.5% accuracy; computational cost of 0.010s per sample | [10] |

Table 2: Troubleshooting Guide for Common Optimization Problems

This table provides a quick-reference guide for identifying and addressing common issues across different algorithm types.

| Problem | Likely Causes | Recommended Solutions |

|---|---|---|

| Vanishing Gradients | Saturating activation functions (Sigmoid/Tanh), poor weight initialization, very deep networks [9] | Use ReLU/Leaky ReLU, Batch Normalization, proper weight initialization [9] |

| Exploding Gradients | Large weights, high learning rate, unscaled input data [9] | Gradient clipping, lower learning rate, weight regularization, input normalization [9] |

| Premature Convergence (EA) | Loss of population diversity, excessive selection pressure, incorrect mutation rate [12] | Adaptive parameter tuning [12], hybrid approaches [11], fitness sharing |

| Slow Convergence (EA) | Poor exploration/exploitation balance, inadequate parameter settings [11] [12] | Incorporate gradient guidance [11], use adaptive parameter tuning [12] |

Experimental Protocols

Protocol 1: Demonstrating the Vanishing/Exploding Gradient Problem

Objective: To empirically observe and compare the effects of activation functions and initialization on gradient stability in a Deep Neural Network (DNN).

Materials:

- Dataset: A synthetic binary classification dataset (e.g., from sklearn.datasets.make_classification or make_moons).

- Libraries: Python with TensorFlow/Keras or PyTorch, NumPy, Matplotlib.

Methodology:

- Model Architecture: Construct two DNNs with identical, sufficiently deep architectures (e.g., 10 layers with 10-50 neurons each).

- Variable Manipulation:

- For vanishing gradients, train one model with sigmoid activation and another with ReLU activation, using standard initialization (e.g., Glorot) [9].

- For exploding gradients, train a deep model (e.g., 50 layers) with a very high learning rate (e.g., 1.0) and large initial weights (e.g., stddev=3.0), comparing it to a model trained with a low learning rate and small initial weights [9].

- Data Collection:

- Record the training loss over epochs for both models.

- For vanishing gradients, approximate the average gradient magnitude by comparing weight changes before and after a training epoch [9].

- Analysis:

- Plot the loss curves for both models. The model with sigmoid activation will typically show slow or stalled convergence, while the ReLU model will converge faster [9].

- For the exploding gradient test, the model with high learning rate and large weights will show an unstable, spiking loss curve.
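The vanishing-gradient part of this protocol can be previewed without a deep learning framework. The following is a minimal NumPy sketch, not the protocol's full training experiment: it runs one forward and backward pass through a 10-layer network with Glorot-uniform weights and reports the gradient norm reaching the first layer. The function name, the toy loss (mean of the final activations), and the layer sizes are assumptions made for illustration.

```python
import numpy as np

def first_layer_grad_norm(activation, depth=10, width=32, seed=0):
    """Backpropagate through a deep MLP and return the gradient norm
    of the first layer's weights (a proxy for vanishing gradients)."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(64, width))
    limit = np.sqrt(6.0 / (width + width))           # Glorot uniform bound
    weights = [rng.uniform(-limit, limit, (width, width)) for _ in range(depth)]

    # Forward pass, caching each layer's input and pre-activation.
    inputs, pre_acts = [], []
    h = x
    for W in weights:
        inputs.append(h)
        z = h @ W
        pre_acts.append(z)
        h = 1.0 / (1.0 + np.exp(-z)) if activation == "sigmoid" else np.maximum(z, 0.0)

    # Backward pass for the toy loss L = mean(final activations).
    grad_h = np.full_like(h, 1.0 / h.size)
    grad_W = None
    for W, h_in, z in zip(reversed(weights), reversed(inputs), reversed(pre_acts)):
        if activation == "sigmoid":
            s = 1.0 / (1.0 + np.exp(-z))
            grad_z = grad_h * s * (1.0 - s)           # sigmoid derivative <= 0.25
        else:
            grad_z = grad_h * (z > 0)                 # ReLU derivative is 0 or 1
        grad_W = h_in.T @ grad_z                      # gradient for this layer's weights
        grad_h = grad_z @ W.T                         # gradient passed to the layer below
    return float(np.linalg.norm(grad_W))              # last one computed = first layer

for name in ("sigmoid", "relu"):
    print(f"{name:8s} first-layer gradient norm: {first_layer_grad_norm(name):.3e}")
```

Because the sigmoid derivative never exceeds 0.25, the backpropagated signal shrinks at every layer, which is the mechanism behind the stalled loss curve described above.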

Protocol 2: Benchmarking an Evolutionary Algorithm for Parameter Estimation

Objective: To evaluate the performance of a Two-stage Differential Evolution (TDE) algorithm against a traditional DE variant for a parameter estimation task.

Materials:

- Problem: A parameter estimation problem with a known ground truth or a standard benchmark function (e.g., from CEC test suites). The Proton Exchange Membrane Fuel Cell (PEMFC) model with seven critical parameters is used in the source study [14].

- Metrics: Sum of Squared Errors (SSE) between estimated and true values, convergence time, and robustness (standard deviation of results over multiple runs) [14].

Methodology:

- Algorithm Setup: Implement or obtain code for the TDE algorithm and a baseline DE algorithm (e.g., HARD-DE) [14].

- Experimental Run: Conduct multiple independent runs of each algorithm on the selected problem, ensuring both use the same computational budget (number of function evaluations).

- Data Collection: For each run, record the final best solution, the SSE at convergence, and the runtime.

- Analysis:

- Compare the minimum, maximum, and average SSE achieved by both algorithms. TDE is reported to achieve a lower minimum SSE and a significantly improved maximum SSE, indicating superior robustness [14].

- Compare the average runtime. TDE has been shown to be significantly more efficient [14].

- Perform statistical tests (e.g., Wilcoxon signed-rank test) to confirm the significance of the performance differences.
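A benchmarking harness along these lines might look like the sketch below. TDE and HARD-DE are not available in SciPy, so two built-in differential evolution strategies ("rand1bin" and "best1bin") stand in for the algorithms under comparison, and a three-parameter exponential-decay fit stands in for the PEMFC model; both substitutions, along with all names and settings, are assumptions for illustration.

```python
import numpy as np
from scipy.optimize import differential_evolution
from scipy.stats import wilcoxon

rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 40)
true_params = np.array([5.0, 0.7, 1.0])              # amplitude, rate, offset
y_obs = true_params[0] * np.exp(-true_params[1] * t) + true_params[2]
y_obs = y_obs + rng.normal(scale=0.05, size=t.size)

def sse(params):
    """Sum of squared errors between model prediction and observations."""
    a, k, c = params
    return float(np.sum((a * np.exp(-k * t) + c - y_obs) ** 2))

bounds = [(0.0, 10.0), (0.0, 5.0), (0.0, 5.0)]

def benchmark(strategy, n_runs=10, maxiter=30, popsize=10):
    """Return the final SSE of each independent run for one DE strategy."""
    finals = []
    for run in range(n_runs):
        res = differential_evolution(
            sse, bounds, strategy=strategy, maxiter=maxiter, popsize=popsize,
            tol=0.0, polish=False, seed=run,          # equal budget, no local polish
        )
        finals.append(res.fun)
    return np.array(finals)

def summarize(finals):
    """Minimum, maximum, mean, and sample standard deviation of final SSE."""
    return {"min": float(finals.min()), "max": float(finals.max()),
            "mean": float(finals.mean()), "std": float(finals.std(ddof=1))}

results = {s: benchmark(s) for s in ("rand1bin", "best1bin")}
for name, finals in results.items():
    print(name, {k: round(v, 5) for k, v in summarize(finals).items()})

# Paired non-parametric comparison across runs that share a seed.
diff = results["rand1bin"] - results["best1bin"]
if np.any(diff != 0):
    stat, p_value = wilcoxon(results["rand1bin"], results["best1bin"])
    print(f"Wilcoxon signed-rank: statistic={stat:.3f}, p={p_value:.4f}")
```

Fixing `maxiter` and `popsize` and disabling the final polish step keeps the number of function evaluations equal across strategies, which is what makes the SSE comparison fair.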

Workflow and Algorithm Diagrams

Gradient GA High-Level Workflow

Troubleshooting Gradient Problems

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Computational Tools and Resources

| Item | Function/Description | Relevance to Optimization Research |

|---|---|---|

| Stacked Autoencoder (SAE) | A deep learning model used for unsupervised feature learning and dimensionality reduction. | Serves as a powerful feature extractor in hybrid frameworks like optSAE+HSAPSO for drug classification [10]. |

| Differentiable Objective Function | A parameterized function (e.g., via a neural network) whose gradients can be computed with respect to its inputs. | Enables the incorporation of gradient guidance into traditionally non-gradient algorithms like the Genetic Algorithm [11]. |

| Discrete Langevin Proposal | A method for enabling gradient-based guidance in discrete spaces. | Critical for applying gradient techniques to discrete optimization problems, such as molecular design [11]. |

| Hierarchically Self-Adaptive PSO (HSAPSO) | A variant of Particle Swarm Optimization that dynamically adjusts its own parameters at multiple levels during the search process. | Used for hyperparameter tuning of deep learning models, improving accuracy and computational efficiency in drug discovery [10]. |

| Two-stage Differential Evolution (TDE) | A DE variant that uses a novel dual mutation strategy to enhance exploration and exploitation. | Provides high accuracy, robustness, and computational efficiency for complex parameter estimation tasks, such as in fuel cell modeling [14]. |

| Particle Swarm Optimization (PSO) | A swarm intelligence algorithm that optimizes a problem by iteratively trying to improve a candidate solution. | A foundational evolutionary algorithm often used as a baseline and enhanced with methods like adaptive parameter tuning [12]. |

The Impact of Accurate Parameter Estimation on Reducing Late-Stage Attrition and Development Costs

Frequently Asked Questions

1. Why is parameter estimation so critical in early-stage drug discovery? In drug discovery, parameter estimation involves using computational and statistical methods to precisely determine key biological and chemical variables, such as binding affinities, kinetic rates, and toxicity thresholds. Accurate estimates are foundational for building predictive models of a drug candidate's behavior. Errors at this stage can lead to flawed predictions, causing promising candidates to be wrongly abandoned or, conversely, allowing ineffective or toxic compounds to progress. This wastes significant resources, as the cost of development increases dramatically at each subsequent phase [16] [17].

2. How can overfitting be diagnosed and corrected in machine learning models for parameter estimation? Overfitting occurs when a model learns the noise in the training data instead of the underlying relationship, harming its predictive power on new data. To troubleshoot this:

- Apply Resampling and Validation: Use techniques like k-fold cross-validation and hold back a portion of your data as a validation set to test the model's performance on unseen data [17].

- Use Regularization Methods: Apply regression methods like Ridge, LASSO, or elastic nets, which add penalties to parameters as model complexity increases, forcing the model to generalize [17].

- Implement Dropout: In deep neural networks, randomly remove units in the hidden layers during training to prevent the network from becoming too reliant on any single node [17].

- Simplify the Model: Check if your model structure is too complex for the amount of data available. Using a simpler model or reducing the model order can often prevent overfitting [18].
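Two of these remedies, k-fold cross-validation and ridge regularization, can be combined in a few lines. The sketch below uses only NumPy and synthetic data; the sample sizes, penalty values, and helper names are assumptions chosen so that the number of features is close to the number of samples, a setting in which unregularized least squares tends to overfit.

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Closed-form ridge solution; lam = 0 reduces to ordinary least squares."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

def kfold_mse(X, y, lam, k=5, seed=0):
    """Mean squared prediction error averaged over k held-out folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    errors = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        beta = fit_ridge(X[train_idx], y[train_idx], lam)
        errors.append(np.mean((X[test_idx] @ beta - y[test_idx]) ** 2))
    return float(np.mean(errors))

# Synthetic data: 40 samples, 30 features, only 3 of which carry signal.
rng = np.random.default_rng(1)
n_samples, n_features = 40, 30
X = rng.normal(size=(n_samples, n_features))
beta_true = np.zeros(n_features)
beta_true[:3] = [1.5, -1.0, 0.5]
y = X @ beta_true + rng.normal(scale=1.0, size=n_samples)

train_mse_ols = float(np.mean((X @ fit_ridge(X, y, 0.0) - y) ** 2))
print(f"OLS training MSE = {train_mse_ols:.3f}")
for lam in (0.0, 1.0, 10.0):
    print(f"lambda = {lam:5.1f}   5-fold CV MSE = {kfold_mse(X, y, lam):.3f}")
```

A large gap between the training error and the cross-validated error at `lam = 0` is the signature of overfitting; scanning a few penalty values shows whether regularization narrows it.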

3. What are common data-related issues that hinder parameter estimation, and how can they be resolved? Data deficiencies are a primary source of estimation problems.

- Issue: Insufficient System Excitation: Simple inputs (e.g., a step input) may not adequately provoke the full range of system dynamics needed to estimate parameters accurately [18].

- Solution: Design experiments with input signals that perturb the system across a wider range of conditions to gather more informative data [18].

- Issue: Poor Data Quality: Data may contain drift, outliers, missing samples, or offsets [18].

- Solution: Preprocess estimation data to correct for these deficiencies before fitting models [18].

- Issue: Low-Volume or Low-Quality Training Data: The predictive power of any machine learning approach is highly dependent on high volumes of high-quality data [17].

- Solution: Invest in generating systematic, comprehensive, and high-dimensional data sets. The practice of ML is said to consist of at least 80% data processing and cleaning [17].
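The data-quality fixes listed above (missing samples, offsets, drift, outliers) can be chained into a small preprocessing step. The following NumPy sketch is one possible arrangement under simple assumptions: gaps are short enough for linear interpolation, drift is approximately linear, and outliers are isolated spikes. The function name and threshold are illustrative.

```python
import numpy as np

def clean_signal(t, y, z_thresh=3.5):
    """Fill gaps, remove linear drift plus offset, and replace outliers."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float).copy()

    # 1. Missing samples: linear interpolation over NaNs.
    missing = np.isnan(y)
    y[missing] = np.interp(t[missing], t[~missing], y[~missing])

    # 2. Drift and offset: subtract a fitted straight line.
    slope, intercept = np.polyfit(t, y, deg=1)
    y = y - (slope * t + intercept)

    # 3. Outliers: flag by robust z-score (median absolute deviation)
    #    and replace with the median of the detrended signal.
    med = np.median(y)
    mad = np.median(np.abs(y - med))
    if mad > 0:
        robust_z = 0.6745 * (y - med) / mad
        y[np.abs(robust_z) > z_thresh] = med
    return y

# Synthetic record with drift, an offset, one gap, and one spike.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 200)
raw = np.sin(t) + 0.3 * t + 2.0 + rng.normal(scale=0.05, size=t.size)
raw[20] = np.nan
raw[50] = 25.0
cleaned = clean_signal(t, raw)
print(f"NaNs remaining: {int(np.isnan(cleaned).sum())}, max |value|: {np.abs(cleaned).max():.2f}")
```

Note that the straight-line fit is itself influenced by large outliers; for heavily contaminated data a robust trend estimate would be a safer first step.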

4. How should initial parameter guesses and model structures be selected? Poor initial choices can cause algorithms to converge to a local optimum or fail entirely.

- Initial Guesses: Base initial parameter guesses on prior knowledge of the system or from earlier, simpler experiments. When confident in your initial guesses, specify a smaller initial parameter covariance to give them more importance during estimation [18].

- Model Structure: Start with the simplest model structure that can capture the system dynamics. AR and ARX model structures are good first candidates for linear models due to their algorithmic simplicity and lower sensitivity to initial guesses. Avoid unnecessarily complex models [18].
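The role of the initial guess and its covariance can be made concrete with a recursive least squares (RLS) estimator for a first-order ARX model. This is a minimal sketch on simulated data; the model order, the true parameter values, and the function name are assumptions for illustration.

```python
import numpy as np

def rls_arx(y, u, theta0, p0_scale, forgetting=1.0):
    """Recursive least squares for y[t] = a*y[t-1] + b*u[t-1] + e[t]."""
    theta = np.asarray(theta0, dtype=float).copy()
    P = p0_scale * np.eye(theta.size)                 # initial parameter covariance
    for t in range(1, len(y)):
        phi = np.array([y[t - 1], u[t - 1]])          # regressor vector
        gain = P @ phi / (forgetting + phi @ P @ phi)
        theta = theta + gain * (y[t] - phi @ theta)   # correct by prediction error
        P = (P - np.outer(gain, phi @ P)) / forgetting
    return theta

# Simulate a system with a = 0.8, b = 0.5 and a random (informative) input.
rng = np.random.default_rng(0)
n = 500
u = rng.normal(size=n)
y = np.zeros(n)
for t in range(1, n):
    y[t] = 0.8 * y[t - 1] + 0.5 * u[t - 1] + 0.05 * rng.normal()

# Vague prior: poor guess, large covariance, so the data dominate quickly.
theta_vague = rls_arx(y, u, theta0=[0.0, 0.0], p0_scale=100.0)
# Confident prior: good guess, small covariance, so early updates are small.
theta_confident = rls_arx(y, u, theta0=[0.75, 0.45], p0_scale=0.01)
print("vague prior    :", np.round(theta_vague, 3))
print("confident prior:", np.round(theta_confident, 3))
```

A small `p0_scale` makes the estimator trust `theta0` and move slowly; a large one lets the first few samples overwrite the guess. The random input also illustrates the excitation point made earlier: a constant input would not separate the two parameters as cleanly.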

Quantitative Impact of R&D Costs and Machine Learning

Table 1: Estimated R&D Cost per New Drug [16]

| Drug Type | Cost Range (2018 USD) | Key Notes |

|---|---|---|

| All New Drugs | $113 million - $6 billion+ | Broad range includes new molecular entities, reformulations, and new indications. |

| New Molecular Entities (NMEs) | $318 million - $2.8 billion | Narrower range for novel drugs; highlights high cost of innovative R&D. |

Table 2: Machine Learning Applications in Drug Discovery [17]

| Stage in Pipeline | ML Application | Potential Impact |

|---|---|---|

| Target Identification | Analyzing omics data for target-disease associations. | Provides stronger evidence for novel targets, reducing early scientific attrition. |

| Preclinical Research | Small-molecule compound design and optimization; bioactivity prediction. | Improves hit rates and reduces synthetic effort on poor candidates. |

| Clinical Trials | Identification of prognostic biomarkers; analysis of digital pathology data. | Enriches patient cohorts, predicts efficacy, and improves trial success probability. |

Experimental Protocols for Robust Parameter Estimation

Protocol 1: Building a Predictive Bioactivity Model using Machine Learning

Objective: To train a model that accurately predicts compound bioactivity to prioritize candidates for synthesis and testing.

- Data Curation: Collect a high-quality dataset of chemical compounds with associated bioactivity measurements (e.g., IC50, Ki). This is the most critical step.

- Feature Engineering: Represent each compound using numerical features (e.g., molecular descriptors, fingerprints).

- Model Selection and Training:

- Split data into training, validation, and test sets.

- Train a selected algorithm (e.g., Random Forest, Graph Neural Network) on the training set.

- Use the validation set to tune hyperparameters and apply techniques like dropout or regularization to avoid overfitting [17].

- Model Validation: Evaluate the final model's performance on the held-out test set using metrics such as AUC, precision-recall, and mean squared error [17].

Protocol 2: Tuning a Parameter Estimation Algorithm for a Specific Biological System

Objective: To tune an optimization algorithm's performance for estimating parameters in a complex, non-linear biological model.

- Problem Formulation: Define the objective function, often as the Root Mean Square Error (RMSE) between model predictions and experimental data [19].

- Algorithm Selection and Enhancement:

- Select a base metaheuristic algorithm (e.g., a nature-inspired optimizer).

- To address common issues like premature convergence, integrate enhancement strategies such as a memory mechanism (to preserve elite solutions), an evolutionary operator (to encourage diversity), and a stochastic local search (for intensive refinement) [20].

- Benchmarking: Compare the performance of the enhanced algorithm against standard algorithms on your specific dataset, using the minimized objective function value and convergence speed as key metrics [20].
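The problem-formulation step can be sketched as follows for a simple dose-response model. The Emax model, the synthetic observations, and the parameter bounds are assumptions chosen for illustration, and SciPy's differential evolution serves as a generic stand-in for the base metaheuristic; the enhancement strategies described in [20] are not implemented here.

```python
import numpy as np
from scipy.optimize import differential_evolution

def emax_model(dose, e0, emax, ec50):
    """Baseline effect plus a saturating dose-response term."""
    return e0 + emax * dose / (ec50 + dose)

# Synthetic observations from known (hypothetical) parameters plus noise.
rng = np.random.default_rng(42)
doses = np.array([0.0, 0.5, 1.0, 2.0, 5.0, 10.0, 20.0, 50.0, 100.0, 200.0])
true_params = (5.0, 100.0, 10.0)                      # e0, emax, ec50
observed = emax_model(doses, *true_params) + rng.normal(scale=1.0, size=doses.size)

def rmse(params):
    """Objective: root mean square error between prediction and data."""
    pred = emax_model(doses, *params)
    return float(np.sqrt(np.mean((pred - observed) ** 2)))

bounds = [(0.0, 20.0), (50.0, 200.0), (0.1, 100.0)]   # e0, emax, ec50
result = differential_evolution(rmse, bounds, seed=0, tol=1e-8)

print("estimated (e0, emax, ec50):", np.round(result.x, 2))
print(f"RMSE at optimum: {result.fun:.3f}, function evaluations: {result.nfev}")
```

The final RMSE and the evaluation count reported by the optimizer correspond to the two benchmarking metrics named in the protocol, so the same script can be rerun with a different optimizer for comparison.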

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Resources for Computational Drug Discovery

| Item | Function |

|---|---|

| High-Throughput Screening (HTS) Data | Provides large-scale experimental data on compound activity, used as a foundational dataset for training and validating ML models [17]. |

| Multi-Omics Datasets (Genomics, Proteomics, etc.) | Enables systems-level understanding of disease mechanisms and identification of novel drug targets [17]. |

| Graph Convolutional Network (GCN) | A type of deep neural network ideal for analyzing structured data like molecular graphs, directly from their structure without needing pre-defined fingerprints [17]. |

| Recursive Least Squares (RLS) Estimator | An online estimation algorithm useful for systems that are linear in their parameters; valued for its simplicity and ease of implementation [18]. |

Workflow and Impact Diagrams

Impact of Parameter Estimation

Parameter Estimation Workflow

Algorithmic Innovations: Applying Advanced Optimization to Drug Development Challenges

Troubleshooting Guides

Troubleshooting Guide 1: Handling Non-Identifiable PK/PD Models

Problem: The parameter estimation algorithm fails to converge, or results in highly uncertain parameter estimates for a parent drug and its metabolite.

Explanation: This often indicates an identifiability problem, where the available data is insufficient to uniquely estimate all parameters in the model [21]. The model may also be structurally non-identifiable, meaning that two or more parameters cannot be separated even with ideal data.

Solution:

- Simplify the Model: Reduce the number of compartments or parameters, especially for the metabolite kinetics, to match the information content of your data.

- Check Data Quality: Ensure your bioanalytical methods are properly validated and that the calibration curve accurately links instrument response to drug concentration [21].

- Reassess Study Design: Confirm that the sampling schedule captures key PK/PD events. For future studies, consider whether cassette dosing (administering multiple compounds simultaneously) is appropriate for your goals, though it can sometimes introduce unforeseen interactions [21].
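A quick numerical check for structural non-identifiability is to inspect the rank of the sensitivity matrix. The sketch below uses a deliberately simplified one-compartment expression, C(t) = (F · dose / V) · exp(-k · t), as a stand-in for a fuller parent-metabolite model; the parameter values and function name are hypothetical. With this form, bioavailability F and volume V enter only through their ratio, so their sensitivity columns are proportional.

```python
import numpy as np

def sensitivity_matrix(t, dose, F, V, k):
    """Analytic sensitivities of C(t) = (F * dose / V) * exp(-k * t)
    with respect to F, V, and k (one column per parameter)."""
    decay = np.exp(-k * t)
    dC_dF = dose / V * decay
    dC_dV = -F * dose / V**2 * decay
    dC_dk = -t * F * dose / V * decay
    return np.column_stack([dC_dF, dC_dV, dC_dk])

t = np.linspace(0.5, 24.0, 12)                        # sampling times (h)
S = sensitivity_matrix(t, dose=100.0, F=0.6, V=20.0, k=0.15)

rank = np.linalg.matrix_rank(S)
singular_values = np.linalg.svd(S, compute_uv=False)
print(f"parameters: {S.shape[1]}, rank of sensitivity matrix: {rank}")
print("singular values:", np.array2string(singular_values, precision=3))
```

A rank lower than the number of parameters means some combination of parameters cannot be estimated from these data, whatever the algorithm; reparameterizing (here, estimating V/F as a single quantity) or adding informative data is then the appropriate fix.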

Troubleshooting Guide 2: Optimization Algorithm Stuck in Local Minima

Problem: The machine learning optimization process converges, but the model fits poorly or is not biologically plausible. Small changes in initial parameter guesses lead to different results.

Explanation: Population PK/PD models create a complex, multi-dimensional parameter landscape. Conventional optimization methods like gradient descent can get trapped in a local minimum—a good but not the best solution—instead of finding the global minimum [22].

Solution:

- Use Metaheuristic Algorithms: Implement population-based algorithms such as Genetic Algorithms or Ant Colony Optimization, which are designed to explore a broader model space and are less prone to getting stuck in local minima [23].

- Run from Multiple Starting Points: Initialize your optimization algorithm from a wide range of different parameter values to survey the landscape.

- Apply Adaptive Optimizers: Use algorithms like Adam (Adaptive Moment Estimation) or RMSprop that adapt the learning rate for each parameter, which can help navigate challenging landscapes [24].
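The multi-start strategy is straightforward to sketch. The example below uses a one-dimensional toy objective with several local minima as a stand-in for a PK/PD loss surface; the objective, the grid of starting points, and the choice of BFGS as the local optimizer are all assumptions for illustration.

```python
import numpy as np
from scipy.optimize import minimize

def objective(x):
    """Toy multimodal objective standing in for a PK/PD loss surface."""
    return float(np.sin(3.0 * x[0]) + 0.1 * x[0] ** 2)

# Launch the same local optimizer from a spread of starting values.
starts = np.linspace(-5.0, 5.0, 21)
runs = [minimize(objective, x0=[s], method="BFGS") for s in starts]

best = min(runs, key=lambda r: r.fun)
distinct_minima = sorted({round(float(r.x[0]), 2) for r in runs})

print("distinct end points found:", distinct_minima)
print(f"best: x = {best.x[0]:.4f}, f = {best.fun:.4f}")
```

If different starting values end at clearly different solutions, that is direct evidence of multiple local minima; keeping the full list of end points, not just the best one, helps judge how rugged the landscape is.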

Troubleshooting Guide 3: Poor Out-of-Sample Predictive Performance

Problem: The AI/ML model fits the training data well but fails to accurately predict concentrations or responses for new dosing regimens or patient populations.

Explanation: Purely data-driven AI models (e.g., neural networks, tree-based models) may lack embedded mechanistic understanding of biology (e.g., absorption, distribution, elimination). They learn associations from data but cannot reliably extrapolate beyond the conditions represented in that data [23].

Solution:

- Adopt a Hybrid Approach: Combine mechanistic models (e.g., traditional PK/PD models defined by ordinary differential equations) with ML components. The mechanistic part provides biological structure, while ML identifies complex patterns [23].

- Prioritize Explainable AI (XAI): Use interpretable ML models or build "gray box" models that maintain transparency. This is crucial for both scientific understanding and regulatory acceptance [25].

- Increase Data Quality and Quantity: The core challenge is often limited access to large, high-quality, and reliable datasets for training. Focus on curating robust datasets that cover a wider range of expected conditions [25].

Troubleshooting Guide 4: Managing High Variability in Clinical Data

Problem: A population model cannot adequately capture the high degree of variability in patient responses, leading to poor fits for individual profiles.

Explanation: Understanding and predicting inter-individual variability is inherently difficult, especially when only a few samples are available per patient (sparse data) [25].

Solution:

- Leverage ML for Covariate Analysis: Use unsupervised and supervised ML methods to explore the data for unknown covariate effects and complex, non-linear relationships between patient factors (e.g., weight, genetics) and PK parameters [23].

- Define a Rich Model Space: When using ML-assisted modeling, define a broad model space that includes many potential covariate-parameter relationships and structural models for the algorithm to explore [22].

- Validate with Biological Plausibility: Always review the covariate relationships identified by ML for their biological and clinical plausibility. The role of the scientist is indispensable in this evaluation step [22].

Frequently Asked Questions (FAQs)

FAQ 1: When should I use a metaheuristic algorithm like a Genetic Algorithm over a traditional gradient-based method for PK/PD parameter estimation?

Answer: Use a Genetic Algorithm (GA) when dealing with complex, high-dimensional models where the parameter landscape is likely to have many local minima. GAs perform a global search by evaluating a population of models simultaneously, which gives them a better chance of finding a globally optimal solution compared to gradient-based methods that follow a single path [22] [23]. They are particularly useful for automated structural model selection.

FAQ 2: What are the most common causes of failure in AI/ML-driven PK/PD modeling, and how can I avoid them?

Answer: The most common causes are:

- Data Issues: Insufficient quantity or poor quality of training data. Solution: Invest in robust data generation and curation [25].

- Overfitting: The model learns noise instead of the underlying signal. Solution: Use regularization, cross-validation, and ensure the model is evaluated on a hold-out test set.

- Lack of Biological Plausibility: The model is a "black box" with no grounding in known physiology. Solution: Prefer hybrid models that combine mechanistic principles with ML pattern recognition [23].

FAQ 3: My ML model for predicting drug clearance works well in adults but fails in neonates. What is the likely issue?

Answer: This is a classic problem of extrapolation. Your model was trained on data from one population (adults) and is being applied to a physiologically distinct population (neonates) that was not well-represented in the training data [23]. To address this, use transfer learning techniques to adapt the model with neonatal data, or develop a hybrid PK/ML model that incorporates known physiological differences (e.g., organ maturation functions) as priors [23].

FAQ 4: How can I address the "black box" nature of complex ML models to gain regulatory acceptance for my PK/PD analysis?

Answer: Regulatory agencies emphasize model transparency, validation, and managing bias [25] [26].

- Use Explainable AI (XAI): Employ methods like SHAP (SHapley Additive exPlanations) to interpret the model's predictions.

- Adopt Hybrid Modeling: Ground your ML approach in established physiologically based pharmacokinetic (PBPK) or population PK models. This provides a mechanistic foundation that regulators are familiar with [25].

- Follow Good Machine Learning Practice (GMLP): Maintain rigorous documentation of your model development process, including data provenance, model selection criteria, and validation results [26].

Experimental Protocols

Detailed Protocol: ML-Assisted Population PK Model Development with a Genetic Algorithm

Objective: To automate the selection of a population pharmacokinetic model structure and identify influential covariates using a genetic algorithm (GA).

Background: This non-sequential approach allows for the simultaneous evaluation of multiple model hypotheses and their interactions, which can be missed in traditional stepwise model building [22].

Materials:

- Pharmacokinetic concentration-time data from a clinical study.

- Patient covariate data (e.g., weight, sex, renal function).

- Software with GA capabilities (e.g., Pirana software, custom Python/R scripts).

Methodology:

- Define the Model Space:

- Structural Models: Specify a set of candidate models (e.g., one- vs. two-compartment, first-order vs. saturable elimination, first-order absorption with vs. without lag-time vs. distributed delay) [22].

- Statistical Model: Define options for inter-individual variability (e.g., exponential, proportional) and residual error models.

- Covariate Model: List potential continuous and categorical covariates and their potential relationships (e.g., linear, power) with PK parameters.

- Encode the Model Space as "Genes": Represent each combination of structural, statistical, and covariate model choices as a chromosome (a string of genes) in the genetic algorithm.

- Define the Fitness Function: Create a scoring function that balances goodness-of-fit (e.g., objective function value, diagnostic plots) with model parsimony (e.g., number of parameters, Akaike Information Criterion). Apply penalties for convergence failures [22].

- Run the Genetic Algorithm:

- Initialization: Generate a random population of models.

- Evaluation: Calculate the fitness score for each model.

- Selection: Preferentially select the best-fitting models as "parents."

- Crossover: Create "offspring" models by combining features from two parent models.

- Mutation: Randomly alter a small number of genes in the offspring to introduce new features.

- Repeat: Iterate through selection, crossover, and mutation for a fixed number of generations or until convergence.

- Final Model Evaluation: The analyst must review the top-performing model(s) proposed by the GA for biological plausibility, clinical relevance, and robustness before final acceptance [22].
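The encode, evaluate, select, crossover, and mutate loop described above can be sketched on a reduced problem. In this illustration each gene is a single bit that switches one covariate on or off, and fitness is the AIC of an ordinary linear regression on synthetic data. In a real population PK workflow the fitness would come from a nonlinear mixed-effects fit, and the chromosome would also encode structural and statistical model choices; everything named below is a hypothetical simplification.

```python
import numpy as np

rng = np.random.default_rng(0)
n_subjects, n_covariates = 200, 6
covariates = rng.normal(size=(n_subjects, n_covariates))
# Hypothetical truth: only covariates 0 and 2 influence log-clearance.
log_cl = (1.0 + 2.0 * covariates[:, 0] - 1.5 * covariates[:, 2]
          + rng.normal(scale=0.5, size=n_subjects))

def fitness(chromosome):
    """AIC of a linear model using the covariates switched on (lower is better)."""
    cols = [np.ones(n_subjects)] + [covariates[:, j] for j in np.flatnonzero(chromosome)]
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, log_cl, rcond=None)
    rss = np.sum((log_cl - X @ beta) ** 2)
    return float(n_subjects * np.log(rss / n_subjects) + 2 * X.shape[1])

def run_ga(pop_size=20, generations=30, mutation_rate=0.1):
    """Evolve covariate-inclusion chromosomes; return the best one and its AIC."""
    population = rng.integers(0, 2, size=(pop_size, n_covariates))
    for _ in range(generations):
        scores = np.array([fitness(ind) for ind in population])
        order = np.argsort(scores)
        elite = population[order[0]].copy()                  # keep the best model
        parents = population[order[: pop_size // 2]]         # truncation selection
        children = [elite]
        while len(children) < pop_size:
            p1, p2 = parents[rng.integers(len(parents), size=2)]
            cut = rng.integers(1, n_covariates)              # one-point crossover
            child = np.concatenate([p1[:cut], p2[cut:]])
            flip = rng.random(n_covariates) < mutation_rate  # bit-flip mutation
            child = np.where(flip, 1 - child, child)
            children.append(child)
        population = np.array(children)
    scores = np.array([fitness(ind) for ind in population])
    return population[np.argmin(scores)], float(scores.min())

best_model, best_aic = run_ga()
print("selected covariates:", np.flatnonzero(best_model).tolist(), f"AIC = {best_aic:.1f}")
```

The AIC term plays the role of the parsimony penalty in the fitness function: a covariate is kept only if it reduces the residual error enough to pay for the extra parameter. The analyst's plausibility review in the final step is not something the code can replace.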

Workflow Diagram: ML-Assisted PK/PD Modeling

Comparison of Optimization Algorithms for Parameter Estimation

| Algorithm | Key Mechanism | Advantages | Common Use Cases in PK/PD |

|---|---|---|---|

| Gradient Descent [24] | Iteratively moves parameters in the direction of the steepest descent of the loss function. | Simple; converges to a local minimum on smooth objectives when the step size is suitably chosen. | Basic model fitting with smooth, convex objective functions. |

| Stochastic Gradient Descent (SGD) [24] | Uses a single data point (or mini-batch) to approximate the gradient for each update. | Computationally efficient for large datasets; can escape local minima. | Fitting models to very large PK/PD datasets (e.g., from dense sampling or wearable sensors). |

| RMSprop [24] | Adapts the learning rate for each parameter by dividing by a moving average of recent gradient magnitudes. | Handles non-convex problems well; adjusts to sparse gradients. | Useful for complex PK/PD models with parameters of varying sensitivity. |

| Adam [24] | Combines ideas from Momentum and RMSprop, using moving averages of both gradients and squared gradients. | Adaptive learning rates; generally robust and requires little tuning. | A popular default choice for a wide range of ML-assisted PK/PD tasks. |

| Genetic Algorithm (GA) [22] [23] | A metaheuristic that mimics natural selection, evolving a population of model candidates. | Global search; less prone to getting stuck in local minima; good for model selection. | Automated structural model and covariate model discovery in population PK/PD. |
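The update rules behind three of the gradient-based entries in the table can be written out directly. The NumPy sketch below applies plain gradient descent, RMSprop, and Adam to an ill-conditioned quadratic, a toy stand-in for a loss whose parameters differ widely in sensitivity. The learning rates, step counts, and test function are assumptions for illustration, not recommended settings.

```python
import numpy as np

CURVATURE = np.array([100.0, 1.0])                    # ill-conditioned quadratic

def loss(theta):
    return 0.5 * float(np.sum(CURVATURE * theta**2))

def grad(theta):
    return CURVATURE * theta

def gradient_descent(theta, steps=200, lr=0.01):
    for _ in range(steps):
        theta = theta - lr * grad(theta)              # one global step size
    return theta

def rmsprop(theta, steps=200, lr=0.01, decay=0.9, eps=1e-8):
    avg_sq = np.zeros_like(theta)
    for _ in range(steps):
        g = grad(theta)
        avg_sq = decay * avg_sq + (1 - decay) * g**2  # running mean of g^2
        theta = theta - lr * g / (np.sqrt(avg_sq) + eps)
    return theta

def adam(theta, steps=200, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    m = np.zeros_like(theta)
    v = np.zeros_like(theta)
    for step in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g               # first moment (momentum)
        v = beta2 * v + (1 - beta2) * g**2            # second moment
        m_hat = m / (1 - beta1**step)                 # bias correction
        v_hat = v / (1 - beta2**step)
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta

start = np.array([1.0, 1.0])
for name, optimizer in [("GD", gradient_descent), ("RMSprop", rmsprop), ("Adam", adam)]:
    print(f"{name:8s} final loss: {loss(optimizer(start.copy())):.6f}")
```

Plain gradient descent must use a step size small enough for the steepest direction, which slows progress along the flat one; RMSprop and Adam rescale each coordinate by its recent gradient magnitude, which is the sense in which they handle parameters of varying sensitivity.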

Essential Research Reagent Solutions

| Tool / Reagent | Function in AI/ML-Enhanced PK/PD |

|---|---|

| Python/R Programming Environment | Primary languages for implementing ML algorithms (e.g., scikit-learn, TensorFlow, PyTorch) and performing statistical analysis [27]. |

| Population PK/PD Software (e.g., NONMEM, Monolix) | Industry-standard tools for nonlinear mixed-effects modeling; increasingly integrated with or guided by ML algorithms [25] [22]. |

| Genetic Algorithm Library (e.g., DEAP, GA) | Provides pre-built functions and structures for implementing genetic algorithms to search the model space [22]. |

| High-Quality, Curated PK/PD Datasets | The essential "reagent" for training and validating any ML model. Data must be reliable and representative [25] [23]. |

| Explainable AI (XAI) Toolkits (e.g., SHAP, LIME) | Software libraries used to interpret the predictions of "black box" ML models, crucial for scientific validation and regulatory submissions [25]. |

Key Algorithmic Relationships

Diagram: Genetic Algorithm Operations

The integration of artificial intelligence (AI) with mechanistic models represents a paradigm shift in computational biology and drug development. Mechanistic models describe system behavior based on underlying biological or physical principles, while AI models learn patterns directly from complex datasets. Combining these approaches merges the interpretability and causal understanding of mechanistic modeling with the predictive power and pattern recognition capabilities of AI [28]. This hybrid methodology is particularly valuable for modeling complex biological systems, estimating parameters difficult to capture experimentally, and creating surrogate models to reduce computational costs associated with expensive mechanistic simulations [28].

In pharmaceutical research and development, this integration addresses fundamental limitations of each approach used in isolation. Traditional mechanistic models often struggle with scalability and parameter estimation for highly complex systems, whereas AI models frequently lack interpretability and the ability to generalize beyond their training data [28]. The hybrid framework enables researchers to build more comprehensive and predictive models for critical biomedical applications including target identification, pharmacokinetic/pharmacodynamic (PK/PD) analysis, patient-specific dosing optimization, and disease progression modeling [28] [29].

Technical Support & Troubleshooting Guide

Frequently Asked Questions

Q1: Our hybrid model shows excellent training performance but poor generalization on validation data. What could be causing this issue?

This problem typically stems from overfitting or data mismatch. First, verify that your training and validation datasets follow similar distributions using statistical tests like Kolmogorov-Smirnov. Implement regularization techniques specifically designed for hybrid architectures, such as pathway-informed dropout where randomly selected biological pathways are disabled during training iterations. Additionally, employ cross-validation strategies that maintain temporal structure for time-series data or group structure for patient-derived data [29].
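The distribution check mentioned above can be run with SciPy's two-sample Kolmogorov-Smirnov test. The sketch below compares a synthetic training sample against one validation sample drawn from the same distribution and one drawn from a shifted distribution; the log-normal "clearance" values and sample sizes are assumptions for illustration.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(0)
train = rng.lognormal(mean=1.0, sigma=0.4, size=300)          # e.g. clearance values
valid_similar = rng.lognormal(mean=1.0, sigma=0.4, size=300)  # same population
valid_shifted = rng.lognormal(mean=1.5, sigma=0.4, size=300)  # different population

for name, sample in [("similar", valid_similar), ("shifted", valid_shifted)]:
    res = ks_2samp(train, sample)
    print(f"{name:8s} KS statistic = {res.statistic:.3f}, p-value = {res.pvalue:.3g}")
```

A small p-value for a given feature suggests that the validation set is not exchangeable with the training set for that feature, in which case poor validation performance may reflect data mismatch rather than overfitting alone.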

Q2: How can we effectively estimate parameters that are difficult to measure experimentally in our pharmacokinetic model?

Leverage AI-enhanced parameter estimation protocols. Train a deep neural network as a surrogate for the mechanistic model to rapidly approximate parameter likelihoods. Apply Bayesian optimization with AI-informed priors to explore the parameter space efficiently, using the mechanistic model constraints to narrow feasible regions. Transfer learning approaches can also be valuable, where parameters learned from data-rich similar systems provide initial estimates for your specific system [30].

Q3: Our integrated model has become computationally prohibitive for routine use. What optimization strategies can we implement?

Develop a surrogate modeling pipeline. Use active learning to identify the most informative regions of your parameter space, then train a lightweight AI surrogate model (such as a reduced-precision neural network) on targeted mechanistic model simulations. For real-time applications, implement model distillation to transfer knowledge from your full hybrid model to a compact architecture while preserving predictive accuracy for key outputs [28] [30].

Q4: We're experiencing inconsistencies between AI-predicted patterns and mechanistic constraints. How can we better align these components?

Implement physics-informed neural networks (PINNs) that explicitly incorporate mechanistic equations as regularization terms within the loss function. Alternatively, adopt a hierarchical approach where the mechanistic model defines the overall system architecture and conservation laws, while AI components model specific subprocesses with high uncertainty. This maintains biological plausibility while leveraging data-driven insights [30].
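The idea of adding a mechanistic equation to the loss as a regularization term can be shown without a neural network. In the sketch below, the mechanistic constraint is first-order elimination, dC/dt = -k · C, and the derivative of a candidate concentration curve is approximated with finite differences; a real PINN would obtain it by automatic differentiation. The function name, weights, and candidate curves are assumptions for illustration.

```python
import numpy as np

def hybrid_loss(pred, t, observed, k_elim, constraint_weight=1.0):
    """Data misfit plus a penalty on the residual of dC/dt = -k_elim * C."""
    data_term = np.mean((pred - observed) ** 2)
    dC_dt = np.gradient(pred, t)                      # finite-difference derivative
    physics_term = np.mean((dC_dt + k_elim * pred) ** 2)
    return float(data_term + constraint_weight * physics_term)

t = np.linspace(0.0, 12.0, 121)
k_elim, c0 = 0.3, 10.0
observed = c0 * np.exp(-k_elim * t)                   # noise-free for clarity

consistent = c0 * np.exp(-k_elim * t)                 # obeys the ODE
inconsistent = np.clip(c0 - 0.9 * t, 0.0, None)       # linear decline, violates the ODE

print(f"consistent curve   : loss = {hybrid_loss(consistent, t, observed, k_elim):.5f}")
print(f"inconsistent curve : loss = {hybrid_loss(inconsistent, t, observed, k_elim):.5f}")
```

Raising `constraint_weight` pushes the fit toward curves that satisfy the mechanistic equation even where data are sparse; increasing it gradually during training is one way to avoid the conflicting-gradient problem that a large fixed weight can cause.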

Q5: How can we validate that our hybrid model provides genuine biological insights rather than just data fitting?

Employ multiscale validation protocols. Test predictions at both molecular and systems levels, and use mechanistic interpretability techniques to analyze which features and circuits your AI components are leveraging. Design "knock-out" simulations where key biological mechanisms are disabled in the model and compare predictions to experimental inhibition studies. Additionally, use the model to generate novel, testable hypotheses and collaborate with experimentalists to validate these predictions [31] [32].

Common Error Messages and Solutions

Table 1: Troubleshooting Common Hybrid Modeling Issues

| Error Message/Symptom | Potential Causes | Recommended Solutions |

|---|---|---|

| Parameter identifiability warnings | High parameter correlations, insufficient data, structural non-identifiability | Apply regularization with biological constraints; redesign experiments to capture more informative data; reparameterize model to reduce correlations [29] |

| Numerical instability during integration | Stiff differential equations, inappropriate solver parameters, extreme parameter values | Switch to implicit solvers for stiff systems; implement adaptive step sizing; apply parameter boundaries based on biological feasibility [29] |

| Discrepancies between scales (e.g., molecular vs. cellular predictions) | Inadequate bridging between scales, missing emergent phenomena | Implement multiscale modeling frameworks with dedicated scale-bridging algorithms; incorporate additional biological context at interface points [28] [30] |

| Training divergence when incorporating mechanistic constraints | Conflicting gradients between data fitting and constraint terms, learning rate too high | Implement gradient clipping; use adaptive learning rate schedules; progressively increase constraint weight during training rather than fixed weighting [30] |

| Long inference times despite surrogate modeling | Inefficient model architecture, unnecessary complexity for application needs | Perform model pruning and quantization; implement early exiting for simple cases; use model cascades where simple models handle straightforward cases [30] |
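The recommendation to switch to implicit solvers for stiff systems can be checked empirically by counting right-hand-side evaluations. The sketch below integrates a standard stiff test equation with SciPy's explicit RK45 and implicit BDF methods at default tolerances; the test equation and time span are assumptions for illustration and do not represent a specific QSP model.

```python
import numpy as np
from scipy.integrate import solve_ivp

def stiff_rhs(t, y):
    """Fast relaxation toward a slowly varying target: y' = -1000 * (y - cos(t))."""
    return -1000.0 * (y - np.cos(t))

results = {}
for method in ("RK45", "BDF"):
    sol = solve_ivp(stiff_rhs, (0.0, 5.0), [0.0], method=method)
    results[method] = sol
    print(f"{method:5s} success = {sol.success}, "
          f"function evaluations = {sol.nfev}, y(5) = {sol.y[0, -1]:.4f}")
```

An explicit solver stays stable on such a problem only by taking very small steps, which shows up as a much larger evaluation count; a sharp difference of this kind is a practical sign that the model is stiff and that an implicit method such as BDF or Radau is the better default.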

Key Experimental Protocols

Protocol 1: Development of AI-Enhanced Quantitative Systems Pharmacology (QSP) Models

Objective: To construct a hybrid QSP model that integrates AI-based parameter estimation with mechanistic disease pathophysiology for optimizing clinical trial design [29].

Materials and Methods:

- Data Integration Layer: Collect and preprocess multi-scale experimental data including omics data (genomics, proteomics), clinical measurements, and literature-derived pathway information. Apply natural language processing (NLP) tools for automated literature mining to extract PK/PD parameters [29].

- Mechanistic Framework Establishment: Define the core biological structure using systems of ordinary differential equations representing key disease mechanisms, drug targets, and physiological interactions. Incorporate known biological constraints and conservation laws [29].

- AI-Hybridization:

- Train machine learning models (random forests, gradient boosting) on structural relationships to estimate difficult-to-measure parameters

- Implement neural ordinary differential equations (Neural ODEs) to model poorly characterized subsystems

- Develop surrogate models for rapid sensitivity analysis and uncertainty quantification [29]

- Validation Framework: Use k-fold cross-validation, with temporal hold-out splits for time-series data so that test observations always follow the training observations in time. Compare predictions against experimental outcomes not used in training. Perform virtual patient simulations to assess population-level predictive performance [29].
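The time-series part of the validation step can be sketched as an expanding-window split, in which each test block lies strictly after its training block. The function name and the block-sizing rule below are illustrative assumptions, not part of the protocol in [29]; scikit-learn's `TimeSeriesSplit` offers a comparable ready-made splitter.

```python
def expanding_window_splits(n_samples, n_folds):
    """Yield (train_idx, test_idx) pairs where each test block follows its training block in time."""
    if n_folds < 1 or n_samples < n_folds + 1:
        raise ValueError("need at least n_folds + 1 time-ordered samples")
    block = n_samples // (n_folds + 1)
    for fold in range(1, n_folds + 1):
        train_end = fold * block
        # The last fold absorbs any remainder so that no sample is dropped.
        test_end = n_samples if fold == n_folds else train_end + block
        yield list(range(train_end)), list(range(train_end, test_end))

# Ten time-ordered observations, three folds.
splits = list(expanding_window_splits(n_samples=10, n_folds=3))
for train_idx, test_idx in splits:
    print("train:", train_idx, "test:", test_idx)
```

Because the training window only ever grows forward in time, no future observation leaks into the data used to fit the model for a given fold.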

Expected Outcomes: A validated hybrid QSP model capable of predicting patient-specific treatment responses, optimizing dosing regimens, and informing clinical trial designs with quantified uncertainty estimates.

Protocol 2: Hybrid Mechanistic-AI Approach for Chemical Process Scale-Up

Objective: To accelerate chemical process scale-up by combining molecular-level kinetic models with deep transfer learning to address reactor-specific transport phenomena [30].

Materials and Methods:

- Mechanistic Model Development: Construct a molecular-level kinetic model using laboratory-scale experimental data. For complex systems like fluid catalytic cracking, employ single-event kinetic modeling or bond-electron matrix approaches to represent fundamental reaction mechanisms [30].

- Deep Transfer Learning Architecture:

- Design a specialized network with three residual multi-layer perceptrons (ResMLPs): Process-based ResMLP for process conditions, Molecule-based ResMLP for feedstock composition, and Integrated ResMLP to predict product distribution

- Generate comprehensive training data through mechanistic model simulations across varied conditions

- Pre-train the network on laboratory-scale data (source domain)

- Implement transfer learning using limited pilot-scale data (target domain) with property-informed fine-tuning [30]

- Cross-Scale Optimization: Utilize the trained hybrid model with multi-objective optimization algorithms to identify optimal process conditions at pilot and industrial scales, balancing yield, selectivity, and economic considerations [30].
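The balancing of yield, selectivity, and economic considerations in the cross-scale optimization step amounts to finding non-dominated (Pareto-optimal) operating conditions. The sketch below filters a small set of candidate conditions. The candidate names and objective values are invented for illustration, and in practice the objectives would come from the trained hybrid model rather than a hard-coded list.

```python
def dominates(a, b):
    """True if a is at least as good as b in every objective and strictly better in one (maximization)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(candidates):
    """Return the candidates whose objective tuples are not dominated by any other candidate."""
    return [
        c for c in candidates
        if not any(dominates(o["objectives"], c["objectives"])
                   for o in candidates if o is not c)
    ]

# Hypothetical process conditions; objectives are (yield, selectivity, -cost),
# so that every objective is maximized.
candidates = [
    {"name": "A", "objectives": (0.80, 0.70, -1.0)},   # dominated by D (same quality, higher cost)
    {"name": "B", "objectives": (0.75, 0.85, -1.2)},   # best selectivity
    {"name": "C", "objectives": (0.70, 0.65, -1.5)},   # dominated by A and D
    {"name": "D", "objectives": (0.80, 0.70, -0.9)},   # best yield at lowest cost
]

front = [c["name"] for c in pareto_front(candidates)]
print(front)
```

The remaining candidates represent genuine trade-offs; choosing among them is a decision for the process team rather than for the optimizer.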

Expected Outcomes: A unified modeling framework capable of predicting product distribution across different reactor scales, significantly reducing experimental requirements for process scale-up while maintaining molecular-level predictive accuracy.

Visualization of Hybrid Modeling Approaches

Figure: Hybrid Model Development Workflow

Figure: AI-Enhanced Parameter Estimation Process

Research Reagent Solutions

Table 2: Essential Computational Tools for Hybrid Mechanistic-AI Research

| Tool/Category | Specific Examples | Primary Function | Application Context |
|---|---|---|---|
| Mechanistic Modeling Platforms | MATLAB SimBiology, COPASI, DBSolve, GNU MCSim | Solve systems of differential equations; parameter estimation; sensitivity analysis | Building quantitative systems pharmacology (QSP) models; pharmacokinetic/pharmacodynamic modeling [29] |
| AI/ML Frameworks | PyTorch, TensorFlow, JAX, Scikit-learn | Implement neural networks; deep learning; standard machine learning algorithms | Developing surrogate models; parameter estimation; pattern recognition in complex data [33] [30] |
| Hybrid Modeling Specialized Tools | Neural ODEs, Physics-Informed Neural Networks (PINNs), TensorFlow Probability | Integrate differential equations with neural networks; incorporate physical constraints | Creating hybrid architectures where AI learns unknown terms in mechanistic models [30] |
| Transfer Learning Libraries | Hugging Face Transformers, TLlib, Keras Tuner | Adapt pre-trained models to new domains with limited data | Cross-scale modeling; adapting models from laboratory to industrial scale [30] |
| Optimization & Parameter Estimation | Bayesian optimization tools (BoTorch, Scipy), Markov Chain Monte Carlo (PyMC3, Stan) | Efficient parameter space exploration; uncertainty quantification | Parameter estimation for complex models; design of experiments [29] [30] |
| Data Mining & Curation | NLP tools (spaCy, NLTK), automated literature mining pipelines | Extract and structure knowledge from scientific literature | Populating model parameters; building prior distributions; validating biological mechanisms [29] |
| Visualization & Interpretation | TensorBoard, Plotly, Matplotlib, mechanistic interpretability tools | Model debugging; feature visualization; results communication | Understanding AI component behavior; explaining model predictions [31] [32] |

Advanced Implementation Considerations

Addressing Scale Discrepancies in Multi-Source Data

A significant challenge in hybrid modeling is reconciling data from different scales and resolutions. Laboratory-scale data often includes detailed molecular-level characterization, while pilot and industrial-scale systems typically provide only bulk property measurements [30]. Implement property-informed transfer learning by integrating mechanistic equations for calculating bulk properties directly into neural network architectures. This approach bridges the data gap between scales by enabling the model to learn from molecular-level laboratory data while predicting bulk properties relevant to larger scales [30].

For cross-scale parameter estimation, develop multi-fidelity modeling strategies that combine high-fidelity experimental data with larger volumes of lower-fidelity simulation data. Use adaptive sampling techniques to strategically allocate computational resources between mechanistic simulations and AI training, maximizing information gain while minimizing computational expense [30].

Interpretability and Validation Frameworks

As hybrid models grow in complexity, maintaining interpretability becomes increasingly important, particularly for regulatory acceptance in drug development [31] [32]. Implement mechanistic interpretability techniques to analyze how AI components process information, including:

- Activation Patching: Systematically replace activations in the network to identify causal relationships between components and predictions [31]

- Circuit Analysis: Identify subnetworks responsible for specific computational functions within larger AI architectures [31] [32]

- Feature Visualization: Understand what input patterns maximally activate different components of the hybrid model [32]
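Activation patching, the first technique in the list above, can be illustrated on a toy network. The sketch runs a two-layer ReLU network on a "clean" and a "corrupted" input, then copies one hidden activation at a time from the clean run into the corrupted run and measures how much of the output gap is recovered. The weights and inputs are hypothetical; real analyses apply the same idea to the layers of a trained model using framework hooks.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def forward(x, w_hidden, w_out, patch=None):
    """Run a tiny two-layer network; optionally overwrite the hidden units listed in `patch`."""
    hidden = relu([sum(w * xi for w, xi in zip(row, x)) for row in w_hidden])
    if patch:
        for idx, value in patch.items():
            hidden[idx] = value
    output = sum(w * h for w, h in zip(w_out, hidden))
    return output, hidden

# Hypothetical weights: hidden unit 0 reads input 0, hidden unit 1 reads input 1.
w_hidden = [[1.0, 0.0], [0.0, 1.0]]
w_out = [2.0, 0.5]
clean_x = [1.0, 1.0]
corrupt_x = [0.0, 1.0]      # only input 0 differs from the clean input

clean_out, clean_hidden = forward(clean_x, w_hidden, w_out)
corrupt_out, _ = forward(corrupt_x, w_hidden, w_out)

# Fraction of the clean-vs-corrupted output gap restored by patching each hidden unit.
effects = {}
for unit in range(len(w_out)):
    patched_out, _ = forward(corrupt_x, w_hidden, w_out, patch={unit: clean_hidden[unit]})
    effects[unit] = (patched_out - corrupt_out) / (clean_out - corrupt_out)

print(effects)
```

Here patching unit 0 restores the full gap while patching unit 1 restores none of it, identifying unit 0 as the component that carries the difference between the two inputs.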

Develop comprehensive validation protocols that test both quantitative prediction accuracy and qualitative biological plausibility. Include "stress tests" where the model is evaluated under extreme conditions not represented in training data, assessing whether it maintains physiologically reasonable behavior. For regulatory applications, document both the model development process and the final model architecture, as the process itself provides valuable insights into system behavior [29].

The Hierarchically Self-adaptive Particle Swarm Optimization - Stacked AutoEncoder (HSAPSO-SAE) framework is a novel deep learning approach designed to overcome critical limitations in drug discovery, such as overfitting, computational inefficiency, and limited scalability of traditional models like Support Vector Machines and XGBoost [10]. By integrating a Stacked Autoencoder for robust feature extraction with an advanced PSO variant for hyperparameter tuning, this framework achieves superior performance in drug classification and target identification tasks [10].

Frequently Asked Questions (FAQs)

Q1: What is the primary advantage of using HSAPSO over standard optimization algorithms for tuning the SAE?

The primary advantage lies in its hierarchically self-adaptive nature. Unlike standard PSO or other static optimization methods, HSAPSO dynamically balances exploration and exploitation during training. It adaptively tunes the SAE's hyperparameters, such as the number of layers, nodes per layer, and learning rate, which leads to faster convergence and greater resilience to variability, and significantly reduces the risk of converging to suboptimal local minima [10].

Q2: My model is achieving high training accuracy but poor validation accuracy. What could be the cause and how can HSAPSO-SAE address this?

This is a classic sign of overfitting. The HSAPSO-SAE framework is specifically designed to mitigate this issue through two key mechanisms. First, the SAE component performs hierarchical feature learning, which helps the model learn more generalizable and abstract representations of the input data. Second, the HSAPSO algorithm optimizes the model's hyperparameters to ensure a good trade-off between model complexity and generalization capability, thereby enhancing performance on unseen validation and test datasets [10].

Q3: What are the computational performance benchmarks for the HSAPSO-SAE framework?

Experimental evaluations on benchmark datasets like DrugBank and Swiss-Prot have demonstrated that the framework achieves a high accuracy of 95.52%. Furthermore, it exhibits low computational complexity, requiring only 0.010 seconds per sample, and shows exceptional stability with a standard deviation of ±0.003 [10]. This makes it suitable for large-scale pharmaceutical datasets.

Q4: Which datasets were used to validate the HSAPSO-SAE framework, and where can I find them?

The framework was validated on real-world pharmaceutical datasets, primarily sourced from DrugBank and Swiss-Prot [10]. These repositories provide comprehensive data on drugs, protein targets, and their interactions, and are standard benchmarks in computational drug discovery. You can access these databases through their official websites.

Troubleshooting Guides

Issue 1: Slow Convergence or Failure to Converge During Training

- Potential Cause: Poorly chosen initial ranges (search space) for the hyperparameters being optimized by HSAPSO.

- Solution:

- Conduct a preliminary sensitivity analysis to understand the impact of individual hyperparameters on your model's performance. This helps in defining a more effective and bounded search space [34].

- Consider initializing the HSAPSO swarm with values derived from simpler optimization methods like Random Search, which can provide a good starting point for the more refined HSAPSO algorithm [35].

- Potential Cause: Inadequate configuration of the HSAPSO's own parameters (e.g., swarm size, inertia weight).

- Solution: Refer to the foundational literature on the HSAPSO-SAE framework for recommended initial settings [10]. The hierarchical and self-adaptive properties of the algorithm should help, but may require calibration for a new problem domain.
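To make the roles of swarm size, inertia weight, and search-space bounds concrete, here is a minimal sketch of a generic particle swarm optimizer with a linearly decaying inertia weight. It is not the hierarchically self-adaptive scheme of [10]; the coefficients, the bounds, and the `surrogate_loss` function (a stand-in for an expensive SAE validation loss) are all assumptions made for illustration.

```python
import random

def pso_minimize(objective, bounds, n_particles=30, n_iters=150, seed=0):
    """Generic PSO with inertia decaying linearly from 0.9 to 0.4 (not HSAPSO)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    c1 = c2 = 1.5                                   # cognitive and social coefficients
    for it in range(n_iters):
        w = 0.9 - 0.5 * it / max(1, n_iters - 1)    # high early (explore), low late (exploit)
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Keep each particle inside the bounded search space.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

def surrogate_loss(params):
    """Hypothetical loss surface with its minimum at log10(lr) = -3 and 64 hidden units."""
    log_lr, units = params
    return (log_lr + 3.0) ** 2 + ((units - 64.0) / 64.0) ** 2

# Search over log10(learning rate) in [-5, -1] and hidden units in [8, 256].
best, best_val = pso_minimize(surrogate_loss, bounds=[(-5.0, -1.0), (8.0, 256.0)])
print(best, best_val)
```

Narrowing `bounds` after a sensitivity analysis, or seeding `pos` with the best points from a random search, corresponds to the two search-space remedies suggested for this issue.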

Issue 2: Poor Overall Model Performance (Low Accuracy)

- Potential Cause: The quality of the input feature data is low, or the data contains a significant number of missing values.

- Solution: Implement rigorous data preprocessing. For the HSAPSO-SAE framework, this includes:

- Handling Missing Values: Use robust imputation techniques such as MICE (Multivariate Imputation by Chained Equations), k-Nearest Neighbor (kNN) imputation, or Random Forest (RF) imputation, as these have been shown to be effective for clinical and pharmaceutical datasets [35].

- Data Normalization: Apply standardization (e.g., z-score normalization) to continuous features to ensure all features are on a comparable scale [35]. The formula is \( z = \frac{x - \mu}{\sigma} \), where \( x \) is the original value, \( \mu \) is the feature mean, and \( \sigma \) is its standard deviation.

- Potential Cause: The architecture of the Stacked Autoencoder is not complex enough to capture the intricacies of your data.

- Solution: Allow the HSAPSO algorithm to search over a larger space of architectural hyperparameters, such as a greater number of hidden layers and a higher number of nodes per layer. The optimization process is designed to find an optimal balance between complexity and performance [10].
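The z-score standardization described under this issue can be sketched with the standard library alone. The example estimates the mean and standard deviation on the training values only and reuses them for new data, which is a common precaution against information leakage rather than something stated in [35]; the feature values are made up.

```python
import statistics

def fit_standardizer(train_values):
    """Estimate the feature mean and (population) standard deviation on training data only."""
    mu = statistics.fmean(train_values)
    sigma = statistics.pstdev(train_values)
    if sigma == 0:
        raise ValueError("feature is constant; z-score is undefined")
    return mu, sigma

def standardize(values, mu, sigma):
    """Apply z = (x - mu) / sigma using previously fitted statistics."""
    return [(x - mu) / sigma for x in values]

# Hypothetical continuous feature.
train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mu, sigma = fit_standardizer(train)          # mean 5.0, standard deviation 2.0

z_train = standardize(train, mu, sigma)
z_new = standardize([11.0], mu, sigma)       # validation value scaled with training statistics
print(z_train, z_new)
```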

Issue 3: Model Performance is Highly Variable Across Different Runs

- Potential Cause: High variance inherent in the training process or optimization algorithm.

- Solution: Implement k-fold cross-validation (e.g., 10-fold cross-validation) to ensure the model's performance is robust and not dependent on a particular split of the data. This technique was used in the original study to validate the framework's stability [10] [35].
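A minimal sketch of 10-fold cross-validation follows, reporting the mean and standard deviation of a score across folds as a measure of run-to-run stability. The labels and the majority-class scoring function are placeholders standing in for training and evaluating the actual model; libraries such as scikit-learn provide equivalent utilities (`KFold`, `cross_val_score`).

```python
import random
import statistics

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train_idx, folds[i]

def cross_validate(score_fn, n_samples, k=10, seed=0):
    """Return the mean and sample standard deviation of score_fn over k folds."""
    scores = [score_fn(tr, te) for tr, te in k_fold_indices(n_samples, k, seed)]
    return statistics.fmean(scores), statistics.stdev(scores)

# Placeholder data: 60 negatives and 40 positives.
labels = [0] * 60 + [1] * 40

def majority_baseline_accuracy(train_idx, test_idx):
    """Stand-in for 'train a model, then score it': predict the training majority class."""
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)

mean_acc, std_acc = cross_validate(majority_baseline_accuracy, n_samples=len(labels), k=10)
print(f"accuracy: {mean_acc:.3f} +/- {std_acc:.3f}")
```

A large standard deviation relative to the mean suggests that the result depends heavily on the particular data split, which is the symptom this issue describes.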

Experimental Performance Data

The following table summarizes the key quantitative results from the evaluation of the HSAPSO-SAE framework as reported in the scientific literature [10].

Table 1: Key Performance Metrics of the HSAPSO-SAE Framework

| Metric | Reported Value | Notes / Comparative Context |