Solving Genome Assembly Challenges: From T2T Breakthroughs to Clinical Applications

Abstract

This article provides a comprehensive overview of the current state and future trajectory of genome assembly, a critical technology for biomedical research and drug development. We explore the foundational challenges, including complex repetitive regions and polyploid genomes, that have historically hindered progress. The article details cutting-edge methodological solutions, from PacBio HiFi long-read sequencing and Hi-C scaffolding to innovative quantum computing algorithms, which are now enabling the construction of complete telomere-to-telomere (T2T) reference genomes. A dedicated section on troubleshooting and optimization offers practical guidance for improving assembly quality, while a final segment on validation and comparative analysis establishes benchmarks for accuracy and completeness. This resource is designed to equip researchers and drug development professionals with the knowledge to leverage high-quality genomic data for advancing personalized medicine and therapeutic discovery.

Understanding the Core Hurdles in Modern Genome Assembly

Frequently Asked Questions

What makes tandem repeats and rDNA so challenging to assemble? These regions consist of long, highly similar DNA sequences repeated head to tail. When short sequencing reads are reconstructed into a continuous genome, a repeat array that is longer than the individual reads offers no unique anchoring points. Assembly algorithms therefore cannot determine the correct order and number of repeat copies, which often causes the assembly to collapse or break at the repeat [1].
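
A toy example makes the ambiguity concrete. The Python sketch below treats every k-mer of a sequence as an idealized, error-free read and compares two hypothetical genomes that differ only in whether a repeat unit occurs three or four times (the unit and flanking sequences are invented for illustration). When the reads are shorter than the repeat array, both genomes yield exactly the same set of distinct reads, so sequence content alone cannot reveal the copy number; only read multiplicity differs. Reads long enough to span the array and its unique flanks remove the ambiguity.

```python
from collections import Counter


def kmers(seq, k):
    """Return every length-k substring of seq (an idealized, error-free read set)."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


# Hypothetical genomes: identical unique flanks around 3 or 4 tandem copies of UNIT.
UNIT = "ACGTTGCA"  # 8 bp repeat unit, invented for illustration
LEFT, RIGHT = "GGGCCCAAAT", "TTTACCGGGA"
genome3 = LEFT + UNIT * 3 + RIGHT
genome4 = LEFT + UNIT * 4 + RIGHT

# Short reads (12 bp, shorter than the 24 bp array): the sets of distinct reads match,
# so sequence content alone cannot distinguish three copies from four.
short3, short4 = set(kmers(genome3, 12)), set(kmers(genome4, 12))
print("12-mer sets identical:", short3 == short4)

# What differs is multiplicity: the repeat-internal read occurs once per copy.
print("unit occurrences:", Counter(kmers(genome3, 8))[UNIT], "vs",
      Counter(kmers(genome4, 8))[UNIT])

# Long reads (30 bp) can span the whole 3-copy array plus unique sequence on both sides.
long3, long4 = set(kmers(genome3, 30)), set(kmers(genome4, 30))
print("30-mer sets identical:", long3 == long4)
```

In real data, the multiplicity difference shows up only as a change in read depth, which is why collapsed repeats are usually diagnosed from coverage rather than from sequence content.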

My assembly has gaps or misassemblies in a tandem repeat region. How can I resolve this? Resolving these issues requires a combination of advanced sequencing data and specialized tools. Using ultra-long reads from Oxford Nanopore Technologies (ONT) or highly accurate long reads (HiFi) from PacBio provides the necessary length to span entire repetitive units. Specialized assemblers like Verkko, which is designed for telomere-to-telomere assembly, and Hi-C scaffolding techniques are particularly effective for ordering and orienting contigs in these problematic regions [1] [2].

Can I use Hi-C data to improve an assembly with problematic repeats? Yes, Hi-C is a powerful method for scaffolding. It captures the three-dimensional proximity of DNA segments within the nucleus, and contact frequency generally decreases with linear distance along a chromosome. Even if two genomic regions have nearly identical sequences, they sit in different chromosomal contexts, so their contact patterns with nearby unique sequence differ. Tools such as Juicer and the 3D-DNA pipeline use this proximity information to correctly order, orient, and assign contigs to chromosomes, thereby detecting and correcting misassemblies caused by repeats [2].

What is "polishing" and will it help with errors in repetitive sequences? Polishing is the process of using the original sequencing reads to correct small errors (like indels and base substitutions) in a draft assembly. While it can improve accuracy, its effectiveness in repetitive regions is mixed. In some cases, it can introduce new errors. For bacterial genomes, studies show that one round of long-read polishing is often sufficient, and that using methylation-aware models (like Medaka) can correct errors linked to base modifications [3].

Are some genomes simply too difficult to assemble completely? While the goal of telomere-to-telomere (T2T) assembly is now achievable for many species, significant challenges remain. The assembly of ultra-long, highly similar tandem repeats, particularly in rDNA regions, and the haplotype-resolved assembly of complex polyploid genomes are still considered critical challenges for the field [1]. Ongoing methodological innovations, including AI-driven assembly graph analysis, are being developed to address these hurdles.

Troubleshooting Guides

Problem: Collapsed Tandem Repeats

A collapsed repeat manifests as a region in your assembly with higher than expected sequencing coverage, because reads from several repeat copies pile onto the single assembled copy, and an absence of known repeat variants.

| Troubleshooting Step | Action and Rationale |
|---|---|
| Assess Read Length | Confirm your long-read sequencing data (ONT or PacBio HiFi) has a read length distribution that exceeds the length of the individual repetitive units. This is a prerequisite for spanning repeats; reads that span the entire array and anchor in unique flanking sequence give the most direct evidence of its structure. |
| Re-assemble with Specialized Tools | Use assemblers specifically designed for complex regions, such as Verkko or hifiasm, which use phased assembly graphs to better resolve repeats [1]. |
| Integrate Hi-C Data | Incorporate Hi-C sequencing data into your workflow. Process the data with Juicer and use the 3D-DNA pipeline to scaffold the assembly, which helps order contigs using 3D proximity ligation information [2]. |
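
The coverage signature of a collapse can be screened for programmatically. The Python sketch below assumes per-window mean read depths have already been computed (for example, from long reads aligned back to the assembly); the function name, the 1.8× threshold, and the minimum run length are illustrative assumptions, not values from the cited work. It reports runs of consecutive windows whose depth exceeds a multiple of the genome-wide median, where extra repeat copies are likely to have been folded onto one copy.

```python
from statistics import median


def flag_collapsed_windows(depths, window_size, fold=1.8, min_windows=2):
    """Return (start, end) coordinates of runs of windows with elevated depth.

    depths: mean read depth per consecutive, non-overlapping window.
    fold: depth threshold as a multiple of the median depth (illustrative default).
    min_windows: shortest run worth reporting, to suppress single-window noise.
    """
    threshold = fold * median(depths)
    regions, run_start = [], None
    for i, d in enumerate(depths + [0]):  # trailing sentinel closes a run at the end
        if d >= threshold and run_start is None:
            run_start = i
        elif d < threshold and run_start is not None:
            if i - run_start >= min_windows:
                regions.append((run_start * window_size, i * window_size))
            run_start = None
    return regions


# Hypothetical 10 kb windows: ~30x baseline, a 3-window block at ~90x, one 65x spike
depths = [31, 29, 30, 32, 88, 91, 90, 30, 28, 65, 31]
print(flag_collapsed_windows(depths, window_size=10_000))
```

Flagged regions are candidates only; segmental duplications, contamination, and organellar inserts can also raise depth, so each region should be inspected before re-assembly.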

Problem: Incomplete rDNA Assembly

Ribosomal DNA (rDNA) clusters are often missing or fragmented in draft genome assemblies.

| Troubleshooting Step | Action and Rationale |
|---|---|
| Sequence with Ultra-Long Reads | Generate ONT ultra-long reads, ideally together with PacBio HiFi reads. Read length is critical because rDNA arrays consist of many near-identical copies of a long repeat unit; ultra-long reads are the most likely to span several copies and reach unique flanking sequence, while HiFi reads add base-level accuracy. |
| Manual Curation with Hi-C | Use the Juicebox Assembly Tools to manually curate the assembly. The Hi-C contact map typically shows a distinct, high-interaction block for the rDNA region, allowing you to correctly place and orient the contig [2]. |
| Targeted Assembly | Extract reads mapping to the rDNA region and attempt a local, targeted assembly with different parameters or tools. The resulting contig can then be integrated back into the main assembly. |

Problem: Persistent Misassemblies After Automated Scaffolding

The initial assembly and automated scaffolding with Hi-C data still contain errors in repetitive regions.

| Troubleshooting Step | Action and Rationale |
|---|---|
| Check for Misjoins | The 3D-DNA pipeline automatically identifies and breaks potential misassemblies based on inconsistent Hi-C contact signals. Review its output for the misjoins it has broken [2]. |
| Manual Curation in Juicebox | Load the .hic file and .assembly file from 3D-DNA into Juicebox and visually inspect the contact map of each scaffold. Misassemblies often appear as off-diagonal contacts or sudden drops in interaction frequency along a contig, which can be manually corrected [2]. |
| Validate with Optical Maps | If available, use Bionano optical mapping data as an independent source of long-range information to validate the assembly structure and correct large-scale errors. |

Experimental Protocols

Protocol 1: Hi-C Scaffolding with Juicer and 3D-DNA

This protocol uses Hi-C data to order, orient, and scaffold a draft genome assembly, which is crucial for resolving repetitive regions [2].

Research Reagent Solutions

| Item | Function |
|---|---|
| Draft Genome Assembly | The initial contig-level assembly to be improved. |
| Hi-C Sequencing Library | Paired-end sequencing library prepared from cross-linked chromatin, providing proximity ligation data. |
| Juicer Pipeline | Processes raw Hi-C reads: aligns them to the draft assembly, filters, and deduplicates to produce a contact map. |
| 3D-DNA Pipeline | Uses the Juicer output to scaffold the draft assembly, correcting misjoins and producing chromosome-length scaffolds. |
| Juicebox Assembly Tools | A visualization interface for manually curating and correcting the automated assembly. |

Methodology:

Prepare Input Files:

- Ensure your draft genome is in a FASTA file (Genome.fasta).

- Ensure Hi-C FASTQ files are named with the _R1.fastq and _R2.fastq suffixes.

Generate Required Indexes:

Run the Juicer Pipeline:

- The key output file for 3D-DNA is aligned/merged_nodups.txt.

Run the 3D-DNA Scaffolding Pipeline:

Manually Curate with Juicebox:

- Load the Genome.0.hic and Genome.0.assembly files produced by 3D-DNA into Juicebox.

- Visually inspect the contact map and correct any remaining scaffolding errors.
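
To make the scaffolding signal concrete, the Python sketch below tallies read-pair contacts between contigs from merged_nodups.txt-style lines and then greedily links the most strongly connected contigs. It assumes Juicer's whitespace-delimited "long" layout (strand, contig, position, and fragment for each mate, then MAPQ, CIGAR, and sequence for each mate, then read names), so fields 2 and 6 hold contig names and fields 9 and 12 hold mapping qualities; verify this against your Juicer version. The greedy joining is a deliberately simplified stand-in, not 3D-DNA's algorithm: it ignores orientation and does not normalize for contig length, both of which real scaffolders handle. All function names and example records are hypothetical.

```python
from collections import Counter


def count_contig_contacts(lines, min_mapq=1):
    """Count inter-contig read-pair contacts from merged_nodups-style lines."""
    contacts = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) < 12:
            continue  # skip blank or short-format lines
        ctg1, ctg2 = fields[1], fields[5]
        mapq1, mapq2 = int(fields[8]), int(fields[11])
        if ctg1 == ctg2 or min(mapq1, mapq2) < min_mapq:
            continue  # keep only confidently mapped pairs that link two contigs
        contacts[tuple(sorted((ctg1, ctg2)))] += 1
    return contacts


def greedy_links(contacts):
    """Accept the strongest links first while keeping every chain linear.

    A contig may gain at most two neighbours, and a link whose two contigs
    are already in the same chain (which would close a cycle) is rejected.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    degree, accepted = Counter(), []
    for (a, b), n in sorted(contacts.items(), key=lambda kv: -kv[1]):
        if degree[a] < 2 and degree[b] < 2 and find(a) != find(b):
            parent[find(a)] = find(b)
            degree[a] += 1
            degree[b] += 1
            accepted.append((a, b, n))
    return accepted


# Hypothetical records; CIGAR and sequence fields abbreviated to "-"
example = (
    ["0 ctgA 100 0 16 ctgB 900 0 30 - - 30 - - r1 r1"] * 5
    + ["0 ctgB 50 0 0 ctgC 700 0 30 - - 30 - - r2 r2"] * 4
    + ["0 ctgA 10 0 0 ctgC 20 0 30 - - 30 - - r3 r3"] * 1
    + ["0 ctgA 10 0 0 ctgC 20 0 0 - - 30 - - r4 r4"] * 3  # MAPQ 0 for mate 1: filtered
)
contacts = count_contig_contacts(example)
print(greedy_links(contacts))
```

Here the weak A-C link is rejected because A and C are already joined through B, yielding the chain A-B-C.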

Protocol 2: Assembly Polishing for Bacterial Genomes

This protocol details how to polish a long-read assembly to correct small errors, which can also affect repetitive regions [3].

Key Considerations from Recent Studies:

- One round of long-read polishing is often sufficient; additional rounds may degrade assembly quality by over-correcting in repetitive regions.

- For Oxford Nanopore data, using the methylation-aware Medaka polishing model can correct errors caused by base modifications.

- In one study, 81% of errors in ONT assemblies were located within coding sequences (CDS), highlighting the importance of polishing for gene annotation accuracy [3].
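
The core idea of read-based polishing can be sketched as a per-position majority vote over a pileup of aligned reads. This Python sketch is a simplified stand-in for illustration only: tools such as Medaka use trained neural-network models rather than simple voting, and the pileup is assumed to be prebuilt (one list of observed bases per draft position, with "-" marking a deletion; insertions are not modeled). The function name and thresholds are hypothetical. The depth and fraction guards illustrate why polishing needs care in repeats: if reads from several diverged copies pile onto one collapsed copy, a column looks mixed, and a looser threshold would "correct" a true base.

```python
from collections import Counter


def polish_by_majority(draft, pileup, min_depth=3, min_fraction=0.6):
    """Return (polished_sequence, number_of_changes).

    draft: draft assembly sequence.
    pileup: for each draft position, the bases observed in aligned reads
            ("-" denotes a read deletion relative to the draft).
    A position changes only when depth is adequate and one alternative call
    dominates the column.
    """
    polished, changes = [], 0
    for ref_base, column in zip(draft, pileup):
        if len(column) < min_depth:
            polished.append(ref_base)  # too little evidence: keep the draft base
            continue
        call, count = Counter(column).most_common(1)[0]
        if call != ref_base and count / len(column) >= min_fraction:
            changes += 1
            if call != "-":
                polished.append(call)  # substitution
            # a dominant "-" drops the draft base (deletion)
        else:
            polished.append(ref_base)
    return "".join(polished), changes


draft = "ACGTTA"
pileup = [
    ["A"] * 5,
    ["C"] * 5,
    ["T"] * 4 + ["G"],  # draft G contradicted by 4 of 5 reads
    ["T"] * 5,
    ["-"] * 4 + ["T"],  # draft T absent from 4 of 5 reads
    ["A"] * 5,
]
print(polish_by_majority(draft, pileup))
```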

Table 1: Assembly Accuracy Across Bacterial Pathogens. Data from a 2025 study assessing nanopore sequencing and assembly of various bacterial species, highlighting variations in final assembly quality even with modern methods [3].

| Species | Nucleotide Differences vs. Reference | Key Finding / Error Profile |
|---|---|---|
| Bacillus anthracis | Almost perfect assembly | Achieved nearly complete accuracy. |
| Brucella melitensis | 5 - 46 differences | Variation between assemblers; errors persisted. |
| Brucella abortus | Varied by basecaller | Older basecalling model sometimes produced higher accuracy. |
| Klebsiella variicola, Listeria spp. | Perfect genomes | Demonstrated species-specific success. |
| Overall Error Location | 81% within CDS | Highlights impact on gene annotation. |

Table 2: Key Tools for Resolving Problematic Regions. A summary of software solutions and their specific applications for tackling challenging genomic areas [1] [2].

| Tool | Primary Function | Application to Repetitive Regions |
|---|---|---|
| Verkko | Telomere-to-telomere (T2T) diploid assembly | Specialized for assembling complete chromosomes through phased repeat graphs [1]. |
| hifiasm | Haplotype-resolved de novo assembly | Uses phased assembly graphs to separate haplotypes and resolve repeats [1]. |
| Juicer / 3D-DNA | Hi-C data processing and scaffolding | Orders and orients contigs using 3D proximity, correcting misassemblies in repeats [2]. |
| Juicebox | Visual assembly curation | Enables manual correction of scaffolding errors visible in the Hi-C contact map [2]. |
| Medaka | Long-read polishing | Includes methylation-aware models to correct errors at modified bases such as 5mC [3]. |

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between assembling autopolyploid versus allopolyploid genomes?

Autopolyploids arise from whole-genome duplication within a single species, resulting in highly similar homologous chromosomes that are extremely challenging to separate during assembly due to their high allelic similarity [4]. In contrast, allopolyploids are formed from hybridization between different species followed by genome doubling. Their subgenomes are more divergent, which often allows them to be assembled much like diploids, as demonstrated in species such as rapeseed, wheat, and strawberry [4] [5].

Q2: What are the primary data requirements for achieving a high-quality, haplotype-resolved polyploid genome assembly?

Recent evaluations suggest that a robust assembly pipeline requires a combination of data types. For optimal results, you should aim for approximately 20× coverage of high-quality long reads (PacBio HiFi or ONT Duplex), combined with 15–20× coverage of ultra-long ONT reads per haplotype, and at least 10× coverage of long-range data (such as Omni-C or Hi-C) [6]. This multi-faceted approach ensures both contiguity and accurate phasing.
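
As a worked example of what these targets mean in sequencing yield, total bases = coverage per haplotype × ploidy × monoploid genome size. The Python sketch below assumes the long-read targets are expressed per haplotype (as in the reagent recommendations later in this section) and uses a hypothetical tetraploid with an 800 Mb monoploid genome; the function name is illustrative.

```python
def required_gigabases(monoploid_size_mb, ploidy, coverage_per_haplotype):
    """Total yield in Gb = coverage per haplotype x ploidy x monoploid size (Mb) / 1000."""
    return coverage_per_haplotype * ploidy * monoploid_size_mb / 1000


# Hypothetical tetraploid with an 800 Mb monoploid genome
plan = {
    "HiFi or ONT Duplex (20x)": 20,
    "ONT ultra-long, low end (15x)": 15,
    "ONT ultra-long, high end (20x)": 20,
}
for data_type, cov in plan.items():
    print(f"{data_type}: {required_gigabases(800, 4, cov):.0f} Gb")
```

Because yield scales linearly with ploidy, the same per-haplotype targets cost twice as much for a tetraploid as for a diploid of the same monoploid size.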

Q3: Beyond standard long-read sequencing, what innovative methods are available for phasing autopolyploid genomes?

Several advanced methods have been developed to tackle the specific challenge of autopolyploid phasing. These include:

- Gamete Binning: This involves single-cell DNA sequencing of hundreds of gametes (e.g., pollen). Contigs are phased based on their similar read coverage profiles across the gametes [7] [5].

- Offspring k-mer Analysis: This method uses low-coverage sequencing of a population of offspring (from a cross) and unique k-mers to cluster assembly graph nodes into haplotypes based on shared inheritance patterns [5].

- Integrated Approaches (PolyGH): Novel algorithms like PolyGH combine the strengths of Hi-C data and gametic data to improve phasing accuracy beyond what either method can achieve alone [7].
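
The co-segregation principle behind gamete binning can be sketched in a few lines of Python. The sketch assumes each contig has already been reduced to a presence/absence call per gamete (for example, by thresholding read coverage) and groups contigs whose calls agree across most gametes. The 0.9 agreement threshold, the single-pass greedy clustering, and the function names are illustrative choices, not the published gamete-binning or PolyGH algorithms. Because crossovers break co-inheritance along a chromosome, real methods must also tolerate gradually declining agreement between distant contigs.

```python
def agreement(u, v):
    """Fraction of gametes in which two contigs have the same presence call."""
    return sum(a == b for a, b in zip(u, v)) / len(u)


def bin_by_cosegregation(profiles, threshold=0.9):
    """Greedily assign each contig to the first bin whose founder it matches.

    profiles: dict mapping contig name -> list of 0/1 calls, one per gamete.
    Returns a list of bins (lists of contig names); the first name in each
    bin is the founder used for comparisons.
    """
    bins = []
    for name, calls in profiles.items():
        for members in bins:
            if agreement(profiles[members[0]], calls) >= threshold:
                members.append(name)
                break
        else:  # no existing bin matched: start a new one
            bins.append([name])
    return bins


# Hypothetical presence calls across 10 gametes
profiles = {
    "ctg1": [1, 0, 1, 1, 0, 0, 1, 0, 1, 1],
    "ctg2": [1, 0, 1, 1, 0, 0, 1, 0, 1, 0],  # agrees with ctg1 in 9 of 10 gametes
    "ctg3": [0, 1, 0, 0, 1, 1, 0, 1, 0, 0],  # inherited in the other gametes
    "ctg4": [0, 1, 0, 0, 1, 1, 0, 1, 1, 0],  # agrees with ctg3 in 9 of 10 gametes
}
print(bin_by_cosegregation(profiles))
```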

Q4: How can I validate my haplotype assembly and be confident in the results?

It is critical to implement rigorous quality control measures. This includes:

- Dosage Analysis: Verify that the sequencing coverage of assembled unitigs shows distinct peaks at integer multiples of the single-haplotype coverage, corresponding to the expected dosages (e.g., 1x, 2x, 3x, and 4x for a tetraploid) [5].

- Switch Error Screening: Use specialized tools (e.g., switch_error_screen) to detect regions where the assembly incorrectly switches from one haplotype to another, which is common in repetitive regions [8].

- Assembly Graph Interrogation: Tools like gfa_parser can extract all possible contiguous sequences from Graphical Fragment Assembly (GFA) files, helping to quantify assembly uncertainty, particularly in complex regions like tandem gene arrays [8].
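
Dosage calls can be derived by dividing each unitig's depth by the depth expected for a single haplotype. In the Python sketch below, the ~23× single-haplotype depth follows the tetraploid example given for offspring k-mer analysis in this section; rounding to the nearest integer, the 0.25 tolerance, and the function name are illustrative assumptions. Unitigs whose depth falls between integer multiples are reported as ambiguous rather than forced into a dosage class.

```python
def assign_dosage(depth, monoploid_depth=23.0, ploidy=4, tolerance=0.25):
    """Return the estimated dosage of a unitig, or None if ambiguous.

    dosage = depth / monoploid_depth rounded to the nearest integer, accepted
    only if the ratio lies within `tolerance` of that integer and the integer
    is between 1 and the ploidy.
    """
    ratio = depth / monoploid_depth
    dosage = round(ratio)
    if dosage < 1 or dosage > ploidy or abs(ratio - dosage) > tolerance:
        return None  # e.g. repeats, contamination, or poorly covered unitigs
    return dosage


# Hypothetical mean depths for five unitigs
for depth in (22.1, 47.5, 69.0, 91.3, 35.0):
    print(depth, "->", assign_dosage(depth))
```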

Troubleshooting Guide

| Common Problem | Underlying Cause | Potential Solutions |
|---|---|---|
| Highly Fragmented Assembly & Imbalanced Haplotypes | Excessive proportion of collapsed sequences in the initial assembly graph; common in autopolyploids with high heterozygosity [7]. | 1. Use Hifiasm with the -l 3 option to generate a more sensitive assembly that retains more haplotype information [5]. 2. Integrate gamete binning or offspring data to resolve collapsed regions [7] [5]. |
| Poor Phasing Accuracy & Frequent Switch Errors | Insufficient long-range phasing information; inherent difficulty in distinguishing highly similar haplotypes in repetitive regions [6] [8]. | 1. Increase the volume of ultra-long ONT reads (>100 kb) to at least 15-20× per haplotype to bridge repetitive regions [6]. 2. Combine Hi-C with gametic data using tools like PolyGH to strengthen phasing signals [7]. 3. Systematically screen for and correct switch errors post-assembly [8]. |
| Inaccurate Copy Number Variation (CNV) Estimation | Assembly artifacts and misassembly in repetitive tandem arrays can be mistaken for genuine genetic variation [8]. | 1. Analyze the raw assembly graph (GFA) with gfa_parser to evaluate all possible paths and assess assembly uncertainty [8]. 2. Cross-validate CNV calls with an orthogonal method, such as digital PCR or comparative read depth analysis. |
| Prohibitively High Cost of Phasing | Single-cell sequencing of hundreds of gametes, as required by some methods, is expensive [7]. | 1. Utilize a low-coverage offspring population sequencing strategy as a cost-effective alternative to single-cell gamete sequencing [5]. 2. Optimize data types based on project needs; PacBio HiFi may offer better phasing accuracy, while ONT Duplex can generate more T2T contigs [6]. |

Detailed Experimental Protocols

Protocol 1: PolyGH Phasing for Autopolyploid Genomes

This protocol combines Hi-C and gametic data for superior haplotyping of complex autopolyploid genomes, such as potato [7].

Step-by-Step Methodology:

Gametic Data Binning:

- Utilize single-cell DNA sequencing data from a large number of gametes (e.g., 200-700 pollen nuclei).

- Align the short reads from each gamete to the assembled contigs.

- Build a feature vector for each contig, where each component represents the read coverage from a specific gamete.

- Perform initial clustering of contigs based on their similar coverage profiles, which indicates they belong to the same haplotype [7].

Hi-C Signal Extraction:

- Perform k-mer counting on the contig sequences using Jellyfish with parameters -m 21 -s 3G -c 7 to identify unique k-mers.

- Build a k-mer position library to map these k-mers back to their locations in the contigs.

- Process Hi-C paired-end reads to extract interaction signals between contig fragments that are linked by shared unique k-mers [7].

Integrated Clustering and Phasing:

- Combine the linkage information from the gametic feature vectors and the Hi-C interaction signals.

- Execute the PolyGH pipeline to cluster the contig fragments, assigning them to the correct haplotypes (e.g., four for a tetraploid).

- The final output is a set of haplotype-resolved chromosomes [7].

Protocol 2: Haplotype Assembly Using Offspring k-mer Analysis

This method is suitable for common breeding scenarios where a population of offspring from a known cross is available [5].

Step-by-Step Methodology:

Initial Assembly and Dosage Estimation:

- Assemble PacBio HiFi reads using Hifiasm to produce a raw unitig graph.

- Align the HiFi reads back to the unitigs and compute the sequencing depth in non-overlapping regions.

- Estimate the dosage (number of haplotypes a unitig represents) based on coverage peaks (e.g., ~23x for dosage 1, ~46x for dosage 2 in a tetraploid) [5].

k-mer Analysis and Offspring Profiling:

- Extract all k-mers (e.g., k=71) from the unitigs and identify a set of unique k-mers that appear exactly once in the entire assembly graph and are specific to the parent being assembled.

- Sequence each of the ~200 offspring samples with low-coverage Illumina reads (~1.5x per haplotype).

- Count the parent-specific unique k-mers in the short-read data from each offspring [5].

Chromosomal Clustering and Haplotype Resolution:

- For each unitig, create a k-mer count pattern across all offspring. Unitigs with similar inheritance patterns (i.e., inherited by the same subset of offspring) are clustered together, effectively grouping them by chromosome.

- Within each chromosomal cluster, distinguish the four haplotypes by analyzing the segregation patterns of dosage-1 unitigs.

- Finally, integrate unitigs with higher dosages (2, 3, 4) into the resolved haplotypes based on their k-mer count patterns [5].
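
The marker selection and inheritance-pattern steps can be sketched in Python as follows. The sketch assumes unitig sequences and offspring reads are available as plain strings, uses a tiny k for readability (the protocol uses k=71), ignores reverse complements, and records only presence rather than counts; all names and sequences are hypothetical, and this illustrates the logic rather than the published pipeline. A k-mer becomes a marker only if it occurs exactly once across all unitigs and is absent from the other parent; each unitig's pattern is then the set of offspring in which any of its markers is observed.

```python
from collections import Counter


def kmers(seq, k):
    """Yield every length-k substring of seq."""
    return (seq[i:i + k] for i in range(len(seq) - k + 1))


def parent_specific_markers(unitigs, other_parent_kmers, k):
    """Map each unique, parent-specific k-mer to the unitig it came from."""
    counts = Counter(km for seq in unitigs.values() for km in kmers(seq, k))
    markers = {}
    for name, seq in unitigs.items():
        for km in kmers(seq, k):
            if counts[km] == 1 and km not in other_parent_kmers:
                markers[km] = name
    return markers


def inheritance_patterns(markers, offspring_reads, k):
    """For each unitig, a 0/1 vector: was any of its markers seen in offspring i?"""
    names = sorted(set(markers.values()))
    patterns = {name: [0] * len(offspring_reads) for name in names}
    for i, reads in enumerate(offspring_reads):
        for read in reads:
            for km in kmers(read, k):
                if km in markers:
                    patterns[markers[km]][i] = 1
    return patterns


unitigs = {"utg1": "AACCGGTT", "utg2": "TTGGCCAA"}   # hypothetical unitigs
other_parent = {"CCGG"}                                # k-mer shared with the other parent
offspring = [["GAACCGA"], ["ATTGGCA"], ["AACCTTGG"]]   # reads per offspring
markers = parent_specific_markers(unitigs, other_parent, k=4)
patterns = inheritance_patterns(markers, offspring, k=4)
print(patterns)
```

Unitigs with matching patterns across many offspring were co-inherited and can be clustered together, as in the chromosomal clustering step above.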

Research Reagent Solutions

| Essential Material | Function in Haplotype-Resolved Assembly | Key Considerations |
|---|---|---|
| PacBio HiFi Reads | Generates highly accurate long reads for initial contig assembly. Essential for resolving complex, repetitive regions. | Provides base-level accuracy >99.9%. A minimum of 20× coverage per haplotype is recommended for polyploid assembly [6]. |
| Oxford Nanopore Technologies (ONT) Duplex Reads | Produces very long reads with high accuracy (Q30), facilitating the spanning of massive repeats and improving telomere-to-telomere (T2T) assembly. | Duplex reads are, on average, twice as long as HiFi reads, aiding in the resolution of structural variants. 20× coverage is a typical target [6]. |
| ONT Ultra-long (UL) Reads | Provides reads exceeding 100 kb, crucial for bridging the largest repetitive regions, such as centromeres and telomeres. | Combining 15-20× of UL data with HiFi/Duplex data significantly enhances assembly continuity and haplotype phasing [6]. |
| Hi-C / Omni-C Data | Captures long-range chromatin interaction information. Used for scaffolding contigs into chromosomes and for phasing. | A minimum of 10× coverage is sufficient for effective scaffolding and improving phasing accuracy when combined with other data types [6] [7]. |
| Gamete (Pollen) Single-Cell DNA | Enables gamete binning by providing the data to link contigs that co-segregate across hundreds of meiotic events. | Critical for autopolyploid phasing. Typically requires sequencing hundreds of gametes (e.g., 200-700) for precise phasing [7] [5]. |
| Low-Coverage Offspring Population DNA | A cost-effective alternative to gamete binning. Allows phasing via inheritance patterns of unique k-mers in a segregating population. | Ideal for breeding programs. Sequencing ~200 offspring at low coverage (~1.5x per haplotype) provides robust phasing information [5]. |

Genome assembly is a fundamental process in genomics, transforming raw sequencing data into contiguous genomic sequences. For years, two primary algorithmic approaches have dominated this field: Overlap-Layout-Consensus (OLC) and de Bruijn graphs. While both have enabled significant scientific progress, they possess inherent limitations that can impede the assembly of high-quality, complete genomes. Understanding these shortfalls is crucial for selecting appropriate tools and methodologies, especially for complex projects such as clinical diagnostics and drug development. This guide provides a technical troubleshooting resource to help researchers identify and address common challenges associated with these traditional assembly algorithms.

Frequently Asked Questions (FAQs)

1. What are the core differences between OLC and de Bruijn graph algorithms?

The table below summarizes the fundamental differences between the two algorithmic approaches.

| Feature | OLC (Overlap-Layout-Consensus) | De Bruijn Graph |
|---|---|---|
| Core Principle | Finds overlaps between full-length reads before building a layout and consensus sequence [9]. | Breaks reads into short k-mers (substrings of length k) and builds a graph where nodes are k-mers and edges represent overlaps [10] [11]. |
| Ideal Read Type | Long reads (Sanger, PacBio, Nanopore) [12] [9]. | Short reads (Illumina) [9] [11]. |
| Computational Load | High, as it requires all-vs-all read comparison [9]. | Lower for short reads, as it avoids pairwise comparisons of all reads [11]. |
| Handling Repeats | Struggles with long, identical repeats that cause tangles in the overlap graph [9]. | Can resolve short repeats by increasing the k-mer size, but collapses long, identical repeats [10] [9]. |

2. My de Bruijn graph assembly is fragmented. What could be the cause?

Fragmentation in de Bruijn graphs often stems from a combination of factors related to k-mer choice and data quality.

- Incorrect K-mer Size: A k-mer value that is too high can break the graph in regions of low sequencing coverage, while a value that is too low fails to resolve small repeats, creating tangled connections instead of clear paths [9].

- Sequencing Errors: Errors in the reads create spurious k-mers with low frequency, leading to "bulges" or "dead ends" in the graph that fragment the assembly [10] [11].

- Low Coverage: Insufficient sequencing depth means some k-mers from the true genome are missing, breaking the continuous path in the graph [9].

- Heterozygosity: In diploid or polyploid organisms, variations between homologous chromosomes create "bubbles" in the graph. While these represent real biology, they can complicate the assembly process and lead to fragmentation [9].
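
A toy de Bruijn graph shows both the k-mer size effect and the error effect. The Python sketch below uses the common node-centric convention (nodes are (k-1)-mers and each distinct k-mer is an edge, a slight variant of the k-mer-node formulation in the table above), keeps only "solid" k-mers seen at least min_count times, and reports branching nodes, where an assembler must end a contig. The genome, which contains a 5 bp repeat (GTACC), the reads (one carrying a single substitution), and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def build_graph(reads, k, min_count=2):
    """Build successor/predecessor maps over (k-1)-mer nodes from solid k-mers."""
    counts = Counter(r[i:i + k] for r in reads for i in range(len(r) - k + 1))
    succ, pred = defaultdict(set), defaultdict(set)
    for kmer, n in counts.items():
        if n >= min_count:  # k-mers seen fewer times are treated as sequencing errors
            succ[kmer[:-1]].add(kmer[1:])
            pred[kmer[1:]].add(kmer[:-1])
    return succ, pred


def branching_nodes(succ, pred):
    """Nodes with more than one successor or predecessor (contig break points)."""
    nodes = set(succ) | set(pred)
    return sorted(n for n in nodes if len(succ[n]) > 1 or len(pred[n]) > 1)


genome = "AAGTACCTTTGTACCGGG"      # hypothetical; GTACC occurs twice
error_read = "AAGTACCATTGTACCGGG"  # same sequence with one T->A substitution
reads = [genome] * 3 + [error_read]

print("k=4, errors filtered:", branching_nodes(*build_graph(reads, 4)))
print("k=4, errors kept:    ", branching_nodes(*build_graph(reads, 4, min_count=1)))
print("k=7, errors filtered:", branching_nodes(*build_graph(reads, 7)))
```

With k=4 the repeat creates branches at ACC and GTA; keeping the low-count error k-mers adds a further bubble at TTG; with k=7 every (k-1)-mer is unique and the graph is a single unbranched path. Raising k only works if coverage is high enough that every true k-mer is still observed.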

3. Why does my OLC assembly fail with high-error long reads, and how can I improve it?

OLC algorithms are highly sensitive to error rates because they rely on detecting true overlaps between reads. A high error rate, such as that historically associated with Nanopore sequencing, leads to two main problems [12]:

- Failed Overlap Detection: True overlaps may be missed if the error rate obscures sequence similarity.

- False Overlap Detection: Incorrect overlaps may be called based on spurious sequence matches.

To improve an OLC assembly with error-prone reads, consider these steps:

- Error Correction: Implement a dedicated error-correction step before assembly. This can be done by using high-accuracy short reads (hybrid correction) or by leveraging the long-read data itself with self-correction tools [12].

- Parameter Tuning: Adjust the overlap identity threshold and minimum overlap length. Lowering these parameters can help detect true overlaps in noisy data, but may also increase false positives.

- Algorithm Selection: Use assemblers specifically designed for noisy long-read data. Benchmarking studies have shown that OLC-based assemblers such as Canu and the Celera Assembler are capable of generating high-quality assemblies from Nanopore data, outperforming de Bruijn graph and greedy approaches [12].
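
The trade-off described under Parameter Tuning can be illustrated with a brute-force suffix-prefix overlap check. This Python sketch is an illustration only: production OLC assemblers avoid the all-vs-all scan with seeding or MinHash sketches and use gapped alignment, whereas this version tolerates substitutions only, not the indels that dominate Nanopore errors. The function name, thresholds, and reads are hypothetical.

```python
def best_overlap(a, b, min_len=20, min_identity=0.9):
    """Return (length, identity) of the longest suffix(a)/prefix(b) overlap, or None.

    Overlaps are compared position by position, so only substitution errors
    are tolerated; indels would require a gapped alignment.
    """
    for length in range(min(len(a), len(b)), min_len - 1, -1):
        suffix, prefix = a[-length:], b[:length]
        matches = sum(x == y for x, y in zip(suffix, prefix))
        identity = matches / length
        if identity >= min_identity:
            return length, identity
    return None


core = "ACGTTGCAAGGCTTACGATCCGTAGCATGC"          # hypothetical 30 bp shared sequence
read_a = "TTGACCA" + core                        # core at the end of read A
read_b = core[:15] + "T" + core[16:] + "GGAT"    # core at the start of B, one error

print(best_overlap(read_a, read_b, min_identity=0.95))  # true overlap recovered
print(best_overlap(read_a, read_b, min_identity=0.99))  # too strict: overlap missed
```

A single error in a 30 bp overlap drops identity to about 97%, so a 99% threshold misses the true overlap; lowering the threshold recovers it but, on real genomes, also admits more spurious overlaps between repeat copies.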

4. How can I resolve complex, highly similar repeats that neither algorithm handles well?

Highly similar tandem repeats, such as those found in rDNA regions, remain a critical challenge for both OLC and de Bruijn graph assemblers [1]. When automated algorithms fail, consider these advanced strategies:

- Ultra-Long Reads: Sequence with technologies that produce ultra-long reads (e.g., Nanopore). Reads that span the entire repetitive region provide unambiguous evidence for its structure.

- Complementary Technologies: Integrate data from other technologies that are less sensitive to repeats.

  - Optical Maps: Provide a large-scale restriction map of the genome, which can be used to validate the overall scaffold structure and the placement of repeats [9].

  - Hi-C Sequencing: Captures chromatin conformation data, helping to order and orient contigs over long distances, even across repetitive regions [9].

- Manual Curation: Use interactive curation tools (e.g., within the Galaxy platform) to make targeted breaks, joins, and reorientations of scaffolds based on all available evidence. "Dual curation" of both haplotypes simultaneously using a single Hi-C map has been shown to streamline this process [13].

Troubleshooting Guides

Issue 1: Poor Assembly Quality with Short Reads (De Bruijn Graph)

Symptoms: Low N50, a high number of contigs, and gaps in gene models.

| Potential Cause | Diagnostic Steps | Solution |
|---|---|---|
| Suboptimal k-mer size | Run the assembler with multiple k-values and plot N50 vs. k. Look for a peak in performance. | Select the k-value that maximizes contiguity without excessive breaks. Use k-mer spectrum analysis to find an optimal value [9]. |
| High sequencing error rate | Generate a k-mer multiplicity histogram. A large number of low-frequency k-mers indicates errors. | Apply a k-mer-based error correction tool (e.g., within the assembler or as a separate pre-processing step) to remove low-coverage k-mers [10] [11]. |
| Low sequencing coverage | Calculate the coverage: (total bases sequenced) / (genome size). Below 50x may be insufficient for complex genomes. | Sequence to a higher depth. For mammalian genomes, 60x coverage or higher is often recommended. |
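
Several diagnostics in this table depend on N50 and on the coverage formula, so minimal Python versions of both may help. N50 is the contig length L such that contigs of length L or longer together contain at least half of the assembled bases. The function names, contig lengths, and sequencing yield below are hypothetical.

```python
def n50(contig_lengths):
    """Smallest length among the longest contigs that together cover >= 50% of the assembly."""
    total = sum(contig_lengths)
    running = 0
    for length in sorted(contig_lengths, reverse=True):
        running += length
        if 2 * running >= total:
            return length
    return 0  # empty input


def coverage(total_bases_sequenced, genome_size):
    """Mean sequencing depth: (total bases sequenced) / (genome size)."""
    return total_bases_sequenced / genome_size


contigs = [900, 500, 300, 200, 100]  # hypothetical contig lengths (kb)
print("N50:", n50(contigs), "kb")
print("coverage:", coverage(180e9, 3e9), "x")  # hypothetical 180 Gb on a 3 Gb genome
```

Here the 900 kb contig alone holds 45% of the 2,000 kb assembly, and adding the 500 kb contig passes 50%, so the N50 is 500 kb.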

Issue 2: Excessive Memory Usage and Runtime with Long Reads (OLC)

Symptoms: Assembly process runs for an extremely long time or fails due to insufficient memory.

| Potential Cause | Diagnostic Steps | Solution |
|---|---|---|
| All-vs-all read comparison | Check the number of input reads. The computational load scales quadratically with the number of reads. | Reduce the dataset by sub-sampling reads, ensuring you retain sufficient coverage (e.g., 40-50x). Use a pre-filtering step to remove the shortest reads. |
| Inefficient overlap detection | Check if the assembler uses a "seed-and-extend" or MinHash strategy to find overlaps faster. | Switch to an assembler that uses more computationally efficient overlap detection methods. For Nanopore data, benchmarks indicate OLC is optimal, but implementation matters [12]. |
| Lack of hardware resources | Monitor memory usage during the initial overlap detection phase. | Allocate more RAM if possible. Otherwise, sub-sample your reads or move the job to a computing cluster or cloud resources. |
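
The sub-sampling advice in this table (retain enough coverage, drop the shortest reads) can be sketched in Python as a longest-first selection that stops once a target depth is reached. Dedicated read-filtering tools typically also weigh read quality; this sketch uses length only, and the function name, genome size, and read lengths are hypothetical.

```python
def select_longest_reads(read_lengths, genome_size, target_depth):
    """Pick the longest reads until target_depth x genome_size bases are reached.

    Returns (sorted indices of chosen reads, total bases chosen). If the data
    cannot reach the target, all reads are returned.
    """
    target_bases = target_depth * genome_size
    order = sorted(range(len(read_lengths)), key=lambda i: read_lengths[i], reverse=True)
    chosen, total = [], 0
    for i in order:
        if total >= target_bases:
            break
        chosen.append(i)
        total += read_lengths[i]
    return sorted(chosen), total


# Hypothetical 100 kb genome, target 40x (4 Mb); reads given as lengths in bp
lengths = [50_000] * 60 + [5_000] * 400 + [1_000] * 1_000
chosen, total = select_longest_reads(lengths, genome_size=100_000, target_depth=40)
print(len(chosen), "reads,", total / 100_000, "x")
```

In this example, all 60 long reads plus 200 of the 5 kb reads reach 40x, so the 1,000 shortest reads never enter the all-vs-all overlap step.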

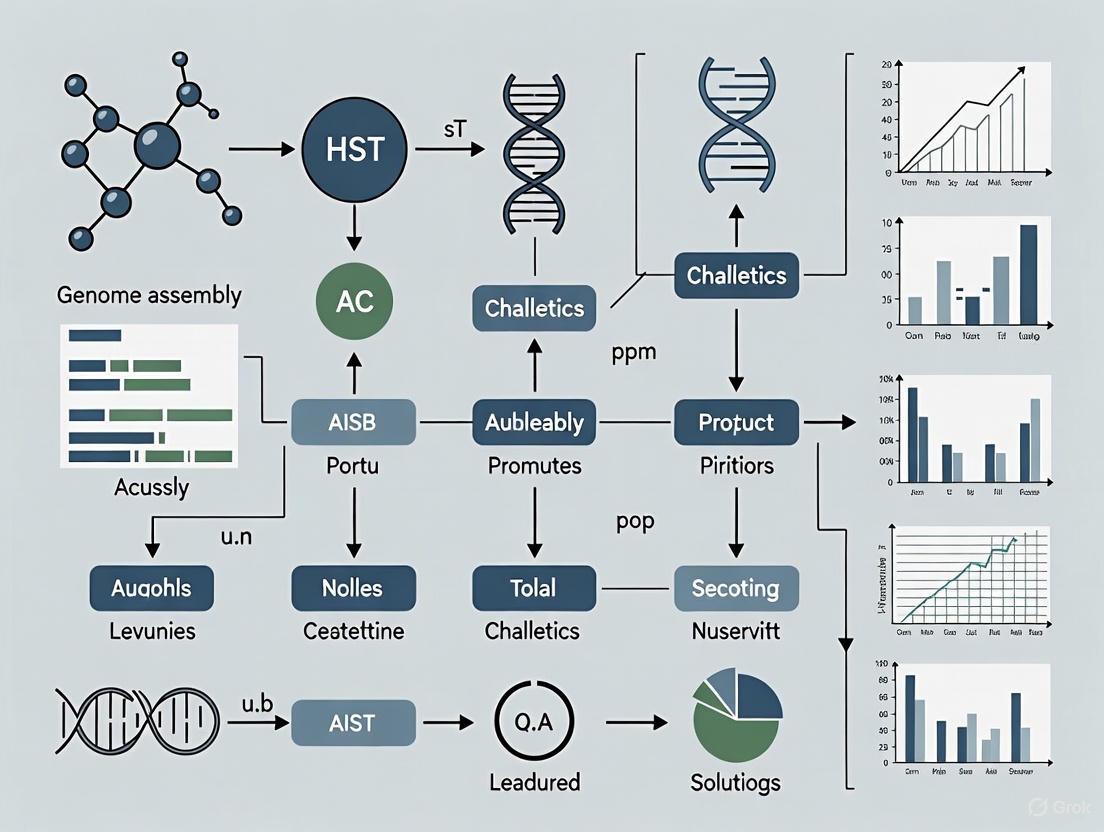

Algorithmic Workflows and Limitations

The diagrams below illustrate the standard workflows for de Bruijn graph and OLC assembly, highlighting stages where specific limitations and challenges arise.

De Bruijn Graph Assembly Workflow

OLC Assembly Workflow

Research Reagent and Tool Solutions

The following table lists key experimental reagents and computational tools essential for overcoming genome assembly challenges.

| Item Name | Type | Primary Function in Assembly |
|---|---|---|
| PacBio HiFi Reads | Sequencing Reagent | Generates long reads (10-20 kb) with very high accuracy (>99.9%), ideal for resolving repeats and producing high-quality assemblies with both OLC and de Bruijn graph algorithms [1] [14]. |
| Oxford Nanopore Ultra-Long (UL) Reads | Sequencing Reagent | Produces reads exceeding 100 kb, capable of spanning even the most complex repetitive regions, enabling telomere-to-telomere assemblies [1]. |
| Hi-C Library Kit | Library Prep Reagent | Captures chromatin proximity data, used after contig assembly to scaffold, order, and orient contigs into chromosomes, bridging repetitive regions [9] [14]. |
| Canu | Software Tool | An OLC-based assembler designed for noisy long reads (Nanopore, PacBio CLR), incorporating error correction and consensus steps [1]. |
| Hifiasm | Software Tool | A fast and efficient assembler for PacBio HiFi reads, capable of producing haplotype-resolved (phased) assemblies [1] [14]. |
| Verkko | Software Tool | A hybrid assembler designed for telomere-to-telomere assembly of diploid chromosomes, integrating PacBio HiFi and ONT ultra-long read data [1]. |

The limitations of traditional OLC and de Bruijn graph algorithms are not dead ends but rather defined frontiers in genomics research. A modern solution to genome assembly challenges rarely relies on a single algorithm or data type. Instead, it involves a strategic integration of multiple sequencing technologies (short, long, and ultra-long reads), complementary data (Hi-C, optical maps), and sophisticated assembly pipelines that can leverage the strengths of different algorithmic paradigms. Furthermore, the emergence of interactive curation platforms, like those in Galaxy, acknowledges that fully automated assembly is not always possible, and human-guided intervention is a powerful tool for achieving the highest-quality reference genomes [13]. By understanding these shortfalls and the available solutions, researchers can better design their experiments and navigate the complex landscape of de novo genome assembly.

Frequently Asked Questions (FAQs)

Q1: My genome assembly job is stuck in a queue or running very slowly. What could be the cause? Excessive runtimes and job queuing are often due to the high computational burden of processing long-read sequencing data. For eukaryotic organisms, sequencing coverage of >60x is often required for a contiguous assembly, but errors can accumulate and assembly statistics can plateau if depth is increased without proper read selection and correction [15]. Ensure you are using pre-assembly filtering and read correction to improve contiguity.

Q2: What are the key computational resource requirements for a genome assembly project? The requirements vary significantly by genome size and complexity. The table below summarizes key resource considerations based on current assembly projects as of 2025.

| Resource Type | Consideration & Specification |
|---|---|
| Sequencing Coverage | >60x coverage for eukaryotes using long-read technologies (e.g., ONT, PacBio) is often necessary for contiguous assemblies [15]. |
| Data Storage | Genome assembly datasets frequently surpass terabytes in size. The Galaxy platform, for instance, allocates substantial dedicated storage for such projects [16]. |
| Computing Infrastructure | Long-read assembly and polishing are computationally intensive. Leveraging dedicated platforms like Galaxy, which provides access to over 100 assembly-specific tools, can eliminate local computational barriers [16]. |

Q3: How do I choose between a long-read-only and a hybrid assembly approach? The choice depends on your data and resources. A pure long-read sequencing and assembly approach often outperforms hybrid methods in terms of contiguity [15]. However, if you have lower coverage long reads, correcting them with short reads prior to assembly is a viable strategy. For high-coverage long reads, a long-read-only assembly followed by polishing with short reads to increase base-level accuracy is recommended [17].

Q4: What is the difference between a GenBank (GCA) and a RefSeq (GCF) assembly? A GenBank (GCA) assembly is an archival record submitted to an International Nucleotide Sequence Database Collaboration (INSDC) member; it is owned by the submitter and may not include annotation. A RefSeq (GCF) assembly is an NCBI-derived copy of a GenBank assembly that is maintained by NCBI; all RefSeq assemblies include annotation [18].

Q5: How can I access large public genomic datasets without downloading them entirely? To manage large data transfers, consider using a dehydrated data package. This is a zip archive containing metadata and pointers to data files on NCBI servers. You can "rehydrate" it later to download the actual sequence data, which is the recommended method for packages containing over 1,000 genomes or more than 15 GB of data [18].
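A minimal Python sketch of the dehydrate-or-not decision and the corresponding NCBI Datasets command lines follows. The thresholds come from the guidance above; the command syntax (`datasets download genome taxon ... --dehydrated`, then `datasets rehydrate --directory`) reflects our understanding of the `datasets` CLI and should be checked against the current NCBI documentation. Function names are hypothetical.

```python
def should_dehydrate(n_genomes: int, size_gb: float) -> bool:
    """Guidance quoted above: dehydrate for >1,000 genomes or >15 GB."""
    return n_genomes > 1000 or size_gb > 15


def datasets_commands(taxon: str, zip_name: str, dehydrate: bool) -> list:
    """Hypothetical helper returning argument lists for the `datasets` CLI.

    The flags used here are assumptions to verify against NCBI's docs.
    """
    download = ["datasets", "download", "genome", "taxon", taxon,
                "--filename", zip_name]
    if not dehydrate:
        return [download]
    download.append("--dehydrated")  # metadata and pointers only
    folder = zip_name[:-4] if zip_name.endswith(".zip") else zip_name
    return [
        download,
        ["unzip", zip_name, "-d", folder],
        ["datasets", "rehydrate", "--directory", folder],  # fetch sequences later
    ]
```

Each returned list can be passed to `subprocess.run(..., check=True)` in order.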

Troubleshooting Guides

Problem: High Error Rates in Final Assembly

- Symptoms: The assembled genome has poor agreement with validation data; high rates of single-base errors.

- Solution: Implement a robust post-assembly polishing protocol.

- Polish with high-accuracy short reads: Use Illumina data to correct base-level errors in the draft assembly. This step significantly increases accuracy, even with low sequencing depths of short-read data [15].

- Use specialized polishing tools: Leverage tools integrated into platforms like Galaxy that are designed for this purpose, such as those used in the VGP and ERGA-BGE workflows [16].

- Validate: Run BUSCO analysis and contamination screens to assess gene content completeness and assembly quality [16].

Problem: Discontiguous Assembly with Many Short Contigs

- Symptoms: The N50 statistic is low; the assembly is fragmented into thousands of pieces.

- Solution: Optimize input data and assembly algorithm selection.

- Start with High-Molecular-Weight (HMW) DNA: The quality of the input DNA is critical. Use extraction methods and size selection kits (e.g., Circulomics Short Read Eliminator Kit) that preserve long fragments [15].

- Select an Appropriate Assembler: Use state-of-the-art assemblers designed for your sequencing technology. For long-read assembly, tools like HiFiasm, Flye, and Canu are integrated into reproducible workflows on platforms like Galaxy [16].

- Incorporate Hi-C or Long-Range Data: Use chromatin interaction data (Hi-C) with a scaffolder like YaHS to order and orient contigs into chromosomes, dramatically improving contiguity [16].
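N50 is the contig length at which contigs of that size or longer contain at least half of the assembled bases; NG50 applies the same rule to half of the estimated genome size, which makes assemblies of different total size comparable. A minimal Python sketch (the function name and example lengths are hypothetical):

```python
def nx50(lengths, total=None) -> int:
    """Hypothetical helper: N50 (total=None) or NG50 (total=genome size).

    Returns the length L such that contigs of length >= L together cover
    at least half of `total`; 0 if that half is never reached.
    """
    ordered = sorted(lengths, reverse=True)
    half = (sum(ordered) if total is None else total) / 2
    running = 0
    for length in ordered:
        running += length
        if running >= half:
            return length
    return 0
```

For example, `nx50([100, 80, 60, 40, 20])` returns 80, while `nx50([100, 80, 60, 40, 20], total=500)` (an NG50 against a 500 bp genome estimate) returns 40.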

Problem: Contamination in the Draft Assembly

- Symptoms: Taxonomic classification tools identify non-target sequences (e.g., bacterial contigs in a eukaryotic assembly).

- Solution: Perform systematic decontamination.

- Run BlobTools2: This tool uses taxonomic assignment, read coverage, and GC content to identify and help remove contaminant contigs [15].

- Apply SIDR: Use this ensemble-based machine learning tool to discriminate target and contaminant contigs based on multiple predictor variables, including alignment coverage from DNA and RNA-seq data [15].

- Filter: Retain only contigs taxonomically identified as your target organism (e.g., Nematoda) and discard common contaminants like E. coli and Pseudomonas [15].
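The final filtering step can be sketched in Python as below. This covers only the keep-or-flag decision: it assumes each contig already carries a taxonomic label (e.g., from an upstream similarity search) and a mean coverage, and it does not reproduce the BlobTools2 analysis or SIDR's ensemble model. GC content is reported for flagged contigs because, as noted above, GC and coverage help separate contaminants. The `Contig` type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contig:
    """Hypothetical record for one draft contig."""
    name: str
    taxon: str        # taxonomic label from an upstream similarity search
    coverage: float   # mean read depth
    sequence: str


def gc_content(seq: str) -> float:
    """Fraction of G and C bases (0.0 for an empty sequence)."""
    seq = seq.upper()
    return (seq.count("G") + seq.count("C")) / len(seq) if seq else 0.0


def split_by_taxon(contigs, target_taxon: str):
    """Keep contigs labelled with the target taxon; flag the rest for review."""
    kept, flagged = [], []
    for c in contigs:
        if c.taxon == target_taxon:
            kept.append(c)
        else:
            # report GC and coverage alongside the label for manual review
            flagged.append((c.name, c.taxon, round(gc_content(c.sequence), 3), c.coverage))
    return kept, flagged
```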

Experimental Protocols for Scalable Genome Assembly

Protocol 1: Standardized Workflow for High-Quality Vertebrate Genomes

This methodology is derived from workflows developed for the Vertebrate Genomes Project (VGP) and the European Reference Genome Atlas (ERGA) [16].

- Sequencing: Generate a combination of PacBio HiFi long reads, Oxford Nanopore long reads, and Hi-C data.

- Assembly: Assemble the genome using a pipeline such as HiFiasm or a specialized pipeline like ONT+Illumina & HiC (NextDenovo-HyPo + Purge_Dups + YaHS).

- Haplotype Purging: Use purge_dups to remove haplotypic duplications.

- Scaffolding: Scaffold the assembly into chromosomes using YaHS with the Hi-C data.

- Quality Control: Generate an ERGA Assembly Report (EAR) to evaluate contiguity (N50), completeness (BUSCO), and contamination. This can be automated with the ERGA Bot for large-scale projects [16].

Protocol 2: Optimized ONT Sequencing and Assembly for Eukaryotes

This protocol is designed to overcome the high error rate of Oxford Nanopore Technologies (ONT) reads for eukaryotic organisms [15].

- DNA Extraction: Perform a phenol-chloroform extraction from flash-frozen tissue. Verify HMW gDNA on a 0.8% agarose gel.

- Size Selection: Treat the DNA with a Short Read Eliminator Kit (e.g., from Circulomics) to enrich for long fragments.

- Library Prep & Sequencing: Prepare a library using the SQK-LSK109 kit, modifying the protocol by adding an extra Short Read Eliminator clean-up step. Sequence on an R9.4.1 flow cell for 48 hours on a GridION, basecalling in high-accuracy mode.

- Adapter Trimming: Trim adapters and remove chimeric reads using Porechop.

- Assembly & Polishing: Perform the assembly with a long-read assembler (e.g., Canu). Subsequently, polish the resulting assembly using Illumina short reads to correct base-level errors.

Visualization: End-to-End Genome Assembly and Curation Workflow

The diagram below outlines the logical flow of a modern, high-quality genome assembly process.

The Scientist's Toolkit: Key Research Reagents & Solutions

The following table details essential materials and computational tools used in modern genome assembly pipelines [16] [15].

| Item | Function & Application |
|---|---|
| Circulomics Short Read Eliminator Kit | Used during DNA extraction to remove short fragments and select for High-Molecular-Weight (HMW) DNA, which is critical for long-read sequencing [15]. |
| SQK-LSK109 Ligation Sequencing Kit | Standard library preparation kit for Oxford Nanopore sequencing on R9.4.1 flow cells, often modified with additional clean-up steps for improved results [15]. |
| HiFiasm Assembler | A state-of-the-art tool for phased assembly using PacBio HiFi data, integrated into workflows for the Vertebrate Genomes Project (VGP) [16]. |
| YaHS | A scaffolder used to order and orient contigs into chromosomes using Hi-C data, a key step in producing chromosome-level assemblies [16]. |
| purge_dups | A tool for haplotype purging that identifies and removes haplotypic duplications from the primary assembly, improving accuracy [16]. |
| BRAKER & AUGUSTUS | Tools for structural gene prediction, which are part of sophisticated annotation workflows available on platforms like Galaxy [16]. |
| BlobTools2 & SIDR | Software for identifying and removing contaminant contigs from draft genome assemblies using taxonomic and coverage information [15]. |


Next-Generation Sequencing and Advanced Assembly Pipelines

For researchers, scientists, and drug development professionals, the pursuit of complete, accurate, and haplotype-resolved genome assemblies has long been hampered by technological limitations. Repetitive regions, high heterozygosity, and complex structural variations have remained persistent challenges, particularly in clinical and conservation genomics where missing variation can impact diagnostic outcomes or evolutionary insights. The integration of PacBio HiFi long-read sequencing with Hi-C chromatin conformation data represents a transformative methodological advance, establishing a new gold standard for de novo genome assembly. This approach combines the read length and base-level accuracy of HiFi sequencing (typically 10-25 kb with >99.9% accuracy) with the long-range spatial information provided by Hi-C to generate chromosome-scale, haplotype-phased assemblies [19] [20]. This technical framework enables researchers to overcome traditional barriers in genome assembly, providing unprecedented resolution for studying complex genomic architectures, population variation, and disease mechanisms.

Experimental Protocols: Methodologies for Integrated Genome Assembly

The CiFi Protocol: Chromatin Conformation Capture with HiFi Sequencing

The CiFi (Hi-C with HiFi) protocol represents a significant advancement for haplotype-resolved genome assembly from low-input samples. Developed by researchers from UC Davis, USDA, Sanger Institute, and PacBio, this method achieves "haplotype-resolved, chromosome-scale de novo genome assemblies with data from one sequencing technology" [19].

Key Methodological Steps:

- Standard 3C Protocol: Begin with cross-linking chromatin using formaldehyde to capture chromosomal interactions.

- Amplifi Workflow: Implement the improved PacBio low-input protocol for library preparation. This step is critical for low-input scenarios and results in a >500-fold improvement in efficiency compared to previous approaches [19].

- HiFi Sequencing: Perform sequencing on PacBio Revio or Vega systems to generate long, accurate reads containing chromatin interaction information.

- Data Integration: Use the CiFi data in conjunction with standard HiFi Whole Genome Sequencing (WGS) for assembly.

This protocol has been successfully demonstrated to generate "multiple chromosome-interacting segments per HiFi read," enabling haplotype-resolved connectivity across scales exceeding 100 Mb, including in repetitive and low-complexity regions such as segmental duplications and centromeres [19]. The method's efficiency has been validated using minimal biological material, including studies where "a single insect was dissected in half and run for HiFi and CiFi libraries simultaneously on a single Revio SMRT Cell" [19].

The DipAsm Workflow: A Streamlined Bioinformatics Approach

For bioinformaticians seeking efficient computational phasing, the DipAsm workflow developed by Dr. Shilpa Garg and colleagues provides a streamlined method for chromosome-level phasing that combines HiFi reads with Hi-C data. This workflow significantly reduces computational time while maintaining high accuracy [21].

Key Methodological Steps:

- HiFi Data Input: Use HiFi reads as the primary input to generate continuous, accurate contigs.

- Hi-C Scaffolding: Employ Hi-C data to scaffold contigs into longer sequences and link heterozygous single nucleotide polymorphisms (SNPs) over long distances.

- Haplotype Partitioning: Partition HiFi reads by haplotype using the linkage information from Hi-C.

- Separate Assembly: Assemble each haplotype partition separately to produce fully phased sequences.

The standout benefit of this workflow is its remarkable speed, "producing chromosome-level haplotype-resolved assemblies within a day, which previously took weeks" [21]. The method has been rigorously tested on benchmark genomes (HG002, NA12878, and PGP1) and produces results comparable to alternative approaches with superior efficiency, making it particularly valuable for large-scale genomic projects [21].
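The haplotype-partitioning step can be illustrated with a simplified majority vote. This Python sketch assumes heterozygous SNPs have already been phased (in DipAsm, Hi-C linkage provides this) and that the allele each read carries at those positions has been extracted from alignments. It illustrates the idea only; it is not DipAsm's implementation, and all names are hypothetical.

```python
from collections import Counter


def assign_haplotype(read_alleles, phased_snps, min_margin=1):
    """Hypothetical helper: vote a read into haplotype 1 or 2.

    read_alleles: {snp_position: base observed in the read}
    phased_snps:  {snp_position: (hap1_base, hap2_base)}
    Returns 1, 2, or None when evidence is absent or tied.
    """
    votes = Counter()
    for pos, base in read_alleles.items():
        if pos not in phased_snps:
            continue  # not an informative (phased heterozygous) site
        hap1, hap2 = phased_snps[pos]
        if base == hap1:
            votes[1] += 1
        elif base == hap2:
            votes[2] += 1
    if abs(votes[1] - votes[2]) < min_margin:
        return None
    return 1 if votes[1] > votes[2] else 2


def partition_reads(reads, phased_snps):
    """Group read IDs into haplotype bins; None collects unassigned reads."""
    bins = {1: [], 2: [], None: []}
    for read_id, alleles in reads.items():
        bins[assign_haplotype(alleles, phased_snps)].append(read_id)
    return bins
```

Reads with no informative SNPs, or with tied votes, land in the `None` bin; a real pipeline must decide how to handle them before each partition is assembled separately.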

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 1: Essential Research Reagents and Platforms for HiFi and Hi-C Integration

| Item | Function | Application Note |
|---|---|---|
| PacBio Revio System | Platform for generating HiFi reads (10-25 kb, >Q20 accuracy) | Provides scalable throughput for large genomes; enables CiFi workflow [19]. |
| SMRTbell Express Template Prep Kit 2.0 | Library preparation for PacBio HiFi sequencing | Essential for constructing sequencing libraries from extracted DNA [22]. |
| Qiagen MagAttract Kit | Total genomic DNA isolation | Used for high-quality DNA extraction critical for long-read sequencing [23]. |
| Hi-C Library Preparation Kit | Captures chromatin conformation interactions | Enables scaffolding of contigs into chromosome-scale assemblies [20]. |
| Purge_Dups v1.2.5 | Bioinformatics tool for removing haplotigs (duplicated copies of heterozygous regions) from the primary assembly | Improves assembly accuracy by eliminating haplotypic duplications [22]. |
| Juicer v1.6.2 | Aligns Hi-C reads to the assembly | First step in Hi-C data integration for scaffolding and phasing [22]. |
| 3D-DNA v.180922 | Software for chromosomal anchoring of contigs | Performs scaffolding using aligned Hi-C data to build chromosome-scale sequences [22]. |


Troubleshooting Guides and FAQs: Addressing Common Experimental Challenges

Frequently Asked Questions

Q1: What are the primary advantages of integrating HiFi reads with Hi-C data over using either technology alone? The integration provides a synergistic effect that neither technology can achieve independently. HiFi reads deliver long, highly accurate sequences that are excellent for assembling through repetitive elements and resolving complex regions. Hi-C data provides long-range spatial information that links these sequences into chromosome-scale scaffolds and allows for phasing of haplotypes. This combination enables researchers to generate "haplotype-resolved, chromosome-scale de novo genome assemblies" that are both continuous and accurately partitioned by parental origin [19] [21].

Q2: How does this integrated approach handle the challenge of high heterozygosity, which often fragments assemblies? The integrated approach specifically addresses heterozygosity through phasing. Tools like hifiasm and DipAsm use the combination of HiFi reads and Hi-C linkage information to separate heterozygous alleles into distinct haplotype blocks. This process prevents the assembler from interpreting divergent haplotypes as separate loci, thereby avoiding "haplotypic duplications" and producing a more accurate representation of the diploid genome [20] [21].

Q3: What level of completeness and continuity can we expect from a HiFi+Hi-C assembly?

When executed properly, HiFi+Hi-C assemblies routinely achieve chromosome-level continuity with high completeness scores. For example, the Vertebrate Genomes Project (VGP) pipeline, which uses this combination, aims for "near-error-free, gap-free, chromosome-level, haplotype-phased" assemblies [20]. In practical terms, an assembly of a blowfly genome demonstrated 97.05% of sequences anchored to five chromosomes with a scaffold N50 of 121.37 Mb and 98.90% BUSCO completeness [22].

Q4: Our research involves low-input or precious samples. Is this integrated approach feasible?

Yes, recent methodological advances have significantly reduced input requirements. The CiFi (Hi-C with HiFi) protocol, part of the Amplifi workflow, has demonstrated success with ">500-fold improved efficiency" and ">100-fold reduced input" compared to previous approaches. This has enabled chromosome-scale assembly from single insects, demonstrating feasibility for low-input scenarios [19].

Troubleshooting Common Experimental Issues

Table 2: Troubleshooting Common Issues in HiFi and Hi-C Integration

| Problem | Potential Cause | Solution |
|---|---|---|
| Poor phasing continuity (short haplotype blocks) | Insufficient density of heterozygous SNPs; low-quality Hi-C data. | Ensure sample heterozygosity is adequate. Optimize Hi-C library preparation to increase valid long-range contact pairs. |
| False duplications in the primary assembly | Failure to purge divergent haplotypes recognized as separate contigs. | Run purging tools like Purge_Dups to identify and remove haplotigs, moving them to an alternate assembly [20]. |
| High fraction of misassemblies | Incorrect joining of non-adjacent sequences, often in repetitive regions. | Use the Hi-C contact map for manual curation to identify and correct misjoins. Validate with an orthogonal technology like Bionano [20]. |
| Low sequence yield from HiFi library | Degraded DNA or inefficiencies in SMRTbell library construction. | Use high molecular weight DNA extraction protocols. Follow the low-input PacBio protocol with additional bead cleaning for precious samples [23]. |
| High sequencing coverage but low assembly completeness (BUSCO score) | Unremoved contaminants or adapter sequences. | Use tools like MMseqs2 to screen for and remove potential contaminants from sequencing reads prior to assembly [22]. |

Workflow Visualization: From Sample to Chromosome-Scale Assembly

The following diagram illustrates the integrated experimental and computational workflow for achieving a chromosome-scale, haplotype-resolved assembly:

Diagram 1: Integrated HiFi and Hi-C workflow for chromosome-scale assembly.

Impact and Applications: Transforming Genomic Discovery Across Fields

The integration of HiFi and Hi-C technologies has demonstrated profound impacts across diverse research domains by providing a more complete and accurate genomic context for biological questions.

Human Genomics and Rare Disease: In pediatric rare disease, a clinical study demonstrated that long-read sequencing (incorporating HiFi and Hi-C capabilities) achieved a 37% diagnostic yield compared to 27% with standard methods, while reducing turnaround time from 62 to 27 days [24]. The integrated capability to detect "aberrant methylation, rare expansion disorders, phasing of single-nucleotide variation... and detection or refinement of SVs" provided explanations for previously unsolved cases [24].

Immunology and Antibody Diversity: Researchers have utilized HiFi sequencing to build a high-quality haplotype and variant catalog of the immunoglobulin heavy chain constant (IGHC) locus, uncovering "tremendous diversity" that was previously undocumented. Strikingly, 89.6% of the 262 identified IGHC coding alleles were undocumented in the IMGT database, representing a 235% increase in known alleles [19]. This hidden variation, missed by short-read sequencing, is crucial for complete genetic association studies.

Gene Therapy Safety: The power of HiFi sequencing to reveal hidden contaminants was demonstrated in the characterization of lentiviral vectors used for gene therapy. Studies identified "multiple aberrantly packaged nucleic acid species," including exogenous viral sequences and human endogenous retrovirus (HERV) elements within vector preparations [19]. This finding has critical implications for manufacturing safer recombinant vectors by enabling quality control steps to remove these contaminants.

Conservation and Evolutionary Genomics: In non-model organisms, this integrated approach has enabled the creation of high-quality reference genomes essential for conservation. For instance, the genome assembly of the New Zealand Blue cod (Rāwaru) utilized HiFi data with Hifiasm, achieving a BUSCO completeness score of 97.70% and an N50 of 551.4 kb, providing a vital resource for population genomics and fisheries management [25].

Frequently Asked Questions

Q1: How do I choose the right assembler for my specific genome project? The choice of assembler depends heavily on your data type, genome complexity, and desired balance between contiguity, accuracy, and computational resources [26] [27].

- For eukaryotic genomes with HiFi reads: hifiasm and hifiasm-meta should be your first choice, as they consistently generate high-contiguity assemblies with superior haplotype phasing [27].

- When seeking the best balance of accuracy and contiguity: Flye offers a strong compromise, though it can be sensitive to pre-corrected input data [26].

- For achieving highly accurate but potentially more fragmented assemblies: Canu provides high accuracy but typically produces 3–5 contigs and requires the longest runtimes [26].

- For combining HiFi and Oxford Nanopore Technologies (ONT) data: Verkko is specifically designed to assemble both data types simultaneously and was used for telomere-to-telomere human genome assembly [27].

Q2: What are the recommended data requirements for a high-quality haplotype-resolved assembly? Achieving chromosome-level haplotype-resolved assembly requires specific data types and volumes [28]:

- 20× coverage of high-quality long reads (PacBio HiFi or ONT Duplex)

- 15–20× coverage of ultra-long ONT reads per haplotype

- 10× coverage of long-range data (Omni-C or Hi-C)

Assembly contiguity typically plateaus when high-quality long-read coverage exceeds 35×. Inclusion of ultra-long reads significantly enhances assembly contiguity and telomere-to-telomere contig assembly, with optimal results achieved at 30× ULONT coverage [28].
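The coverage targets above translate directly into sequencing yield (coverage multiplied by genome size). A minimal Python sketch; doubling the per-haplotype ultra-long target for a diploid genome is our interpretation, and the function names are hypothetical.

```python
def gigabases_needed(genome_size_gb: float, coverage: float) -> float:
    """Sequencing yield in Gb required for `coverage`x over the genome."""
    return genome_size_gb * coverage


def diploid_budget(genome_size_gb: float) -> dict:
    """Hypothetical helper: minimum yields implied by the recommendations above.

    Assumption: the per-haplotype ultra-long target (15x) is doubled to 30x total.
    """
    return {
        "HiFi or ONT Duplex (20x)": gigabases_needed(genome_size_gb, 20),
        "Ultra-long ONT (15x per haplotype)": gigabases_needed(genome_size_gb, 15 * 2),
        "Hi-C/Omni-C (10x)": gigabases_needed(genome_size_gb, 10),
    }
```

For a 3 Gb diploid genome this gives 60 Gb of HiFi or Duplex data, 90 Gb of ultra-long ONT data, and 30 Gb of Hi-C/Omni-C data as minimums.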

Q3: Why is my assembly highly fragmented, and how can I improve contiguity? Fragmentation often occurs in highly complex, repetitive regions where conventional algorithms struggle [29]. Consider these approaches:

- Integrate multiple data types: Combine HiFi reads with ultra-long ONT reads and Hi-C/Omni-C data, as this provides both accuracy and long-range information to resolve repetitive regions [28].

- Adjust assembler parameters: For hifiasm, leverage its phased assembly graph capabilities for diploid genomes [27].

- Explore emerging methods: Geometric deep learning frameworks like GNNome show promise for path identification in complex graph tangles without relying solely on traditional algorithmic simplifications [29].

Q4: How does preprocessing affect assembler performance? Preprocessing decisions significantly impact assembly quality [26]:

- Filtering improves genome fraction and BUSCO completeness

- Trimming reduces low-quality artifacts

- Correction benefits overlap-layout-consensus (OLC)-based assemblers but may increase misassemblies in graph-based tools

The effect varies by assembler type, with OLC-based assemblers generally benefiting from correction, while graph-based tools may perform better with uncorrected reads [26].
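Filtering is usually done with dedicated read-processing tools, but the underlying test can be sketched in Python. One detail worth noting is that mean read quality should be computed by averaging per-base error probabilities rather than Phred scores. The thresholds (1,000 bp, Q10) and function names are hypothetical placeholders, not values from [26].

```python
import math


def mean_phred(qual: str, offset: int = 33) -> float:
    """Mean read quality from a FASTQ quality string.

    Per-base Phred scores are converted to error probabilities, averaged,
    and converted back, rather than averaging the scores directly.
    """
    if not qual:
        return 0.0
    probs = [10 ** (-(ord(ch) - offset) / 10) for ch in qual]
    return -10 * math.log10(sum(probs) / len(probs))


def passes_filter(seq: str, qual: str, min_len: int = 1000, min_q: float = 10.0) -> bool:
    """Hypothetical length and mean-quality filter for one read."""
    return len(seq) >= min_len and mean_phred(qual) >= min_q
```

For a read with one Q20 base and one Q10 base, `mean_phred("5+")` is about 12.6, not the naive average of 15, because the low-quality base dominates the expected error.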

Assembler Performance Benchmarking

| Assembler | Best Use Case | Runtime | Contiguity (NG50) | Completeness | Key Strengths |
|---|---|---|---|---|---|
| hifiasm | Eukaryotic genomes, diploid assembly | Moderate | High (e.g., 87.7 Mb for CHM13) | High (99.55% for CHM13) | Superior haplotype phasing, state-of-the-art for HiFi |
| Flye | Balance of accuracy and contiguity | Moderate | High | High | Strong all-around performer, reliable contiguity |
| Canu | Maximum accuracy | Very Long | Moderate (e.g., 69.7 Mb for CHM13) | High (99.54% for CHM13) | High accuracy, proven track record |
| Verkko | Hybrid HiFi+ONT assembly | Moderate | Variable | High (99.44% for CHM13) | Designed for telomere-to-telomere assembly |
| HiCanu | HiFi-specific variant of Canu | Long | High | High | Optimized for HiFi read characteristics |
| NextDenovo | Near-complete, single-contig assemblies | Fast | High | High | Progressive error correction with consensus refinement |
| Miniasm | Rapid draft assemblies | Very Fast | Variable | Lower without polishing | Ultrafast, useful for initial assessment |

| Data Type | Recommended Coverage | Role in Assembly | Impact on Metrics |
|---|---|---|---|
| PacBio HiFi | 20-35× | Base assembly with high accuracy | Primary determinant of base accuracy and phasing |
| ONT Duplex | 20-35× | Alternative to HiFi with longer reads | Comparable contiguity to HiFi, slightly lower phasing accuracy |
| Ultra-long ONT | 15-30× per haplotype | Resolving repeats and complex regions | Significantly improves T2T contigs and contiguity |
| Hi-C/Omni-C | 10× | Scaffolding and phasing | Reduces phasing errors, improves chromosome-scale assembly |

Experimental Protocols

Protocol 1: Benchmarking Assembler Performance

Objective: Systematically evaluate and compare genome assemblers using standardized metrics.

Materials:

- Sequencing data (HiFi, ONT, or both)

- Computational resources (high-memory nodes recommended)

- Assessment tools: QUAST, BUSCO, Merqury

Methodology:

- Data Preparation: Use standardized datasets (real or synthetic) with known characteristics

- Assembly Execution: Run each assembler with recommended parameters using identical computational resources

- Metric Calculation:

- Run QUAST for contiguity metrics (NG50, contig count)

- Run BUSCO for completeness assessment

- Calculate quality value (QV) for accuracy

- Record computational requirements (runtime, memory)

- Comparative Analysis: Normalize results across assemblers and identify performance patterns

Expected Output: Performance rankings tailored to specific genome types and data characteristics.
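For the QV metric in Protocol 1, Merqury estimates consensus accuracy from k-mers that appear in the assembly but not in the read set. A minimal Python sketch of that calculation, following the published formula as we understand it (verify against the Merqury documentation); the function name is hypothetical.

```python
import math


def merqury_qv(asm_only_kmers: int, total_asm_kmers: int, k: int) -> float:
    """Hypothetical helper: Merqury-style consensus QV from k-mer counts.

    asm_only_kmers: assembly k-mers absent from the reads (likely errors)
    total_asm_kmers: all k-mers in the assembly
    """
    if asm_only_kmers == 0:
        return math.inf  # no detectable errors
    p_kmer_ok = 1 - asm_only_kmers / total_asm_kmers
    error_rate = 1 - p_kmer_ok ** (1 / k)  # per-base error probability
    return -10 * math.log10(error_rate)
```

With k = 21, one billion assembly k-mers and 21,000 assembly-only k-mers, the estimated per-base error rate is about one in a million, i.e. a QV of roughly 60.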

Protocol 2: Optimal Data Volume Determination

Objective: Establish minimum data requirements for cost-effective high-quality assemblies.

Materials:

- Mixed sequencing data (HiFi/Duplex, ULONT, Hi-C/Omni-C)

- Down-sampling tools (e.g., seqtk)

- Assembly pipeline (e.g., hifiasm)

Methodology:

- Data Down-sampling: Create subsets with varying coverage (e.g., 10×, 20×, 30×, 40×)

- Assembly with Subsets: Assemble each down-sampled dataset independently

- Saturation Analysis: Plot assembly metrics (NG50, completeness) against coverage

- Plateau Identification: Determine coverage point where metric improvement becomes negligible

- Validation: Verify optimal coverage with biological validation metrics

Expected Output: Data volume recommendations that maximize quality while minimizing sequencing costs.
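The down-sampling and plateau-identification steps of Protocol 2 reduce to simple arithmetic. The Python sketch below computes the sampling fraction for a down-sampling tool and finds the coverage beyond which a metric's relative gain falls below a chosen threshold; the 2% threshold and function names are hypothetical.

```python
def sample_fraction(current_cov: float, target_cov: float) -> float:
    """Fraction of reads to keep to reach target_cov (1.0 if already below)."""
    if target_cov >= current_cov:
        return 1.0
    return target_cov / current_cov


def plateau_coverage(coverages, metric, min_gain=0.02):
    """Hypothetical helper: last coverage before relative gain drops below min_gain.

    `coverages` and `metric` (e.g. NG50 values) must be sorted by coverage.
    Returns the highest coverage tested if no plateau is detected.
    """
    for i in range(1, len(coverages)):
        prev, cur = metric[i - 1], metric[i]
        if prev > 0 and (cur - prev) / prev < min_gain:
            return coverages[i - 1]
    return coverages[-1]
```

For example, `sample_fraction(80, 20)` returns 0.25, which could be passed as the fraction argument of `seqtk sample` (using the same `-s` seed for both mates of paired-end data).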

Workflow Visualization

Figure 1: Genome Assembly Benchmarking Workflow

Figure 2: Assembly Methods and Evaluation Framework

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Genome Assembly

| Resource | Function | Application Notes |
|---|---|---|
| PacBio HiFi Reads | High-accuracy long reads (>10 kb, typically >99.9% accuracy) | Ideal for base assembly, variant calling, and haplotype phasing |
| ONT Ultra-Long Reads | Extreme-length reads (often 100 kb or longer) | Critical for resolving repetitive regions and complex tangles |
| Hi-C/Omni-C Data | Chromatin conformation capture | Provides long-range information for scaffolding and phasing |
| BUSCO Gene Sets | Benchmarking Universal Single-Copy Orthologs | Assesses assembly completeness using evolutionarily conserved genes |
| QUAST/MetaQUAST | Quality Assessment Tool for Genome Assemblies | Evaluates contiguity, misassemblies, and other structural metrics |
| Merqury | Reference-free assembly evaluation | Estimates quality value and assembly accuracy without a reference |
| hifiasm | Haplotype-resolved assembler for HiFi reads | Specifically designed for PacBio HiFi data with superior phasing |
| Flye | Repeat-graph-based long-read assembler | Excellent balance of accuracy, contiguity, and computational efficiency |


Chromosome-level, high-quality genomes are essential for advanced genomic analyses, including 3D genomics, epigenetics, and comparative genomics [30]. Hi-C scaffolding has become a cornerstone of modern genome assembly by using the three-dimensional proximity information of chromatin to order, orient, and assign contigs to chromosomes [31]. This guide provides a comprehensive technical resource for researchers employing two powerful tools in this domain: the Juicer pipeline for processing raw Hi-C data, and the 3D-DNA pipeline for performing the actual scaffolding [2]. By following these protocols and utilizing the included troubleshooting resources, you can overcome common genome assembly challenges and produce more accurate, contiguous reference genomes.

Understanding the Hi-C Scaffolding Workflow

The process of transforming raw Hi-C sequencing reads into a chromosome-scale assembly involves a multi-step workflow. The following diagram illustrates the key stages and how Juicer and 3D-DNA integrate within a larger assembly process.

Diagram 1: The Hi-C scaffolding workflow with Juicer and 3D-DNA.

What is Hi-C and Why Use It for Scaffolding?

Hi-C is a chromosome conformation capture technique that measures the 3D spatial organization of genomes by crosslinking, digesting, and ligating DNA, followed by paired-end sequencing [2]. The resulting reads represent pairs of DNA fragments that were physically close in the nucleus. For scaffolding, this proximity information is invaluable because it reveals long-range interactions (>1 Mb) that are difficult to obtain from short-read sequencing alone. These interactions allow bioinformatic tools to order contigs along chromosomes, orient them correctly, and detect misassemblies in initial genome drafts [2].
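How contact counts translate into an ordering can be shown with a deliberately simplified Python sketch: count read pairs linking each pair of contigs, then greedily accept the strongest links while allowing each contig at most two neighbours and forbidding cycles. This is a toy illustration, not the 3D-DNA or YaHS algorithm; real scaffolders also normalize for contig length and restriction-site density, determine orientation, and detect misjoins. Function names are hypothetical.

```python
from collections import Counter


def inter_contig_links(pairs):
    """Count Hi-C read pairs joining each unordered pair of distinct contigs."""
    links = Counter()
    for c1, c2 in pairs:
        if c1 != c2:  # intra-contig contacts carry no ordering information here
            links[tuple(sorted((c1, c2)))] += 1
    return links


def greedy_order(links):
    """Hypothetical toy scaffolder: accept strongest links into linear chains."""
    degree = Counter()
    parent = {}

    def find(x):
        # follow parent pointers to the representative of x's chain
        while parent.get(x, x) != x:
            x = parent[x]
        return x

    accepted = []
    for (a, b), n in links.most_common():
        root_a, root_b = find(a), find(b)
        if degree[a] < 2 and degree[b] < 2 and root_a != root_b:
            parent[root_a] = root_b  # merge the two chains
            degree[a] += 1
            degree[b] += 1
            accepted.append((a, b, n))
    return accepted
```

With 5 A-B, 4 B-C, 3 A-C and 2 C-D read pairs, the sketch accepts A-B, B-C and C-D and rejects A-C (it would close a cycle), yielding the chain A-B-C-D.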

Essential Setup and Protocols

The Scientist's Toolkit: Key Research Reagents and Software Solutions

| Tool/Reagent | Function in Hi-C Scaffolding | Key Notes |
|---|---|---|
| Juicer Pipeline [32] [2] | Processes raw Hi-C FASTQ files. Aligns reads, filters duplicates, and generates contact maps (.hic files). | A one-click system; requires Java and BWA. Critical for quality control and producing input for 3D-DNA. |
| 3D-DNA Pipeline [2] | Uses the Juicer output to scaffold a draft assembly. Clusters, orders, and orients contigs into chromosomes, correcting misassemblies. | An iterative pipeline; can be run in haploid or diploid mode. Outputs final FASTA and AGP files. |
| Juicebox Assembly Tools [2] [33] | Provides a visual interface for manually curating and reviewing automated scaffolding results. | Essential for verifying and correcting the output of 3D-DNA, especially for complex genomes. |
| BWA Aligner [2] | Aligns Hi-C read pairs to the draft genome assembly. Integrated directly within the Juicer pipeline. | The reference genome must be indexed with BWA before running Juicer. |
| Restriction Enzyme (e.g., Sau3AI/MboI) [34] | Used in the wet-lab Hi-C protocol to digest the crosslinked DNA. Informs the bioinformatic analysis. | The sequence (e.g., GATC) must be specified for generating the restriction site file. Isoschizomers are interchangeable in the pipeline. |


Step-by-Step Experimental Protocol

Part 1: Running the Juicer Pipeline

The first step is to process your raw Hi-C data into a meaningful contact map.

Prerequisite: Genome Preparation

Prerequisite: Restriction Site File

Input Data and Directory Structure

- Create a fastq directory and place your Hi-C reads there. Critical: Files must be named with the _R1.fastq and _R2.fastq extensions for the pipeline to recognize them [2].

- Create a splits directory for temporary processing files.

Execute Juicer

Run the main script. A typical command looks like:

This command specifies the working directory (-d), chromosome sizes (-p), restriction enzyme (-s), reference genome (-z), and number of threads (-t) [2].
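The five flags described above can be assembled into an argument list, for example for Python's `subprocess.run`. A minimal sketch: the `scripts/juicer.sh` path and the function name are placeholders, and other juicer.sh options (for example, for the restriction site file) are omitted, so consult the Juicer documentation before running it.

```python
import os


def juicer_command(top_dir, chrom_sizes, enzyme, reference, threads=8,
                   script="scripts/juicer.sh"):
    """Hypothetical helper: argument list for juicer.sh with the flags above.

    `script` is a placeholder path; only -d, -p, -s, -z and -t are set.
    """
    return [
        script,
        "-d", os.path.abspath(top_dir),  # working (top-level) directory
        "-p", str(chrom_sizes),          # chrom.sizes file
        "-s", enzyme,                    # restriction enzyme name, e.g. MboI
        "-z", str(reference),            # reference (draft assembly) FASTA
        "-t", str(threads),              # number of threads
    ]
```

Usage might look like `subprocess.run(juicer_command("hic_run", "chrom.sizes", "MboI", "references/Genome.fasta", threads=16), check=True)`.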

Juicer Output Files

- The primary outputs are in the aligned folder. The most important file for downstream scaffolding is merged_nodups.txt, which contains the deduplicated list of valid Hi-C contacts [2].

Table: Key Juicer Output Files and Their Uses [2]

| File | Definition | Use |
|---|---|---|
| merged_nodups.txt | Deduplicated list of valid Hi-C contacts. | Main input for 3D-DNA and for building .hic files for visualization. |
| merged_dedup.bam | BAM file of aligned, deduplicated Hi-C reads. | Useful for visualization in genome browsers like IGV. |
| inter.txt & inter_30.txt | Contact statistics between contigs/scaffolds. | Used for basic quality control. |
| inter_hists.m | MATLAB script with histograms of Hi-C contact distributions. | Helps visualize contact decay with distance for QC. |
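A quick QC pass over merged_nodups.txt can be sketched in Python: count read pairs where both mates pass a MAPQ cut-off (30, matching the threshold behind inter_30.txt) and split them into intra- and inter-contig contacts. The sketch assumes the 16-column long format described on the Juicer wiki, with chromosome names in columns 2 and 6 and MAPQ values in columns 9 and 12; check the layout for your Juicer version. The function name is hypothetical.

```python
def contact_summary(lines, min_mapq=30):
    """Hypothetical helper: tally contacts from merged_nodups.txt lines.

    Assumes the long format: str1 chr1 pos1 frag1 str2 chr2 pos2 frag2
    mapq1 cigar1 seq1 mapq2 cigar2 seq2 name1 name2.
    """
    stats = {"intra": 0, "inter": 0, "low_mapq": 0}
    for line in lines:
        f = line.split()
        if len(f) < 12:
            continue  # not the long format assumed here
        chr1, chr2 = f[1], f[5]
        mapq1, mapq2 = int(f[8]), int(f[11])
        if min(mapq1, mapq2) < min_mapq:
            stats["low_mapq"] += 1
        elif chr1 == chr2:
            stats["intra"] += 1
        else:
            stats["inter"] += 1
    return stats
```

Because the function consumes any iterable of lines, it can stream the (often very large) file directly: `with open("aligned/merged_nodups.txt") as fh: print(contact_summary(fh))`.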

Part 2: Scaffolding with the 3D-DNA Pipeline

With the contact data from Juicer, you can now scaffold your assembly.

Setup

- Create a new working directory for 3D-DNA (e.g., 3D_DNA/).

- Create symbolic links to the two essential input files: the draft assembly FASTA and the merged_nodups.txt file produced by Juicer.

Execute 3D-DNA

3D-DNA Output and Manual Curation

- The pipeline produces `Genome.hic` and `Genome.assembly` files. It is highly recommended to load these into Juicebox Assembly Tools for manual review and correction [2]. This visual curation step often significantly improves the final assembly quality.
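To inspect a 3D-DNA result programmatically before or after curation, the `.assembly` file can be parsed into ordered, oriented scaffolds. This sketch assumes the plain-text layout used by Juicebox Assembly Tools: header lines of the form `>name index length`, followed by one line per scaffold that lists signed contig indices, where a minus sign marks a reverse-complemented contig. The function name is hypothetical.

```python
def parse_assembly(lines):
    """Parse .assembly text into (scaffolds, contig_lengths).

    Each scaffold is a list of (contig_name, orientation) with orientation '+' or '-'.
    """
    names, lengths, scaffolds = {}, {}, []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith(">"):
            # Header line: >contig_name index length
            name, index, length = line[1:].split()
            names[int(index)] = name
            lengths[name] = int(length)
        else:
            # Scaffold line: signed contig indices; negative means reverse orientation
            scaffolds.append([(names[abs(int(tok))], "-" if int(tok) < 0 else "+")
                              for tok in line.split()])
    return scaffolds, lengths

example = """>ctg1 1 5000
>ctg2 2 3000
>ctg3 3 1200
1 -2
3
"""
scaffolds, sizes = parse_assembly(example.splitlines())
for i, scaffold in enumerate(scaffolds, start=1):
    total = sum(sizes[name] for name, _ in scaffold)
    layout = " ".join(name + strand for name, strand in scaffold)
    print(f"scaffold {i}: {layout} ({total} bp, gaps excluded)")
```

Comparing this parsed layout before and after a Juicebox session is a simple way to list which joins or inversions were changed by hand.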

Troubleshooting Guides and FAQs

This section addresses specific, common problems encountered when using Juicer and 3D-DNA.

Frequently Asked Questions (FAQs)

Q1: My reference genome is from a different genotype than my Hi-C sample. Can Juicer and 3D-DNA still be used for scaffolding? Yes. Using Hi-C data from one genotype to scaffold the reference genome of another genotype of the same species is common practice. The high degree of sequence similarity allows the Hi-C reads to map successfully, and the 3D chromatin organization is largely conserved, providing valid scaffolding information [34].

Q2: My restriction enzyme isn't listed in the Juicer script. What should I do?

You can use the generate_site_positions.py script to create a custom restriction site file for your enzyme [34] [32]. Furthermore, if your enzyme is an isoschizomer (an enzyme that recognizes the same sequence) of a default one, you can use the default file. For example, since Sau3AI and MboI both recognize "GATC", you can use the MboI parameters and restriction site file without modification [34].
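The idea behind a restriction site file can be sketched in a few lines: scan each contig for the enzyme motif and record where it occurs. This is not `generate_site_positions.py` itself. The line layout (contig name, site positions, then contig length) and the 1-based start convention are assumptions, so compare against that script's output before using a file produced this way. The function name is hypothetical.

```python
import re

def restriction_site_line(name, seq, motif="GATC"):
    """Build one restriction-site line: contig name, site positions, then contig length.

    Positions are 1-based starts of each motif match; this convention is an
    assumption, so compare against generate_site_positions.py output before use.
    """
    # Zero-width lookahead also reports overlapping matches (harmless for GATC)
    pattern = re.compile(f"(?={re.escape(motif.upper())})")
    positions = [m.start() + 1 for m in pattern.finditer(seq.upper())]
    return " ".join([name, *map(str, positions), str(len(seq))])

# MboI and Sau3AI share the GATC motif, so one file serves both
print(restriction_site_line("ctg1", "AAGATCTTGATCAA"))  # ctg1 3 9 14
```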

Q3: What is the purpose of the chrom.sizes file and how do I generate it?

The chrom.sizes file is a two-column, tab-delimited file that lists the name and length of every chromosome or contig in your draft assembly. It is required by Juicer for generating the contact map. You can create it from your genome's FASTA index file using the command: cut -f 1,2 references/Genome.fasta.fai > chrom.sizes [2].
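If no `.fai` index exists yet (it is normally produced by `samtools faidx`), the same two-column content can be computed directly from the FASTA. A minimal sketch, assuming the sequence ID is the first whitespace-delimited word of each header, as in the `.fai` convention. The function name is hypothetical.

```python
def chrom_sizes_from_fasta(lines):
    """Return (name, length) pairs for each FASTA record, in file order."""
    sizes, name, length = [], None, 0
    for raw in lines:
        line = raw.strip()
        if line.startswith(">"):
            if name is not None:
                sizes.append((name, length))
            name, length = line[1:].split()[0], 0  # first word of the header is the ID
        elif line:
            length += len(line)  # multi-line sequences are summed
    if name is not None:
        sizes.append((name, length))
    return sizes

fasta = [">ctg1 draft contig", "ACGTACGT", "ACG", ">ctg2", "NNNNAC"]
for name, length in chrom_sizes_from_fasta(fasta):
    print(f"{name}\t{length}")  # tab-delimited, as chrom.sizes expects
```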

Troubleshooting Common Errors

Problem: Deduplication step in Juicer is extremely slow or appears to hang.

- Cause: This is often due to low-complexity or highly repetitive regions (e.g., ribosomal DNA, tandem repeats) in the genome. These regions attract an excessive number of mapped reads, creating a memory and computation bottleneck during deduplication [2].

- Solution: Identify and create a blacklist of these problematic regions. You can use a repeat finder on your genome, then mask these regions before mapping. After running Juicer, you can swap the genome back to the unmasked version before proceeding to 3D-DNA [2].
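Hard-masking replaces repeat bases with `N` instead of deleting them, so contig lengths and coordinates stay identical. That is what makes it safe to swap the unmasked genome back in after Juicer. The sketch assumes the repeat intervals come from an external repeat finder in 0-based, half-open (BED-style) coordinates. The function name is hypothetical.

```python
def hard_mask(seq, intervals):
    """Replace bases in 0-based, half-open [start, end) intervals with 'N'."""
    chars = list(seq)
    for start, end in intervals:
        # Clamp to the sequence so out-of-range intervals cannot extend it
        for i in range(max(0, start), min(len(chars), end)):
            chars[i] = "N"
    return "".join(chars)

# Hypothetical repeat intervals for one contig, e.g. parsed from a repeat finder's BED output
masked = hard_mask("ACGTACGTAC", [(2, 5), (8, 20)])
print(masked)  # ACNNNCGTNN (same length, so every coordinate is unchanged)
```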

Problem: Juicer script does not submit any jobs to my cluster.

- Cause: The Juicer script has not been properly configured for your specific HPC job scheduler (e.g., SLURM, UGER). The built-in queue names and parameters may not match your system's configuration [2].

- Solution: You will need to modify the `juicer.sh` script itself to match the queue names, job submission commands, and parameters used by your cluster. Check the script's internal configuration section.

Problem: 3D-DNA pipeline fails with "gawk: fatal: division by zero attempted" and hic file errors.

- Cause: This error can occur for several reasons. The "division by zero" message itself reflects a poorly handled exit and may not be the root cause. The underlying issue is often that the `.hic` file was not created, which can happen if the scaffolder fails to launch because of a problem with the input `merged_nodups.txt` file [35].

- Solution:

- Check the contents and formatting of your `merged_nodups.txt` file to ensure it is valid and was generated correctly by Juicer.

- Check which round of editing the error occurred in to help isolate the failing stage [35].

- Ensure you are using compatible versions of Juicer and 3D-DNA.
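A quick sanity check of `merged_nodups.txt` can catch empty or truncated files before 3D-DNA is launched. The sketch assumes Juicer's 16-column long format and treats columns 3, 7, 9 and 12 (positions and MAPQ values) as non-negative integers. The function name and the limit on reported lines are hypothetical choices.

```python
def validate_merged_nodups(lines, expected_fields=16, max_report=5):
    """Return (line_number, problem) pairs for records that do not look like
    long-format merged_nodups lines; line 0 means the whole file is empty."""
    problems, n_records = [], 0
    for number, line in enumerate(lines, start=1):
        n_records += 1
        fields = line.split()
        if len(fields) < expected_fields:
            problems.append((number, f"expected at least {expected_fields} fields, found {len(fields)}"))
        elif not all(fields[i].isdigit() for i in (2, 6, 8, 11)):  # pos1, pos2, mapq1, mapq2
            problems.append((number, "non-integer position or MAPQ"))
        if len(problems) >= max_report:
            break  # enough evidence; avoid scanning a huge file
    if n_records == 0:
        problems.append((0, "file is empty"))
    return problems

example_lines = [
    "0 ctg1 100 1 16 ctg2 500 3 60 50M ACGT 60 50M ACGT r1 r1",
    "0 ctg1 100 1 16 ctg2 500 3",
    "0 ctg1 1x0 1 16 ctg2 500 3 60 50M ACGT 60 50M ACGT r3 r3",
]
for number, problem in validate_merged_nodups(example_lines):
    print(f"line {number}: {problem}")
```

For a real run, pass an open file handle instead of the list so that the file is streamed rather than loaded into memory.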

Problem: 3D-DNA pipeline degrades a previously good assembly, introducing misassemblies.

- Cause: The default parameters of 3D-DNA are designed to be aggressive in misjoin correction, which can sometimes break correctly assembled regions, especially if the initial assembly is already of high quality (e.g., from a linkage map) [36].

- Solution: Use less aggressive parameters for the editor and polisher steps, adjusting their stringency and resolution settings to make the pipeline more conservative [36].

Frequently Asked Questions (FAQs)

FAQ 1: What is the fundamental promise of using VQE for genome assembly optimization? The Variational Quantum Eigensolver (VQE) is a hybrid quantum-classical algorithm designed to find the minimum eigenvalue of a Hamiltonian. For genome assembly, specific optimization problems, such as scaffolding or resolving haplotypes, can be formulated as Hamiltonian minimization problems. VQE's promise lies in its potential to find high-quality solutions to these complex combinatorial problems, which can be challenging for classical solvers, especially as problem sizes increase [37] [38].
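The hybrid loop can be illustrated without any quantum SDK. In this purely classical sketch, a one-parameter ansatz prepares a state, its energy is computed exactly (hardware would estimate it from measurements), and a coarse parameter scan stands in for the classical optimizer. The 2×2 Hamiltonian is an arbitrary toy chosen because its ground energy is known in closed form; it does not encode a real assembly problem.

```python
import math

# Toy 2x2 Hamiltonian (hypothetical; chosen because its ground energy is known in closed form)
H = [[1.0, 0.5],
     [0.5, -1.0]]

def ry_state(theta):
    """One-parameter ansatz: Ry(theta)|0> = [cos(theta/2), sin(theta/2)]."""
    return [math.cos(theta / 2), math.sin(theta / 2)]

def expectation(h, psi):
    """<psi|h|psi> for a real symmetric matrix h and a real state vector psi."""
    n = len(psi)
    return sum(psi[i] * h[i][j] * psi[j] for i in range(n) for j in range(n))

# Classical outer loop: a coarse parameter scan stands in for a real optimizer
thetas = [2 * math.pi * k / 1000 for k in range(1000)]
best_theta = min(thetas, key=lambda t: expectation(H, ry_state(t)))
best_energy = expectation(H, ry_state(best_theta))

# For [[a, b], [b, -a]] the lowest eigenvalue is -sqrt(a^2 + b^2)
exact = -math.hypot(H[0][0], H[0][1])
print(f"variational estimate {best_energy:.4f}, exact ground energy {exact:.4f}")
```

Every trial energy lies at or above the exact minimum eigenvalue (the variational principle), which is why minimizing over the ansatz parameters approaches the ground state.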

FAQ 2: My VQE energy convergence is slow or has stalled. What could be the cause? Slow convergence is a common challenge, often attributable to the classical optimizer or the parameterized quantum circuit (PQC) itself. Gradient-free optimizers like Nelder-Mead or COBYLA are robust but can require many iterations [39]. For circuits with many parameters, gradient-based optimizers like Adam may offer faster convergence [39]. Furthermore, the phenomenon of "barren plateaus," where gradients vanish exponentially with system size, can severely impede convergence. This is often linked to poorly chosen or random ansätze [37].
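Gradient-based optimizers need gradients of the measured energy. For rotation gates generated by a Pauli operator, the parameter-shift rule gives the exact gradient from two extra energy evaluations at θ ± π/2. The sketch below applies it to a toy single-qubit problem and uses plain gradient descent as a simple stand-in for Adam. The Hamiltonian, learning rate and tolerance are hypothetical choices.

```python
import math

A, B = 1.0, 0.5  # toy Hamiltonian H = [[A, B], [B, -A]] (hypothetical)

def energy(theta):
    """<psi|H|psi> for psi = Ry(theta)|0>; equals A*cos(theta) + B*sin(theta)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return A * c * c + 2 * B * c * s - A * s * s

def parameter_shift_grad(theta):
    """Exact dE/dtheta for a Pauli-generated rotation: two shifted energy evaluations."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2

def gradient_descent(theta, lr=0.5, tol=1e-6, max_iter=200):
    """Plain gradient descent (a simple stand-in for Adam); stops once |gradient| < tol."""
    for iteration in range(1, max_iter + 1):
        grad = parameter_shift_grad(theta)
        if abs(grad) < tol:
            return theta, iteration
        theta -= lr * grad
    return theta, max_iter

theta_opt, iters = gradient_descent(theta=0.0)
print(f"E = {energy(theta_opt):.6f} after {iters} iterations "
      f"(exact ground energy {-math.hypot(A, B):.6f})")
```

On hardware each of the two shifted energies is itself a noisy shot-based estimate, so the cost of one gradient grows with both the number of parameters and the shot count.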

FAQ 3: How do I choose an ansatz for a genome assembly-related problem? The choice of ansatz is critical. The table below compares the two primary categories [37]:

| Ansatz Class | Key Features | Typical Limitations | Suitability for Assembly |
|---|---|---|---|
| Hardware-Efficient | Uses native gate sets for low depth on specific hardware. | May break physical symmetries; prone to barren plateaus. | Good for initial prototyping on NISQ devices. |
| Problem-Inspired | Incorporates constraints of the optimization problem. | Can be harder to design; may have greater circuit depth. | Highly recommended for assembly; restricts search to feasible solutions [40]. |

For genome assembly, a problem-specific ansatz is often beneficial. For example, if a constraint requires exactly one contig to be placed in a specific position (akin to a one-hot encoding), the ansatz can be designed to explore only the subspace of quantum states that satisfy this constraint, such as W states, significantly improving efficiency [40].
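The W-state idea can be checked numerically. An n-qubit W state puts equal amplitude on the n basis states with exactly one qubit set, which is exactly the one-hot subspace. The sketch below builds its amplitudes and shows that a one-hot penalty of the form (Σx − 1)² has zero expectation in that state, so the optimizer never pays for infeasible placements. The choice of four candidate positions is hypothetical.

```python
import math
from itertools import product

def w_state(n):
    """Amplitudes of the n-qubit W state: equal weight on every basis state with exactly one 1."""
    amp = 1 / math.sqrt(n)
    return {bits: (amp if sum(bits) == 1 else 0.0) for bits in product((0, 1), repeat=n)}

def one_hot_penalty(bits):
    """Constraint penalty (sum(x) - 1)^2: zero exactly when one slot is occupied."""
    return (sum(bits) - 1) ** 2

n = 4  # e.g. four candidate positions for one contig (hypothetical)
psi = w_state(n)
norm = sum(a * a for a in psi.values())
expected_penalty = sum(a * a * one_hot_penalty(bits) for bits, a in psi.items())
print(f"norm={norm:.6f}, <penalty>={expected_penalty}")
```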

FAQ 4: What are the key hardware limitations for running VQE on today's quantum devices? Current Noisy Intermediate-Scale Quantum (NISQ) devices face several key limitations:

- Qubit Count and Connectivity: Problems are limited by the number of available qubits and their connectivity.

- Gate Fidelity and Coherence Time: Errors in gate operations and short qubit coherence times restrict the depth of circuits that can be reliably executed.

- Measurement Overhead: Estimating expectation values requires a large number of circuit repetitions ("shots"), which is time-consuming [41] [37].
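The measurement overhead comes from estimating each expectation value by averaging ±1 outcomes. The sketch below simulates shots for a single ⟨Z⟩ term with a hypothetical outcome probability. The standard error is √((1 − ⟨Z⟩²)/N), so halving the error costs about four times as many shots, and a full Hamiltonian multiplies this by the number of measured terms.

```python
import math
import random

def estimate_z(p_one, shots, rng):
    """Estimate <Z> from simulated shots: outcome 0 counts as +1, outcome 1 as -1."""
    ones = sum(1 for _ in range(shots) if rng.random() < p_one)
    return (shots - 2 * ones) / shots

p_one = 0.3                 # hypothetical probability of measuring |1>
exact = 1 - 2 * p_one       # exact <Z> = P(0) - P(1)
rng = random.Random(7)      # fixed seed for reproducibility
for shots in (100, 1_000, 10_000, 100_000):
    est = estimate_z(p_one, shots, rng)
    std_err = math.sqrt((1 - exact ** 2) / shots)  # standard error of a mean of +/-1 outcomes
    print(f"{shots:>7} shots: estimate {est:+.4f}, exact {exact:+.4f}, expected error ~{std_err:.4f}")
```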

Troubleshooting Guides

Problem 1: Poor Convergence or Stalling in the VQE Optimization Loop

Symptoms: The energy expectation value does not decrease significantly over multiple iterations, oscillates wildly, or converges to a value far above the expected ground state energy.

Diagnosis and Resolution:

Review Classical Optimizer Selection:

- Diagnosis: The choice of optimizer is problem-dependent. Gradient-free methods can be slow for high-dimensional parameter spaces [39].

- Resolution: Benchmark different optimizers. Start with COBYLA or Nelder-Mead. For circuits with many parameters (e.g., >50), test gradient-based methods like Adam if your framework supports automatic differentiation [39]. Advanced strategies like Bayesian optimization or homotopy continuation can also help escape local minima [37].

Check Initial Parameter Values:

- Diagnosis: Random initialization can place the algorithm in a flat region of the landscape (a barren plateau) [37].

- Resolution: Instead of random initialization, use strategies like:

- Problem-Informed Guesses: Use classical solutions to inform initial parameters.

- Transfer Learning: Use parameters optimized for a smaller, related problem.

- Heuristic Strategies: Implement layer-by-layer training or other initialization heuristics.

Mitigate Barren Plateaus:

- Diagnosis: The gradient variance is exponentially small, making it impossible to find a descent direction.

- Resolution: This is a core research challenge. Mitigation strategies include using problem-specific ansätze that naturally limit the explored Hilbert space [40], incorporating symmetries into the cost function [37], and employing local rather than global cost functions.
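The "Problem-Informed Guesses" strategy has a simple form for a layer of single-qubit Ry rotations. Setting θᵢ = π·bᵢ reproduces a classical bitstring b exactly, so a heuristic assembly layout becomes the starting state. A computational basis state is an eigenstate of a diagonal (classical) cost Hamiltonian, so its gradient is zero. For that reason, the sketch allows a small hypothetical `jitter` to move the start off that stationary point. The ansatz and function names are illustrative assumptions.

```python
import math
from itertools import product

def product_ry_state(thetas):
    """State after Ry(theta_i) on each qubit starting from |0...0>; returns {bitstring: amplitude}."""
    state = {}
    for bits in product((0, 1), repeat=len(thetas)):
        amp = 1.0
        for b, t in zip(bits, thetas):
            amp *= math.sin(t / 2) if b else math.cos(t / 2)
        state[bits] = amp
    return state

def warm_start(classical_bits, jitter=0.0):
    """theta_i = pi * b_i reproduces the classical solution; jitter nudges it off a stationary point."""
    return [math.pi * b + jitter for b in classical_bits]

heuristic = (1, 0, 1)  # e.g. a layout from a classical heuristic (hypothetical)
state = product_ry_state(warm_start(heuristic))
best = max(state, key=lambda k: abs(state[k]))
print(best, round(abs(state[best]), 6))  # (1, 0, 1) 1.0
```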

Problem 2: Formulating a Genome Assembly Problem as a VQE-Compatible Hamiltonian

Symptoms: Difficulty in mapping a concrete assembly task (e.g., scaffolding, haplotype phasing) onto a qubit representation and a corresponding Hamiltonian whose ground state encodes the solution.

Diagnosis and Resolution:

Define the Qubit Encoding:

- Diagnosis: The mapping from biological data to qubits is incorrect or inefficient.

- Resolution: Clearly define what each qubit represents. For example, in a scaffolding problem, a binary variable (and thus a qubit) could indicate whether a specific contig connection exists. Representing the four DNA bases (A, T, G, C) requires at least two qubits per base, since two qubits provide 2² = 4 basis states [42].

Construct the Hamiltonian with Penalty Terms:

- Diagnosis: The Hamiltonian's ground state does not correspond to a valid biological solution because problem constraints are not enforced.

- Resolution: Incorporate constraints (e.g., a contig can only connect to two others, or a haplotype must be self-consistent) as penalty terms in the Hamiltonian. The general form is:

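$$H_{\text{problem}} = H_{\text{objective}} + \sum_i \mu_i \left(C_i - t_i\right)^2$$

where $H_{\text{objective}}$ encodes the optimization goal (e.g., the negated overlap score, so that maximizing overlap lowers the energy), $C_i$ is the expression for constraint $i$, $t_i$ is its target value, and the weights $\mu_i > 0$ set how strongly each constraint is enforced [37] [40].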
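The penalty construction can be tested classically on a tiny instance before any circuit is built. The sketch below places three contigs into three slots with binary variables x[(c, p)]. The objective is the negated link score between contigs in adjacent slots, and one-hot penalties (target value 1) apply to every contig and every slot. Brute force over all 2⁹ assignments stands in for the quantum ground-state search. The link scores and the weight `MU = 10` are hypothetical. Substituting x = (1 − Z)/2 for each variable would turn this cost into a diagonal Ising Hamiltonian.

```python
from itertools import product

contigs = ["A", "B", "C"]
n = len(contigs)
# Hypothetical Hi-C link scores between contigs (symmetric)
w = {("A", "B"): 5, ("B", "C"): 4, ("A", "C"): 1}

def link(c, d):
    return w.get((c, d), w.get((d, c), 0))

MU = 10  # penalty weight; must outweigh any gain from breaking a constraint

def energy(x):
    """x[(c, p)] = 1 if contig c sits in slot p. Returns H_objective + penalty terms."""
    # H_objective: negated score of links between contigs in adjacent slots
    objective = -sum(link(c, d) * x[(c, p)] * x[(d, p + 1)]
                     for p in range(n - 1) for c in contigs for d in contigs if c != d)
    # Penalties (C_i - 1)^2: each contig in exactly one slot, each slot holds exactly one contig
    penalty = sum((sum(x[(c, p)] for p in range(n)) - 1) ** 2 for c in contigs)
    penalty += sum((sum(x[(c, p)] for c in contigs) - 1) ** 2 for p in range(n))
    return objective + MU * penalty

keys = [(c, p) for c in contigs for p in range(n)]
# Exhaustive search over all 2^9 assignments stands in for the quantum ground-state search
states = [dict(zip(keys, bits)) for bits in product((0, 1), repeat=len(keys))]
ground = min(states, key=energy)
order = [c for p in range(n) for c in contigs if ground[(c, p)]]
print(order, energy(ground))
```

If `MU` is set too small, the minimum can fall on an assignment that double-books a slot. Checking the brute-force ground state this way is a cheap guard before moving to VQE.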

Problem 3: High Measurement Error and Noise on Real Hardware

Symptoms: The computed energy expectation is noisy and biased, leading to poor optimization performance, even for small problems that fit on current devices.

Diagnosis and Resolution:

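Increase Shot Count:

- Diagnosis: Too few shots leave a large statistical (shot-noise) error in each energy estimate.

- Resolution: Increase the number of shots per expectation value. The standard error shrinks roughly as 1/√N for N shots, so halving the error requires about four times as many shots.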

Employ Error Mitigation Techniques:

- Diagnosis: Hardware noise (e.g., decoherence, gate infidelity) systematically biases measurements.

- Resolution: Implement basic error mitigation strategies:

- Readout Error Mitigation: Characterize the measurement error matrix and apply its inverse to the results.

- Zero-Noise Extrapolation (ZNE): Intentionally increase the circuit noise level and extrapolate back to the zero-noise result.

- Use Denoising Algorithms: Explore advanced methods like quantum autoencoder-based variational denoising to post-process noisy VQE outputs [37].
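The two basic mitigation techniques above reduce to small linear-algebra steps. The sketch below inverts a single-qubit readout confusion matrix (entry [i][j] is the probability of reading i when j was prepared, characterized by preparing |0⟩ and |1⟩). It then fits a straight line through expectation values measured at amplified noise levels and evaluates the line at zero noise. All numbers are hypothetical. Linear extrapolation is only one choice (Richardson or exponential fits are common alternatives), and how the noise is amplified is hardware-specific and not shown.

```python
def mitigate_readout(p_meas, confusion):
    """Invert a 2x2 readout confusion matrix; confusion[i][j] = P(read i | prepared j)."""
    (a, b), (c, d) = confusion
    det = a * d - b * c
    inv = [[d / det, -b / det], [-c / det, a / det]]
    p = [inv[0][0] * p_meas[0] + inv[0][1] * p_meas[1],
         inv[1][0] * p_meas[0] + inv[1][1] * p_meas[1]]
    # Clip small negative values caused by statistical noise, then renormalize
    p = [max(0.0, v) for v in p]
    total = sum(p)
    return [v / total for v in p]

def zero_noise_extrapolate(scales, values):
    """Least-squares line through (noise scale, expectation value); return its value at scale 0."""
    n = len(scales)
    mx, my = sum(scales) / n, sum(values) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(scales, values))
             / sum((x - mx) ** 2 for x in scales))
    return my - slope * mx

confusion = [[0.95, 0.10],   # hypothetical: P(read 0 | 0), P(read 0 | 1)
             [0.05, 0.90]]   #               P(read 1 | 0), P(read 1 | 1)
print(mitigate_readout([0.78, 0.22], confusion))           # close to [0.8, 0.2]
print(zero_noise_extrapolate([1, 2, 3], [-0.85, -0.70, -0.55]))  # close to -1.0
```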

Experimental Protocols & Data Presentation

Protocol: Benchmarking VQE for a Simplified Scaffolding Problem

Objective: To compare the performance of different VQE ansätze and optimizers on a simplified genome scaffolding Hamiltonian.

Methodology:

- Problem Definition: Define a small scaffolding graph with 4 contigs and known optimal connections. Formulate a Hamiltonian H_scaffold whose ground state encodes the optimal layout.

- Ansatz Preparation: Prepare two types of parameterized quantum circuits (PQCs): a hardware-efficient ansatz (HEA) and a problem-specific ansatz (PSA).

- Optimizer Setup: Configure two classical optimizers: COBYLA (gradient-free) and Adam (gradient-based).

- Execution: Run the VQE algorithm for each (ansatz, optimizer) combination on a quantum simulator. Record the final energy, number of iterations to converge, and total computation time.

Expected Outcome: The problem-specific ansatz (PSA) should converge faster and to a more accurate ground state energy than the hardware-efficient ansatz (HEA), demonstrating the value of incorporating domain knowledge.

Quantitative Data: Optimizer Performance Comparison

The following table summarizes hypothetical results from the benchmarking protocol above, illustrating typical performance metrics.

Table 1: VQE Optimizer Performance on a Model Scaffolding Hamiltonian (Simulated)

| Ansatz Type | Classical Optimizer | Final Energy | Target Energy | Iterations to Converge | Converged? |
|---|---|---|---|---|---|
| Hardware-Efficient | COBYLA | -1.12 | -1.21 | 73 | Yes |
| Hardware-Efficient | Adam | -1.09 | -1.21 | 45 | No |
| Problem-Specific | COBYLA | -1.20 | -1.21 | 28 | Yes |
| Problem-Specific | Adam | -1.21 | -1.21 | 18 | Yes |

Visualization of Workflows and Relationships

VQE for Genome Assembly Workflow

Ansatz Selection Logic

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Quantum-Enhanced Genome Assembly Research

| Item | Function | Example / Note |
|---|---|---|
| Quantum SDKs & Simulators | Provides the programming environment to construct and simulate quantum circuits. | PennyLane [39], Qristal [41], Qiskit. |